In our previous post, we noted impressive performance using GPT-4V for classification. In a separate post, we noted that GPT-4V cannot currently perform object detection, a task in which the model must report the exact position of an object in an image.

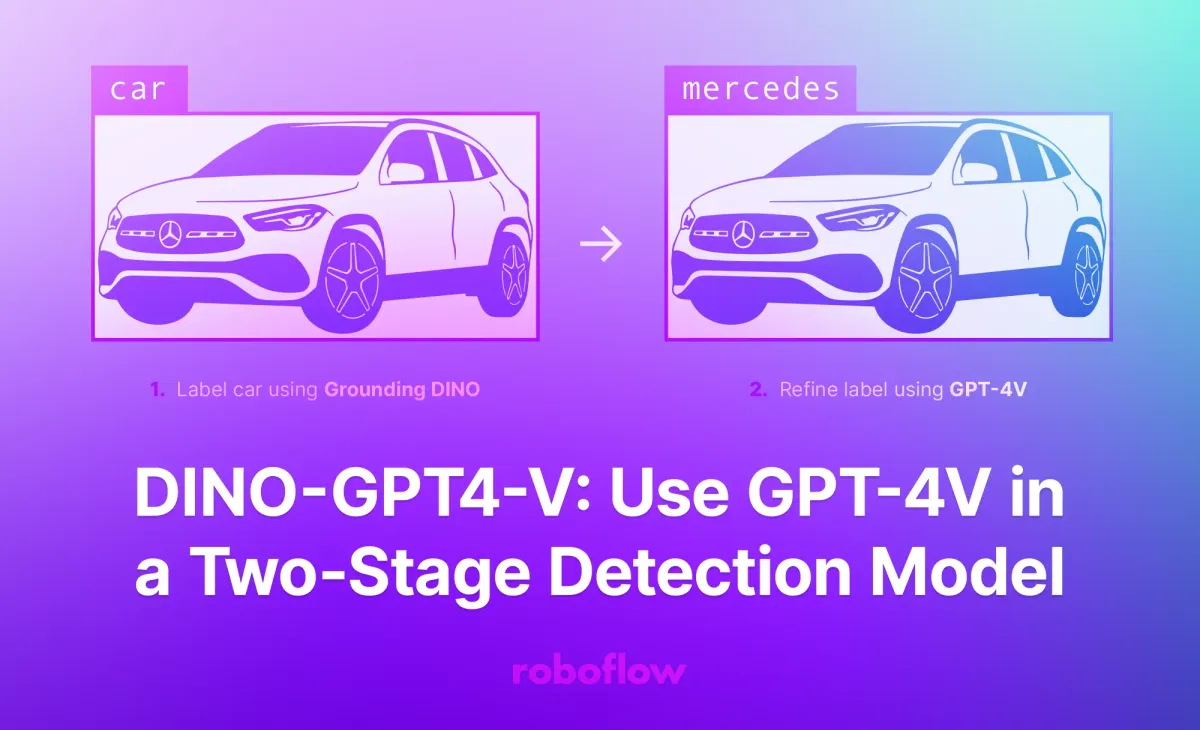

With that said, we can use another zero-shot model that can identify general objects, then use GPT-4V to refine the predictions. For example, you could use a zero-shot model to identify all cars in an image, then use GPT-4V to identify the make of the car in each region.

With this approach, we can identify specific car brands without training a model. Current zero-shot object detection models struggle with this task.

In this guide, we are going to show how to use Grounding DINO, a popular zero-shot object detection model, to identify objects. We will use the example of cars. Then, we will use GPT-4V to identify the make of each car in the image.

By the end of this tutorial, we will be able to determine:

- Where a car is in an image; and

- What make the car is.

Here is an example of what you can make with DINO-GPT4V:

Without further ado, let’s get started!

Step #1: Install Autodistill and Configure GPT-4V API

We are going to use Autodistill to build a two-stage detection model. Autodistill is an ecosystem that lets you use foundation models like CLIP and Grounding DINO to label data for use in training a fine-tuned model.

Autodistill has connectors for Grounding DINO and GPT-4V, the two models we want to use in our zero-shot detection system.

We can build our two-stage detection system in a few lines of code.

To get started, first install Autodistill and the connectors for Grounding DINO and GPT-4V:

```bash
pip install autodistill autodistill-grounding-dino autodistill-gpt-4v
```

If you do not already have an OpenAI API key, create an OpenAI account, then issue an API key for your account. Note that OpenAI charges for API requests. Read the OpenAI pricing page for more information.

In the next script, we will pass the OpenAI API key to the GPT-4V Autodistill module through its api_key argument.
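Hardcoding the key in a script makes it easy to leak, for example by committing the file to version control. One option, sketched below under the assumption that you have exported the key as an environment variable (the name OPENAI_API_KEY is a convention we chose here, not something Autodistill requires), is to read it at runtime with a small helper:

```python
import os


def get_openai_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Read the OpenAI API key from an environment variable.

    OPENAI_API_KEY is a common convention, not an Autodistill requirement.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable first.")
    return key


# Hypothetical usage: in the script from Step #2, replace the
# "YOUR_OPENAI_API_KEY" placeholder with get_openai_api_key().
```

Failing loudly when the variable is missing surfaces a configuration mistake before any requests are sent.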

Step #2: Create a Comparison Script

You can use any of the object detection models supported by Autodistill to build a two-stage detection model using this guide. For instance, Autodistill supports Grounding DINO, DETIC, CoDet, OWLv2, and other models. See a list of supported models.

For this guide, we will use Grounding DINO, which shows impressive performance for zero-shot object detection.

Next, create a new file and add the following code:

```python
from autodistill_gpt_4v import GPT4V
from autodistill.detection import CaptionOntology
from autodistill_grounding_dino import GroundingDINO
from autodistill.utils import plot
from autodistill.core.custom_detection_model import CustomDetectionModel

import cv2

# Classes GPT-4V chooses between for each detected region
classes = ["mercedes", "toyota"]

DINOGPT = CustomDetectionModel(
    # Stage 1: Grounding DINO finds every "car" in the image
    detection_model=GroundingDINO(
        CaptionOntology({"car": "car"})
    ),
    # Stage 2: GPT-4V labels each detected region with one of `classes`
    classification_model=GPT4V(
        CaptionOntology({k: k for k in classes}),
        api_key="YOUR_OPENAI_API_KEY",
    ),
)

IMAGE = "mercedes.jpeg"

results = DINOGPT.predict(IMAGE)

# Draw the labeled bounding boxes on the image
plot(
    image=cv2.imread(IMAGE),
    detections=results,
    classes=["mercedes", "toyota", "car"],
)
```

In this code, we create a new CustomDetectionModel class instance. This class allows us to specify a detection model to detect objects, then a classification model to classify the objects in the image. The classification model is run on each object detection.

The detection model is tasked with detecting cars, which Grounding DINO can do, and returns a bounding box for each car. We then pass each bounding box region to GPT-4V, which classifies it using its own set of classes. We have specified “mercedes” and “toyota” as the classes. GPT-4V returns the string “None” if it is unsure about what is in a region.

With this setup, we can:

- Localize cars; and

- Assign specific labels to each car.

Neither Grounding DINO nor GPT-4V can do this on its own, but together they can.

We then plot the results.

Let’s run the code above on the following image:

Our code returns the following:

Our model successfully identified the location of the car and the car brand.
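To make the two-stage flow concrete, here is a minimal, library-free sketch of what happens for each image: a detector proposes boxes, each box is cropped out, and a classifier labels the crop. The functions `fake_detect` and `fake_classify` are hypothetical stand-ins for Grounding DINO and GPT-4V, the toy image is a plain list of pixel rows, and dropping regions labeled "None" is this sketch's own choice; Autodistill's CustomDetectionModel may handle unlabeled regions differently.

```python
from typing import Callable, List, Optional, Sequence, Tuple

# A box is (x_min, y_min, x_max, y_max) in pixels, the "xyxy" layout
# commonly used by detection libraries.
Box = Tuple[int, int, int, int]
# Toy grayscale image: a list of pixel rows (y first, then x).
Image = List[List[int]]


def crop(image: Image, box: Box) -> Image:
    """Return the pixels inside `box`."""
    x_min, y_min, x_max, y_max = box
    return [row[x_min:x_max] for row in image[y_min:y_max]]


def two_stage_predict(
    image: Image,
    detect: Callable[[Image], Sequence[Box]],
    classify: Callable[[Image], Optional[str]],
) -> List[Tuple[Box, str]]:
    """Detect generic objects, then classify each cropped region.

    Regions the classifier cannot label (None or the string "None")
    are dropped; that rule is an assumption of this sketch.
    """
    labeled = []
    for box in detect(image):
        label = classify(crop(image, box))
        if label is None or label == "None":
            continue
        labeled.append((box, label))
    return labeled


# Hypothetical stand-ins: the "detector" returns two fixed boxes and the
# "classifier" labels a crop by its mean brightness.
toy_image = [[0] * 4 + [9] * 4 for _ in range(4)]


def fake_detect(img: Image) -> List[Box]:
    return [(0, 0, 4, 4), (4, 0, 8, 4)]


def fake_classify(region: Image) -> str:
    pixels = [p for row in region for p in row]
    return "mercedes" if sum(pixels) / len(pixels) > 5 else "None"


print(two_stage_predict(toy_image, fake_detect, fake_classify))
# [((4, 0, 8, 4), 'mercedes')]
```

Keeping the detector and classifier as interchangeable callables mirrors why the approach works with any Autodistill detection model: the first stage only needs to return boxes, and the second stage only ever sees one cropped region at a time.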

Next Steps

You can use the model combination out of the box, or you can use Autodistill to label a folder of images and train a fine-tuned object detection model. With DINO-GPT4V, you can substantially reduce the time spent labeling data to train a model across a range of tasks.

To auto-label a dataset, you can use the following code:

```python
DINOGPT.label("./images", extension=".jpeg")
```

This code will label all images with the “.jpeg” extension in the “images” directory.
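Because the classification model runs on each detection and OpenAI charges per request, it can help to check what a labeling run will cover before starting it. The helpers below are hypothetical and not part of Autodistill. They assume files are selected by a simple, non-recursive, case-sensitive suffix match (under that assumption, photo.JPEG or photo.jpg would not be labeled with extension=".jpeg"), and the average number of cars per image is a figure you supply.

```python
from pathlib import Path
from typing import List


def matching_images(folder: str, extension: str = ".jpeg") -> List[Path]:
    """List files directly inside `folder` whose names end with `extension`.

    Assumption: a non-recursive, case-sensitive suffix match. Autodistill's
    own file selection may differ, so treat this only as a preview.
    """
    return sorted(
        p for p in Path(folder).iterdir()
        if p.is_file() and p.name.endswith(extension)
    )


def estimate_gpt4v_requests(num_images: int, avg_boxes_per_image: float) -> int:
    """Estimate API calls: GPT-4V runs once per detected box, not once per image."""
    return round(num_images * avg_boxes_per_image)


if Path("./images").is_dir():
    images = matching_images("./images", ".jpeg")
    # The average of 2 cars per image is a placeholder; use your own estimate.
    print(f"{len(images)} images, about "
          f"{estimate_gpt4v_requests(len(images), 2)} GPT-4V requests")
```

A folder of 100 images averaging 3 cars each would mean roughly 300 GPT-4V requests, not 100.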

You can then train a model, such as YOLOv8, on the labeled images in a few lines of code. Read our Autodistill YOLOv8 guide to learn how to train a model.

Training your own model lets you run it on the edge, without an internet connection. You can also upload supported models (e.g. YOLOv8) to Roboflow for deployment on a scalable hosted API, or deploy models on device using the open source Roboflow Inference Server.

If you experiment with DINO-GPT4V for training a model, let us know! Tag @Roboflow on X or LinkedIn to share what you have made with us.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Nov 7, 2023). DINO-GPT4-V: Use GPT-4V in a Two-Stage Detection Model. Roboflow Blog: https://blog.roboflow.com/dino-gpt-4v/
