Introduction

Cornhole is a game I like to play with my family during summer get-togethers. However, keeping track of the score can be hard, which leads to arguments and, occasionally, long-term family feuds. By building a computer vision model to track the score, I’m hoping to finally put the cheating allegations leveled against me to rest!

My goal was to create a vision-based system that would:

- Identify bags and their locations.

- Apply game logic to deduce the score.

- Determine who is in the lead in real time.

Systems like this can also help automate statistics capture, power real-time scoring for large tournaments, generate highlight reels automatically, or make it easier to find specific moments in a game.

Creating the Vision Model

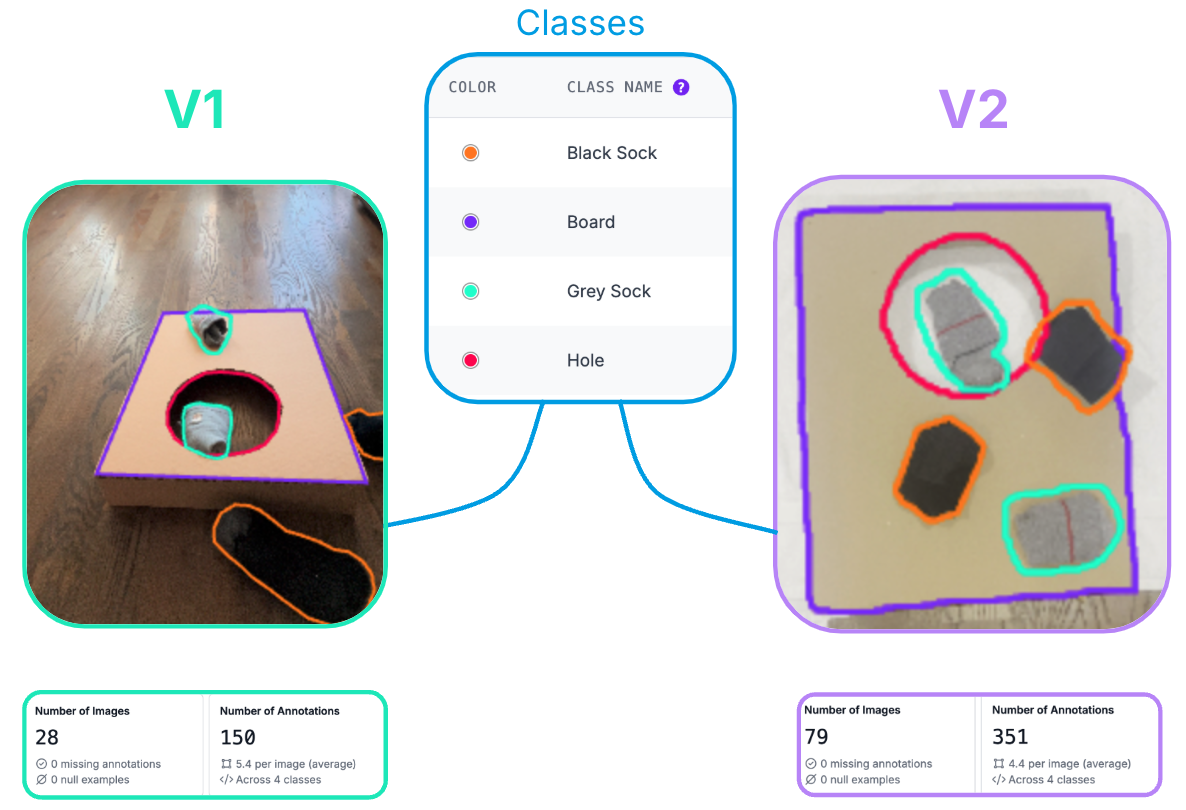

Living in a small NYC apartment, I had to get creative. I built a mock cornhole setup using cardboard and socks as beanbags. For the first version of my model, I positioned a camera behind the board and recorded a few videos of myself throwing socks onto the board. These videos were segmented into frames and uploaded to Roboflow, where I labeled the socks, board, and hole in each frame.
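Splitting the recordings into frames is a small scripting step. One way to do it with OpenCV is sketched below, assuming `opencv-python` is installed; the function names, the output file pattern, and the 2 FPS default sampling rate are hypothetical choices, not the settings used for this project. Keeping only a few frames per second avoids uploading many near-identical images for labeling.

```python
from pathlib import Path


def sample_step(src_fps: float, target_fps: float) -> int:
    """Keep every Nth frame so that roughly target_fps frames per second survive."""
    if src_fps <= 0 or target_fps <= 0:
        raise ValueError("frame rates must be positive")
    return max(1, round(src_fps / target_fps))


def extract_frames(video_path: str, out_dir: str, target_fps: float = 2.0) -> int:
    """Write sampled frames as numbered JPEGs and return how many were written."""
    import cv2  # lazy import: sample_step() works without OpenCV installed

    cap = cv2.VideoCapture(video_path)
    if not cap.isOpened():
        raise FileNotFoundError(video_path)
    src_fps = cap.get(cv2.CAP_PROP_FPS) or 30.0  # assume 30 FPS if metadata is missing
    step = sample_step(src_fps, target_fps)

    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    index = written = 0
    while True:
        ok, frame = cap.read()
        if not ok:  # end of video (or a read error)
            break
        if index % step == 0:
            cv2.imwrite(str(out / f"frame_{written:05d}.jpg"), frame)
            written += 1
        index += 1
    cap.release()
    return written
```

For example, `extract_frames("throws.mp4", "frames_to_label")` (hypothetical file names) would keep about two frames per second of footage, ready to upload for labeling.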

The resulting model performed well at detecting each object. However, applying the model in a real-world workflow revealed some challenges:

- Perspective Distortion: The camera’s angle made it difficult to produce a rectangular crop around the board.

- Lighting Issues: Poor lighting and a small training dataset made it hard to detect socks during live video inference.

To address these issues, I:

- Repositioned the Camera: Placed it directly above the board to eliminate perspective distortion.

- Improved Contrast: Added a white towel beneath the board for better contrast.

- Increased Data: Collected twice as many images to enhance detection accuracy.
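With the camera directly overhead, the board shows up as a roughly axis-aligned rectangle, so cropping to it reduces to slicing the image array. The sketch below assumes NumPy and boxes in center format `(x, y, width, height)`, which is how Roboflow object-detection predictions report boxes; `crop_to_box` and its `pad` parameter are hypothetical names, and this only illustrates the idea rather than reproducing the crop step the workflow uses.

```python
import numpy as np

Box = tuple[float, float, float, float]  # (x_center, y_center, width, height) in pixels


def crop_to_box(frame: np.ndarray, box: Box, pad: int = 0) -> np.ndarray:
    """Return the part of an H x W (x C) image inside a center-format box.

    The box is grown by `pad` pixels on every side and clamped to the image edges.
    """
    height, width = frame.shape[:2]
    xc, yc, bw, bh = box
    x0 = max(0, int(xc - bw / 2) - pad)
    y0 = max(0, int(yc - bh / 2) - pad)
    x1 = min(width, int(xc + bw / 2) + pad)
    y1 = min(height, int(yc + bh / 2) + pad)
    return frame[y0:y1, x0:x1]
```

Because NumPy slicing returns a view, the crop is cheap; call `.copy()` on the result if it will be modified independently of the original frame. With an angled camera, the same slice would include background around a trapezoid-shaped board, which is the problem the overhead placement avoids.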

These adjustments significantly improved the model’s performance within the workflow!

Building a Workflow

With a reliable model in hand, the next step was to create a workflow that could:

- Detect the board and hole.

- Identify socks in each zone.

- Transform these detections into scores.
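Once bags are counted per zone, turning detections into scores is plain arithmetic. The post does not spell out the exact rules it applied, so the sketch below assumes standard cornhole scoring: 3 points for a bag in the hole, 1 point for a bag on the board, and cancellation scoring, where only the player with more points in a round scores, and only the difference. It also assumes bags can be attributed to each player (for example, by sock color); `RoundCounts`, `score_round`, and `leader` are hypothetical names.

```python
from dataclasses import dataclass

HOLE_POINTS = 3   # standard cornhole value for a bag through the hole (assumed)
BOARD_POINTS = 1  # standard value for a bag resting on the board (assumed)


@dataclass
class RoundCounts:
    """One player's bags at the end of a round (hypothetical input format)."""
    in_hole: int
    on_board: int

    def points(self) -> int:
        return HOLE_POINTS * self.in_hole + BOARD_POINTS * self.on_board


def score_round(a: RoundCounts, b: RoundCounts) -> tuple[int, int]:
    """Cancellation scoring: only the higher side scores, and only the difference."""
    diff = a.points() - b.points()
    return (diff, 0) if diff > 0 else (0, -diff)


def leader(total_a: int, total_b: int) -> str:
    """Name the player in the lead given running totals."""
    if total_a == total_b:
        return "tied"
    return "A" if total_a > total_b else "B"
```

For example, if player A has one bag in the hole and two on the board (5 points) while player B has three on the board (3 points), A scores 2 for the round and B scores nothing.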

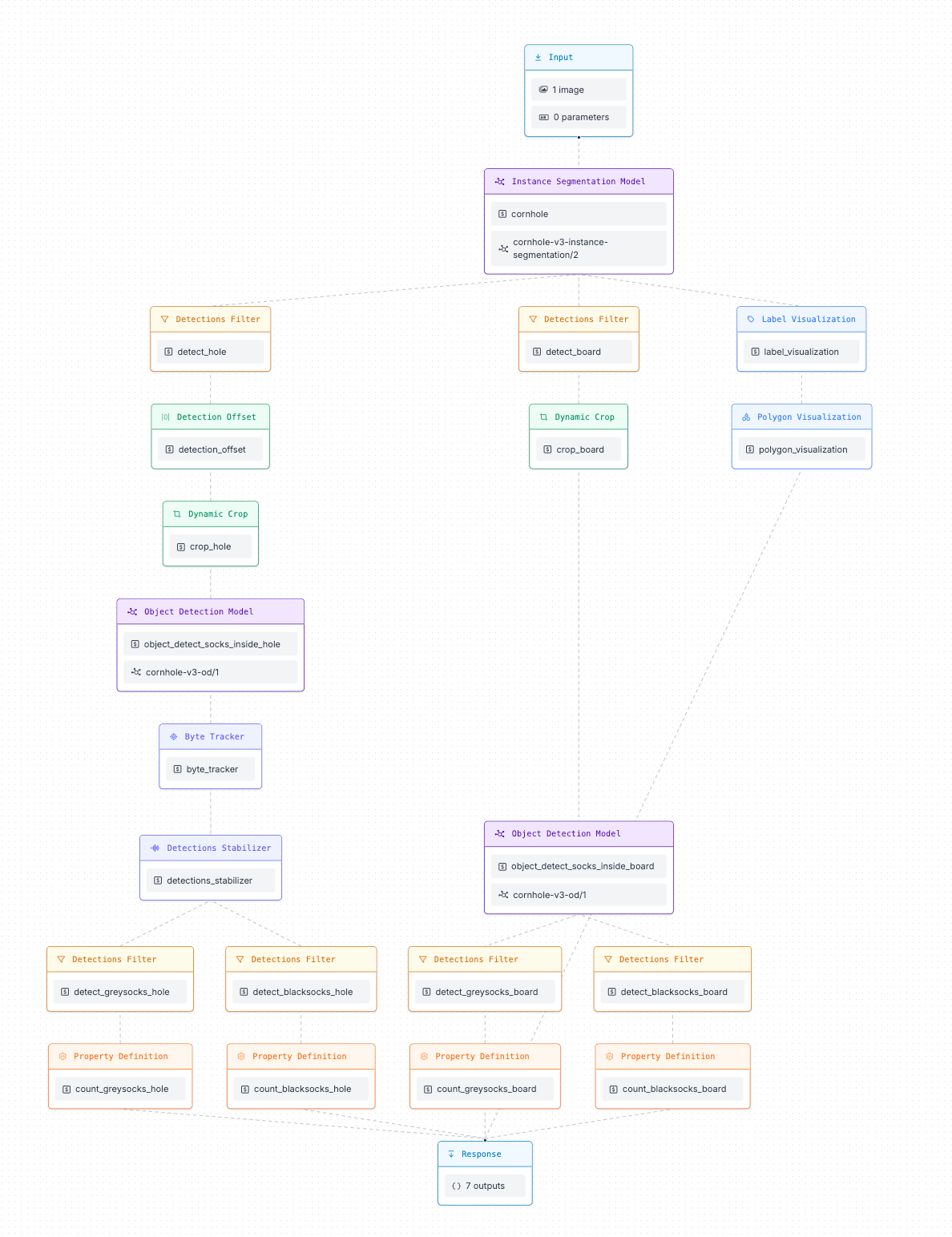

Below is the workflow created to achieve those results.

At a high level:

- Left Branch: Detects and zooms into the hole, then identifies and counts socks in that zone.

- Middle Branch: Repeats the process for the board.

- Right Branch: Visualizes the results to confirm functionality during deployment.
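The left and middle branches count socks inside the hole and board regions. As a simpler stand-in for that crop-then-detect approach, the sketch below assigns each sock detection to a zone by checking whether its center point falls inside the hole box or the board box. This swapped-in technique, the center-format `Box` tuple, and the function names are assumptions, not the workflow's actual blocks. The hole is checked first because its box sits inside the board's box.

```python
from typing import Iterable

Box = tuple[float, float, float, float]  # (x_center, y_center, width, height)


def contains(box: Box, x: float, y: float) -> bool:
    """True if point (x, y) lies inside the center-format box (edges included)."""
    xc, yc, w, h = box
    return abs(x - xc) <= w / 2 and abs(y - yc) <= h / 2


def count_by_zone(socks: Iterable[Box], board: Box, hole: Box) -> dict[str, int]:
    """Count sock detections whose centers fall in the hole, on the board, or elsewhere."""
    counts = {"hole": 0, "board": 0, "off": 0}
    for xc, yc, _w, _h in socks:
        if contains(hole, xc, yc):      # check the hole first: it lies inside the board box
            counts["hole"] += 1
        elif contains(board, xc, yc):
            counts["board"] += 1
        else:
            counts["off"] += 1
    return counts
```

A center-point test is cheap but can miscount a sock that only partly overlaps the hole; cropping to each zone and running detection there, as the workflow does, sidesteps some of those edge cases.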

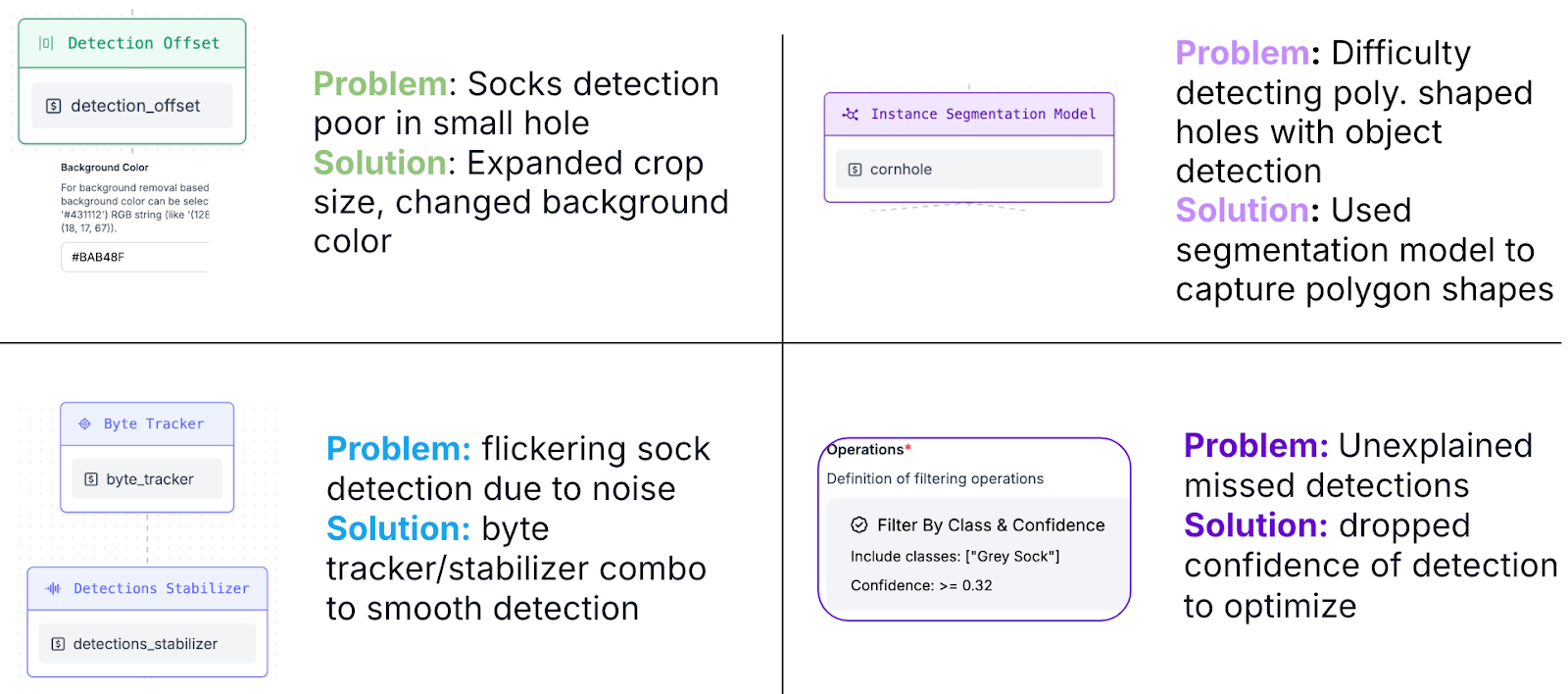

Choosing the right blocks and tuning their parameters was crucial at this stage to overcome the challenges of deploying a model on a live video stream, and Roboflow’s range of workflow blocks provided the tools to address them.

With the workflow in place, I initialized a Roboflow Inference Pipeline that ran it against the video frames at 4 FPS. Predictions were processed as follows:

- On each prediction, a custom sink wrote score lines onto the frame.

- The scores came from simple arithmetic on the workflow’s predictions, namely the socks detected on the board and in the hole.
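A sink along those lines could look roughly like the sketch below. It assumes the `inference` package's `InferencePipeline.init_with_workflow` entry point and OpenCV; the API key, workspace name, workflow ID, video path, and the `hole_count`/`board_count` output names are placeholders to replace with your own workflow's values, and the single-side points formula (3 per bag in the hole, 1 per bag on the board) is an assumption. Frames are saved with a running counter so the files form an unbroken numbered sequence for FFmpeg.

```python
import itertools
from pathlib import Path

OUT_DIR = Path("annotated_frames")   # hypothetical output folder
_frame_numbers = itertools.count()   # sequential names even if the pipeline skips frames


def score_text(hole: int, board: int) -> str:
    """Score line for one side, assuming 3 points per hole bag and 1 per board bag."""
    return f"Hole: {hole}  Board: {board}  Points: {3 * hole + board}"


def on_prediction(result: dict, video_frame) -> None:
    """Custom sink: called by InferencePipeline with the workflow outputs for one frame."""
    import cv2  # lazy import so score_text() can be used without OpenCV

    # Hypothetical output names; match them to your workflow's outputs.
    hole = int(result.get("hole_count", 0))
    board = int(result.get("board_count", 0))

    frame = video_frame.image.copy()  # numpy BGR image of the current frame
    cv2.putText(frame, score_text(hole, board), (20, 40),
                cv2.FONT_HERSHEY_SIMPLEX, 1.0, (255, 255, 255), 2)

    OUT_DIR.mkdir(exist_ok=True)
    cv2.imwrite(str(OUT_DIR / f"{next(_frame_numbers):05d}.png"), frame)


def main() -> None:
    from inference import InferencePipeline

    pipeline = InferencePipeline.init_with_workflow(
        api_key="YOUR_ROBOFLOW_API_KEY",      # placeholder
        workspace_name="your-workspace",      # placeholder
        workflow_id="your-workflow-id",       # placeholder
        video_reference="cornhole_game.mp4",  # placeholder video file
        max_fps=4,                            # matches the 4 FPS rate described above
        on_prediction=on_prediction,
    )
    pipeline.start()
    pipeline.join()  # block until the video is fully processed
```

Calling `main()` processes the video and leaves one annotated PNG per prediction in `annotated_frames/`. Keeping the score math in a plain function like `score_text` makes it easy to check without running the pipeline.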

Finally, the processed frames were combined using FFmpeg to create the output video.
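Stitching the saved frames back into a video is a single FFmpeg call. The sketch below wraps it in Python's `subprocess`; the frame-name pattern `%05d.png`, the output name, and the encoding flags are assumptions, and `-framerate 4` is chosen to match the 4 FPS pipeline rate so the output plays at the capture pace. The `scale` filter rounds the dimensions down to even numbers, which `libx264` requires for `yuv420p` output.

```python
import shutil
import subprocess


def ffmpeg_command(frame_dir: str, output: str, fps: int = 4) -> list[str]:
    """Build an FFmpeg command that encodes numbered PNG frames into an H.264 MP4."""
    return [
        "ffmpeg",
        "-y",                                        # overwrite output without prompting
        "-framerate", str(fps),                      # input rate for the image sequence
        "-i", f"{frame_dir}/%05d.png",               # 00000.png, 00001.png, ...
        "-vf", "scale=trunc(iw/2)*2:trunc(ih/2)*2",  # force even width and height
        "-c:v", "libx264",
        "-pix_fmt", "yuv420p",                       # widely playable pixel format
        output,
    ]


def stitch_frames(frame_dir: str, output: str, fps: int = 4) -> None:
    """Run FFmpeg; raises if it is missing or exits with an error."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg was not found on PATH")
    subprocess.run(ffmpeg_command(frame_dir, output, fps), check=True)
```

For example, `stitch_frames("annotated_frames", "cornhole_scored.mp4")` (hypothetical names) would encode the numbered frames into a single MP4. Passing the arguments as a list avoids shell quoting issues with the filter expression.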

Results

The final result was a working cornhole score tracker that accurately recorded scores and displayed who was in the lead.

This system eliminates any room for disputes: my opponents can no longer accuse me of cheating!

Cite this Post

Use the following entry to cite this post in your research:

Willem Zook. (Dec 19, 2024). Automate Game Scorekeeping with Vision AI. Roboflow Blog: https://blog.roboflow.com/automate-game-scorekeeping-vision-ai/