Automated sorting with computer vision uses camera-based systems to identify, classify, and sort items or materials by visual characteristics such as size, shape, color, texture, or barcode. The approach is common in manufacturing, logistics, agriculture, and recycling, where it increases efficiency, reduces labor costs, and minimizes errors.

The key components of a computer vision-based automated sorting system are:

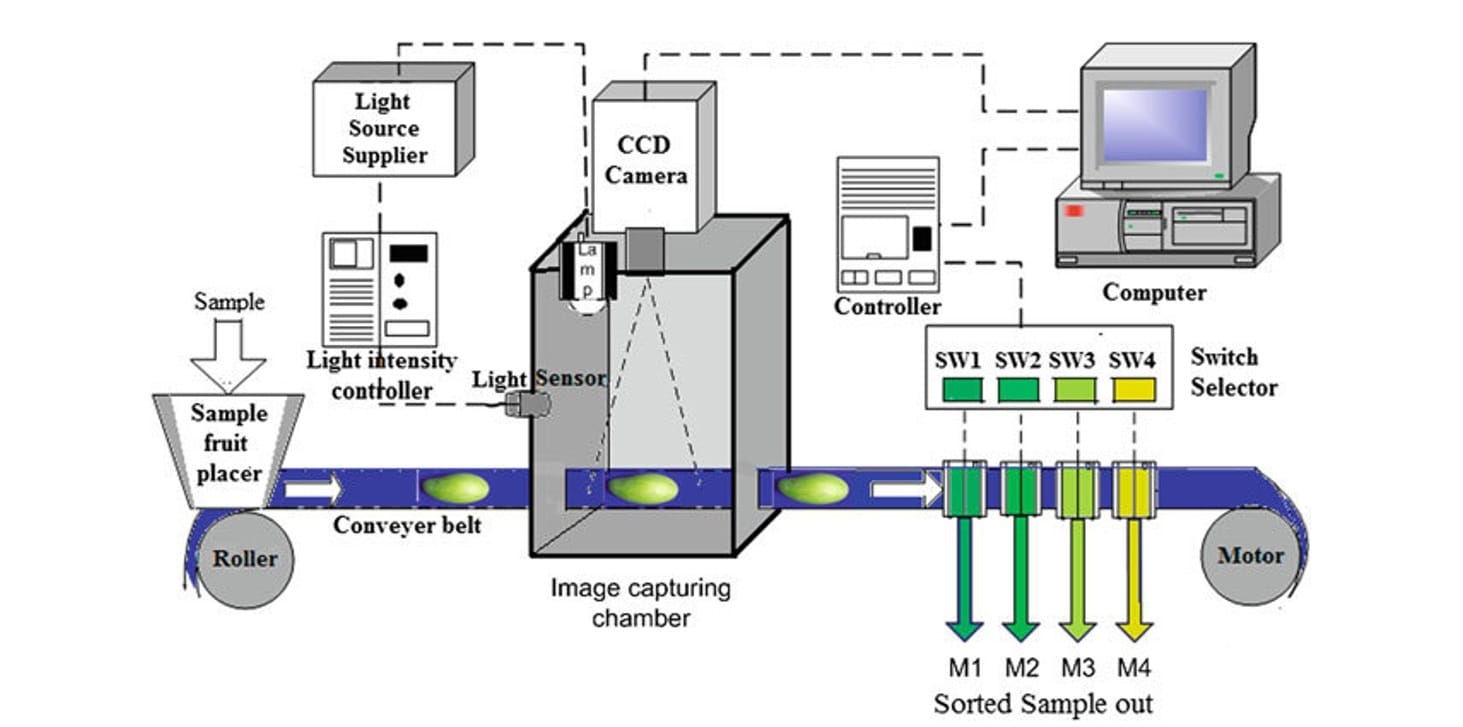

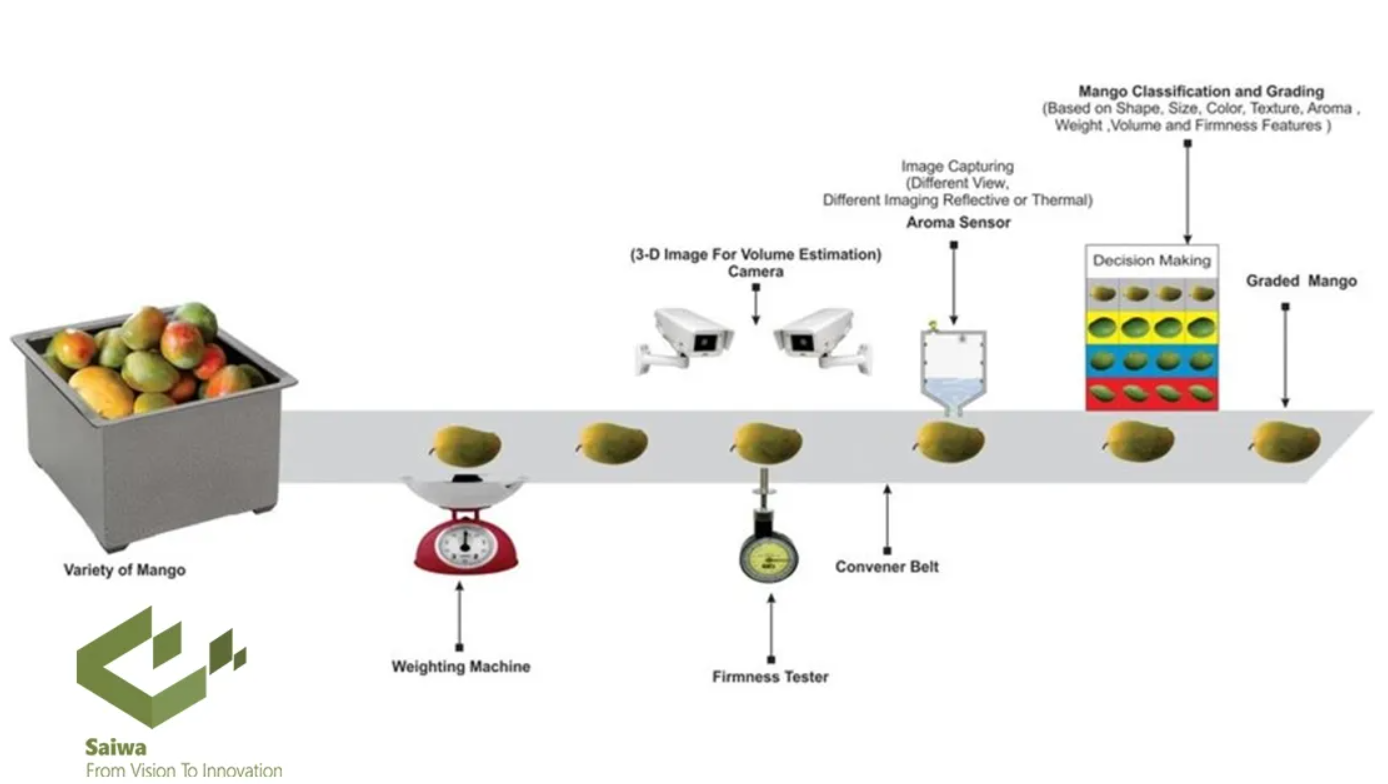

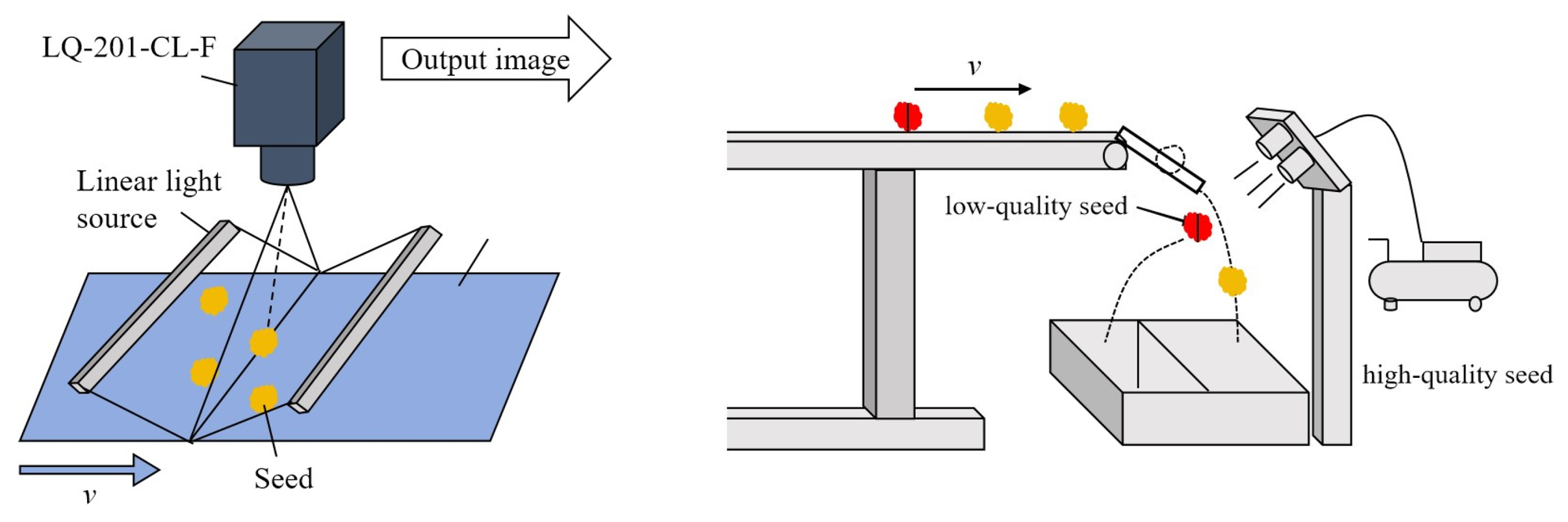

- Cameras and Sensors: High-speed cameras capture images or videos of items moving along a conveyor belt. Additional sensors like depth cameras, infrared cameras, or lasers may be used to capture detailed object information.

- Computer Vision Algorithms: These algorithms analyze visual data to identify and classify objects. Machine learning models such as convolutional neural networks (CNNs) often underpin these systems.

- Sorting Mechanism: Actuators, robotic arms, air jets, or diverters physically sort the items into appropriate categories based on the analysis.
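The three components above can be read as a capture, classify, actuate loop. The following is a minimal sketch of that loop, not a production design: the `Detection` type, the `run_sorting_loop` function, and the stand-in frames and actuators are all hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List


@dataclass
class Detection:
    label: str         # class name produced by the vision model
    confidence: float  # model confidence in [0, 1]


def run_sorting_loop(
    frames: Iterable[object],
    classify: Callable[[object], List[Detection]],
    actuators: Dict[str, Callable[[], None]],
) -> List[str]:
    """Capture -> classify -> actuate. Returns the labels that triggered an actuator."""
    triggered = []
    for frame in frames:                    # 1. camera/sensor stage
        detections = classify(frame)        # 2. computer vision stage
        if not detections:
            continue
        best = max(detections, key=lambda d: d.confidence)
        action = actuators.get(best.label)  # 3. sorting mechanism stage
        if action is not None:
            action()
            triggered.append(best.label)
    return triggered


# Usage with stand-in components (no real camera or hardware involved)
fake_results = {
    "frame-a": [Detection("good", 0.91)],
    "frame-b": [],
    "frame-c": [Detection("good", 0.40), Detection("reject", 0.75)],
}
log = []
routed = run_sorting_loop(
    ["frame-a", "frame-b", "frame-c"],
    classify=lambda f: fake_results[f],
    actuators={
        "good": lambda: log.append("open good gate"),
        "reject": lambda: log.append("fire air jet"),
    },
)
print(routed)  # ['good', 'reject']
```

Passing the classifier and actuators in as callables keeps the loop independent of any particular camera, model, or hardware, which is one way to test the control flow before the physical rig exists.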

Computer Vision-Based Autonomous Sorting Use Case Examples

Computer vision-based sorting is applied across several industries. The following are some example use cases.

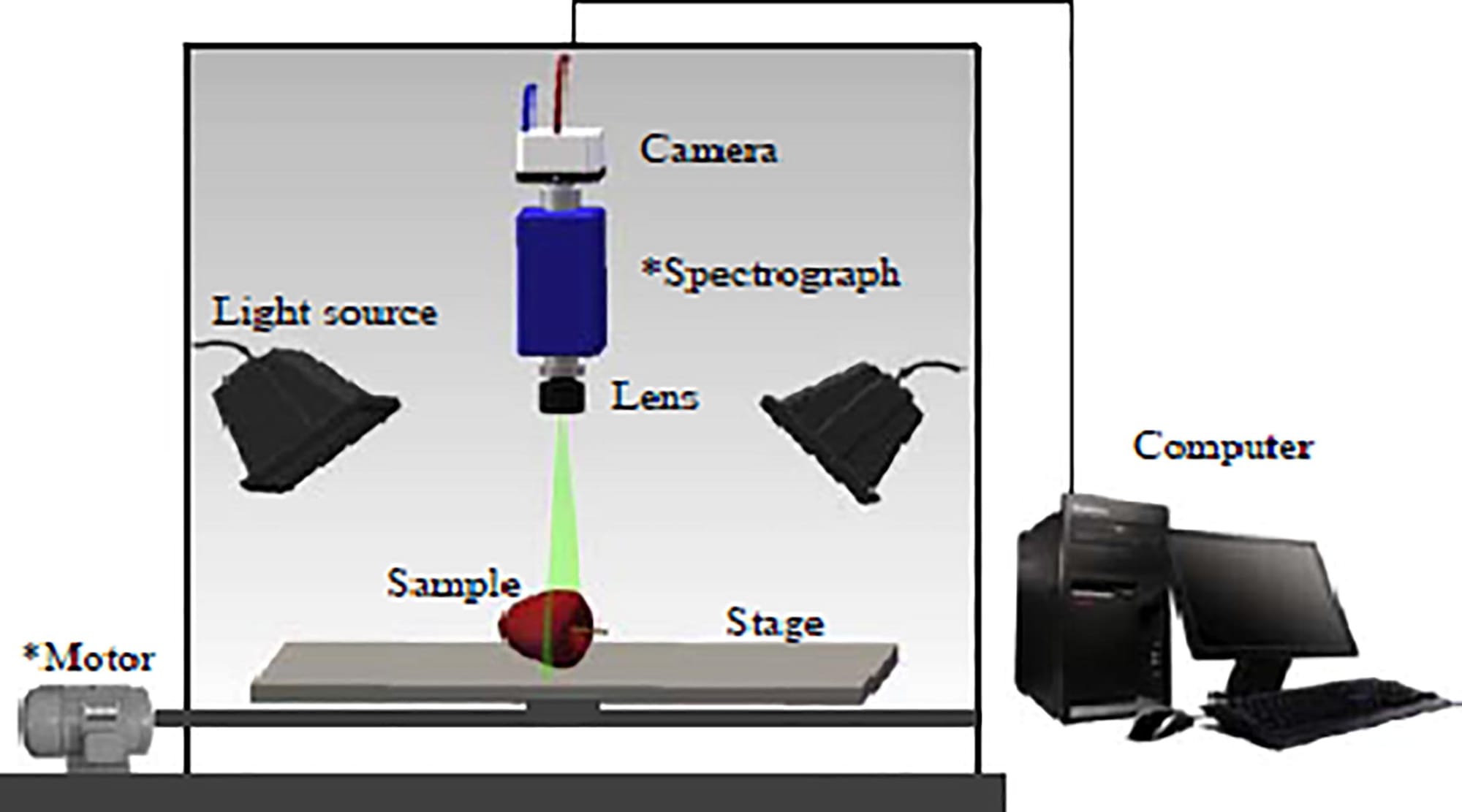

Agriculture: Fruit Grading and Sorting

These computer vision-based systems grade fruits like apples, oranges, and bananas based on size, shape, color, and other physical features. The system uses cameras to capture images of fruits on conveyor belts, analyzes their quality, and sorts them into grades such as premium, standard, or reject. This increases efficiency, ensures consistency, and reduces manual labor in grading.
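As a rough illustration of the grading step, the sketch below maps two measured features to the three grades mentioned above. The feature names (`diameter_mm`, `blemish_ratio`) and every threshold are assumptions made up for this example; a real line would tune them per fruit variety or replace the rules with a trained model.

```python
def grade_fruit(diameter_mm: float, blemish_ratio: float) -> str:
    """Map measured features to a grade using hypothetical thresholds.

    diameter_mm:   estimated fruit diameter from the image
    blemish_ratio: fraction of the visible surface flagged as blemished (0 to 1)
    """
    # Too small or too blemished: remove from the line
    if blemish_ratio > 0.10 or diameter_mm < 55:
        return "reject"
    # Large and nearly spotless: top grade
    if blemish_ratio <= 0.02 and diameter_mm >= 70:
        return "premium"
    return "standard"


print(grade_fruit(75, 0.01))  # premium
print(grade_fruit(65, 0.01))  # standard
print(grade_fruit(80, 0.20))  # reject
```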

Agriculture: Seed Sorting

This seed sorting system uses high-speed cameras and AI models to identify defective seeds or foreign materials in batches. Its sorting mechanisms separate viable seeds from defective ones based on size, texture, and color. This improves the quality of seeds for planting and boosts crop yield.

Agriculture: Vegetable Defect Sorting

In this vegetable defect sorting system, a computer vision model detects defects such as cracks, bruises, or spots in vegetables like potatoes, tomatoes, and carrots (and in fruits as well), and automatically removes the defective items from the production line. This ensures only healthy produce reaches consumers and reduces waste.

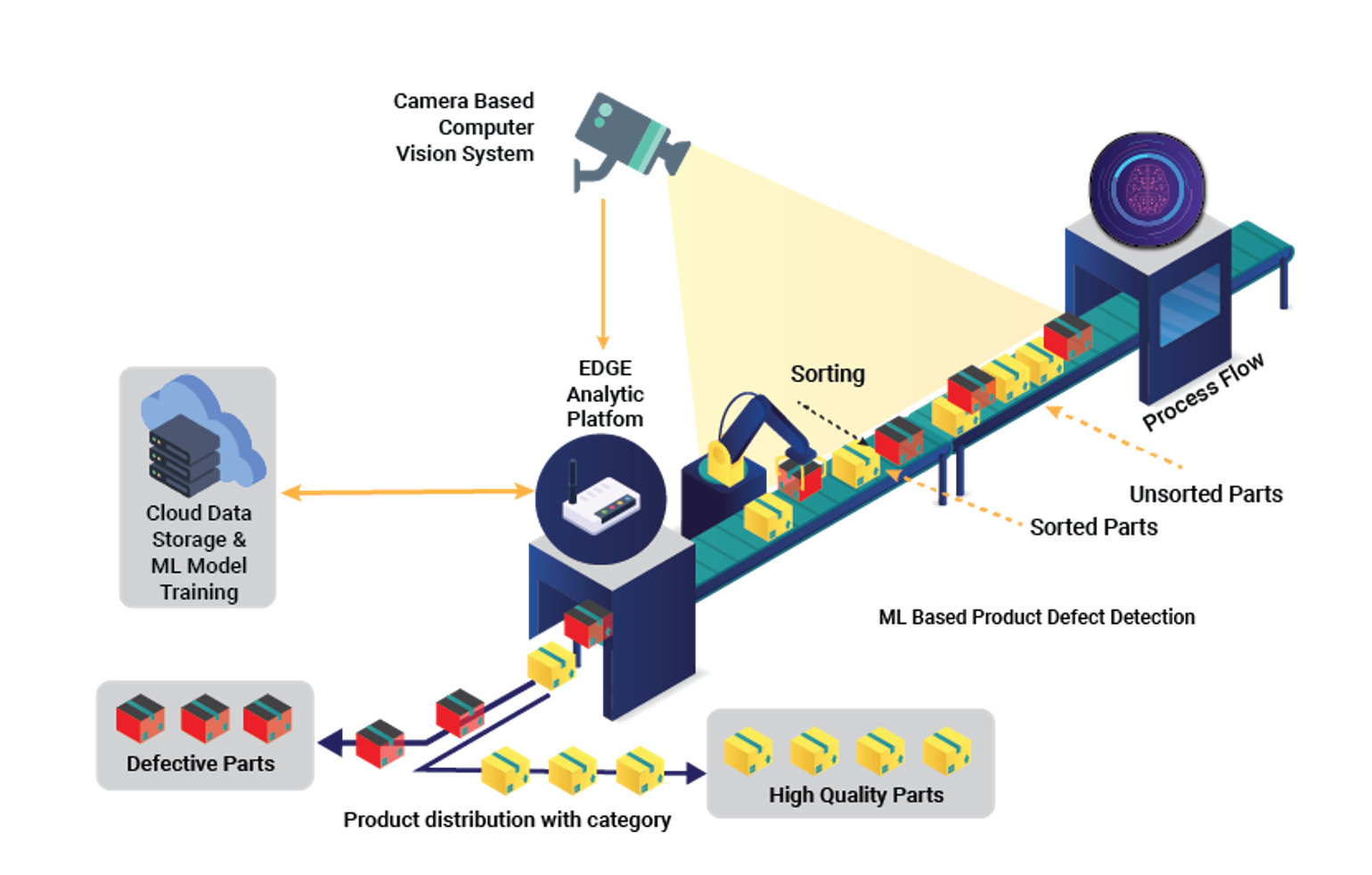

Manufacturing: Defective Product Sorting

This defective product sorting system uses a computer vision model to inspect products on assembly lines for defects like scratches, dents, or missing components. Faulty products are flagged and removed automatically. This improves product quality and reduces customer complaints.

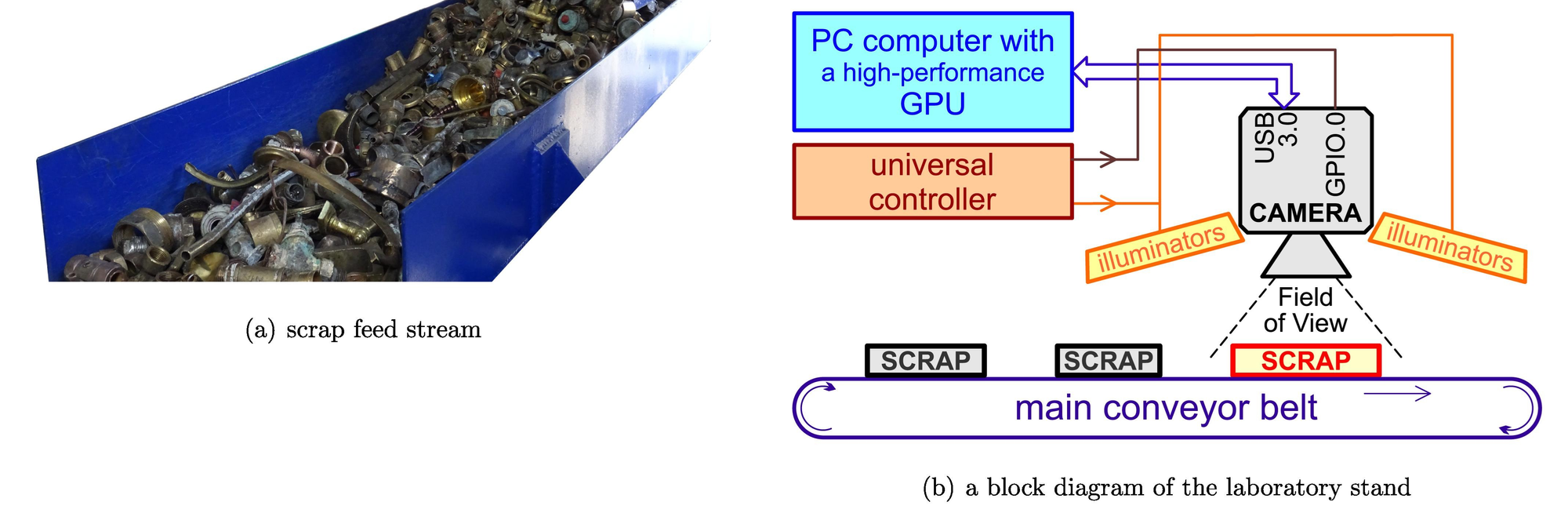

Manufacturing: Metal Scrap Sorting

In recycling facilities, this computer vision-based metal scrap sorting system identifies and sorts different types of metals based on their physical characteristics and reflective properties. This helps optimize recycling processes and reduces contamination in recycled materials.

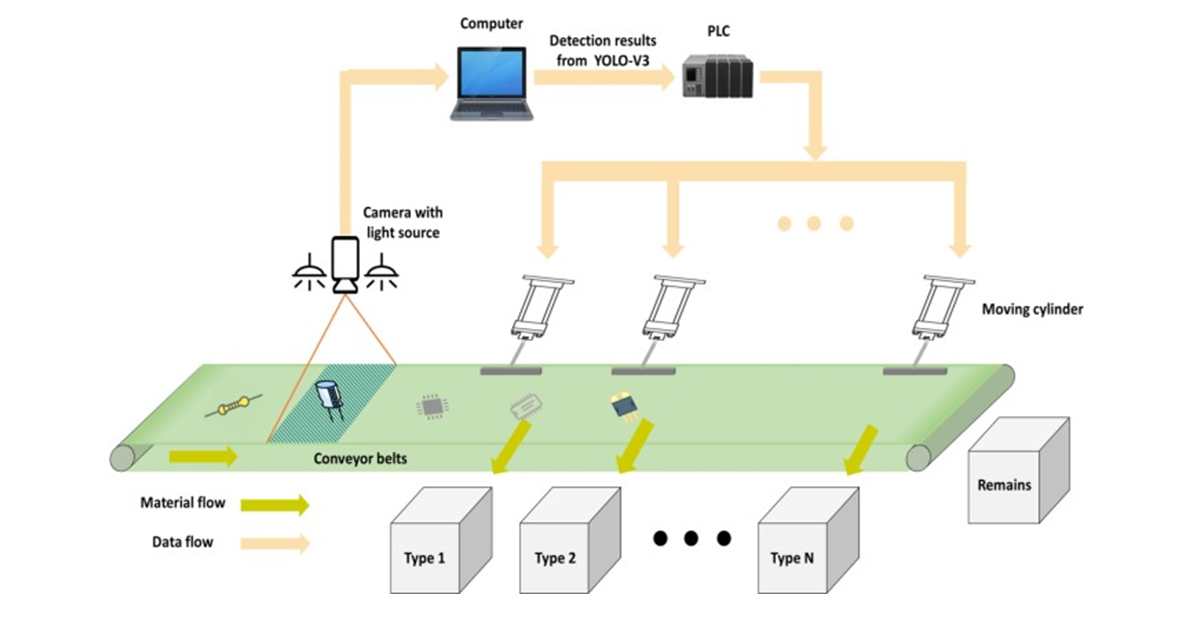

Manufacturing: Electronics Component Classification and Sorting

This electronic component classification and sorting system uses computer vision to identify components such as resistors, capacitors, or chips and sort them by type, size, and placement requirements. This increases efficiency in PCB assembly and reduces errors in component placement.

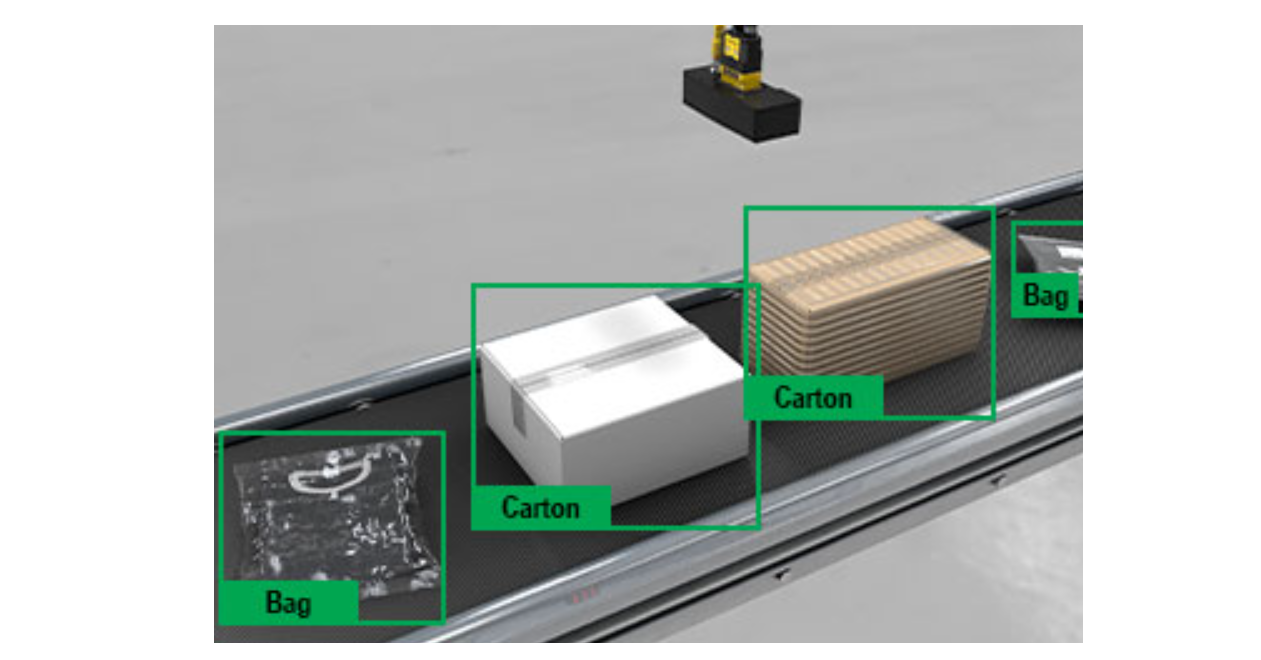

Logistics: Parcel Sorting in Warehouses

This computer vision-based system automates the detection and classification of diverse packages, including boxes, padded envelopes, and polybags. By verifying packaging types, it helps prevent misrouting and equipment jams. The system tracks parcels on conveyor belts, and robotic arms move them to the correct bin. It increases sorting speed and reduces shipping errors.

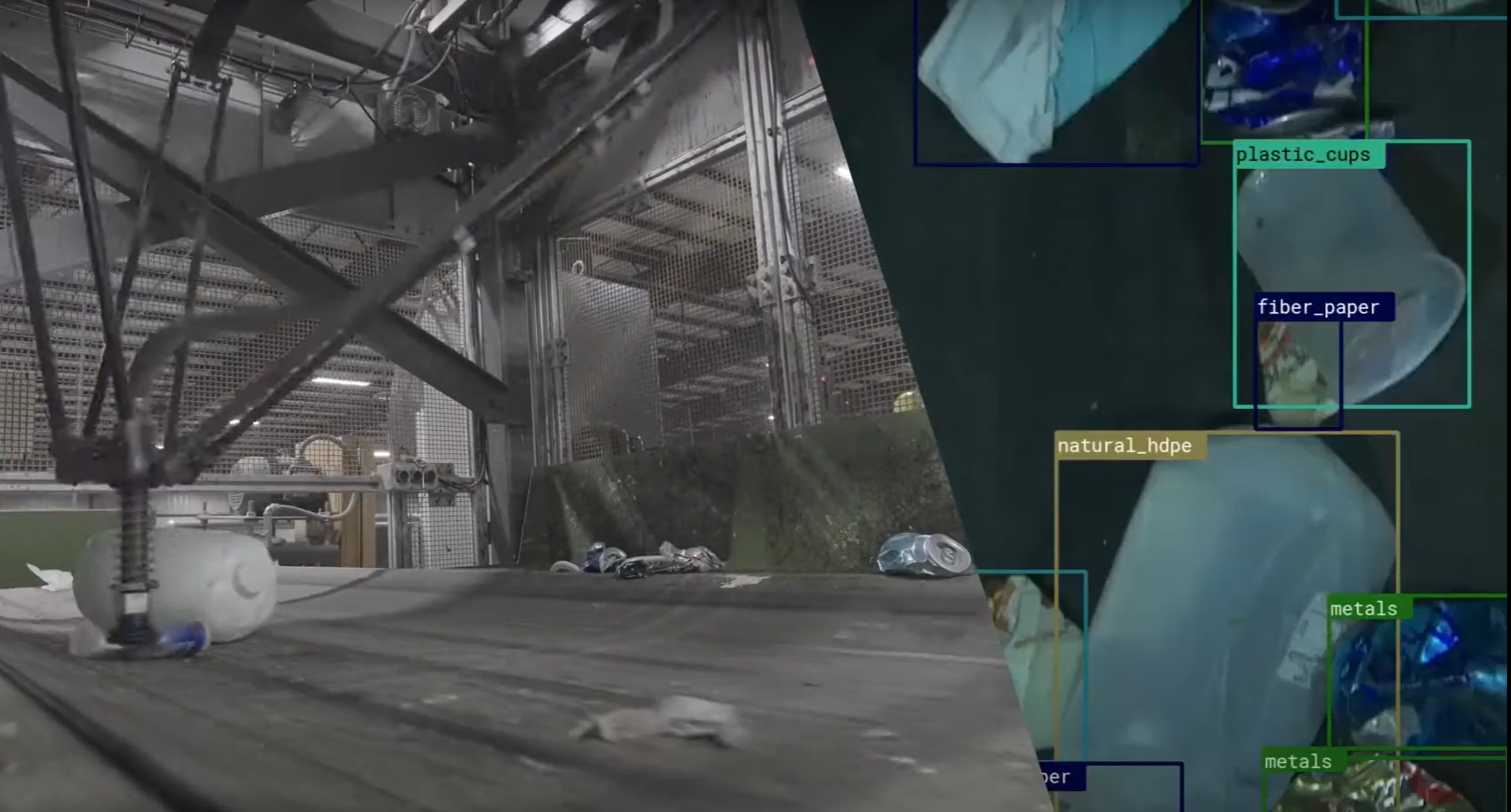

Supply Chain: Trash and Recycling Sorting

This system applies computer vision to waste management to improve the efficiency and accuracy of trash and recycling sorting. Using cameras and AI models, it identifies and classifies materials (such as plastics, glass, metals, and paper) on conveyor belts so they are directed into the appropriate recycling categories. This automation increases sorting precision and significantly reduces the need for manual intervention, leading to higher recovery rates of recyclable materials and less landfill waste. AI-guided robots have been developed to sort recycled materials with high accuracy, improving the overall efficiency of recycling facilities.

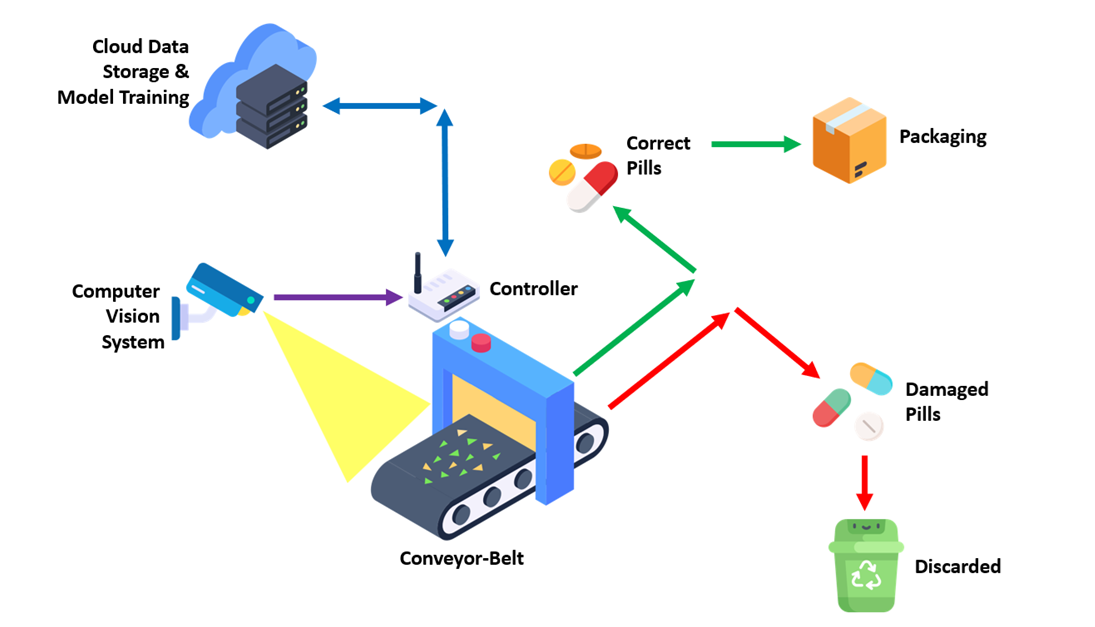

Pharmaceuticals: Pill Sorting

The pharmaceutical industry uses computer vision-assisted pill sorting to enhance efficiency and accuracy. Automated systems equipped with cameras and computer vision algorithms inspect and sort tablets, identifying defects such as chips, discolorations, or incorrect sizes, so that only quality products proceed through the supply chain. For example, pharmaceutical pill sorters can process many tablets (pills) per minute, effectively removing broken tablets and particles. This automation reduces manual labor, minimizes errors, and maintains product integrity throughout distribution.

These are only some of the use cases for computer vision-based sorting; many more exist in other domains. By integrating computer vision into sorting operations, industries achieve greater efficiency, accuracy, and productivity.

How to Build an Automated Sorting System

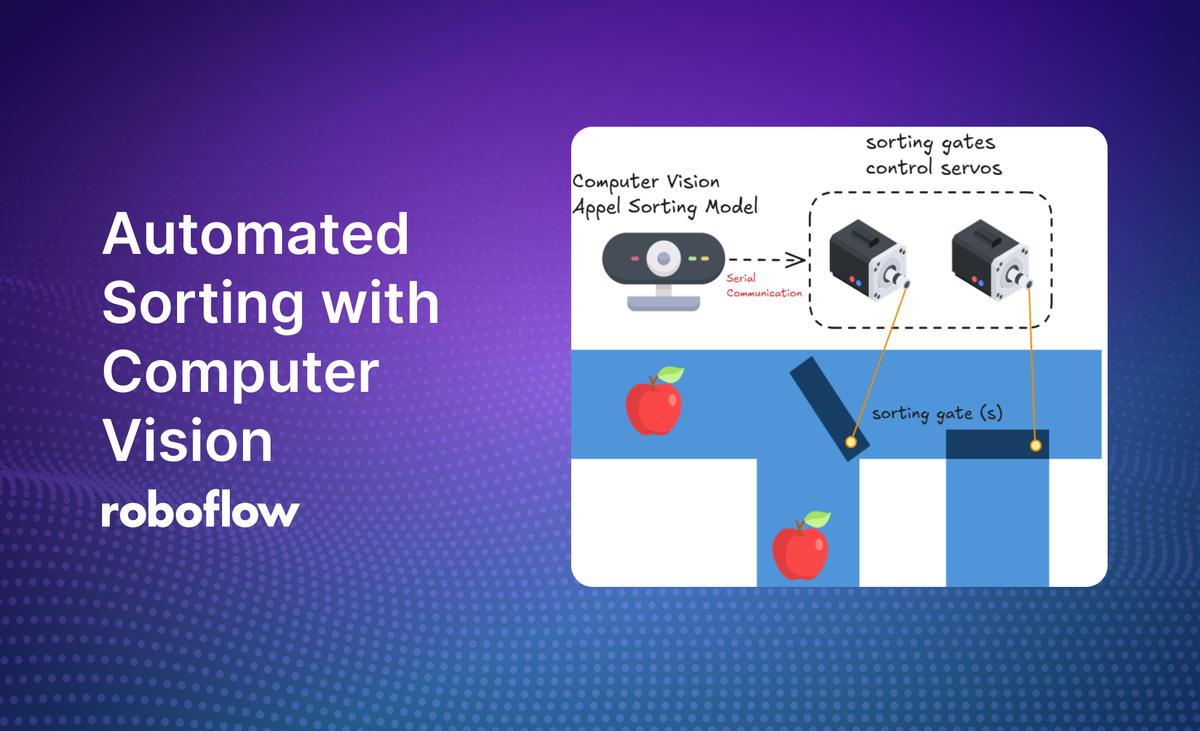

This section explores how Roboflow simplifies the creation of automated sorting systems using computer vision, with apple fruit sorting as an example. As highlighted in earlier sections, the core component of any sorting system is the computer vision model, which drives the decision-making process. Here, we will demonstrate how Roboflow aids in building such a model. The steps outlined below provide a clear path to developing an automated sorting system.

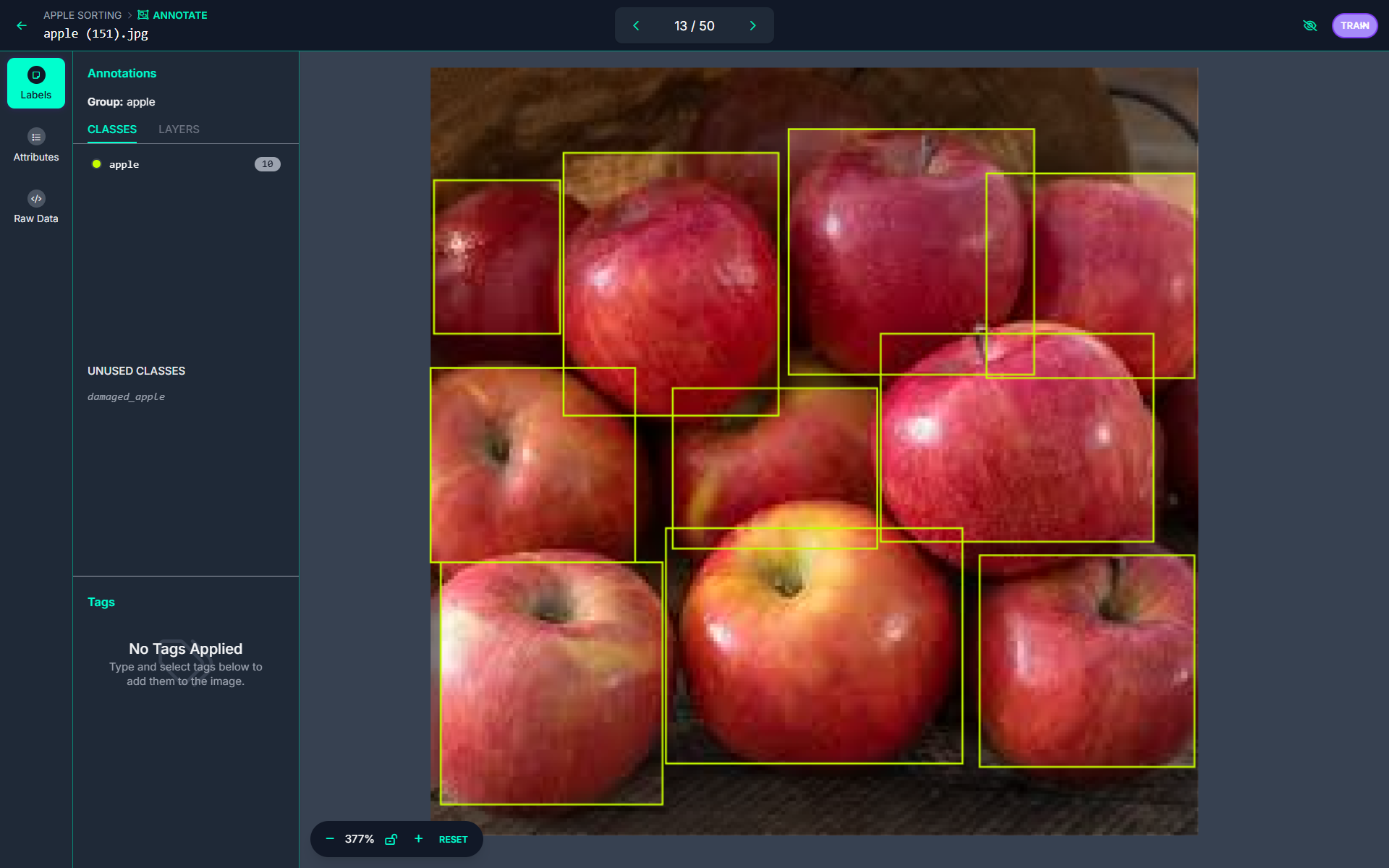

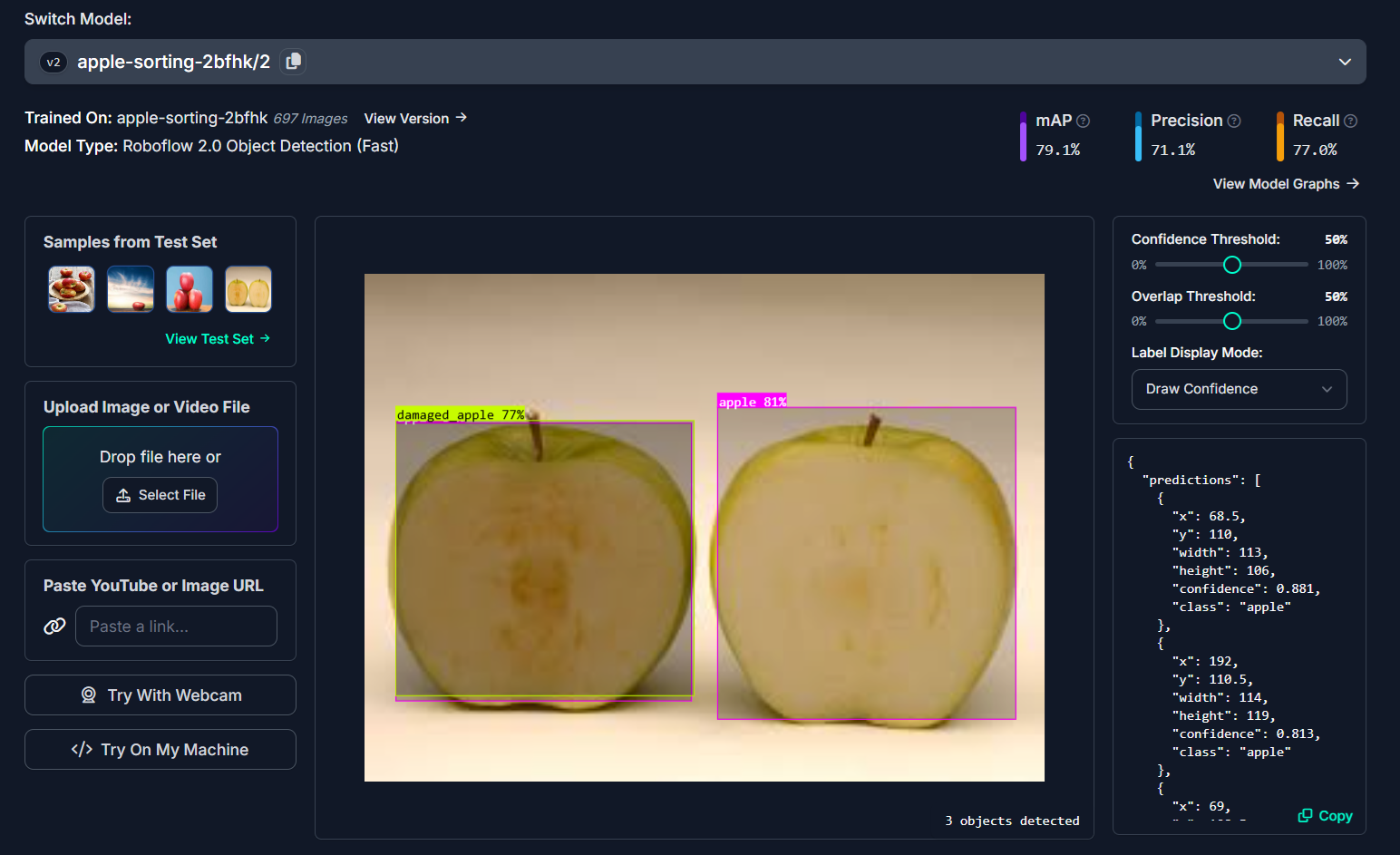

We will develop an object detection model for apple sorting that distinguishes good apples from damaged ones. The classes for this project are "apple" and "damaged_apple". Start by creating a Roboflow object detection project to manage the dataset and streamline model development.

Step #1: Collect and annotate dataset

The first step in building an object detection model is to gather a diverse dataset of apple images. These images should include various scenarios, such as different lighting conditions, angles, and sizes of apples, to ensure the model's robustness. The dataset should capture both good apples and damaged ones to represent the two classes effectively.

After collecting the images, annotate them with a bounding box tool. Draw a rectangle around each apple and label it as either "apple" or "damaged_apple". These labeled bounding boxes serve as ground truth data, enabling the model to learn to identify and classify objects accurately during training.
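To make concrete what a bounding box annotation encodes, here is a small sketch that converts a pixel-coordinate box into the normalized `class cx cy w h` line used by the YOLO text format, one common export format for detection datasets. The function name `to_yolo_line`, the example image size, and the class id are assumptions for illustration; the annotation tool handles this conversion for you.

```python
def to_yolo_line(class_id: int, box: tuple, image_w: int, image_h: int) -> str:
    """Convert (x_min, y_min, x_max, y_max) in pixels to a YOLO-format label line.

    YOLO labels store the box center and size, each normalized to [0, 1]
    by the image width and height.
    """
    x_min, y_min, x_max, y_max = box
    cx = (x_min + x_max) / 2 / image_w  # normalized center x
    cy = (y_min + y_max) / 2 / image_h  # normalized center y
    w = (x_max - x_min) / image_w       # normalized width
    h = (y_max - y_min) / image_h       # normalized height
    return f"{class_id} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}"


# Hypothetical example: one box in a 640x480 image, class id 0
line = to_yolo_line(0, (160, 120, 480, 360), image_w=640, image_h=480)
print(line)  # 0 0.500000 0.500000 0.500000 0.500000
```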

Step #2: Train the Model

Once the dataset has been annotated, the next step is to train the object detection model using the Roboflow 2.0 Object Detection (Fast) pipeline.

The above image shows the result of an object detection model trained using Roboflow 2.0 Object Detection (Fast) for apple sorting. The model identifies and classifies apples as either "apple" (good) or "damaged_apple" with respective confidence scores. The performance metrics (mAP: 79.1%, Precision: 71.1%, Recall: 77.0%) indicate the model's effectiveness in identifying and classifying apples.
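For readers less familiar with these metrics, the sketch below shows how precision and recall are defined from detection counts, and combines the reported precision and recall into an F1 score. The helper names and the example counts are hypothetical; only the 71.1% and 77.0% figures come from the training result above.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of predicted boxes that were correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """Fraction of ground-truth objects that were found."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if (p + r) else 0.0


# Hypothetical counts: 8 correct detections, 2 false alarms, 2 missed apples
print(precision(tp=8, fp=2))  # 0.8
print(recall(tp=8, fn=2))     # 0.8

# F1 from the precision and recall reported for the trained model
reported_f1 = f1_score(0.711, 0.770)
print(round(reported_f1, 3))  # 0.739
```

A precision below recall, as here, suggests the model raises false alarms somewhat more often than it misses apples, which matters when choosing a confidence threshold for the sorting step.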

Step #3: Build inference script

In this step, we build an inference script using the Roboflow Inference API and the Supervision library. The script processes a real-time camera stream to identify good and damaged apples. The detected labels are translated into commands and sent over a serial port to a connected controller, such as an Arduino development board, which drives the sorting mechanism and separates good apples from damaged ones.

```python
import time

import cv2
import serial
import supervision as sv
from inference import InferencePipeline
from inference.core.interfaces.camera.entities import VideoFrame

api_key = "YOUR_ROBOFLOW_API_KEY"

# Initialize serial communication with Arduino.
# 'COM4' is a Windows port name; on Linux or macOS use the matching device path.
arduino = serial.Serial('COM4', 9600, timeout=1)
time.sleep(2)  # Allow Arduino to reset


def send_command_to_arduino(command: str):
    """Send a newline-terminated command to Arduino via serial."""
    try:
        # The firmware reads with readStringUntil('\n'), so terminate each
        # command with a newline to avoid waiting for the serial timeout.
        arduino.write(f"{command}\n".encode())
        print(f"Sent to Arduino: {command}")
    except Exception as e:
        print(f"Error sending data to Arduino: {e}")


# Initialize annotators
label_annotator = sv.LabelAnnotator()
box_annotator = sv.BoxAnnotator()

# Last command sent, so the same command is not resent on every frame
last_command = None


def my_custom_sink(predictions: dict, video_frame: VideoFrame):
    global last_command  # Retain the value across calls

    # Extract labels from predictions
    labels = [p["class"] for p in predictions.get("predictions", [])]

    # Convert predictions to Supervision Detections
    detections = sv.Detections.from_inference(predictions)

    # Annotate the frame
    image = label_annotator.annotate(
        scene=video_frame.image.copy(), detections=detections, labels=labels
    )
    image = box_annotator.annotate(image, detections=detections)

    # Display the annotated image
    cv2.imshow("Predictions", image)

    # Determine the appropriate command based on the labels
    if "apple" in labels:
        current_command = "G_APPLE"  # Command for good apples
    elif "damaged_apple" in labels:
        current_command = "D_APPLE"  # Command for damaged apples
    else:
        current_command = None  # No command for other cases

    # Send the command only if it has changed
    if current_command != last_command:
        if current_command is not None:  # Only send if there's a valid command
            send_command_to_arduino(current_command)
        last_command = current_command  # Update the last command

    cv2.waitKey(1)  # Give OpenCV time to refresh the display window


pipeline = InferencePipeline.init(
    model_id="apple-sorting-2bfhk/2",
    video_reference=0,  # Use the default webcam
    on_prediction=my_custom_sink,
    api_key=api_key,
)
pipeline.start()
pipeline.join()
```

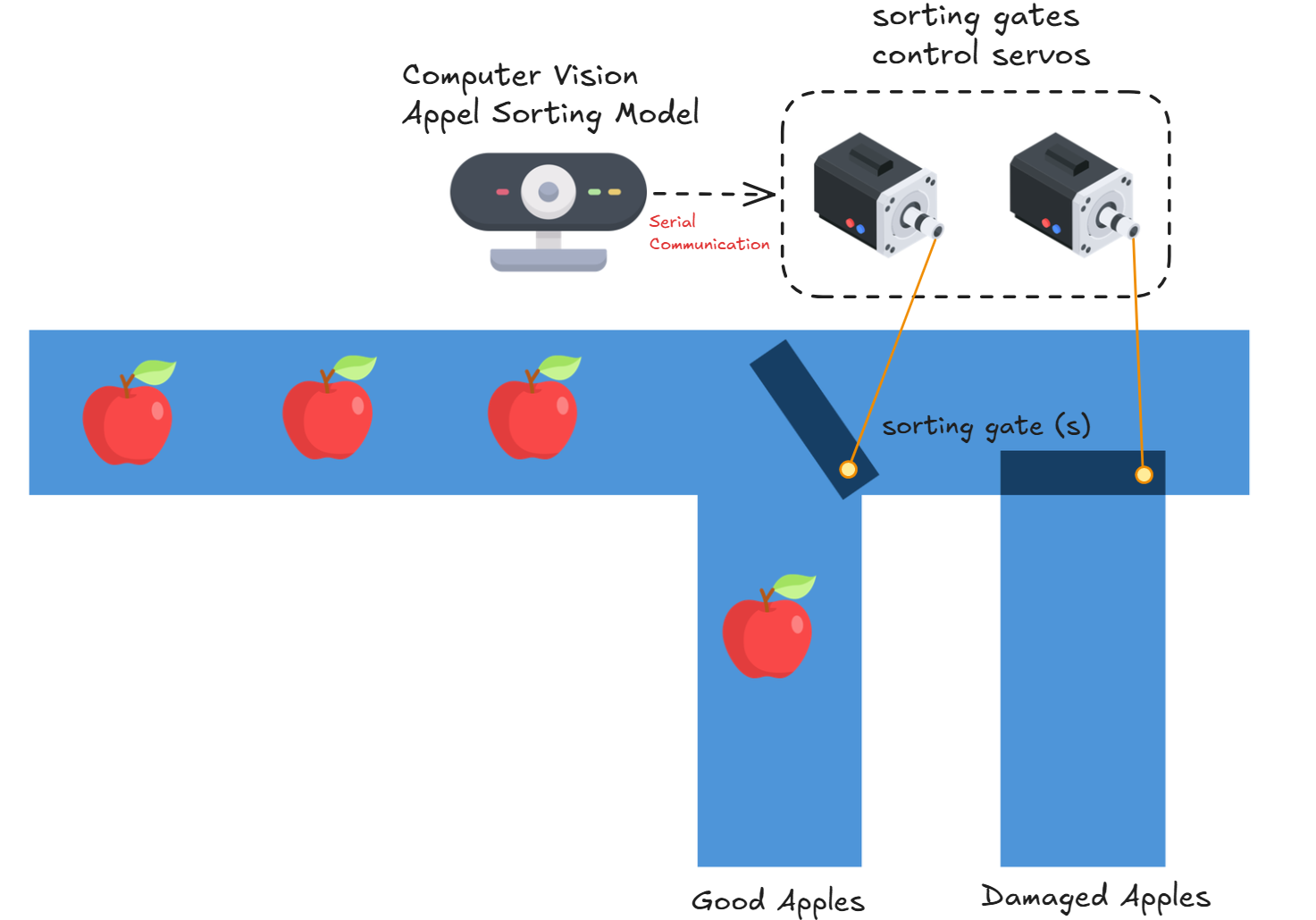
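The sink in the script picks a command from whichever labels appear in a frame, and prefers "apple" whenever both labels are present. One possible variation, sketched below, filters out low-confidence predictions and lets the most confident remaining detection decide. This is an alternative rule, not the script's behavior: `choose_command`, `COMMANDS`, and the `min_confidence` default are hypothetical, and the sketch assumes each prediction dict carries a `confidence` value alongside `class`.

```python
from typing import Optional

# Mapping from model class names to the serial commands used by the firmware
COMMANDS = {"apple": "G_APPLE", "damaged_apple": "D_APPLE"}


def choose_command(predictions: dict, min_confidence: float = 0.5) -> Optional[str]:
    """Return the command for the most confident known detection, or None."""
    candidates = [
        p for p in predictions.get("predictions", [])
        if p.get("class") in COMMANDS and p.get("confidence", 0.0) >= min_confidence
    ]
    if not candidates:
        return None
    best = max(candidates, key=lambda p: p["confidence"])
    return COMMANDS[best["class"]]


# Usage with a hand-written response in the same shape the sink receives
sample = {
    "predictions": [
        {"class": "apple", "confidence": 0.42},
        {"class": "damaged_apple", "confidence": 0.88},
    ]
}
print(choose_command(sample))  # D_APPLE
```

Because the function takes a plain dict and returns a string, it can be unit tested without a camera or an Arduino attached.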

Step #4: Build the sorting mechanism

The system shown in the image is an automated sorting mechanism for apples, where servos are used to guide apples into appropriate bins based on their classification as "good apple" or "damaged apple".

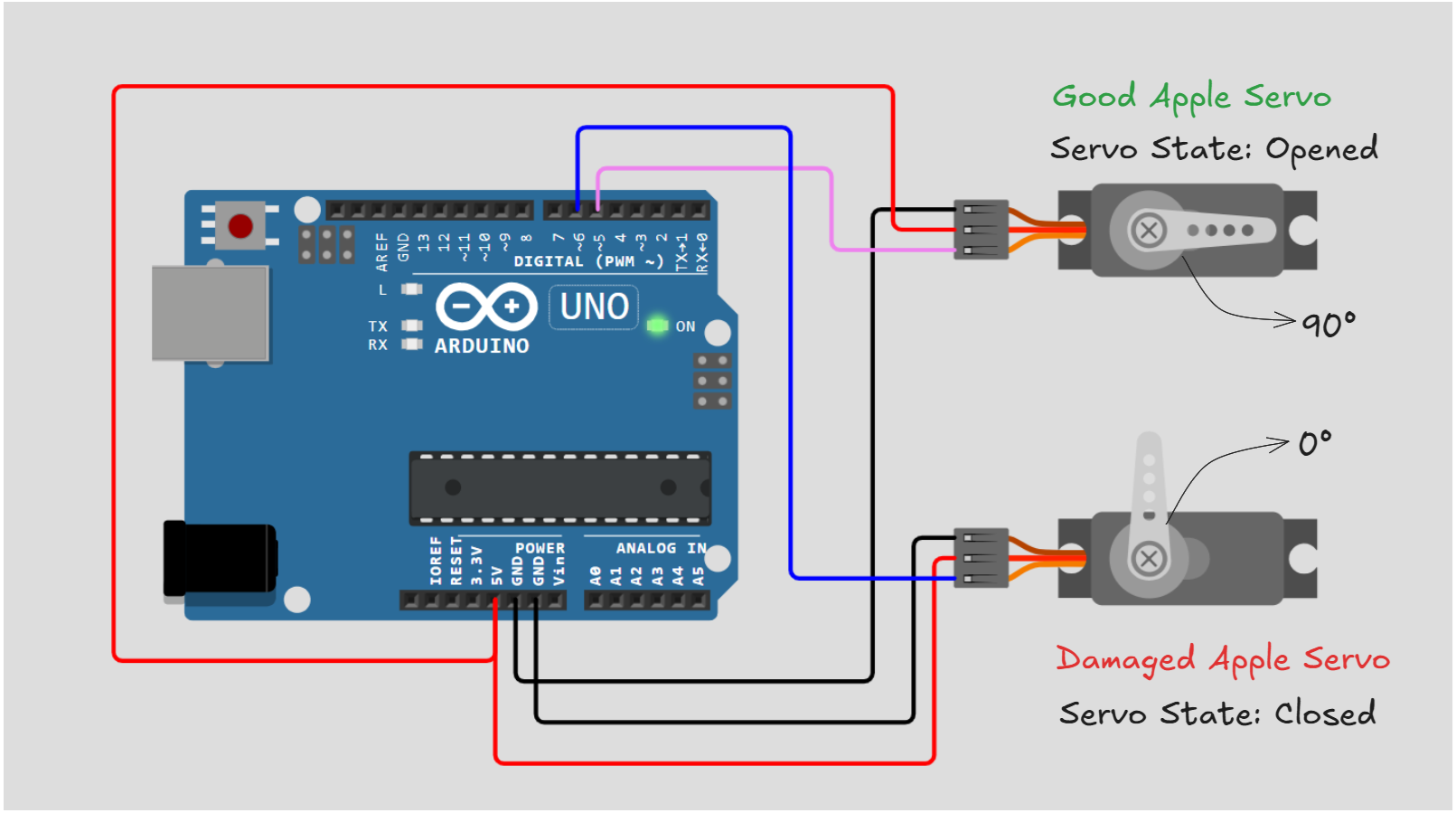

The circuit consists of an Arduino Uno and two servo motors. The servo motors are connected to the Arduino's PWM pins (pin 5 for the Good Apple Servo and pin 6 for the Damaged Apple Servo).

The Arduino communicates with the computer vision system through a USB connection, receiving commands via the serial port. These commands dictate the servo positions, allowing them to open or close sorting gates. The circuit ensures that the servos operate in coordination with the classification results, directing apples into their respective bins.

Here is the firmware code for Arduino:

```cpp
#include <Servo.h>

int servoPin_1 = 5;  // Good Apple Servo
int servoPin_2 = 6;  // Damaged Apple Servo

Servo good_apple_servo, damaged_apple_servo;

void setup() {
  // put your setup code here, to run once:
  Serial.begin(9600);
  good_apple_servo.attach(servoPin_1);
  damaged_apple_servo.attach(servoPin_2);
  good_apple_servo.write(0);
  damaged_apple_servo.write(0);
}

void loop() {
  // put your main code here, to run repeatedly:
  if (Serial.available() > 0) {
    String command = Serial.readStringUntil('\n');  // Read the command
    command.trim();  // Remove extra whitespace

    // Debug: Print the received command
    Serial.print("Received command: ");
    Serial.println(command);

    if (command == "G_APPLE") {
      good_apple_servo.write(90);    // Opens sort line for good apple
      damaged_apple_servo.write(0);  // Closes sort line for damaged apple
    } else if (command == "D_APPLE") {
      damaged_apple_servo.write(90);  // Opens sort line for damaged apple
      good_apple_servo.write(0);      // Closes sort line for good apple
    }
  }
}
```

How the Autonomous Sorting System Works

Components Setup

Two servos are connected to an Arduino Uno, each responsible for controlling a sorting path. The Good Apple Servo operates the path for apples classified as "good," while the Damaged Apple Servo manages the path for damaged apples.

Classification Process

A computer vision model processes the real-time camera stream to classify apples as either "good" or "damaged," using the inference script from Step #3. Based on the classification, a specific command (G_APPLE for good apples or D_APPLE for damaged apples) is sent to the Arduino via a serial port.

Servo Control

The Arduino receives the command and adjusts the position of the servos accordingly:

- For a good apple, the Good Apple Servo moves to 90° (open) to allow the apple to pass, while the Damaged Apple Servo stays at 0° (closed).

- For a damaged apple, the Damaged Apple Servo moves to 90° (open) to direct the apple, while the Good Apple Servo stays at 0° (closed).
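The servo rules above can be modeled in a few lines of Python, which is handy for checking the command logic on a desk without hardware. This is only a sketch that mirrors the firmware's branching: `apply_command` and the `"good"` / `"damaged"` dictionary keys are hypothetical names, not part of the Arduino code.

```python
from typing import Dict


def apply_command(command: str, angles: Dict[str, int]) -> Dict[str, int]:
    """Return the servo angles after a command, mirroring the firmware logic.

    angles maps "good" and "damaged" to the current servo angle in degrees.
    Unknown commands leave both servos where they are.
    """
    command = command.strip()  # the firmware trims whitespace and newlines too
    updated = dict(angles)
    if command == "G_APPLE":
        updated["good"] = 90     # open the good-apple gate
        updated["damaged"] = 0   # close the damaged-apple gate
    elif command == "D_APPLE":
        updated["damaged"] = 90  # open the damaged-apple gate
        updated["good"] = 0      # close the good-apple gate
    return updated


state = {"good": 0, "damaged": 0}  # both gates closed, as after setup()
state = apply_command("G_APPLE\n", state)
print(state)  # {'good': 90, 'damaged': 0}
state = apply_command("D_APPLE\n", state)
print(state)  # {'good': 0, 'damaged': 90}
```

Note that, as in the firmware, a gate stays open until the opposite command arrives; nothing closes both gates when no apple is in view.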

Sorting in Action

When an apple passes in front of the camera, the classification triggers servo movement. The servos act as gates to direct apples into separate bins or chutes, ensuring accurate sorting.

Automation and Feedback

The system continuously processes apples, adjusting servo positions dynamically based on the classification results. This real-time decision-making allows for high-speed and efficient sorting without human intervention.
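Per-frame classifications can flicker, for example when an apple is only partly in view. One way to keep the servos from chattering is to act only when a command wins a majority over the last few frames. The sketch below is an optional refinement under that assumption, not part of the system described here; `CommandSmoother`, `window`, and `min_votes` are hypothetical names and values.

```python
from collections import Counter, deque
from typing import Deque, List, Optional


class CommandSmoother:
    """Emit a command only once it has enough votes in a sliding window."""

    def __init__(self, window: int = 5, min_votes: int = 3):
        self.history: Deque[Optional[str]] = deque(maxlen=window)
        self.min_votes = min_votes

    def update(self, command: Optional[str]) -> Optional[str]:
        """Record the latest per-frame command and return the smoothed one."""
        self.history.append(command)
        votes = Counter(c for c in self.history if c is not None)
        if not votes:
            return None
        winner, count = votes.most_common(1)[0]
        return winner if count >= self.min_votes else None


# Usage: a noisy stream of per-frame commands
smoother = CommandSmoother(window=5, min_votes=3)
stream: List[Optional[str]] = ["G_APPLE", None, "G_APPLE", "D_APPLE", "G_APPLE"]
smoothed = [smoother.update(c) for c in stream]
print(smoothed)  # [None, None, None, None, 'G_APPLE']
```

The trade-off is latency: a larger window suppresses more noise but delays the gate, so the window has to fit the time an apple spends between the camera and the gate.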

This setup demonstrates how computer vision and Arduino-controlled servos work together to create a robust, automated sorting mechanism.

Autonomous Sorting Conclusion

In this post, we explored different use cases of computer vision-based sorting systems and learned how to build an automated apple sorting system using a combination of computer vision and Arduino-controlled servos.

By using an inference script powered by the Roboflow Inference API and the Supervision library, we achieved real-time classification of apples as "good" or "damaged." A simple circuit then provides dynamic control of the sorting paths based on the classification results.

This setup demonstrates the potential of combining AI-driven insights with hardware automation to streamline the sorting process. With some additional components and customization, this framework can be adapted for various sorting applications across different industries.

Cite this Post

Use the following entry to cite this post in your research:

Timothy M. (Dec 20, 2024). Automated Sorting with Computer Vision. Roboflow Blog: https://blog.roboflow.com/automated-sorting-with-computer-vision/