In this blog post, we will guide you through building a library management system that detects books and retrieves relevant information such as the book title, author, and publisher details.

This computer vision-based book inventory system automatically extracts book details and prepares inventory data for a library management system, so nobody has to type these details in manually.

Additionally, this system can categorize books by genre or subject matter without any human intervention. Since it is an automated system, it can process a larger number of entries over time, enhancing efficiency and accuracy in inventory management.

The extracted information can then be used for various purposes like cataloging, inventory management, and digital archiving.

The application we will develop uses an object detection model trained with Roboflow to detect book covers, Microsoft's Florence-2 vision foundation model to read the text on them, and the GPT-4o model API to structure that text. We will build the graphical user interface (GUI) for the project using Gradio.

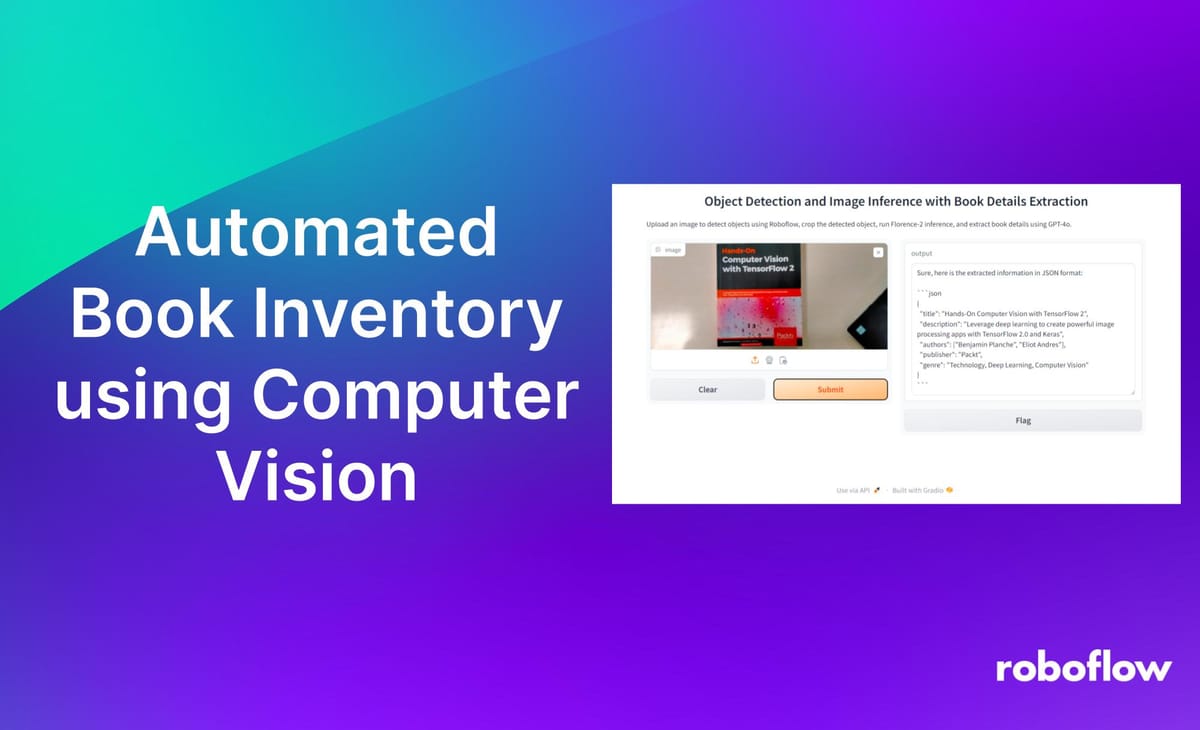

Here is an example of the system in action:

How the System Works

Here is how the automated book inventory system we will build works:

- Library staff present books to a camera-equipped device (e.g., smartphone or tablet) at the inventory check-in point. The device captures an image of each book cover or spine.

- An object detection model, trained using Roboflow, identifies the book in the captured image and crops the region of interest (ROI) containing the book cover or spine.

- The cropped image is processed using the Florence-2 vision foundation model. This advanced AI model accurately detects and extracts text from the book cover or spine.

- The extracted text is then sent to the GPT-4o multimodal model, which analyzes the text to precisely identify and structure the information (i.e. book title, author names, ISBN, and other relevant information such as descriptions or genres) in JSON format.

- This information can then be integrated into the library's digital inventory without any human intervention, allowing for efficient and scalable inventory management over time.

The following diagram illustrates how the system works.

Detecting and cropping the relevant Region of Interest (ROI) from an image is a crucial step in this system to avoid unnecessarily sending the entire image to the vision-language model. Additionally, deploying the Roboflow model to an edge device can enhance processing speed significantly.
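To make the end product of this workflow concrete, here is a minimal sketch of what one structured record might look like once the book details have been identified. The `BookRecord` class, its field names, and the `record_to_json` helper are illustrative assumptions and not part of the project code; the actual keys depend on the prompt you send to GPT-4o.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class BookRecord:
    """Hypothetical inventory record; the field names are illustrative only."""
    title: str
    authors: List[str] = field(default_factory=list)
    publisher: Optional[str] = None
    isbn: Optional[str] = None
    genre: Optional[str] = None


def record_to_json(record: BookRecord) -> str:
    """Serialize a record to the JSON form a downstream system could store."""
    return json.dumps(asdict(record), ensure_ascii=False)


# Example values taken from the sample text used later in this post
record = BookRecord(
    title="Computer Vision: Algorithms and Applications",
    authors=["Richard Szeliski"],
)
print(record_to_json(record))
```

Fields that are not printed on the cover, such as the ISBN in this example, simply stay empty (`null` in the JSON output).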

Steps for building the project

The steps to build this project are as follows:

- Train and deploy an object detection model.

- Write code to extract text in cropped images using Florence-2.

- Write code to detect and structure book information in the extracted text.

- Build the final Gradio application.

Please install the following libraries before executing the code:

!pip install gradio
!pip install transformers timm flash_attn einops
!pip install roboflow
!pip install inference-sdk
!pip install openai

Step #1: Train and deploy object detection model

Our first step is to build the object detection model using Roboflow. You can quickly create and host a computer vision model with Roboflow by following the getting started guide. In our example, we'll use a Book Inventory model built with Roboflow. The following image shows the Book Inventory project that I built for this blog post. It contains images of book covers.

Roboflow offers an inference script that utilizes the inference-sdk to run predictions on the deployed model.

# import the inference-sdk
from inference_sdk import InferenceHTTPClient

# initialize the client
CLIENT = InferenceHTTPClient(
    api_url="https://detect.roboflow.com",
    api_key="YOUR_API_KEY"
)

# infer on a local image
result = CLIENT.infer("YOUR_IMAGE.jpg", model_id="book_inventory/3")

We will use this code to create a Gradio application that detects the book cover in an image (uploaded from your computer or captured using a webcam) and crops the region of interest (ROI). You can follow this guide to understand how to crop the ROI.

The following is the full code, which demonstrates how to detect objects using Roboflow inference, crop the detected object, and then display the cropped image.

The crop_detected_object function saves the uploaded image (or the image captured from the webcam), runs inference to detect objects, and crops the image around the first detected object using the predicted bounding box coordinates. If no objects are detected, it reports this instead of returning a crop.

A Gradio interface is created, allowing users to upload images, detect objects, and display the cropped image.

# Import necessary libraries
import gradio as gr
from inference_sdk import InferenceHTTPClient

# Initialize the Roboflow client
API_URL = "https://detect.roboflow.com"
API_KEY = "YOUR_API_KEY"  # Replace with your Roboflow API key
MODEL_ID = "book_inventory/3"  # Replace with your model ID

client = InferenceHTTPClient(api_url=API_URL, api_key=API_KEY)

# Function to run inference and crop the detected object
def crop_detected_object(image):
    # Save the image locally (convert to RGB first because JPEG has no alpha channel)
    image_path = "uploaded_image.jpg"
    image.convert("RGB").save(image_path)

    # Run inference
    result = client.infer(image_path, model_id=MODEL_ID)

    # The output component expects an image, so surface "nothing found" as a Gradio error
    if not result['predictions']:
        raise gr.Error("No objects detected")

    # Get the first prediction (x and y are the center of the box)
    prediction = result['predictions'][0]
    x, y = prediction['x'], prediction['y']
    width, height = prediction['width'], prediction['height']

    # Calculate the bounding box
    left = x - width / 2
    top = y - height / 2
    right = x + width / 2
    bottom = y + height / 2

    # Crop the image
    cropped_image = image.crop((left, top, right, bottom))
    return cropped_image

# Create the Gradio interface
interface = gr.Interface(
    fn=crop_detected_object,
    inputs=gr.Image(type="pil", mirror_webcam=False),
    outputs=gr.Image(type="pil", format="png"),
    title="Object Detection and Cropping",
    description="Upload an image to detect objects using Roboflow, crop the detected object, and display the cropped image."
)

# Launch the Gradio app
interface.launch(share=True)

Here is the output of the code:

After running the application, download the cropped image from the interface (click the download button in the top-right corner of the output). We will use this image to test the code in the next step.
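The cropping code takes the first prediction and uses the box coordinates as they come back. As a possible refinement, the sketch below picks the prediction with the highest `confidence` value and clamps the box to the image bounds before cropping. The helper names `best_prediction` and `to_crop_box` are hypothetical, and the sample predictions are made up to mirror the shape of the detection response (center `x`/`y`, `width`, `height`, `confidence`).

```python
def best_prediction(predictions):
    """Return the prediction with the highest confidence, or None if the list is empty."""
    if not predictions:
        return None
    return max(predictions, key=lambda p: p.get("confidence", 0.0))


def to_crop_box(prediction, image_width, image_height):
    """Convert a center-based box to (left, top, right, bottom), clamped to the image."""
    half_w = prediction["width"] / 2
    half_h = prediction["height"] / 2
    left = max(0, int(round(prediction["x"] - half_w)))
    top = max(0, int(round(prediction["y"] - half_h)))
    right = min(image_width, int(round(prediction["x"] + half_w)))
    bottom = min(image_height, int(round(prediction["y"] + half_h)))
    return left, top, right, bottom


# Made-up predictions for illustration only
predictions = [
    {"x": 100, "y": 120, "width": 80, "height": 100, "confidence": 0.55},
    {"x": 300, "y": 200, "width": 240, "height": 420, "confidence": 0.91},
]
best = best_prediction(predictions)
print(to_crop_box(best, image_width=400, image_height=400))
```

The resulting tuple can be passed directly to `image.crop(...)`. Clamping matters mostly for books near the edge of the frame, where a predicted box can extend slightly past the image.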

Step #2: Write code to extract text in cropped image using Florence-2

Now, let's explore how to use Microsoft's Florence-2 model. We will build a Gradio app that uses Florence-2 to detect text in an uploaded image. Later, we will use this code to run inference on the cropped image from the previous step. Here is the code:

# Import necessary libraries
import gradio as gr
from transformers import AutoProcessor, AutoModelForCausalLM

# Load the model and processor
model = AutoModelForCausalLM.from_pretrained("microsoft/Florence-2-large", trust_remote_code=True)
processor = AutoProcessor.from_pretrained("microsoft/Florence-2-large", trust_remote_code=True)

# Define the function to run inference
# Gradio passes only the image, so the task prompt defaults to OCR
def run_inference(image, prompt="<OCR>"):
    # Make sure the image has three channels (uploads may include an alpha channel)
    image = image.convert("RGB")
    inputs = processor(text=prompt, images=image, return_tensors="pt")
    generated_ids = model.generate(
        input_ids=inputs["input_ids"],
        pixel_values=inputs["pixel_values"],
        max_new_tokens=1024,
        num_beams=3,
        do_sample=False
    )
    generated_text = processor.batch_decode(generated_ids, skip_special_tokens=False)[0]
    parsed_answer = processor.post_process_generation(generated_text, task=prompt, image_size=(image.width, image.height))
    return parsed_answer

# Create Gradio interface
interface = gr.Interface(
    fn=run_inference,
    inputs=[gr.Image(type="pil")],
    outputs=gr.Textbox(),
    title="Florence-2 Image Inference",
    description="Upload an image to run inference using Florence-2 model."
)

# Launch the Gradio app
interface.launch(share=True)

Run the code and upload the cropped image that you downloaded in the previous step. Running the code will generate the following output.
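For the `<OCR>` task, `post_process_generation` returns a dictionary keyed by the task name rather than a plain string, and the recognized text often contains line breaks. The sketch below shows one way to pull out a clean single-line string before passing it on. The `ocr_text` helper is a hypothetical name, and the sample dictionary is made up for illustration.

```python
import re


def ocr_text(parsed_answer, task="<OCR>"):
    """Extract the recognized text from the parsed result and collapse whitespace."""
    if isinstance(parsed_answer, dict):
        raw = parsed_answer.get(task, "")
    else:
        raw = str(parsed_answer)
    return re.sub(r"\s+", " ", raw).strip()


# Made-up sample in the shape of a parsed <OCR> result
sample = {"<OCR>": "\nComputer Vision:\nAlgorithms and Applications\n Richard Szeliski\n"}
print(ocr_text(sample))
```

Collapsing the whitespace is optional, but it keeps the text compact when it is later embedded in a prompt.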

Step #3: Write code to detect and structure book information in the extracted text

Now, we will use the GPT-4o model to identify book details within the input text. Large language models like GPT-4o are well suited to picking out specific pieces of information, such as a book title, from unstructured text.

In the following code, we test the GPT-4o model by extracting only the book title from the input text. Later, in the final step, we will extend this code to extract the other details we want to store from the text read off the uploaded book cover image.

# Import necessary libraries
from openai import OpenAI
import os

text = "Computer Vision: Algorithms and Applications, 2nd ed. © 2022 Richard Szeliski, The University of Washington."

client = OpenAI(
    # Reads OPENAI_API_KEY from the environment; replace the fallback if it is not set
    api_key=os.environ.get("OPENAI_API_KEY", "YOUR_API_KEY"),
)

# Example OpenAI Python library request
MODEL = "gpt-4o"
response = client.chat.completions.create(
    model=MODEL,
    messages=[
        {"role": "user", "content": f"Extract the book title from the following text: {text}"},
    ],
    temperature=0,
)

# Print Response
print(response.choices[0].message.content)

The output will look like this:

In this example we extract only the book title, but any other relevant information, such as the author name or publisher, can be extracted in the same way.
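Asking for several fields at once works best when the prompt names the keys explicitly and the reply is parsed defensively, since chat models sometimes wrap JSON in a Markdown code fence. The following is a minimal sketch under those assumptions. The field list, `build_prompt`, and `parse_json_reply` are hypothetical helpers, and the sample reply is made up rather than real model output.

```python
import json
import re

FIELDS = ["title", "authors", "publisher", "genre"]  # illustrative field list


def build_prompt(text, fields=FIELDS):
    """Compose a prompt asking for the given fields as a single JSON object."""
    return (
        "Extract the following fields from the book cover text and reply with "
        f"a single JSON object using exactly these keys: {', '.join(fields)}. "
        "Use null for anything that is not present.\n\n"
        f"Text: {text}"
    )


def parse_json_reply(reply):
    """Parse a model reply as JSON, tolerating a surrounding Markdown code fence."""
    cleaned = reply.strip()
    cleaned = re.sub(r"^`{3}(?:json)?\s*", "", cleaned, flags=re.IGNORECASE)
    cleaned = re.sub(r"\s*`{3}$", "", cleaned)
    try:
        return json.loads(cleaned)
    except json.JSONDecodeError:
        return None  # the caller decides how to handle an unparseable reply


# Made-up reply wrapped in a code fence (three backticks, built indirectly)
fence = "`" * 3
reply = fence + 'json\n{"title": "Computer Vision: Algorithms and Applications", "authors": ["Richard Szeliski"]}\n' + fence
print(parse_json_reply(reply))
```

The string returned by `build_prompt` would replace the `content` value in the request above. The Chat Completions API also accepts `response_format={"type": "json_object"}`, which may make the fence handling unnecessary; the parser is kept here as a fallback.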

Step #4: Build the final Gradio Application

This is the final step. We will build a Gradio application that integrates the code from all the previous steps. The following code detects and processes text from an uploaded image. It initializes a Roboflow client to detect objects in the image (using the object detection model trained with Roboflow), crops the detected area, and runs text extraction using Microsoft's Florence-2 model. The extracted text is then processed by the GPT-4o model to identify and extract book details.

The complete pipeline includes object detection and cropping, text inference, and book details extraction. A Gradio interface lets users upload an image, process it through these steps, and view the extracted book details.

# Import necessary libraries
import gradio as gr
from inference_sdk import InferenceHTTPClient
from transformers import AutoProcessor, AutoModelForCausalLM
from openai import OpenAI
import os

# Initialize the Roboflow client
API_URL = "https://detect.roboflow.com"
API_KEY = "YOUR_API_KEY"  # Replace with your actual API key
MODEL_ID = "book_inventory/3"  # Replace with your actual model ID

client = InferenceHTTPClient(api_url=API_URL, api_key=API_KEY)

# Load the Florence-2 model and processor
model = AutoModelForCausalLM.from_pretrained("microsoft/Florence-2-large", trust_remote_code=True)
processor = AutoProcessor.from_pretrained("microsoft/Florence-2-large", trust_remote_code=True)

# Function to run Roboflow inference and crop the detected object
def crop_detected_object(image):
    try:
        # Save the image locally (convert to RGB first because JPEG has no alpha channel)
        image_path = "uploaded_image.jpg"
        image.convert("RGB").save(image_path)

        # Run inference
        result = client.infer(image_path, model_id=MODEL_ID)

        # Get the first prediction (x and y are the center of the box)
        if result['predictions']:
            prediction = result['predictions'][0]
            x, y = prediction['x'], prediction['y']
            width, height = prediction['width'], prediction['height']

            # Calculate the bounding box
            left = x - width / 2
            top = y - height / 2
            right = x + width / 2
            bottom = y + height / 2

            # Crop the image
            cropped_image = image.crop((left, top, right, bottom))
            return cropped_image
        else:
            return "No objects detected"
    except Exception as e:
        return f"Error in crop_detected_object: {str(e)}"

# Function to run Florence-2 inference
def run_inference(image, prompt="<OCR>"):
    try:
        # Make sure the image has three channels
        image = image.convert("RGB")
        inputs = processor(text=prompt, images=image, return_tensors="pt")
        generated_ids = model.generate(
            input_ids=inputs["input_ids"],
            pixel_values=inputs["pixel_values"],
            max_new_tokens=1024,
            num_beams=3,
            do_sample=False
        )
        generated_text = processor.batch_decode(generated_ids, skip_special_tokens=False)[0]
        parsed_answer = processor.post_process_generation(generated_text, task=prompt, image_size=(image.width, image.height))
        return parsed_answer
    except Exception as e:
        return f"Error in run_inference: {str(e)}"

# Function to extract book details from text using GPT-4o
def extract_details(text):
    try:
        # Separate name so the OpenAI client is not confused with the Roboflow client above
        openai_client = OpenAI(
            # Reads OPENAI_API_KEY from the environment; replace the fallback if it is not set
            api_key=os.environ.get("OPENAI_API_KEY", "YOUR_API_KEY"),
        )
        MODEL = "gpt-4o"
        response = openai_client.chat.completions.create(
            model=MODEL,
            messages=[
                {"role": "user", "content": f"Extract the book title, description, author names, and publisher, and suggest a genre, from the following text: {text} and generate JSON output."},
            ],
            temperature=0,
        )
        details = response.choices[0].message.content
        return details
    except Exception as e:
        return f"Error in extracting book details: {str(e)}"

# Function to handle the combined pipeline
def combined_pipeline(image):
    try:
        # Step 1: Crop the detected object using Roboflow
        cropped_image = crop_detected_object(image)
        if isinstance(cropped_image, str):  # Check if the result is an error message
            return cropped_image

        # Step 2: Run Florence-2 inference on the cropped image
        ocr_result = run_inference(cropped_image)
        if isinstance(ocr_result, str):  # Check if the result is an error message
            return ocr_result
        extracted_text = ocr_result["<OCR>"]  # The parsed result is keyed by the task name

        # Step 3: Extract book details from the text using GPT-4o
        details = extract_details(extracted_text)
        return details
    except Exception as e:
        return f"Error in combined_pipeline: {str(e)}"

# Create the Gradio interface
interface = gr.Interface(
    fn=combined_pipeline,
    inputs=gr.Image(type="pil", mirror_webcam=False),
    outputs=gr.Textbox(),
    title="Object Detection and Image Inference with Book Details Extraction",
    description="Upload an image to detect objects using Roboflow, crop the detected object, run Florence-2 inference, and extract book details using GPT-4o."
)

# Launch the Gradio app
interface.launch(share=True)

The following is the output when you run the code.
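The application only displays the extracted details. To move toward the inventory integration described earlier, the details could be appended to a simple store. The sketch below uses a CSV file purely as a stand-in for a real library database. The `append_to_inventory` helper, the column set, and the default file name are all hypothetical, and the function assumes the model reply has already been parsed into a Python dictionary.

```python
import csv
import os

COLUMNS = ["title", "authors", "publisher", "genre"]  # hypothetical column set


def append_to_inventory(details, csv_path="inventory.csv"):
    """Append one book record (a dict) as a row, writing the header on first use."""
    is_new = not os.path.exists(csv_path) or os.path.getsize(csv_path) == 0
    row = {col: details.get(col) or "" for col in COLUMNS}
    # Flatten a list of authors into a single cell
    if isinstance(row["authors"], (list, tuple)):
        row["authors"] = "; ".join(row["authors"])
    with open(csv_path, "a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=COLUMNS)
        if is_new:
            writer.writeheader()
        writer.writerow(row)
    return row
```

For example, `append_to_inventory({"title": "Computer Vision: Algorithms and Applications", "authors": ["Richard Szeliski"]})` would add one row and leave the publisher and genre cells empty.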

Conclusion

In this blog post, we explored how to build an automated book inventory system using computer vision and large language models (LLMs). We demonstrated how to detect and crop a book cover from an image using Roboflow, how to read the text on the cover with Florence-2, and how to identify relevant information about the book in that text with GPT-4o.

This system reduces the need for manual data entry by automatically extracting book details from the text on covers and preparing the information to be recorded in a database. This makes it possible to process a large number of records in less time.

Cite this Post

Use the following entry to cite this post in your research:

Timothy M. (Jul 19, 2024). Automated Book Inventory using Computer Vision. Roboflow Blog: https://blog.roboflow.com/book-inventory-system/