Industrial robotics is changing fast. For decades, robots on factory floors followed rigid instructions: hard-coded movements, fixed waypoints, and carefully engineered assembly lines. But that era is giving way to something new: robots that can see, understand, and adapt using Vision AI.

At Almond, a robotics company focused on AI-powered manufacturing, that transformation is already here. “Around 90% of manufacturing robots leverage hard-coded movements,” explains Shawn Patel, Co-Founder & CTO of Almond. “With AI, our robots can handle complex tasks without needing to renovate your manufacturing line. The robot just drops in and starts working from day one.”

Why Vision AI Is the Missing Link in Robotics

Traditional industrial robots excel in structured environments such as automotive assembly lines, where every car door, bolt, and weld sits in precisely the same place. But most manufacturers, especially small and midsize ones, operate in unstructured settings: products change frequently, part bins vary, and lighting conditions shift throughout the day.

These are environments where human workers thrive and robots struggle. Vision AI changes that equation. By enabling robots to perceive their surroundings, detect parts, estimate position and rotation, and make data-driven decisions, it bridges the gap between automation and adaptability.

As Patel puts it, “We’re moving from pre-programmed, fixed waypoints to tasks where you don’t know where an item is until the task starts. You need AI to find items and adapt.”

Case Study: Teaching Robots to Sort Complex Parts

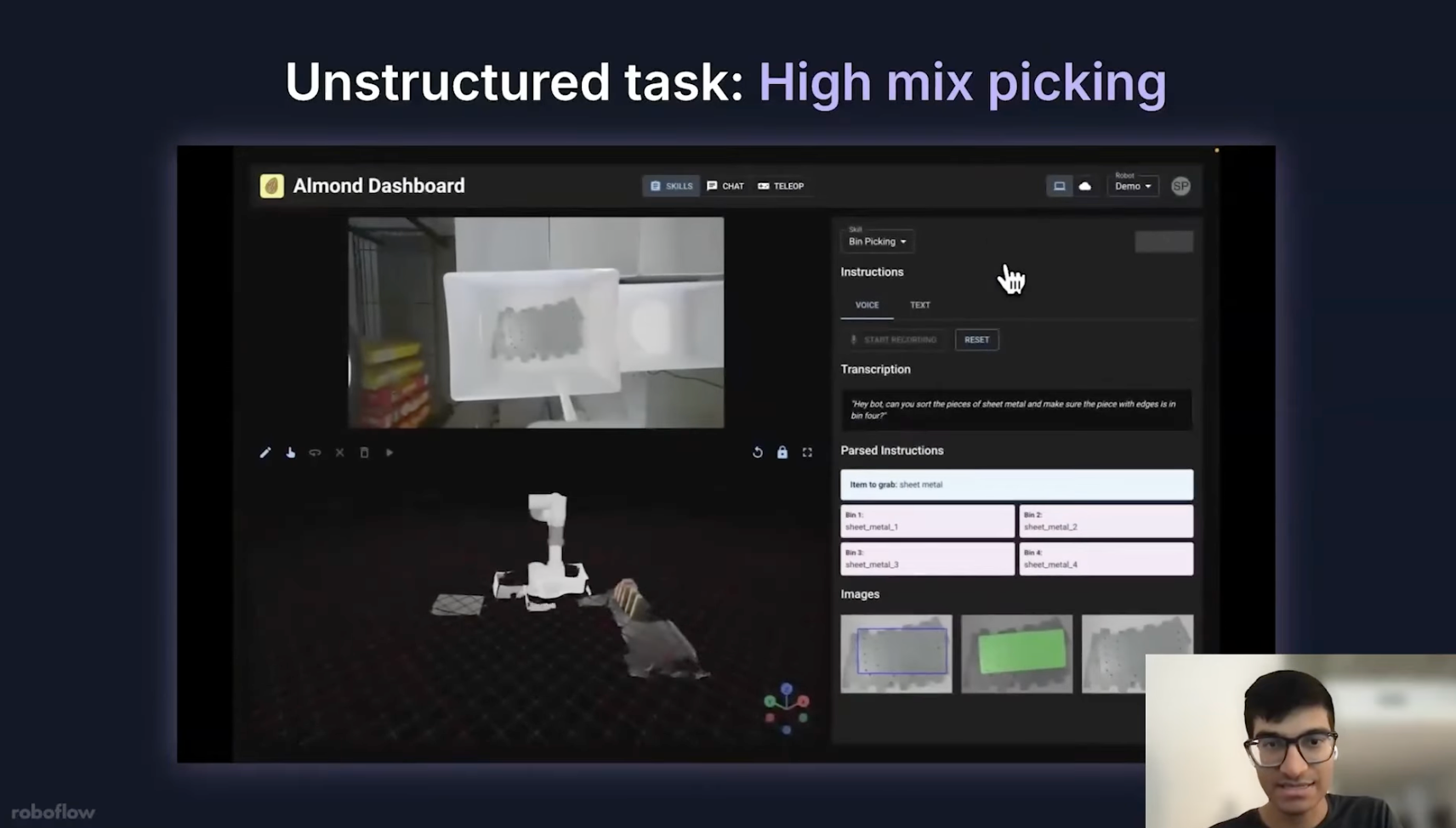

One of Almond’s most challenging projects was high-mix picking: teaching a robot to sort piles of sheet metal with different shapes, sizes, and orientations.

In their early tests, traditional automation failed completely. The robot could only perform if every part was perfectly aligned.

Then the team added vision. “You just speak into the interface how you want the pieces sorted,” Patel explained in a demo. “The robot identifies each piece, grabs it, knows which bin to put it in, and executes.”

The system interprets both text and visual context, transforming a vague command like “sort by shape” into a real-world sequence of movements. The result: a robot that handles variability as naturally as a human worker.
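
To make the last step concrete, here is a minimal sketch of how a sort criterion, once extracted from the spoken command, could be turned into bin assignments. It assumes the language and vision models have already produced a list of parts with attributes; the `assign_bins` function, the `id` and `shape` keys, and the first-seen bin ordering are hypothetical illustrations, not Almond's implementation.

```python
from typing import Dict, List, Tuple


def assign_bins(parts: List[Dict[str, str]], sort_key: str) -> List[Tuple[str, int]]:
    """Return (part_id, bin_index) pairs.

    Parts that share the same value for `sort_key` go to the same bin.
    Bins are numbered in the order their values are first seen.
    """
    bin_for_value: Dict[str, int] = {}
    plan: List[Tuple[str, int]] = []
    for part in parts:
        value = part[sort_key]
        # Reuse the bin for a known value, otherwise open the next free bin.
        bin_index = bin_for_value.setdefault(value, len(bin_for_value))
        plan.append((part["id"], bin_index))
    return plan


# Hypothetical detections for a "sort by shape" command.
detected_parts = [
    {"id": "p1", "shape": "L-bracket"},
    {"id": "p2", "shape": "plate"},
    {"id": "p3", "shape": "L-bracket"},
]
pick_plan = assign_bins(detected_parts, sort_key="shape")
```

Each pair in `pick_plan` would then be handed to the motion planner as one pick-and-place move.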

How to Build Factory Robots with Vision AI

Building vision-enabled robots no longer requires a PhD in computer vision. With modern tools like Roboflow, you can go from raw images to production-ready models in weeks, not months. Here’s how teams like Almond built theirs.

1. Collect and Label Real Factory Data

Every successful model starts with representative data. Capture images from your actual environment (conveyor belts, bins, inspection tables) and include real variations in lighting, reflections, and angles.

Almond learned this firsthand: “Lighting was huge,” Patel said. “With reflective metal, the model had to handle different orientations and glare conditions. We added those edge cases until the robot could handle anything we threw at it.”
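
One simple way to find missing edge cases is to tag each image with its capture conditions and count how many examples you have per combination. The sketch below assumes you keep such tags yourself; the `lighting` and `angle` keys, the tag values, and the `min_per_combo` threshold are hypothetical and not part of any Roboflow API.

```python
from collections import Counter
from itertools import product
from typing import Dict, List, Sequence, Tuple


def coverage_gaps(
    records: List[Dict[str, str]],
    lighting_values: Sequence[str],
    angle_values: Sequence[str],
    min_per_combo: int = 20,
) -> Dict[Tuple[str, str], int]:
    """Return every (lighting, angle) combination with too few images.

    The value is the number of images currently available for that combination.
    """
    counts = Counter((r["lighting"], r["angle"]) for r in records)
    return {
        combo: counts[combo]
        for combo in product(lighting_values, angle_values)
        if counts[combo] < min_per_combo
    }


# Hypothetical capture log: three glare shots and two diffuse shots, all flat.
capture_log = (
    [{"lighting": "glare", "angle": "flat"}] * 3
    + [{"lighting": "diffuse", "angle": "flat"}] * 2
)
gaps = coverage_gaps(capture_log, ["glare", "diffuse"], ["flat", "tilted"], min_per_combo=3)
```

Combinations listed in `gaps` are the conditions worth photographing next.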

Using Roboflow Annotate, teams can label thousands of images quickly with AI-assisted tools that automatically suggest bounding boxes or masks. It’s faster, more consistent, and ideal for iterative training.

2. Train a Vision Model That Understands Your Environment

Start small. Almond began with only 50 labeled images and trained multiple models inside Roboflow Train. When they compared them, RF-DETR, Roboflow’s transformer-based object detector, significantly outperformed alternatives like YOLO.

“We checked every model available, and RF-DETR performed the best by a lot,” Patel recalled.

The model achieved 81% accuracy, compared to YOLO’s 67%, even in complex metal-part environments.
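
Detector accuracy can be measured in several ways, and the figures above do not specify which one was used. As one illustration of how two detectors might be compared on the same held-out images, the sketch below counts a prediction as correct when it overlaps an unmatched ground-truth box of the same class with IoU of at least 0.5. The function names, the `(x1, y1, x2, y2)` box format, and the 0.5 threshold are assumptions, not Almond's evaluation code.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels
Detection = Tuple[str, Box]              # (class label, box)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(
    preds: List[Detection],
    truths: List[Detection],
    iou_threshold: float = 0.5,
) -> Tuple[float, float]:
    """Greedy one-to-one matching of predictions to ground truth for one image."""
    unmatched = list(truths)
    true_positives = 0
    for label, box in preds:
        best_index, best_iou = -1, iou_threshold
        for i, (t_label, t_box) in enumerate(unmatched):
            if t_label != label:
                continue
            score = iou(box, t_box)
            if score >= best_iou:
                best_index, best_iou = i, score
        if best_index >= 0:
            # Each ground-truth box can be claimed by only one prediction.
            unmatched.pop(best_index)
            true_positives += 1
    precision = true_positives / len(preds) if preds else 0.0
    recall = true_positives / len(truths) if truths else 0.0
    return precision, recall
```

Running the same function over each candidate model's predictions on a fixed validation set gives numbers that can be compared side by side.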

From there, the team scaled up to 2,000 images using AI-assisted labeling and retraining, which shows that small, high-quality datasets can evolve into robust production models through iteration.

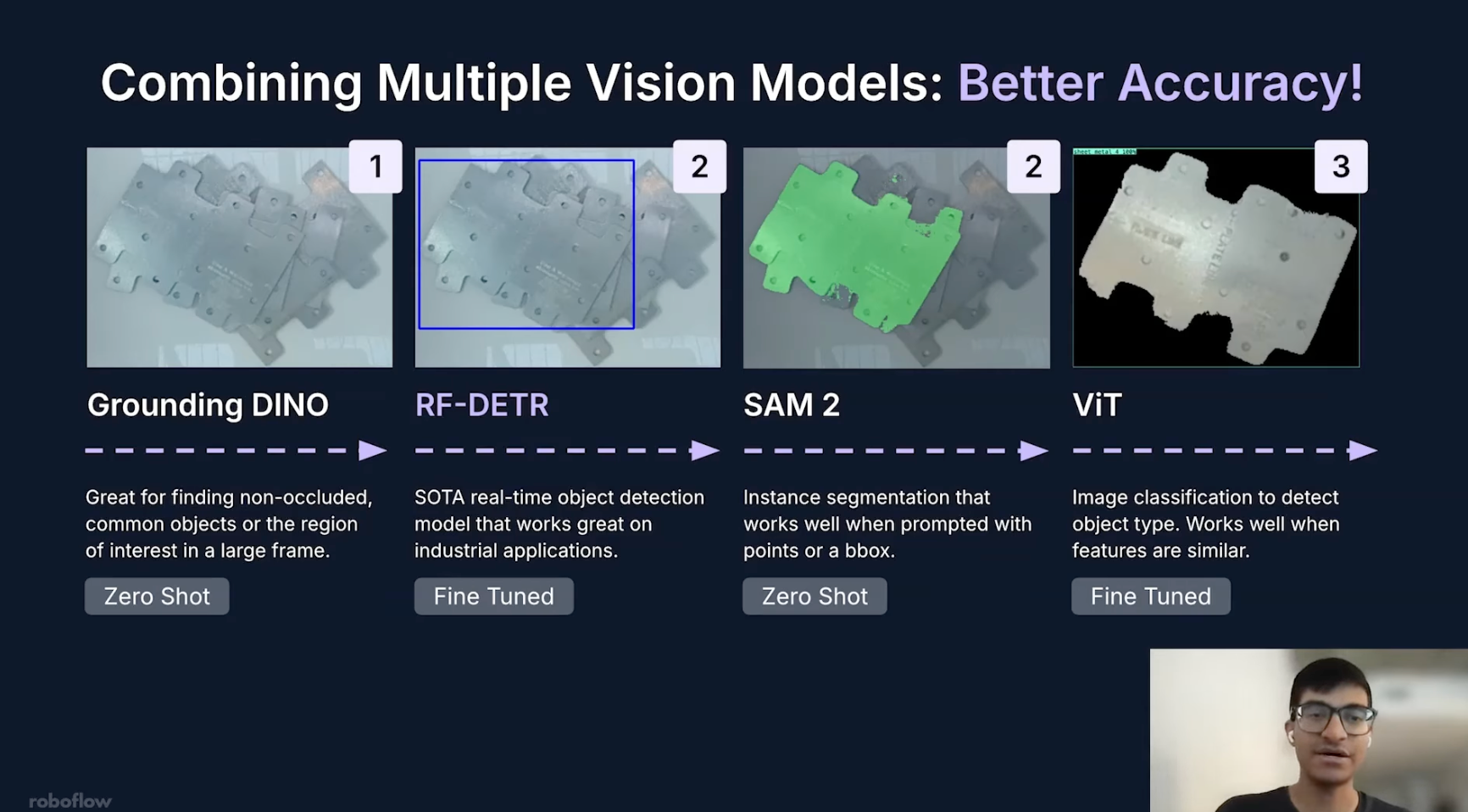

3. Combine Models for Greater Precision

Complex robotic tasks often require more than one vision model. Almond’s multi-stage pipeline combined:

- Grounding DINO to find regions of interest and eliminate background noise.

- RF-DETR to detect which sheet sat on top of a stack.

- SAM 2 for segmentation masks so the robot could align its gripper angle.

- Vision Transformer (ViT) to classify each part before sorting.

Each model performed a specialized role, and together they produced human-level spatial understanding, even for overlapping, irregular shapes.

“For humans, figuring out which item is on top is simple. For a robot, we needed several different vision models working together,” said Patel.
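
The four stages above can be sketched as a chain of functions, each consuming the previous stage's output. In this sketch the stages are passed in as plain callables (`find_roi`, `detect_top`, `segment`, `classify`), which are hypothetical stand-ins: no real Grounding DINO, RF-DETR, SAM 2, or ViT API is called. Deriving the gripper angle from the mask's principal axis is also an assumption; the post says only that the masks were used to align the gripper.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

import numpy as np

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels


@dataclass
class PickPlan:
    label: str
    box: Box                  # top part's box in full-image coordinates
    gripper_angle_deg: float  # long-axis angle in image coordinates


def mask_angle_degrees(mask: np.ndarray) -> float:
    """Angle of a binary mask's long axis, in degrees in [0, 180).

    Measured from the image x-axis toward the image y-axis (y points down).
    """
    ys, xs = np.nonzero(mask)
    if xs.size < 2:
        raise ValueError("mask needs at least two foreground pixels")
    cov = np.cov(np.stack([xs, ys]).astype(float))
    _, eigvecs = np.linalg.eigh(cov)  # eigenvalues are returned in ascending order
    vx, vy = eigvecs[:, -1]           # direction of largest variance
    angle = float(np.degrees(np.arctan2(vy, vx)) % 180.0)
    return 0.0 if angle >= 180.0 else angle


def plan_pick(
    image: np.ndarray,
    find_roi: Callable[[np.ndarray], Box],           # stage 1, e.g. Grounding DINO
    detect_top: Callable[[np.ndarray], Box],         # stage 2, e.g. RF-DETR
    segment: Callable[[np.ndarray, Box], np.ndarray],  # stage 3, e.g. SAM 2
    classify: Callable[[np.ndarray], str],           # stage 4, e.g. ViT
) -> PickPlan:
    rx1, ry1, rx2, ry2 = find_roi(image)
    roi = image[ry1:ry2, rx1:rx2]              # drop background outside the region
    bx1, by1, bx2, by2 = detect_top(roi)       # box is relative to the ROI crop
    mask = segment(roi, (bx1, by1, bx2, by2))  # binary mask with the ROI's height and width
    label = classify(roi[by1:by2, bx1:bx2])
    full_box = (rx1 + bx1, ry1 + by1, rx1 + bx2, ry1 + by2)
    return PickPlan(label, full_box, mask_angle_degrees(mask))
```

Keeping each stage behind a plain function boundary makes it easy to swap one model for another, or to move a heavy stage to a remote server, without touching the rest of the chain.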

4. Deploy at the Edge and in the Cloud

When it comes to deployment, latency and flexibility matter. Almond runs lighter models locally on NVIDIA Jetson devices for instant feedback, while heavier, multi-model pipelines run on Roboflow Inference with hosted GPUs.

This hybrid setup lets manufacturers choose what’s best for each station, whether it’s fast, offline operation or large-scale processing in the cloud.
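
One way to encode that per-station choice is a small routing rule. The sketch below is hypothetical: the `Station` fields, the 150 ms cloud round-trip figure, and the one-model edge limit are placeholder assumptions to be replaced with your own measurements, not numbers from Almond or Roboflow.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Station:
    name: str
    latency_budget_ms: float  # how long the robot can wait for a prediction
    has_network: bool         # whether the station can reach hosted GPUs
    num_models: int           # how many models the station's pipeline chains


def choose_target(
    station: Station,
    cloud_round_trip_ms: float = 150.0,  # assumed value, measure your own
    max_edge_models: int = 1,            # assumed capacity of the edge device
) -> str:
    """Return "edge" or "cloud" for a station."""
    if not station.has_network:
        return "edge"   # offline stations have no other option
    if station.latency_budget_ms < cloud_round_trip_ms:
        return "edge"   # the network round trip alone would blow the budget
    if station.num_models > max_edge_models:
        return "cloud"  # heavy multi-model pipelines go to hosted GPUs
    return "edge"
```

For example, a machine-tending station with a 50 ms budget would stay on the edge device, while a sorting station that chains four models and tolerates 500 ms would be routed to the cloud.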

5. Iterate Until It’s Production-Grade

Accuracy in robotics isn’t a vanity metric: it’s survival. For tasks such as machine tending or precision sorting, every mis-grasp can mean a scrapped part or damaged tool.

Patel explained: “When you’re putting a piece of metal into a machine, a human can do that with near 100% accuracy. The robot has to match that. Otherwise, you’re not delivering the right value to the customer.”

Almond’s models reached that level through incremental retraining (from 50 to 200 to 2,000 images), refining each edge case until their robots matched human reliability.
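
A common way to decide which images to label next is to pull the ones the current model is least sure about. The sketch below assumes you have per-image detection confidences from the deployed model; the `select_for_labeling` function, the 0.6 threshold, and the labeling budget are hypothetical, and the post does not say how Almond chose its edge cases.

```python
from typing import Dict, List


def select_for_labeling(
    confidences: Dict[str, List[float]],
    threshold: float = 0.6,
    budget: int = 50,
) -> List[str]:
    """Pick up to `budget` images to label, least confident first.

    An image qualifies if its weakest detection is below `threshold`.
    Images with no detections at all are treated as confidence 0.0.
    """
    scored = []
    for image_id, scores in confidences.items():
        weakest = min(scores) if scores else 0.0
        if weakest < threshold:
            scored.append((weakest, image_id))
    scored.sort()
    return [image_id for _, image_id in scored[:budget]]


# Hypothetical confidences from one shift of production images.
shift_results = {
    "a.jpg": [0.95, 0.90],
    "b.jpg": [0.40, 0.99],
    "c.jpg": [],
    "d.jpg": [0.55],
}
to_label = select_for_labeling(shift_results, threshold=0.6, budget=2)
```

Labeling those images, retraining, and repeating the selection is one way to run the 50 to 200 to 2,000 loop described above.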

The Impact: Agile, Adaptive, and Affordable Robotics

By combining off-the-shelf robot arms with Roboflow-trained models, Almond built automation that any manufacturer can deploy. No costly reprogramming. No months-long setup. Just smart robots that learn from data and start working immediately.

Their approach reduces downtime, handles SKU variation automatically, and turns visual complexity into a competitive advantage. Robots that once required seven-figure engineering budgets now deliver ROI within weeks.

The Future of Vision-Powered Robotics

Vision AI is turning factory robots into collaborators. The next wave of systems will combine vision, language, and foundation models, allowing teams to simply talk to robots, describe tasks, and watch them adapt in real time.

As Patel described in his demo, “Instead of having to manually program it, you just talk to it.”

This convergence of perception and natural language will define the next decade of automation: one where machines see the world, understand context, and respond intelligently.

Start building your first robotic vision pipeline from annotation to edge inference using Roboflow’s hosted training and deployment platform.

Cite this Post

Use the following entry to cite this post in your research:

Contributing Writer. (Nov 4, 2025). How to Build Factory Robots with Vision AI. Roboflow Blog: https://blog.roboflow.com/build-robots-vision-ai/