Computer vision is improving life sciences. From the early identification of cancer to improving plant health, machine vision is enabling us to create more accurate diagnoses, cures, and research methods.

Mateo Sokac is advancing cancer research with object detection. Mateo is a Denmark-based researcher at Aarhus University who concurrently works at the university hospital (Aarhus Universitetshospital). His work builds on his undergraduate studies at the Rochester Institute of Technology, a leader in image recognition techniques.

Identifying Neutrophils with Computer Vision

Neutrophils are a type of white blood cell that plays a key role in animal immune systems. In humans, neutrophils help heal injuries and fight infections. Identifying and measuring their presence in microscopy is an essential step in assessing the efficacy of a given experiment.

Mateo notes there are two techniques his labs leverage to identify neutrophils: immersion and dry microscopy. Immersion is generally slower, more expensive, and more precise. On the other hand, dry microscopy is faster, cheaper, and of lower quality.

Mateo's team is currently running experiments to identify which areas of a cell population are enriched in animal populations. In time, these same techniques will be applied to humans to advance cancer research.

To increase his lab's throughput, Mateo sought to train a model that identifies neutrophils in dry microscopy as accurately as one can in immersion. Mateo trained a model on images labeled from immersion pictures yet validated it on images from dry microscopy alone. Mateo initially had 127 images, augmented with Roboflow to 451, and saw a mean average precision of 0.70, a 10 percent increase compared to his un-augmented results.

Mateo tried training multiple architectures via the Roboflow Model Library, including YOLOv5, EfficientDet, MobileNetSSD, and more.

Using Image Tiling to Improve Performance

Because the input images are large (27000 x 27000 pixels), Mateo's team leverages tiling to improve model performance. Tiling is the practice of breaking a large input image into smaller input images so that a model may train and perform inference on higher pixel density images. For example, if an input image is 1600 x 1600 yet a model can only handle, say, 800 x 800 input images, breaking the image into four pieces lets each piece retain its original image quality rather than being downscaled. (Roboflow Pro supports easy tiling as a preprocessing step.)
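To make the 1600 x 1600 example concrete, here is a minimal sketch of how tile boundaries could be computed. It is an illustration only, not Mateo's pipeline or Roboflow's tiling implementation; the function name `tile_boxes` and the `(left, top, right, bottom)` box layout are assumptions made for this example.

```python
from typing import Iterator, Tuple

# (left, top, right, bottom) in pixels; a hypothetical layout chosen for this sketch.
Box = Tuple[int, int, int, int]


def tile_boxes(width: int, height: int, tile: int) -> Iterator[Box]:
    """Yield crop boxes that cover the image row by row.

    Tiles on the right and bottom edges are clipped to the image bounds,
    so they may be smaller than `tile` when the size does not divide evenly.
    """
    for top in range(0, height, tile):
        for left in range(0, width, tile):
            yield (left, top, min(left + tile, width), min(top + tile, height))


# The example from the text: a 1600 x 1600 image split for an 800 x 800 model input.
boxes = list(tile_boxes(1600, 1600, 800))
print(len(boxes))  # 4
print(boxes)
```

Each box can then be handed to an image library's crop function to produce the actual tile. Keeping each tile's `(left, top)` offset is what makes it possible to place predictions back on the full image later.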

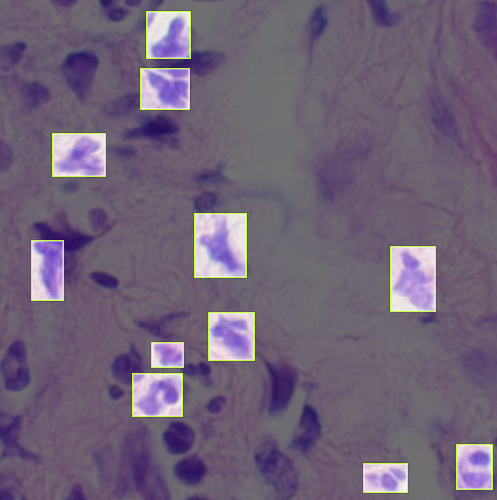

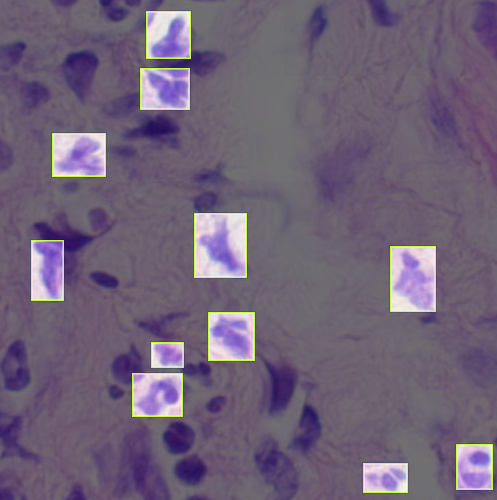

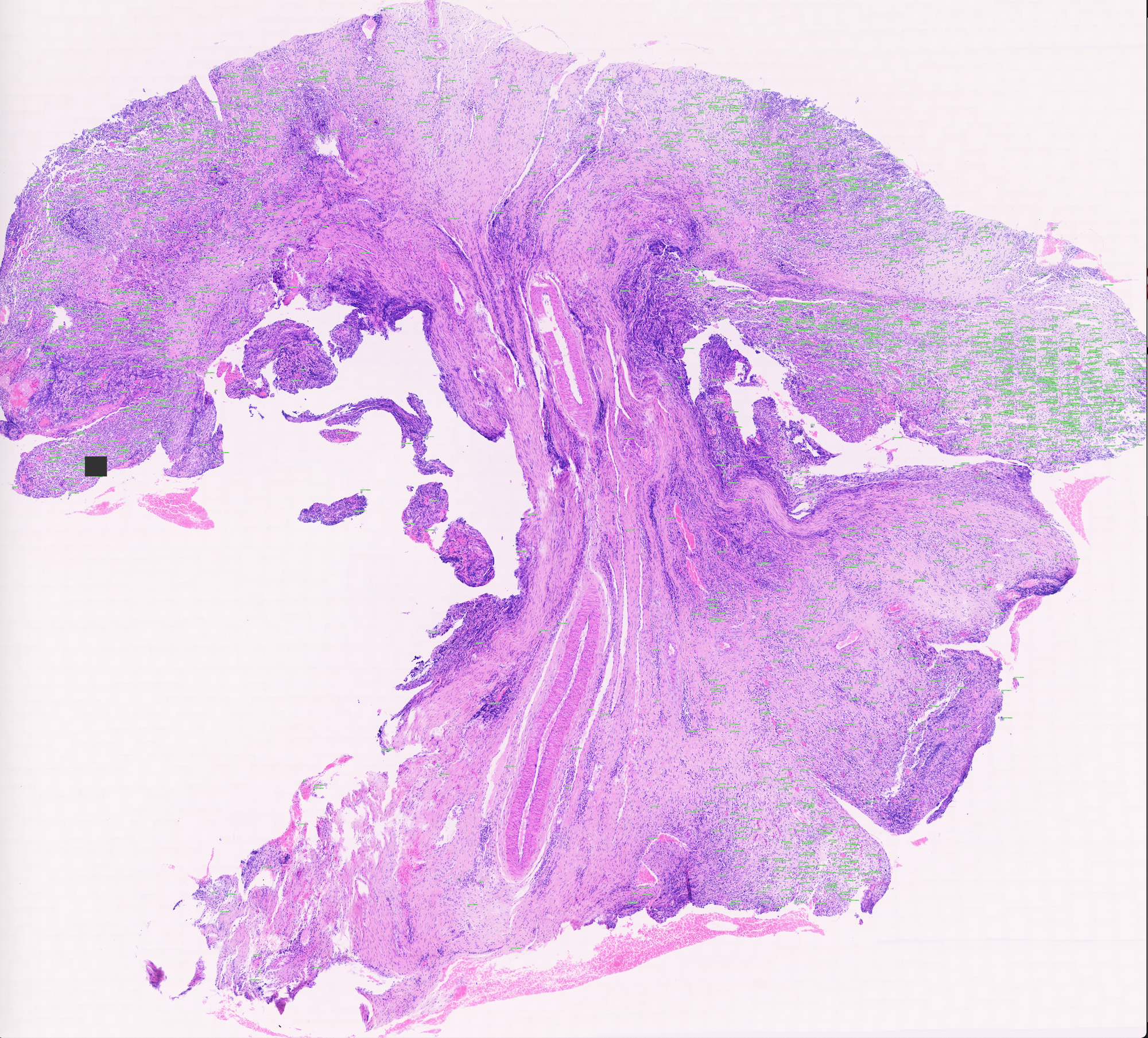

In Mateo's case, an individually labeled example appeared like the following:

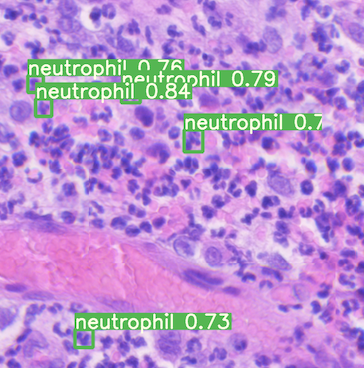

After an image is tiled, each tile is run through the model to count neutrophils, and the team then reassembles the individual tiles to create a full image output.

A prediction for one of these individual tiles appears like the following:

Resulting Images

Once inference has been run on each individual tile, the tiles are reassembled into their 27000 x 27000 pixel final form. The results enable Mateo's team to move more quickly and accurately in determining experiment efficacy.
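One way to picture the reassembly step is to shift each tile's predicted boxes by that tile's position in the full image. The sketch below assumes detections arrive as tile-local `(x_min, y_min, x_max, y_max)` boxes keyed by the tile's top-left corner; the function name, the data layout, and the sample numbers are all hypothetical and are not taken from Mateo's code. It also ignores cells that straddle a tile boundary, which a real pipeline would need to handle.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)
Origin = Tuple[int, int]                 # (left, top) of a tile within the full image


def merge_tile_detections(per_tile: Dict[Origin, List[Box]]) -> List[Box]:
    """Shift every tile-local box by its tile's origin into full-image coordinates."""
    merged: List[Box] = []
    for (left, top), tile_detections in per_tile.items():
        for x0, y0, x1, y1 in tile_detections:
            merged.append((x0 + left, y0 + top, x1 + left, y1 + top))
    return merged


# Made-up detections for two 800 x 800 tiles of a 1600 x 1600 image.
per_tile = {
    (0, 0): [(10, 20, 50, 60)],
    (800, 800): [(5, 5, 40, 45), (100, 120, 140, 160)],
}
full_image_boxes = merge_tile_detections(per_tile)
print(len(full_image_boxes))  # total detections across all tiles
```

The length of the merged list gives a simple per-image count, and the shifted boxes can be drawn directly onto the full-resolution image.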

The output images are truly stunning:

Upon seeing the full-size image, it is clear why tiling is required to capture the fine detail Mateo's team needs to achieve accurate results.

Interested in advancing computer vision in your lab? Curious how Pro features like tiling can improve your results? Contact Roboflow.

Cite this Post

Use the following entry to cite this post in your research:

Joseph Nelson. (Oct 4, 2020). Improving Cancer Research with Computer Vision. Roboflow Blog: https://blog.roboflow.com/cancer-research-computer-vision/