Zero-shot computer vision models, which can return predictions for a set of classes without being trained on data specific to your use case, have many applications in computer vision pipelines. You can use zero-shot models to decide whether an image collection pipeline should send an image to a datastore, to annotate data for common classes, to track objects, and more.

To tell a zero-shot model what you'd like it to identify, you feed it a prompt or set of prompts. A “prompt” is a text instruction that the model encodes and uses to determine what it returns.

But choosing the right prompt (the instruction that describes the object to identify) for a task is difficult. One approach is to guess which prompt may work best given the contents of your dataset. This may work for models that identify common objects, but it is less appropriate if you are building a model that should identify more nuanced features in image data.

That's where CVevals comes in: our tool for finding a statistically strong prompt to use with zero-shot models. You can use this tool to find the best prompt to:

- Use Grounding DINO for automated object detection;

- Automatically classify some data in your dataset, and;

- Classify an image to determine whether it should be processed further (useful in scenarios where you have limited compute or storage resources available on a device and want to prioritize what image data you save).

In this post, we’re going to show how to compare prompts for Grounding DINO, a zero-shot object detection model. We’ll decide on five prompts for a basketball player detection dataset and use CVevals, a computer vision model evaluation package maintained by Roboflow, to determine the prompt that will identify basketball players the best.

By the end of this post, we will know what prompt will best label our data automatically with Grounding DINO.

Without further ado, let’s get started!

Step 1: Install CVevals

Before we can evaluate prompts, we need to install CVevals. CVevals is available as a repository on GitHub that you can install on your local machine.

To install CVevals, execute the following lines of code in your terminal:

git clone https://github.com/roboflow/evaluations.git

cd evaluations

pip install -r requirements.txt

pip install -e .

Since we’ll be working with Grounding DINO in this post, we’ll need to install the model. We have a helper script that installs and configures Grounding DINO in the cvevals repository. Run the following commands in the root cvevals folder to install the model:

chmod +x ./dinosetup.sh

./dinosetup.sh

Step 2: Configure an Evaluator

The CVevals repository comes with a range of examples for evaluating computer vision models. Out of the box, we have examples for comparing prompts for CLIP and Grounding DINO. You can also customize our CLIP comparison to work with BLIP, ALBEF, and BLIPv2.

For this tutorial, we’ll focus on Grounding DINO, a zero-shot object detection model, since our task pertains to object detection. To learn more about Grounding DINO, check out our deep dive into Grounding DINO on YouTube.

Open up the examples/dino_compare_example.py file and scroll down to the section where a variable called `evals` is defined. The variable looks like this by default:

evals = [

{"classes": [{"ground_truth": "", "inference": ""}], "confidence": 0.5}

]

Ground truth should be exactly equal to the name of the label in our dataset. Inference is the name of the prompt we want to run on Grounding DINO.

Note that you can only evaluate prompts for one class at a time using this script.

We’re going to experiment with five prompts:

- player

- a sports player

- referee

- basketball player

- person

For each prompt, we need to set a confidence level that must be met in order for a prediction to be processed. For this example, we’ll set a value of 0.5. We can adjust this parameter later to see how raising or lowering the confidence level affects the predictions returned by Grounding DINO.
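If you want to try several prompts at several confidence levels, writing each entry by hand gets tedious. Here is a minimal sketch that generates entries in the same shape as the `evals` list. The `build_evals` helper is our own illustration, not part of CVevals, and we are assuming the script accepts the same prompt more than once at different confidence levels.

```python
from itertools import product


def build_evals(ground_truth, prompts, confidences):
    """Return one evals entry per (prompt, confidence) pair.

    Hypothetical helper: it only mirrors the dictionary layout of the
    default `evals` variable in the example script.
    """
    return [
        {
            "classes": [{"ground_truth": ground_truth, "inference": prompt}],
            "confidence": confidence,
        }
        for prompt, confidence in product(prompts, confidences)
    ]


# Two prompts at two confidence levels gives four entries.
evals = build_evals("Player", ["player", "a sports player"], [0.3, 0.5])

for entry in evals:
    print(entry)
```

Each entry still maps one ground truth label to one prompt, in keeping with the one-class-at-a-time limit of the script.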

Let’s replace the default evals with the ones we specified above:

evals = [

{"classes": [{"ground_truth": "Player", "inference": "player"}], "confidence": 0.5},

{"classes": [{"ground_truth": "Player", "inference": "a sports player"}], "confidence": 0.5},

{"classes": [{"ground_truth": "Player", "inference": "referee"}], "confidence": 0.5},

{"classes": [{"ground_truth": "Player", "inference": "basketball player"}], "confidence": 0.5},

{"classes": [{"ground_truth": "Player", "inference": "person"}], "confidence": 0.5},

]

For reference, here is an example image in our validation dataset:

Step 3: Run the Comparison

To run a comparison, we need to retrieve the following pieces of information from our Roboflow account:

- Our Roboflow API key;

- The model ID associated with our model;

- The workspace ID associated with our model, and;

- Our model version number.

We have documented how to retrieve these values in our API documentation.

With the values listed above ready, we can start a comparison. To do so, we can run the Grounding DINO prompt comparison example that we edited in the last section with the following arguments:

python3 examples/dino_compare_example.py --eval_data_path=<path_to_eval_data> \

--roboflow_workspace_url=<workspace_url> \

--roboflow_project_url=<project_url> \

--roboflow_model_version=<model_version> \

--config_path=<path_to_dino_config_file> \

--weights_path=<path_to_dino_weights_file>

The config_path value should be GroundingDINO/groundingdino/config/GroundingDINO_SwinT_OGC.py and the weights_path value should be GroundingDINO/weights/groundingdino_swint_ogc.pth, assuming you are running the script from the root cvevals folder and have installed Grounding DINO using the helper script above.
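Since the script depends on the config and weights files being in place, it can help to check for them before launching a long evaluation. Here is a minimal sketch that assembles the arguments listed above and reports any missing files. The `build_command` and `missing_files` helpers are our own illustration, not part of CVevals, and the placeholder values need to be replaced with your own.

```python
import sys
from pathlib import Path


def build_command(eval_data_path, workspace_url, project_url, model_version,
                  config_path, weights_path,
                  script="examples/dino_compare_example.py"):
    """Assemble the argument list for the comparison script."""
    return [
        sys.executable,
        script,
        f"--eval_data_path={eval_data_path}",
        f"--roboflow_workspace_url={workspace_url}",
        f"--roboflow_project_url={project_url}",
        f"--roboflow_model_version={model_version}",
        f"--config_path={config_path}",
        f"--weights_path={weights_path}",
    ]


def missing_files(*paths):
    """Return the paths that do not point at an existing file."""
    return [str(path) for path in paths if not Path(path).is_file()]


config = "GroundingDINO/groundingdino/config/GroundingDINO_SwinT_OGC.py"
weights = "GroundingDINO/weights/groundingdino_swint_ogc.pth"
command = build_command(
    "<path_to_eval_data>", "<workspace_url>", "<project_url>",
    "<model_version>", config, weights,
)

missing = missing_files(config, weights)
if missing:
    print("Missing Grounding DINO files:", ", ".join(missing))
else:
    print("Ready to run:", " ".join(command))
    # subprocess.run(command, check=True) would launch the comparison.
```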

Here's an example completed command:

python3 examples/dino_compare_example.py \

--eval_data_path=/Users/james/src/webflow-scripts/evaluations/basketball-players \

--roboflow_workspace_url=roboflow-universe-projects \

--roboflow_project_url=basketball-players-fy4c2 \

--roboflow_model_version=16 \

--config_path=GroundingDINO/groundingdino/config/GroundingDINO_SwinT_OGC.py \

--weights_path=GroundingDINO/weights/groundingdino_swint_ogc.pth

In this example, we’re using the Basketball Players dataset on Roboflow Universe. You can evaluate any dataset on Roboflow Universe or any dataset associated with your Roboflow account.

Now we can run the command above. If you have not already logged in to your Roboflow account using our Python package, you will be asked to log in the first time you run the command. The script will guide you through authenticating.

Once authenticated, this command will:

- Download your dataset from Roboflow;

- Run inference on each image in your validation dataset for each prompt you specified;

- Compare the ground truth from your Roboflow dataset with the Grounding DINO predictions, and;

- Return statistics about the performance of each prompt, and identify the best prompt, measured by the highest F1 score.
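To give a feel for the comparison step, here is a minimal sketch of one common way to compare predicted boxes with ground truth: greedy matching on intersection over union (IoU). This post does not describe how CVevals matches boxes internally, so the matching strategy, the 0.5 threshold, and the function names are all our assumptions.

```python
def iou(a, b):
    """Intersection over union for boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def count_matches(ground_truth, predictions, iou_threshold=0.5):
    """Greedily match predictions to ground truth boxes.

    Returns (true positives, false positives, false negatives).
    Assumed strategy for illustration; CVevals may differ.
    """
    unmatched = list(ground_truth)
    tp = fp = 0
    for pred in predictions:
        # Pick the remaining ground truth box that overlaps this prediction most.
        best = max(unmatched, key=lambda gt: iou(pred, gt), default=None)
        if best is not None and iou(pred, best) >= iou_threshold:
            unmatched.remove(best)
            tp += 1
        else:
            fp += 1
    return tp, fp, len(unmatched)


# Made-up boxes for illustration only.
ground_truth = [(0, 0, 10, 10), (20, 20, 30, 30)]
predictions = [(1, 1, 10, 10), (50, 50, 60, 60)]
print(count_matches(ground_truth, predictions))
```

With these made-up boxes, one prediction overlaps a labeled player, one matches nothing, and one labeled player goes undetected.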

The length of time it takes for the Grounding DINO evaluation process to complete will depend on the number of images in your validation dataset, the number of prompts you are evaluating, whether you have a CUDA-enabled GPU available, and the specifications of your computer.

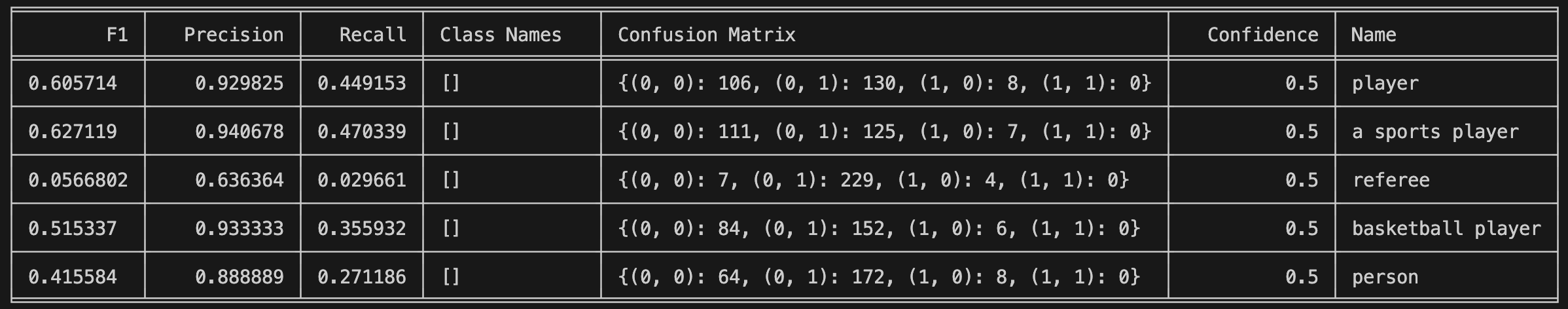

After some time, you will see a results table appear. Here are the results from our model:

With this information, we can see that "a sports player" is the best prompt out of the ones we tested, as measured by F1 score. They both perform equally. We could use this prompt to automatically label some data in our dataset, knowing that it performs well for our use case.
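As a reminder, the F1 score is the harmonic mean of precision and recall. Here is a minimal sketch of how it is computed from true positive, false positive, and false negative counts, and how a best prompt could be picked from it. The prompt names and counts below are made up for illustration and are not results from this evaluation.

```python
def f1_score(tp, fp, fn):
    """Compute F1 from true positive, false positive, and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts per prompt: (tp, fp, fn). Not real results.
counts = {"prompt a": (8, 2, 2), "prompt b": (5, 5, 1)}

# The best prompt is the one with the highest F1 score.
best = max(counts, key=lambda prompt: f1_score(*counts[prompt]))

for prompt, (tp, fp, fn) in counts.items():
    print(f"{prompt}: F1 = {f1_score(tp, fp, fn):.3f}")
print("Best prompt:", best)
```

Because F1 balances precision and recall, a prompt that finds every player but also returns many false detections will not necessarily come out on top.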

If your evaluation returns poor results across prompts, this may indicate:

- The object your prompt describes is too specific for Grounding DINO to identify (a key limitation of zero-shot object detectors), or;

- You need to experiment with different prompts.

We now know we can run Grounding DINO with the "a sports player" prompt to automatically label some of our data. After doing so, we should review our annotations in an interactive annotation tool like Roboflow Annotate to ensure our annotations are accurate and to make any required changes.

You can learn more about how to annotate with Grounding DINO in our Grounding DINO guide.

Conclusion

In this guide, we have demonstrated how to compare zero-shot model prompts with the CVevals Python utility. We compared five Grounding DINO prompts on a basketball dataset to find the one that resulted in the highest F1 score.

CVevals has out-of-the-box support for evaluating CLIP and Grounding DINO prompts, and you can use existing code with some modification to evaluate BLIP, ALBEF, and BLIPv2 prompts. See the examples folder in CVevals for more example code.

Now you have the tools you need to find the best zero-shot prompt for your use case.

We have also written a guide showing how to evaluate the performance of custom Roboflow models using CVevals. We recommend reviewing it if you are interested in diving deeper into model evaluation.