The restaurant industry is filled with inefficiencies. Orders get mixed up, food arrives cold, lines stretch out the door, and hosts turn guests away despite open tables sitting just out of sight. Behind the scenes, the pressure is even greater: short-staffed teams juggle chaotic kitchen workflows, malfunctioning equipment, inconsistent food quality, and poorly managed inventory. These issues don’t just frustrate customers and employees; they cost restaurants revenue. Missed opportunities to seat guests, misfires in order accuracy, and unaddressed bottlenecks all eat into the bottom line. Today, with competition growing fiercer, operational efficiency isn’t just a bonus; it’s a necessity.

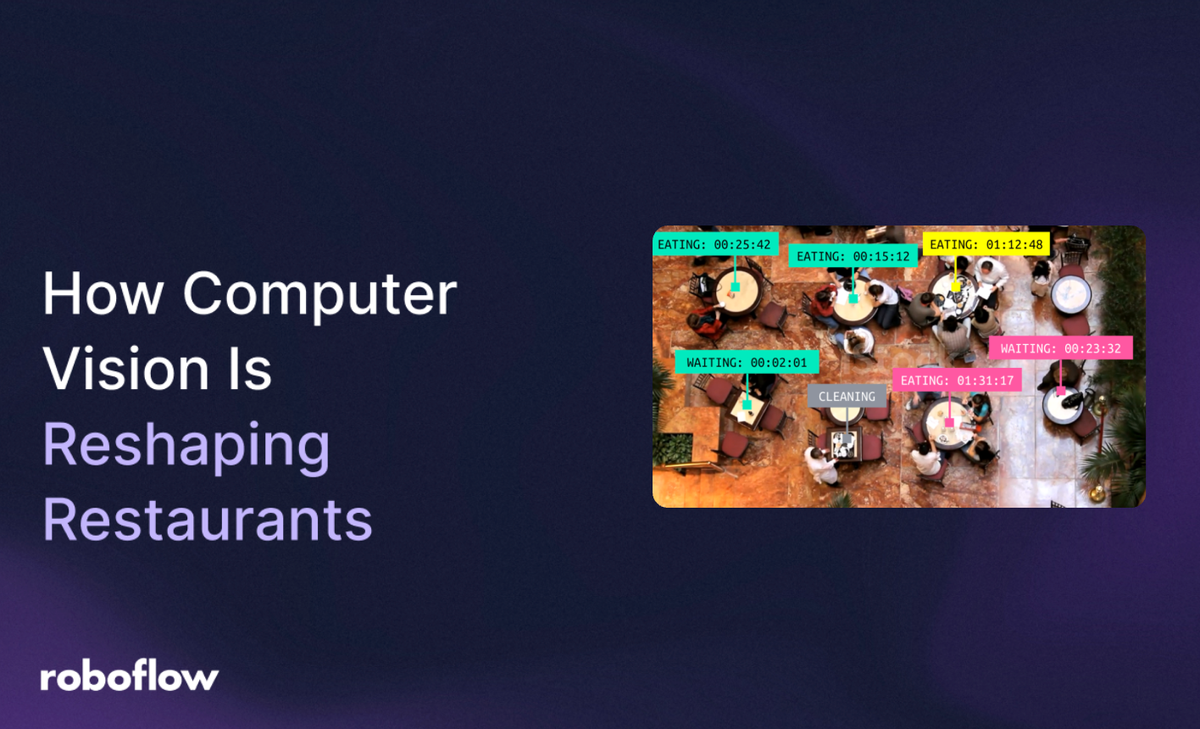

As a result, dozens of fast food and fine dining chains around the world are turning to computer vision to unlock new value across their operations. By using AI to track line wait times, monitor table occupancy, verify food items, and more, restaurants are streamlining workflows, reducing errors, and gaining real-time visibility into their locations. Vision AI not only makes day-to-day operations more efficient but also provides actionable insights that help businesses optimize staffing, improve the guest experience, and make smarter, data-driven decisions across every level of the organization. This article covers real-world computer vision applications across both fast food and fine dining restaurants, highlighting how top brands are using AI today, and shares emerging opportunities to further streamline operations, enhance service, and improve food quality.

Here’s how leading restaurants are putting computer vision to work.

Fast Food Restaurant Computer Vision Use Cases

Below are two real-world examples of how fast food chains are already using computer vision to automate kitchen tasks, ensure food quality, and improve operational efficiency at scale.

- White Castle (Kitchen Automation with Flippy Robot): White Castle, widely credited as America’s first fast-food hamburger chain, is leading the way in AI-driven kitchen automation. With eleven freezer-to-fryer menu items, employees struggled to keep track of fry times and product flow, creating bottlenecks. To solve this, White Castle implemented a robot-on-a-rail powered by computer vision and machine learning. The robot identifies menu items, moves them between bins for cooking, and delivers finished products to staff. It leverages object detection, visual timers, and food texture and color analysis to monitor each stage of the fry cook process. The result: higher kitchen efficiency, improved labor productivity, and more consistent food quality.

- Domino’s Pizza (Real-Time Pizza Quality Control): Domino’s faced a widespread quality issue: pizzas were often inconsistent with customer expectations. Toppings were uneven, cheese slid to one side, and crusts were frequently over- or underbaked. To address this, Domino’s introduced the DOM Pizza Checker, a mounted computer vision system that inspects every pizza as it exits the oven and before it’s boxed. The system evaluates topping distribution, bake quality, and overall presentation against brand standards. Since implementation, Domino’s reports a 14–15% improvement in product quality and significantly fewer customer complaints.

Fine Dining Restaurant Computer Vision Use Cases

In fine dining, hospitality, and broader foodservice settings, computer vision is being applied to elevate service quality, reduce waste, and create more responsive front- and back-of-house operations. Here’s how leading operators are putting it to use.

- Compass Group (Food Waste Reduction): Compass Group, the world’s largest food and support services company, focused its efforts on cutting food waste. It installed AI-enabled cameras above kitchen waste bins to analyze what gets discarded, how much, and from which station. Using image classification to identify food types, object detection to recognize serving trays and packaging, and weight estimation synced with digital scales, the system delivers actionable insights. This data helps kitchens reduce overproduction, cut waste by 30–50%, and save costs while supporting sustainability goals.

- National Restaurant Chain (Food Measurement Accuracy with Vision AI): One of the largest privately owned fast-food chains in the U.S. deployed a computer vision system to improve food measurement accuracy and kitchen workflows. The team placed a camera above serving stations to monitor food levels. The vision model reached 95% accuracy and replaced unreliable weight sensors. The system’s real-time insights helped staff restock items proactively, avoid shortages, and reduce waste. Following this success, the chain plans to expand the system to predictive cooking and quality control across thousands of locations.

Fast Food and QSR Workflows: High-Impact Computer Vision Use Cases

Computer vision is rapidly transforming the foodservice industry, unlocking new levels of automation, accuracy, and real-time decision-making in both fast food/quick-service restaurant (QSR) and fine dining environments. Below, we explore how vision systems can be applied in restaurants, covering the technical workflow, supporting technologies, and operational impact of each. We start with fast food applications, followed by fine dining.

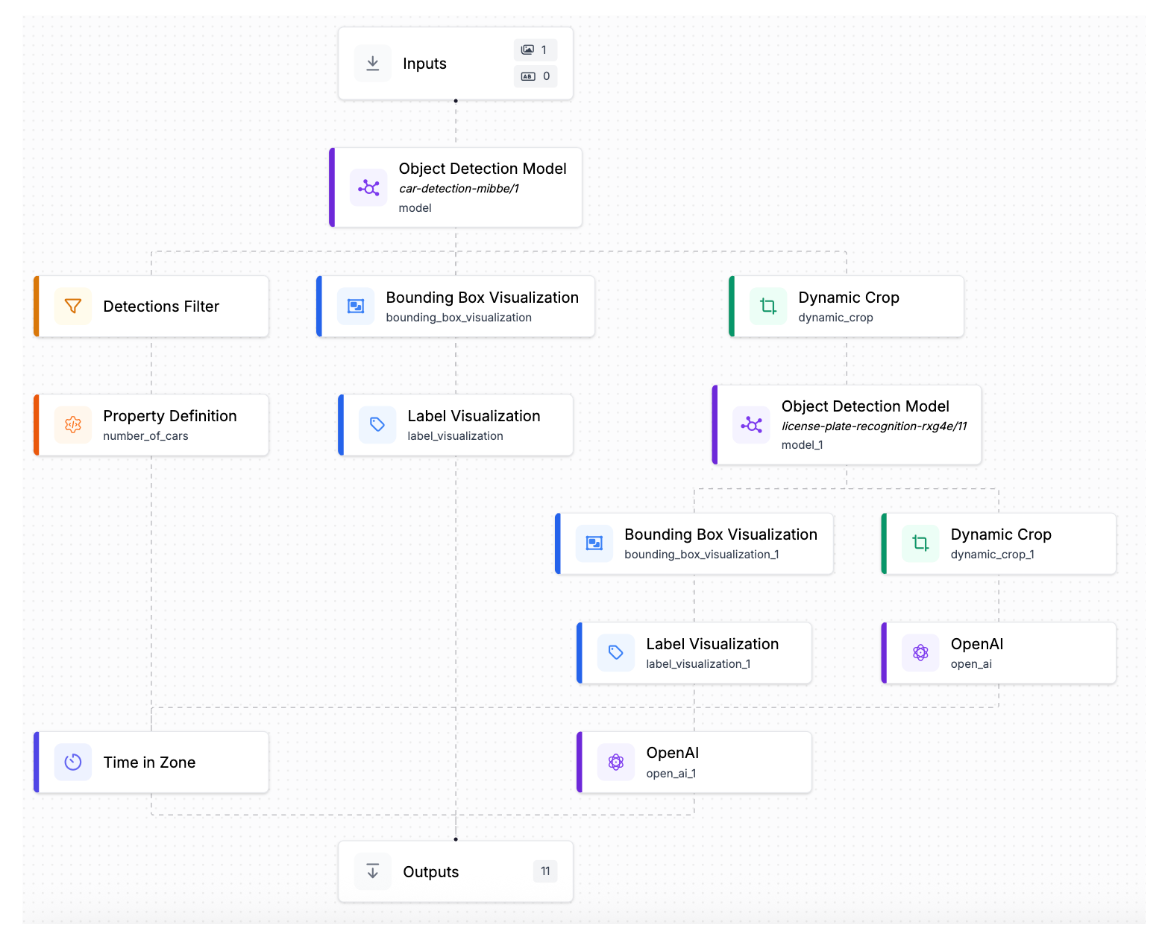

1. Drive-Thru Vehicle Detection & Customer Personalization

At some drive-thrus, computer vision systems analyze live camera feeds for vehicle detection, tracking, and license plate recognition (LPR). When a returning customer is identified by their license plate, AI platforms (such as Dynamic Yield) dynamically tailor digital menu boards to reflect personalized promotions or frequently ordered items. Simultaneously, cameras monitor queue lengths and dwell times to provide managers with real-time visibility into traffic flow and drive-thru congestion. This enables personalized upselling, optimizes staffing decisions during high-traffic periods, and improves overall drive-thru throughput and order accuracy. Behind the scenes, the system is powered by LPR models, queue length tracking algorithms, and AI-based recommendation engines.
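To make the personalization step concrete, here is a minimal Python sketch of the lookup that could sit behind a personalized menu board. It assumes an upstream LPR model has already returned the plate as text; the `ORDER_HISTORY` store and the `normalize_plate` and `personalized_items` helpers are hypothetical, and this is not how Dynamic Yield or any specific vendor implements recommendations.

```python
import re
from collections import Counter

# Hypothetical order history keyed by normalized plate text. In practice this
# would come from a loyalty or POS system, not a hard-coded dict.
ORDER_HISTORY: dict[str, list[str]] = {
    "ABC1234": ["burger", "fries", "burger", "shake", "fries", "burger"],
}


def normalize_plate(raw: str) -> str:
    """Uppercase an LPR read and strip spaces, dashes, and other separators."""
    return re.sub(r"[^A-Z0-9]", "", raw.upper())


def personalized_items(raw_plate: str, default: list[str], top_n: int = 2) -> list[str]:
    """Return the customer's most frequently ordered items, or a default promo list for unknown plates."""
    history = ORDER_HISTORY.get(normalize_plate(raw_plate))
    if not history:
        return default[:top_n]
    return [item for item, _count in Counter(history).most_common(top_n)]


print(personalized_items("abc-1234", default=["combo_meal", "nuggets"]))  # ['burger', 'fries']
print(personalized_items("XYZ 999", default=["combo_meal", "nuggets"]))   # ['combo_meal', 'nuggets']
```

Ranking by raw order frequency is the simplest possible recommender; a production engine would likely also weigh recency, time of day, and active promotions.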

2. Kitchen Robotics Integration and Fry Cook Monitoring

In modern kitchens, overhead cameras can leverage object detection and zone-based monitoring to track items as they’re placed in fryers or on grills. These visual inputs can feed into automated systems that trigger alerts or initiate robotic actions (such as flipping or removing food) based on how long items remain in specific cooking zones. Flippy by Miso Robotics is a real-world example of this approach. The result is consistent cook times, improved food quality, and reduced manual oversight. Staff can redirect their attention to other prep tasks or customer service, while the underlying system runs on object detection, heatmap analysis, and IoT integrations with robotic tools.
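The zone-timer logic can be sketched in a few lines of Python. This sketch assumes an upstream detector and tracker already provide, for each frame, a tracker ID, class name, and box center; the fryer zone coordinates, the `TARGET_COOK_SECONDS` values, and the `remove_from_...` action strings are hypothetical placeholders, not Miso Robotics’ actual interface.

```python
from dataclasses import dataclass

# Hypothetical target fry times (seconds) per detected class.
TARGET_COOK_SECONDS = {"fries": 180.0, "onion_rings": 150.0}


@dataclass
class Zone:
    """Axis-aligned rectangle in pixel coordinates covering one fryer basket."""
    name: str
    x1: float
    y1: float
    x2: float
    y2: float

    def contains(self, x: float, y: float) -> bool:
        return self.x1 <= x <= self.x2 and self.y1 <= y <= self.y2


class CookTimer:
    """Remembers when each tracked item entered a cooking zone and reports overdue items."""

    def __init__(self, zones: list[Zone]):
        self.zones = zones
        self.entered: dict[int, float] = {}  # track_id -> timestamp of first sighting in a zone

    def update(self, detections: list[tuple[int, str, float, float]], now: float) -> list[tuple[int, str]]:
        """detections: (track_id, class_name, center_x, center_y). Returns (track_id, action) pairs."""
        actions = []
        seen = set()
        for track_id, cls, cx, cy in detections:
            zone = next((z for z in self.zones if z.contains(cx, cy)), None)
            if zone is None:
                continue
            seen.add(track_id)
            start = self.entered.setdefault(track_id, now)
            target = TARGET_COOK_SECONDS.get(cls)
            if target is not None and now - start >= target:
                actions.append((track_id, f"remove_from_{zone.name}"))
        # Forget items no longer inside any zone (e.g. already removed from the fryer).
        for track_id in list(self.entered):
            if track_id not in seen:
                del self.entered[track_id]
        return actions
```

In a real kitchen, the returned actions would be forwarded to the robot controller or a staff alert screen, and target cook times would come from the brand’s own standards.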

3. Food Item Verification at Packaging Stations

When it comes to takeout and delivery, computer vision can play a crucial role in quality control. For example, at packaging stations, cameras can use instance segmentation to verify that all ordered items are present before bags are sealed. The system could be built to compare actual contents with the digital order, flagging missing items or packaging errors before they leave the kitchen. This would reduce delivery mistakes and improve packaging accuracy, and the check could integrate with existing POS and delivery management software. Models such as RF-DETR, YOLOv8, and Mask R-CNN can provide the technical backbone for these checks.
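A minimal sketch of the order-versus-bag comparison, assuming the detection or segmentation model (RF-DETR, YOLOv8, Mask R-CNN, or similar) emits one class label per item and that those labels have already been mapped to POS item names. The `verify_bag` function is hypothetical and checks item counts only, not modifiers such as "no onions".

```python
from collections import Counter


def verify_bag(ordered: list[str], detected: list[str]) -> dict[str, dict[str, int]]:
    """Compare item labels on the digital order with labels detected in the bag.

    Returns per-item shortfalls ("missing") and surpluses ("extra").
    """
    want, got = Counter(ordered), Counter(detected)
    return {
        "missing": dict(want - got),  # Counter subtraction keeps only positive counts
        "extra": dict(got - want),
    }


order = ["burger", "burger", "fries", "soda"]
detections = ["burger", "fries", "soda", "ketchup"]
result = verify_bag(order, detections)
print(result)  # {'missing': {'burger': 1}, 'extra': {'ketchup': 1}}
if result["missing"]:
    print("Hold bag: missing", result["missing"])
```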

4. Real-Time Staff Scheduling and Occupancy Optimization

To manage labor efficiency, CV models can analyze zone-based occupancy throughout a restaurant. By counting the number of customers and employees in areas like the kitchen, lobby, or counter, the system could recommend dynamic staffing changes or alert managers to bottlenecks. When combined with historical foot traffic data, this capability would help restaurants avoid overstaffing or understaffing key areas during peak hours. The system relies on people detection, multi-zone tracking, and predictive analytics to forecast and optimize scheduling patterns.
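The live-counting half of this idea can be sketched as follows. It assumes a person detector that also labels each person as `customer` or `staff` (for example, via uniform color or a separate classifier) and reports a foot point per person; the zone polygons and the six-customers-per-staff limit are hypothetical. This sketch covers only the live counts, not forecasting from historical foot traffic.

```python
def point_in_polygon(x: float, y: float, polygon: list[tuple[float, float]]) -> bool:
    """Ray casting: a point is inside if a horizontal ray from it crosses an odd number of edges."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the ray's y level
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def zone_counts(people: list[tuple[str, float, float]],
                zones: dict[str, list[tuple[float, float]]]) -> dict[str, dict[str, int]]:
    """people: (role, foot_x, foot_y) tuples, role being "customer" or "staff"."""
    counts = {name: {"customer": 0, "staff": 0} for name in zones}
    for role, x, y in people:
        for name, polygon in zones.items():
            if point_in_polygon(x, y, polygon):
                counts[name][role] += 1
                break  # assign each person to at most one zone
    return counts


def staffing_alerts(counts: dict[str, dict[str, int]], max_customers_per_staff: float = 6.0) -> list[str]:
    """Zones with customers but no staff, or more customers per staff member than the assumed limit."""
    alerts = []
    for name, c in counts.items():
        if c["customer"] == 0:
            continue
        if c["staff"] == 0 or c["customer"] / c["staff"] > max_customers_per_staff:
            alerts.append(name)
    return alerts
```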

5. Speed of Service and Workflow Bottleneck Analysis

Speed is essential in QSR environments, and computer vision offers an objective lens into where delays occur. Cameras can track the movement of food items from prep to packaging, logging timestamps and identifying bottlenecks in the workflow. If a specific station (like toppings or assembly) is slowing down orders, real-time data would allow managers to reallocate labor or adjust processes. This could accelerate order throughput, enforce time standards, and enhance coordination between front- and back-of-house teams. Object tracking and zone entry/exit timing form the basis of this real-time workflow visibility.
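Given the zone entry timestamps described above, finding the bottleneck is mostly bookkeeping. This sketch assumes object tracking already yields, per order, the time it entered each station; the station names in `STAGES` (with a final `handoff` entry marking exit from packaging) and the sample timings are hypothetical.

```python
from statistics import mean

# Hypothetical station order; "handoff" marks the moment an order leaves packaging.
STAGES = ["prep", "assembly", "toppings", "packaging", "handoff"]


def stage_durations(entry_times: dict[str, float]) -> dict[str, float]:
    """Seconds spent at each station: next station's entry time minus this station's entry time."""
    return {
        stage: entry_times[nxt] - entry_times[stage]
        for stage, nxt in zip(STAGES, STAGES[1:])
        if stage in entry_times and nxt in entry_times
    }


def find_bottleneck(orders: list[dict[str, float]]) -> tuple[str, float]:
    """Return the station with the highest average dwell time (needs at least one timed stage)."""
    per_stage: dict[str, list[float]] = {}
    for entry_times in orders:
        for stage, seconds in stage_durations(entry_times).items():
            per_stage.setdefault(stage, []).append(seconds)
    averages = {stage: mean(values) for stage, values in per_stage.items()}
    slowest = max(averages, key=averages.get)
    return slowest, averages[slowest]


orders = [
    {"prep": 0.0, "assembly": 30.0, "toppings": 50.0, "packaging": 140.0, "handoff": 160.0},
    {"prep": 0.0, "assembly": 40.0, "toppings": 60.0, "packaging": 130.0, "handoff": 150.0},
]
print(find_bottleneck(orders))  # ('toppings', 80.0)
```

Averages keep the example simple; a high percentile (such as the 90th) would better capture the occasional long delays that guests actually notice.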

Fine Dining Workflows: High-Impact Computer Vision Use Cases

While some of these capabilities are already being deployed, the following examples provide a higher-level overview of potential computer vision applications in fine dining, offering a glimpse into how restaurants can enhance service, monitor guest experiences, and optimize front- and back-of-house operations.

1. Full-Service Dining Cycle Monitoring

Fine dining establishments can benefit greatly from computer vision systems that track the full lifecycle of a dining experience. For example, with overhead cameras monitoring tables and surrounding zones using bounding box detection and zone occupancy, business owners can identify key milestones such as customers being seated, menus being opened or closed, orders being taken, patrons leaving, and tables being reset.
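One way to turn noisy per-frame detections into the milestones listed above is a small state machine per table. In this sketch, the names in `MILESTONES` are hypothetical labels that an upstream detection and zone-occupancy step is assumed to emit. Milestones are accepted only in the expected order, which filters duplicate or spurious detections but would also stall the table’s record if the detector misses a step entirely.

```python
# Hypothetical milestone labels emitted by an upstream detection + zone-occupancy step.
MILESTONES = ["seated", "menus_opened", "menus_closed", "order_taken", "guests_left", "table_reset"]


class TableLifecycle:
    """One dining cycle at one table; milestones are accepted only in the expected order."""

    def __init__(self, table_id: str):
        self.table_id = table_id
        self.times: dict[str, float] = {}  # milestone -> timestamp (seconds)

    def record(self, milestone: str, t: float) -> bool:
        """Store the milestone if it is the next one expected; ignore repeats and out-of-order events."""
        if len(self.times) == len(MILESTONES) or milestone != MILESTONES[len(self.times)]:
            return False
        self.times[milestone] = t
        return True

    def intervals(self) -> dict[str, float]:
        """Seconds between consecutive recorded milestones, e.g. how long guests waited to order."""
        done = MILESTONES[: len(self.times)]
        return {f"{a}->{b}": self.times[b] - self.times[a] for a, b in zip(done, done[1:])}
```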

2. Waitstaff Engagement Monitoring

Using pose estimation, person re-identification, and zone-based dwell time analysis, restaurateurs can monitor staff presence and attentiveness at each table. The system could log how frequently staff engage with patrons and how long tables remain unattended. This would help managers detect underperforming teams, highlight training needs, and ensure service consistency across shifts.
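The engagement metrics described above reduce to interval arithmetic once tracking reports whether a staff member is inside a table’s zone. This sketch assumes time-ordered `(timestamp, server_present)` samples for one table, covering only the period when guests are seated; the `engagement_stats` function and its output keys are hypothetical.

```python
def engagement_stats(samples: list[tuple[float, bool]]) -> dict[str, float]:
    """Summarize server attention at one table from time-ordered (timestamp_s, server_present) samples."""
    visits = 0
    attended = 0.0
    longest_gap = 0.0
    gap_start = None  # timestamp when the current unattended stretch began
    prev_t, prev_present = None, False
    for t, present in samples:
        if prev_present:
            attended += t - prev_t  # server was present since the previous sample
        if present and not prev_present:
            visits += 1  # a new visit starts
            if gap_start is not None:
                longest_gap = max(longest_gap, t - gap_start)
                gap_start = None
        elif not present and gap_start is None:
            gap_start = t  # an unattended stretch starts
        prev_t, prev_present = t, present
    if gap_start is not None:
        longest_gap = max(longest_gap, prev_t - gap_start)  # stretch still open at the last sample
    return {"visits": visits, "attended_seconds": attended, "longest_unattended_seconds": longest_gap}
```

Aggregating these per-table numbers by server and shift would give managers the consistency view described above.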

3. Buffet and Hot-Holding Unit Monitoring

In buffet-style settings or areas with hot-holding trays, both thermal and visual cameras can be used to monitor food status. The system could detect low fill levels and unsafe temperatures, automatically alerting staff when a tray needs to be refilled or replaced. This would reduce the need for manual temperature checks, support food safety compliance, and keep the service line visually appealing and well stocked. The implementation would use thermal imaging and tray-level detection models powered by object classification or area segmentation.
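A sketch of the alerting rule, assuming a segmentation model supplies the food and tray areas and a registered thermal frame supplies the coldest reading within each tray. The 57 °C (135 °F) limit matches the FDA Food Code minimum for hot holding; the 25% refill threshold and the `TrayReading` structure are hypothetical. The area ratio is only a 2D proxy for how full a tray is, and thermal cameras measure surface temperature, so these readings are best treated as an early-warning signal.

```python
from dataclasses import dataclass

MIN_HOT_HOLD_C = 57.0     # FDA Food Code hot-holding minimum (135 °F)
MIN_FILL_FRACTION = 0.25  # hypothetical refill trigger


@dataclass
class TrayReading:
    tray_id: str
    food_area_px: float  # area of the food segmentation mask inside the tray
    tray_area_px: float  # area of the tray region (from detection or a fixed zone)
    min_temp_c: float    # coldest thermal reading inside the tray region


def tray_alerts(readings: list[TrayReading]) -> list[str]:
    """Return human-readable alerts for trays that are too cold or running low."""
    alerts = []
    for r in readings:
        fill = r.food_area_px / r.tray_area_px if r.tray_area_px > 0 else 0.0
        if r.min_temp_c < MIN_HOT_HOLD_C:
            alerts.append(f"{r.tray_id}: below hot-hold temperature ({r.min_temp_c:.1f} °C)")
        if fill < MIN_FILL_FRACTION:
            alerts.append(f"{r.tray_id}: refill needed ({fill:.0%} full)")
    return alerts
```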

4. Order Progression and Plate Readiness Management

At pass-through stations, cameras can monitor when dishes are ready to be served. Using Optical Character Recognition (OCR), the system would read order numbers on containers or paper tickets and notify the appropriate waitstaff. Vision models could also track visible steam and how long each plate sits at the pass to help ensure food is served hot and promptly. This approach minimizes service delays, improves timing precision, and helps maintain dish quality.

5. Plating and Presentation Quality Assurance

Plating consistency is essential to brand integrity in upscale restaurants. Computer vision could be used to compare plated dishes to reference templates using classification and anomaly detection models. If a plate deviates from the visual standard, for example through a missing garnish or poor arrangement, it would be flagged for correction before it reaches the customer. This helps ensure a consistent dining experience across kitchen staff and locations. Image classification and template matching technologies would power this solution.
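Template matching can be as simple as a normalized cross-correlation score between a plated dish and a reference photo. This sketch assumes both images are already cropped to the plate, aligned (for example, using a fixed overhead camera and the detector’s bounding box), resized to the same dimensions, and flattened to grayscale values; the 0.9 pass threshold is hypothetical. Production systems would more likely use OpenCV’s `cv2.matchTemplate` (whose `TM_CCOEFF_NORMED` mode computes this same zero-mean normalized score) or a learned anomaly-detection model.

```python
import math


def normalized_cross_correlation(a: list[float], b: list[float]) -> float:
    """Zero-mean NCC of two equal-length flattened grayscale images, in [-1, 1].

    1.0 means the images match up to a uniform brightness/contrast change.
    """
    if not a or len(a) != len(b):
        raise ValueError("images must be non-empty and the same size")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0:
        return 0.0  # a perfectly flat image has no structure to compare
    return sum(x * y for x, y in zip(da, db)) / denom


def plate_passes(plate: list[float], reference: list[float], threshold: float = 0.9) -> bool:
    """A plate passes when it correlates with the reference template at or above the threshold."""
    return normalized_cross_correlation(plate, reference) >= threshold
```

Because the score is normalized, a plate photographed under slightly brighter lighting still matches, while a structural change such as a missing garnish lowers the score.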

How Roboflow Workflows Enable Restaurant AI Applications

Roboflow Workflows make it easy for restaurants to turn computer vision models into real-time decision systems. Instead of just detecting objects in images, these workflows connect different steps such as detection, tracking, classification, and alerts, so the system can take immediate action without human intervention. Below are simple examples of how Roboflow's workflow tools can be deployed across different restaurant environments to improve operations, service, and food quality:

Dining Process Monitoring (Fine Dining Use Case)

- Workflow: Object Detection (tables, customers, servers) → Event Detection (seated, menu open, waiter arrives) → Tracker IDs assigned per table → Timers activated for service milestones → Data logged to dashboard

- Function: Each table is tracked using bounding boxes and assigned a tracker ID. Server presence, timing of service milestones, and duration of each interaction are recorded. Managers gain real-time visibility into guest pacing, service frequency, and table readiness.

Pizza Quality Control (Domino’s Use Case)

- Workflow: Object Detection (pizza) → Instance Segmentation (toppings) → Classification (burnt/good) → QA Alert

- Function: The model detects the pizza and evaluates topping presence and bake quality. If the pizza fails visual standards, a QA alert is sent to the kitchen screen for immediate correction.
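The final QA step in this workflow can be sketched as a rule over the model outputs. It assumes the detector gives the pizza’s center, the segmentation model gives one centroid per topping instance, and the classifier returns `good` or `burnt`; the quadrant-evenness rule and the `min_share` threshold are illustrative assumptions, not a description of Domino’s DOM Pizza Checker.

```python
from collections import Counter


def quadrant(cx: float, cy: float, x: float, y: float) -> int:
    """Index (0-3) of the pizza quadrant containing (x, y), relative to the pizza center (cx, cy)."""
    return (0 if x >= cx else 1) + (0 if y >= cy else 2)


def pizza_qa(center: tuple[float, float], topping_centroids: list[tuple[float, float]],
             bake_label: str, min_share: float = 0.5) -> list[str]:
    """Return QA failure reasons; an empty list means the pizza passes.

    Each quadrant must hold at least min_share times the average per-quadrant topping count.
    """
    failures = []
    if bake_label != "good":
        failures.append(f"bake: {bake_label}")
    if not topping_centroids:
        failures.append("no toppings detected")
        return failures
    cx, cy = center
    counts = Counter(quadrant(cx, cy, x, y) for x, y in topping_centroids)
    expected = len(topping_centroids) / 4
    sparse = [q for q in range(4) if counts[q] < min_share * expected]
    if sparse:
        failures.append(f"uneven toppings in quadrants {sparse}")
    return failures
```

A non-empty result would be what triggers the QA alert on the kitchen screen.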

Line Monitoring in Fast Food (e.g., Drive-Thru Optimization)

- Workflow: Object Detection (vehicle) → Object Tracking (by ID) → Time in Zone (dwell time) → Alert if threshold exceeded

- Function: Cameras monitor the drive-thru lane. If vehicles spend too long in line, a webhook sends an alert to shift managers to add staff or open another register.
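Outside of Roboflow Workflows, the same time-in-zone logic looks roughly like this in plain Python. It assumes a tracker reports which tracker IDs are inside the lane zone on each frame; the 240-second threshold, the payload fields, and the `https://example.com/...` webhook URL are hypothetical. The code only builds the HTTP request; sending it (for example with `urllib.request.urlopen(req, timeout=5)`) is left to the caller so the alert logic stays easy to test.

```python
import json
import urllib.request


class DriveThruMonitor:
    """Time-in-zone tracking for the drive-thru lane with one webhook alert per slow vehicle."""

    def __init__(self, webhook_url: str, threshold_s: float = 240.0):
        self.webhook_url = webhook_url
        self.threshold_s = threshold_s  # hypothetical service-time target
        self.first_seen: dict[int, float] = {}  # tracker_id -> time the vehicle entered the lane zone
        self.alerted: set[int] = set()

    def update(self, ids_in_zone: set[int], now: float) -> list[urllib.request.Request]:
        """Return webhook requests for vehicles that have just exceeded the dwell threshold."""
        requests = []
        for tid in ids_in_zone:
            dwell = now - self.first_seen.setdefault(tid, now)
            if dwell > self.threshold_s and tid not in self.alerted:
                self.alerted.add(tid)
                requests.append(self._build_request(tid, dwell))
        for tid in list(self.first_seen):  # forget vehicles that left the lane
            if tid not in ids_in_zone:
                del self.first_seen[tid]
                self.alerted.discard(tid)
        return requests

    def _build_request(self, tid: int, dwell: float) -> urllib.request.Request:
        payload = {"tracker_id": tid, "dwell_seconds": round(dwell, 1),
                   "message": "Drive-thru wait exceeded threshold"}
        return urllib.request.Request(
            self.webhook_url,
            data=json.dumps(payload).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
```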

Computer Vision Restaurant Applications

Whether it's increasing throughput in a drive-thru, ensuring soup is delivered while it is still hot, or reducing waste in the kitchen, computer vision is proving to be a critical tool for modern restaurants looking to stay competitive. Fast food prioritizes speed and consistency, while fine dining focuses on experience and precision: computer vision meets the needs of both worlds.

Cite this Post

Use the following entry to cite this post in your research:

Sylvie Goldner. (Jul 18, 2025). How Computer Vision Is Reshaping The Restaurant Industry. Roboflow Blog: https://blog.roboflow.com/computer-vision-restaurant-industry/