Semantic embeddings are an increasingly foundational part of the modern computer vision toolbelt. Models like CLIP are used for tasks ranging from image search to image generation to content moderation and more.

Now you can seamlessly use embeddings as part of your computer vision pipelines with Roboflow Workflows. You can calculate embeddings, and use our outlier detection system with embeddings to find outlier frames in video streams.

CLIP in Workflows

The first embedding model we're launching with is OpenAI's CLIP model, which is great for making image-to-image and text-to-image comparisons. To get an embedding, simply add the CLIP embedding model to your Workflow.

Wire its input to a string or image and it will output the list of floats that represents the datapoint's position in its latent space. A single embedding alone isn't very useful; the magic happens when you compare it to others. By using the Cosine Similarity block, you can measure the similarity of two embeddings.

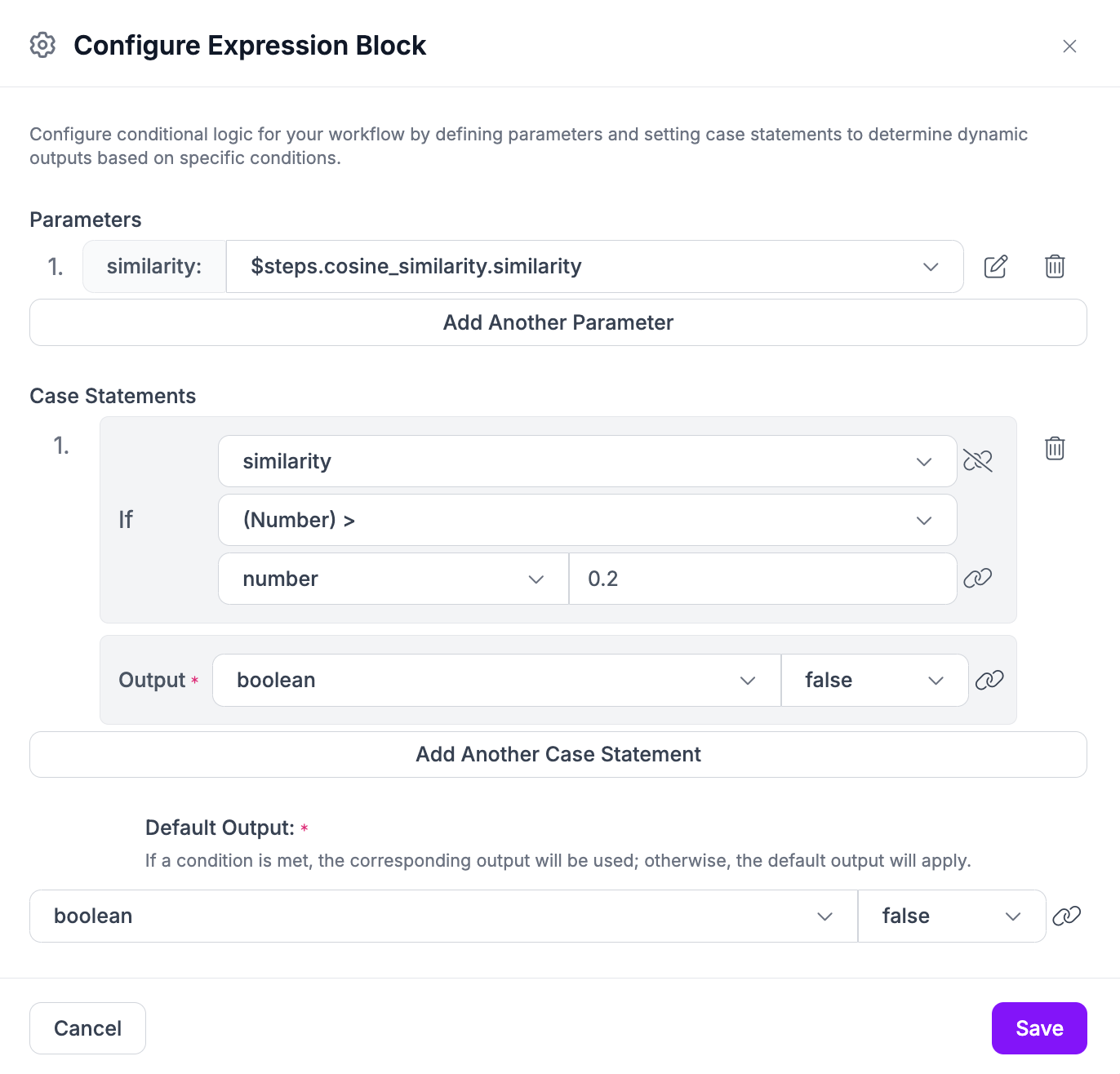

Cosine similarity ranges from -1 to 1, where 1.0 means the two embeddings point in exactly the same direction. To add a threshold, use the Expression block. For example, for an image moderation use-case you might compare the CLIP embedding of an image a user uploaded to your app with the CLIP embedding of the text "NSFW". Scores between an image and a text prompt typically sit well below 1.0, so choose the threshold empirically on your own data.
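
To make the comparison concrete, here is a minimal sketch of what a cosine similarity check followed by a threshold computes. It is plain Python for illustration, not the code behind the Cosine Similarity or Expression blocks; the toy three-dimensional vectors stand in for real CLIP embeddings, and `NSFW_THRESHOLD` is a hypothetical value you would tune yourself.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Toy vectors standing in for real CLIP embeddings (assumption for illustration)
image_embedding = [0.2, 0.9, 0.1]
text_embedding = [0.1, 0.8, 0.3]

NSFW_THRESHOLD = 0.25  # hypothetical cutoff; tune it on your own data

score = cosine_similarity(image_embedding, text_embedding)
is_flagged = score > NSFW_THRESHOLD
```

In a Workflow, the two embeddings would come from CLIP blocks and the comparison against the threshold would live in an Expression block.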

Using this same pattern you could build all sorts of other projects like:

- If an image is too blurry (compare with the text vector for "blurry"), don't run your object detection model on it.

- Decide contextually whether to run a model trained on summer images or winter images (compare with the text vector for "snow").

- Build a scoring system for a game like paint.wtf (compare to the drawing prompts).

- Given a set of photos, choose the best one (compare with the text vector for "beautiful" or "award winning").

- Ensure your camera hasn't been bumped (compare with the average image vector of the images from your dataset).
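
The last idea in the list compares a frame against an average vector rather than a text prompt. A rough sketch of that comparison follows, assuming you already have embeddings for your dataset images; the helper names, the toy vectors, and `BUMP_THRESHOLD` are all made up for illustration.

```python
import math


def normalize(v):
    """Scale a non-zero vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def mean_direction(embeddings):
    """Average of unit-normalized embeddings, re-normalized to unit length."""
    unit = [normalize(e) for e in embeddings]
    avg = [sum(col) / len(unit) for col in zip(*unit)]
    return normalize(avg)


def similarity_to_baseline(embedding, baseline):
    """Dot product of two unit vectors, which equals their cosine similarity."""
    return sum(x * y for x, y in zip(normalize(embedding), baseline))


# Toy vectors standing in for CLIP embeddings of dataset images (assumption)
dataset_embeddings = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1], [0.85, 0.15, 0.05]]
baseline = mean_direction(dataset_embeddings)

BUMP_THRESHOLD = 0.9  # hypothetical cutoff; tune it on your own footage

normal_frame = [0.88, 0.12, 0.03]
bumped_frame = [0.1, 0.2, 0.95]
camera_ok = similarity_to_baseline(normal_frame, baseline) >= BUMP_THRESHOLD
camera_bumped = similarity_to_baseline(bumped_frame, baseline) < BUMP_THRESHOLD
```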

Detect Outliers with Computer Vision

One huge use-case for embeddings is detecting changes & anomalies. It's important to capture edge-cases for continual re-training and improvement of your models. Roboflow now has built-in anomaly identification blocks that use embeddings to make this active learning process much easier and more powerful.

Imagine a security camera pointed at a parking lot running analytics on the vehicles. The initial data used to train the model likely doesn't include rare occurrences it may see from time to time in the wild like:

- A visit from a tow truck, ambulance, fire truck, police car, food truck, or the wienermobile

- Someone doing donuts in the middle of the night

- A fallen tree or light pole

- A snowstorm, hurricane, or flash flood

- Construction work or newly painted lines

- A pop-up weekend car-show or farmer's market

- Wild animals meandering through

Capturing these edge-cases would be really valuable data for your model to learn about. Even if you could anticipate all the things that might happen, how would you ever find them in the footage? Enter embedding-based anomaly detection.

Our two new blocks, Identify Changes and Identify Outliers, work by looking at the distribution of semantic embeddings in a video feed over time and flagging frames that are out of the ordinary. This means you don't have to predict all the edge cases that might happen and look for them manually; you can capture anything that looks different from "normal".

The Identify Changes Block

This block works by comparing the current frame against a historical average & seeing how the magnitude of that difference relates to the typical magnitude it's seen historically. If the difference is unusually large relative to that history, it will flag the frame as an outlier for closer inspection.

You can tune the averaging strategy in a few ways:

- Exponential Moving Average (EMA) will weight more recent frames more highly. This will tend to filter out more gradual changes over time (for example, in the parking lot example above, this wouldn't mark the shift from day to night at sunset as an outlier but it would probably flag a solar eclipse).

- Simple Moving Average (SMA) will take into account all frames since the beginning of the stream and is more suitable for situations that should stay relatively consistent (like an assembly line).

- Sliding Window is similar to the simple moving average but only looks at a finite set of previous frames vs all frames since the beginning of the stream. This can be useful if many different types of outliers happen over time & you don't want them to affect the sensitivity.

You can also tune the threshold, which determines how far from the average something needs to be to be considered an outlier, and the warmup, which is the number of initial frames used to set the baseline before the block starts looking for outliers.
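
To show how the averaging strategy, threshold, and warmup interact, here is a simplified sketch of this style of detector. It is not the Identify Changes block's actual implementation: the class name, the parameter names (`alpha`, `window`), and the choice to measure the threshold in standard deviations of past distances are all assumptions made for illustration.

```python
import math
from collections import deque


class ChangeDetector:
    """Flags embeddings that sit unusually far from a running average."""

    def __init__(self, strategy="ema", threshold=3.0, warmup=10, alpha=0.1, window=30):
        if strategy not in ("ema", "sma", "window"):
            raise ValueError(f"unknown strategy: {strategy}")
        self.strategy = strategy
        self.threshold = threshold  # standard deviations above the typical distance
        self.warmup = warmup        # frames used only to build the baseline
        self.alpha = alpha          # EMA weight given to the newest frame
        self.recent = deque(maxlen=window)  # only used by the "window" strategy
        self.count = 0              # frames folded into the average so far
        self.average = None         # running average embedding
        self.distances = []         # past distances (grows unbounded in this sketch)

    def _update_average(self, embedding):
        self.count += 1
        if self.strategy == "window":
            self.recent.append(list(embedding))
            self.average = [sum(col) / len(self.recent) for col in zip(*self.recent)]
        elif self.average is None:
            self.average = list(embedding)
        elif self.strategy == "ema":
            a = self.alpha
            self.average = [a * x + (1 - a) * m for x, m in zip(embedding, self.average)]
        else:  # "sma": cumulative mean over every frame seen so far
            self.average = [m + (x - m) / self.count for x, m in zip(embedding, self.average)]

    def update(self, embedding):
        """Return True if this frame looks like an outlier, then learn from it."""
        is_outlier = False
        if self.average is not None:
            distance = math.sqrt(sum((x - m) ** 2 for x, m in zip(embedding, self.average)))
            if self.count >= self.warmup and len(self.distances) >= 2:
                mean = sum(self.distances) / len(self.distances)
                var = sum((d - mean) ** 2 for d in self.distances) / len(self.distances)
                is_outlier = distance > mean + self.threshold * math.sqrt(var)
            self.distances.append(distance)
        self._update_average(embedding)
        return is_outlier


# Toy 2-dimensional "embeddings": a steady scene followed by a sudden change
detector = ChangeDetector(strategy="ema", threshold=3.0, warmup=10)
steady_frames = [[1.0 + 0.01 * (i % 3), 0.0] for i in range(20)]
flags = [detector.update(frame) for frame in steady_frames]
sudden_change_flag = detector.update([0.0, 1.0])
```

Raising `threshold` makes the detector less sensitive, and a longer `warmup` gives it more frames to learn what "normal" looks like before it starts flagging anything.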

The Identify Outliers Block

This block uses a von Mises distribution to determine the "typical" range of vectors & then determines how far outside of normal a given vector is. It's more likely to work "out of the box" without tuning than the Identify Changes block, but is less flexible if it's not giving the results you desire for your particular data.

The threshold and warmup parameters work similarly to the Identify Changes block.
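
For intuition about how a distribution-based check differs from a running average, here is a deliberately simplified stand-in. It does not fit a von Mises distribution and is not the Identify Outliers block's implementation. Instead, it scores each embedding by its cosine similarity to the mean direction of prior frames and flags it when almost no prior frame scored that low. The class name and the meaning given to `threshold` (a fraction of prior frames) are assumptions for this sketch.

```python
import math


def unit(v):
    """Scale a non-zero vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


class OutlierDetector:
    """Flags embeddings that point in an unusual direction versus prior data."""

    def __init__(self, threshold=0.05, warmup=10):
        self.threshold = threshold  # flag if fewer than this fraction of prior frames scored as low
        self.warmup = warmup        # frames collected before any flagging starts
        self.history = []           # unit-normalized prior embeddings (unbounded in this sketch)

    def update(self, embedding):
        """Return True if this frame looks like an outlier, then remember it."""
        e = unit(embedding)
        is_outlier = False
        if len(self.history) >= self.warmup:
            # Mean direction of everything seen so far
            mean_dir = unit([sum(col) for col in zip(*self.history)])
            score = dot(e, mean_dir)
            prior_scores = [dot(h, mean_dir) for h in self.history]
            # Fraction of prior frames that were at least this far from the mean direction
            percentile = sum(s <= score for s in prior_scores) / len(prior_scores)
            is_outlier = percentile < self.threshold
        self.history.append(e)
        return is_outlier


# Toy 2-dimensional "embeddings": a steady scene, then something very different
outlier_detector = OutlierDetector(threshold=0.05, warmup=10)
typical_frames = [[1.0, 0.01 * (i % 3)] for i in range(20)]
warmup_flags = [outlier_detector.update(frame) for frame in typical_frames[:10]]
for frame in typical_frames[10:]:
    outlier_detector.update(frame)
raccoon_flag = outlier_detector.update([0.0, 1.0])
```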

Example

In this example, we use the Identify Outliers block to sample frames that are out of distribution and find the raccoon in the hen house.

The Workflow that produced this visualization uses some additional blocks:

- Rate Limiter to only look for an outlier once per second

- Buffer to create a list of all the outliers detected

- Cache Get and Set to make the list of outliers available even on frames where embeddings weren't calculated

- Custom Python to prepend the current live video frame to the list

- Grid Visualization to show the frames next to each other

Putting it all together, we get a Workflow that looks like this (click here to Fork it to your Roboflow account & try it on a video of your own):

What's Next

Anomaly detection is an active area of research. We plan to iterate and improve on these blocks over time. We also plan to add more embedding models besides CLIP to Workflows to capture wider expressiveness and lower compute requirements.

Cite this Post

Use the following entry to cite this post in your research:

Brad Dwyer. (Dec 23, 2024). Launch: Embeddings in Workflows. Roboflow Blog: https://blog.roboflow.com/embeddings-in-workflows/