The post below is a guest post by Samay Lakhani and Sujay Sundar, two budding data scientists. Samay currently interns with a Silicon Valley tech company; Sujay currently does research under the mentorship of a CUNY Staten Island neuroscience professor. Both are sophomores at Jericho High School, showing that they're a lot smarter than the average Roboflow employee was in high school. (The post was edited and compiled by Roboflow.)

Art has the unmatched ability to inspire. That is why we set out to create it.

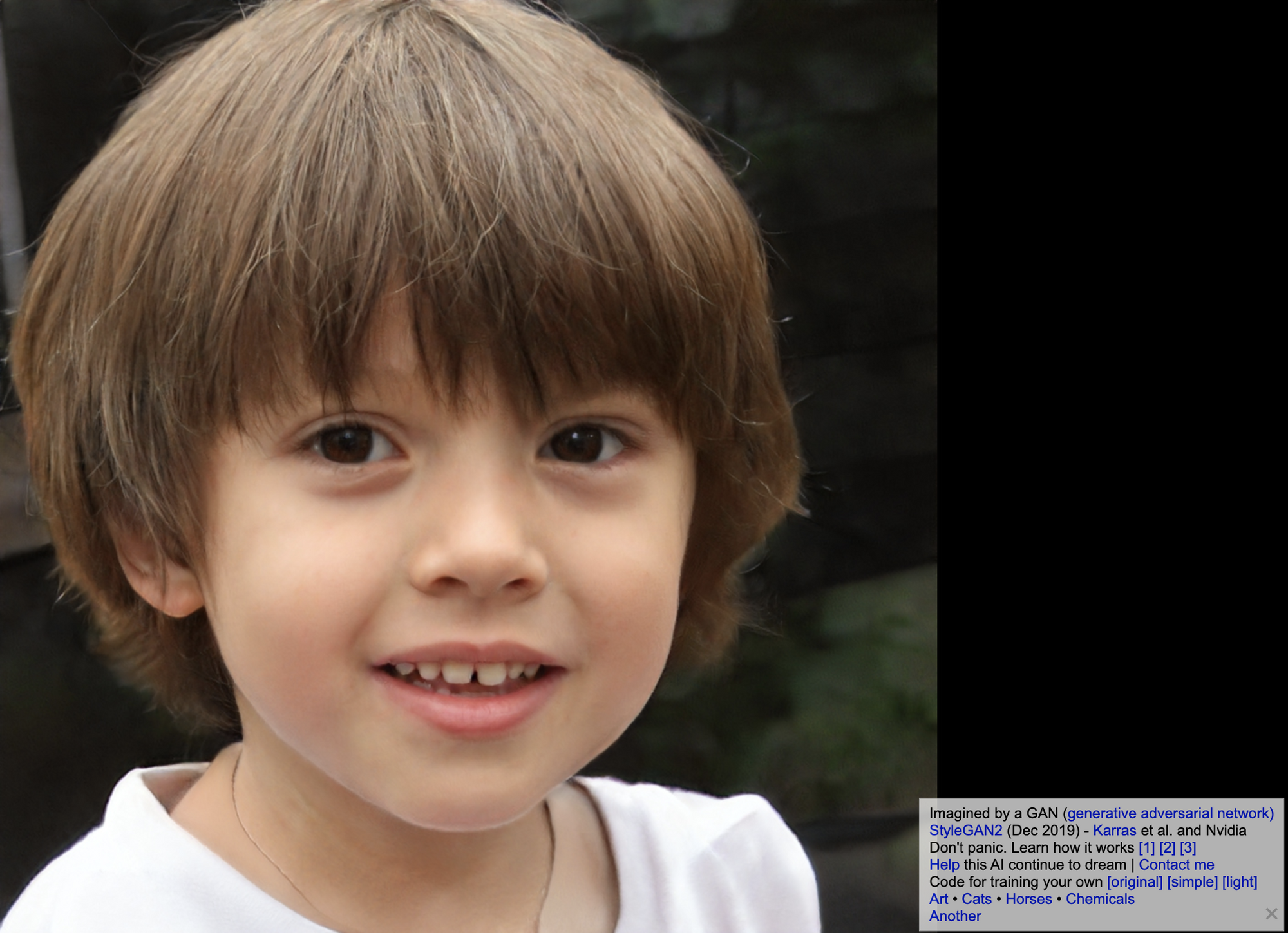

Inspired by ThisPersonDoesNotExist.com, a website that generates fake people who look like real people, our team built a Generative Adversarial Network (GAN for short). Our goal was to extract the most important features of Renaissance paintings, then generate abstract versions of Renaissance art.

We wanted to show that anyone can build a working computer vision model quickly. We participated in a 24-hour hackathon where we grabbed 300 images from around the Internet. The images we gathered represented the best-known paintings of the Renaissance, such as works by Michelangelo and da Vinci and portraits of nobles. We wanted to focus on paintings, so we discarded images of sculptures and other 3-dimensional art.

We pre-processed our data using Roboflow by scaling the images down to 32 by 32 pixels. We opted to fit a DCGAN (Deep Convolutional Generative Adversarial Network). A GAN is usually trained on a single category of input images and minimizes loss on two main pieces: the generator and the discriminator.

- The generator turns random noise into candidate images.

- The discriminator decides whether an image is a real example of the category or a generated one.

As the two train against each other, the generator should eventually converge to producing realistic images.
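To make the two losses concrete, here is a minimal sketch in plain Python. It is not the code we ran at the hackathon. It assumes the discriminator outputs a probability between 0 and 1 that an image is real, and it uses the common binary cross-entropy formulation with the "non-saturating" generator loss. The function names (`bce`, `discriminator_loss`, `generator_loss`) are made up for illustration.

```python
import math


def bce(prediction: float, target: float, eps: float = 1e-7) -> float:
    """Binary cross-entropy for one predicted probability and a 0/1 label."""
    # Clamp the probability so log() never sees exactly 0 or 1.
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(real_scores, fake_scores):
    """The discriminator wants real images scored near 1 and generated ones near 0."""
    real_loss = sum(bce(s, 1.0) for s in real_scores) / len(real_scores)
    fake_loss = sum(bce(s, 0.0) for s in fake_scores) / len(fake_scores)
    return real_loss + fake_loss


def generator_loss(fake_scores):
    """The generator wants its images scored near 1, i.e. mistaken for real."""
    return sum(bce(s, 1.0) for s in fake_scores) / len(fake_scores)


# Hypothetical discriminator outputs for a batch of real and generated images.
real_scores = [0.9, 0.8, 0.95]
fake_scores = [0.2, 0.1, 0.3]
print("discriminator loss:", discriminator_loss(real_scores, fake_scores))
print("generator loss:", generator_loss(fake_scores))
```

In a real DCGAN, the scores come from a convolutional network, and each loss is minimized by gradient descent on that network's weights, alternating between the generator and the discriminator.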

Initially, we did not augment our images. In this first trial, the model failed and produced what looked like a lot of noise.

To attempt to improve model performance, we wanted to augment our dataset with reflections and rotations. Because this was a 24-hour hackathon, we didn’t have the time to create a pipeline that would be easily modifiable. With a few clicks in Roboflow, we more than tripled our dataset size via data augmentation. Roboflow saved us hours that we would otherwise spend writing and testing code. This helped us achieve our goal -- we won the hackathon in the social impact category!
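For readers curious what reflections and rotations look like in code, here is a rough sketch in plain Python. It is not what Roboflow runs internally. It assumes each image is a list of pixel rows and that rotations are by 90 degrees, and the helper names (`flip_horizontal`, `flip_vertical`, `rotate_90_clockwise`, `augment`) are hypothetical.

```python
from typing import List

Image = List[List[int]]  # an image as rows of pixel values


def flip_horizontal(image: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def flip_vertical(image: Image) -> Image:
    """Mirror the image top to bottom."""
    return [row[:] for row in image[::-1]]


def rotate_90_clockwise(image: Image) -> Image:
    """Rotate the image a quarter turn clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def augment(images: List[Image]) -> List[Image]:
    """Return each original image plus three transformed copies of it."""
    augmented = []
    for image in images:
        augmented.append(image)
        augmented.append(flip_horizontal(image))
        augmented.append(flip_vertical(image))
        augmented.append(rotate_90_clockwise(image))
    return augmented


# A tiny 2x2 "image" stands in for a 32x32 painting.
tiny = [[1, 2],
        [3, 4]]
dataset = augment([tiny])
print(len(dataset))  # four images for every original
```

Under these assumptions, every original yields four training images, which is one way a dataset can end up more than tripled.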

Below is some of what we generated after training our model on the original and augmented images. The abstractions are less noisy and the colors are much closer to what you might expect from Renaissance paintings.

This isn’t our first time using Roboflow. We’ve used Roboflow’s model library for proprietary object detection work and gotten over 96% mean average precision.

In order to get similar results without Roboflow, we would have to spend hours writing code, then re-writing it for every new application. Roboflow regularly saves us tremendous amounts of time!

Cite this Post

Use the following entry to cite this post in your research:

Matt Brems. (Nov 30, 2020). Generating Renaissance Art with Computer Vision. Roboflow Blog: https://blog.roboflow.com/gan-generating-art-computer-vision/