If you are passionate about AI and love hands-on work with visible results, becoming a computer vision engineer is a great career option.

In this guide, we are going to talk about:

- What a computer vision engineer is;

- The educational requirements for a vision engineer job;

- The skills you will need to be a computer vision engineer;

- The tools of the trade, and more.

Let's get started!

What is Computer Vision?

Computer vision, a branch of AI, allows computers to see and understand the real world. Applications like facial recognition, flaw detection, autonomous vehicles, and medical imaging use computer vision techniques to improve efficiency and accuracy.

What is a Computer Vision Engineer?

Over the last decade, deep learning has brought forth a new revolution in image processing with computers. It is now possible for computers to process image data to classify images, identify objects in images, and segment exact regions of images with incredibly high rates of accuracy.

A computer vision engineer is a developer who specializes in creating software solutions that can extract visual information and insights from images and videos. There are many tasks that a vision engineer may work across, including classifying images, identifying objects in images, segmenting exact regions in an image, finding key points in an image, and more. Computer vision is widely used in industries like robotics, automotive, finance, manufacturing, healthcare, and agriculture.

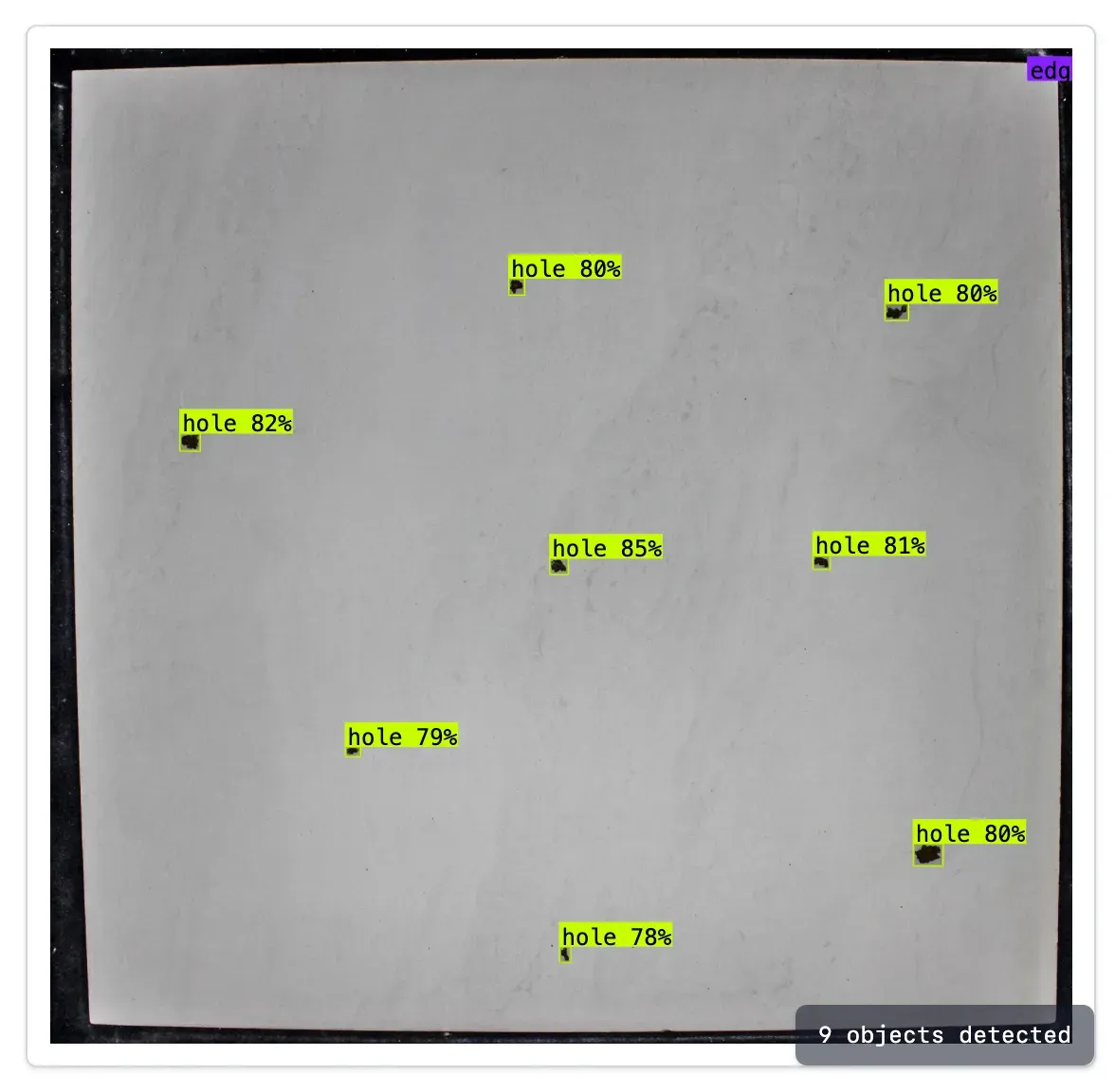

For instance, take a conveyor belt carrying finished products in the manufacturing industry. Computer vision can be used to inspect the products to look for defects such as cracks, scratches, or any minute deformation that might be missed by human inspection to ensure quality and reduce waste.

Computer vision models can perform tasks that humans typically do but with greater efficiency.

Computer vision engineers are experts in using deep learning and traditional, pattern-based vision processes to solve problems. An engineer should be able to take an abstract business problem that involves visual data – for example, identifying defects on an assembly line – and build a system that is able to solve that problem.

A computer vision engineer may build systems to:

- Identify defects in products;

- Identify safety hazards;

- Analyze aerial imagery;

- Identify anomalies in a dataset;

- Monitor defect rates at different points in an assembly line;

- Search large datasets of images;

- And more.

Computer Vision Engineer Responsibilities

Computer vision engineers work closely with business stakeholders to build solutions to problems.

For example, consider a scenario where you are working for a beverage manufacturer who wants to reduce defect rates in bottle caps. The manufacturing staff would outline the problem: the team wants to reduce defect rates. Then, a vision engineer would work to solve the problem with the team. See our guide on how a computer vision engineer would build this exact system.

With this problem statement, an engineer would devise a plan to solve the problem. This may involve documenting existing defects, then training a classification or detection model to identify those defects. This is called “model building”.

The engineer would then work on deploying the model. This involves rolling out the model to the environment in which it will be used. In this example, the engineer would work to ensure the model can run on camera feeds from the assembly line. The model could then be integrated into business logic. For example, the engineer may work with their team to:

- Build an alert system that triggers if the incidence of a particular defect is too high;

- Ensure the assembly line automatically rejects products that do not meet quality standards, and;

- Build a central dashboard that shows defect rates across different parts of a facility.

Once a model has been developed, the engineer would evaluate the model and tune it as needed. For example, if the model struggles to identify a particular defect – for example, a malformed bottle cap – the engineer would use their skills to find out the source of a problem and improve the system.

There are several paths of entry for computer vision engineers. You can study computer science, image processing, or deep learning in university and proceed to work as a computer vision engineer. You can also pursue a career in data science, then transition to computer vision.

For many vision roles – for example, in manufacturing – a degree in computer or data science, or equivalent experience, will be sufficient to find a role.

Skills You Need as a Computer Vision Engineer

Computer vision engineers apply many skills in their day-to-day role. Let’s talk about soft skills first, then technical skills.

Ultimately, the role of a computer vision engineer is to solve problems and think critically about them. This involves taking in lots of information from different stakeholders and using it to come up with a solution to a problem. Often, vision engineers need to explore multiple different solutions, then evaluate which one makes the most sense for a given situation.

Any solution will need to be developed within the parameters given to an engineer. For example, a solution may have to work on particular hardware, achieve at least a specific rate of accuracy, run at a certain speed, and more.

Consider a scenario where you are building a model to identify metal defects. The model may need to achieve 98% or higher accuracy. To reach this level of accuracy, the engineer will have to continually iterate on their system, using critical thinking skills to identify opportunities for improvement. For example, a system could be made more accurate by collecting more representative images, pruning a dataset, using or changing augmentation settings, and more.

In terms of technical skills, vision engineers need to have a strong grasp of machine learning foundations and programming. Here are a few technical skills you can expect to need as a computer vision engineer:

- A strong understanding of Python

- The ability to train a computer vision model

- Knowledge of ways in which a model can be deployed

- Knowing how to improve a vision model

- Writing reports and documents that outline how a vision system works

There are several software libraries with which you may need experience, too. These include:

- OpenCV

- Transformers (for deep learning tasks)

- Any vision model library (i.e. MMDetection)

- Deployment infrastructure (i.e. Inference)

- Libraries that help you process predictions (i.e. Supervision)

Educational Background and Prerequisites for Becoming a Computer Vision Engineer

The traditional route to becoming a computer vision engineer begins with choosing a relevant college major like computer science or information technology. You can also use online courses to learn about computer vision if you already have programming knowledge or a technical background.

In any case, whether you attend a course or college, you will learn about key topics like machine learning, data structures, deep learning, and image processing techniques.

Having a strong mathematical foundation, particularly in linear algebra, calculus, probability, and statistics, would help you brainstorm solutions to real-world problems.

In your studies, you will learn Python and various visual processing libraries, such as:

- OpenCV: Used for image processing, it provides tools to manipulate images and videos, like detecting objects, faces, and features.

- TensorFlow: A popular framework for machine learning and deep learning, particularly useful for building and training neural networks.

- PyTorch: Another deep learning framework, favored for its flexibility and ease of use when experimenting with neural network models.

- Supervision: Provides reusable computer vision tools, which are crucial for developing computer vision solutions.

- Transformer: A library that gives developers access to pre-trained models for tasks across various domains like computer vision, natural language processing, and audio.

In some cases, you might also need to learn other languages like C and C++, especially when working on performance-critical parts of a project, such as optimizing code for real-time processing or interfacing with hardware (e.g., cameras, sensors). These languages are often used in embedded systems and environments where speed and efficiency are vital.

That said, many computer vision engineers take a different path. So, even if you didn’t study computer science or IT, don’t worry - you can still break into the field. If you're new to coding, it’s completely possible to learn the essential programming languages and tools along the way. For beginners, check out this guide on computer vision Python packages to start and get your hands dirty with real-world projects.

Core Skills Required for a Computer Vision Engineer

Coding and mathematical skills are the building blocks for computer vision. Along with that, a strong understanding of core computer vision concepts like image processing, object segmentation, and machine learning is key to solving real-time and practical applications.

Image Processing

Image processing is a fundamental concept used in computer vision applications to manipulate, enhance, and analyze digital images. It is particularly useful in computer vision because it helps refine digital images for advanced analysis. Most image processing techniques make pixel-level changes to images, like adjusting their intensity to brighten or darken them.

Here are some more examples of image processing techniques:

- Filtering involves removing noise and unwanted elements to enhance an image, with Gaussian filters being widely used for this purpose.

- Edge detection creates boundaries between different objects by identifying discontinuities that correspond to various objects or regions through intensity variations between neighboring pixels.

- Object segmentation isolates distinct objects in an image using parameters like color, shape, texture, and depth.

- Thresholding is primarily applied to grayscale images, converting them into binary images based on a predefined threshold value. Pixels below this threshold turn black (0), while those above become white (1).

Machine Learning and Deep Learning

Machine learning and deep learning models in computer vision make it possible for computers to perform tasks usually done by humans, such as scene classification and medical diagnostics. These models often involve convolutional neural networks (CNNs). CNNs consist of multiple layers, each extracting different information from images, that can help with computer vision tasks like object detection, segmentation, and classification.

An Example of Object Detection (Source)

Another commonly used model is Generative Adversarial Networks (GANs). These networks feature two components: the generator, which creates new samples, and the discriminator, which evaluates their authenticity. GANs are primarily used for image generation and data augmentation.

Algorithms and Optimization Techniques

When it comes to computer vision, it’s essential to know the ins and outs of algorithms, optimization techniques, and performance tuning for various applications. These factors directly impact how accurate and fast detection is, which is important for real-time innovations like autonomous driving and surveillance systems. These applications have very little room for latency; data must be captured, processed, and acted upon within microseconds. Optimization techniques such as pruning, model distillation, and quantization can help you meet time constraints without sacrificing accuracy.

It’s also important to know what works best for different scenarios. For example, both MobileNet and VGG16 are convolutional neural network (CNN) models that can be used for image classification and object detection.

While they can produce similar results, MobileNet is 32 times smaller and ten times faster than VGG16. MobileNet is ideal for mobile and embedded devices with limited computational power, whereas VGG16 is better suited for high-accuracy tasks without computational constraints. Knowing these technical details is key because choosing the right model can make a huge difference in overall performance and efficiency.

Computer Vision Tools and Frameworks You Should Master

If you are a beginner, starting with the Roboflow notebooks in our GitHub repository is a great choice. They provide step-by-step guides for various computer vision tasks, with detailed explanations, ready-to-use Python templates, and video tutorials to help you along the way.

It’s also a good idea to be familiar with frameworks like OpenCV. OpenCV serves as a foundational framework for computer vision models. It is open-source and accessible for contributions. Alongside OpenCV, mastering TensorFlow, PyTorch, and Keras is beneficial. They are widely used for tasks like object detection and natural language processing. For deploying models, cloud services like AWS, Google Cloud AI, and Microsoft Azure provide the necessary infrastructure and resources.

Computer Vision Certifications and Courses

Structured online computer vision courses from platforms like Roboflow, Udacity, edX, or Coursera are great resources for learning new skills. The Nanodegree Computer Vision Program by Sebastian Thrun on Udacity is particularly valuable for beginners, covering essentials like CNNs, Image Classification, and Cloud Computing. Updated in October 2024, it includes the latest advancements. Similarly, Andrew Ng’s Deep Learning Specialization course provides extensive knowledge on neural networks and optimization, though it requires a solid foundation.

If you are new to coding, you can try online courses like “Introduction to Computer Vision and Image Processing by IBM” on Coursera. It is a beginner-friendly course with no or little programming skills needed. It covers image processing, machine learning, CNNs, object detection, and a real-time project. For advanced learners, Stanford’s Deep Learning for Computer Vision course offers a deep dive into recent industry developments.

For more sophisticated hands-on learning, explore this GitHub repository for a list of courses, and refer to Roboflow Learn for a dedicated learning platform. On Roboflow Learn, you can find a range of resources, including blog posts covering topics from the basics of computer vision to practical applications like controlling OBS Studio. The platform also features many videos and tutorials on training computer vision models, along with a dedicated YouTube channel for in-depth content.

To strengthen and enhance your skills, you can also consider obtaining certifications such as Google’s TensorFlow Developer Certificate or Microsoft’s Azure AI Engineer Associate Certificate.

Building a Strong Portfolio as a Computer Vision Engineer

Once you have mastered the basics, you can start working on open-source or personal projects to build your portfolio. There are various great options to explore. You can sign up for Kaggle, a data science competition platform, to take part in various competitions and refine your skills. Or, you can train and test your custom model on no-code training platforms to upskill your problem-solving and creative thinking capabilities.

Contributing to Supervision is another interesting way to help build your credibility. As we saw earlier, Supervision is a Python package that offers a suite of reusable tools to streamline development. It enables users to use computer vision for various tasks, including object detection, segmentation, annotation, and visualization. For example, Supervision libraries can help you detect small objects in large areas.

Similarly, contributing to GitHub repositories and OpenCV can give you valuable experience. After working on personal and open-source projects, the Vision AI community encourages applying for internships in computer vision roles. Focus on industries like robotics, autonomous vehicles, or healthcare, where the demand for computer vision engineers is high.

Networking and Staying Updated

Porter Gale, a well-known networker and entrepreneur, has emphasized the value of connections by saying, “Your network is your net worth.”

Networking is a key skill, not just in computer vision but in any profession. By attending events like the Conference on Computer Vision and Pattern Recognition (CVPR) or the European Conference on Computer Vision (ECCV), you can build connections and keep you updated on industry trends. You can join also online communities on platforms like LinkedIn, Stack Overflow, Reddit, and GitHub for networking and collaboration. Being active in online communities and sharing insights can help you build your professional network.

Computer Vision Career Path and Job Opportunities

As an entry-level computer vision engineer, you can expect to primarily focus on data labeling, basic algorithms, and collaborating closely with senior engineers. Your responsibilities will also include staying updated on industry trends, assisting team members with using and training computer vision models, and maintaining models thorough documentation.

You can then progress onto a senior role, which will involve more complex modeling, leading projects, and working more closely with organizational data pipelines for use in vision applications.

According to Indeed, the average base salary for a computer vision engineer in the USA is $122,948 per year, with a range of $72,761 to $207,752. In England, the average salary is $94,687 per year. As mentioned in Geeksforgeeks, in terms of experience, entry-level engineers (0-2 years) can get $8000 to $12000 per month. Mid-level engineers (3-5 years) can earn $13000 to $18000 monthly. Senior-level engineers (6+ years) can get $19000+ per month. However, the salary range can depend on factors like location, company, and technical skills.

Industries that Employ Computer Vision Engineers

Computer vision engineers are hired across many sectors of the economy. Let’s talk through a few industries that employ computer vision engineers.

Manufacturing

Computer vision has been used extensively in manufacturing processes over the last few decades. Computer vision is commonly used to identify defects in products and to ensure specific quality standards are met.

If you are working for a manufacturing organization, you can expect to build systems that identify defects, identify safety hazards, and assure the quality of a product manufactured on an assembly line. You may also work on quality assurance systems for manual assembly.

Logistics

As a computer vision engineer at a logistics company, you may build systems to:

- Identify packages as they enter a facility and automatically add them to an inventory system;

- Route packages based on given parameters (i.e. package size, letter vs. box);

- Build systems that check when intermodal containers arrive at a shipping yard, and more.

Retail

Retailers can use computer vision to ensure shelves are fully and properly stocked. As a computer vision engineer working for a retail company, you may build a system that ensures shelves meet a planogram (a diagram that states where different products should appear on shelves throughout a store).

You may also work on building systems directly for use by customers, such as self-service stores that can track what you have purchased without having to use a checkout.

Other Industries

There are many other industries in which computer vision is used, including:

- Defense

- Medicine

- Agriculture

- Security

- Construction

For some industries, such as defense and medicine, you will have to have extensive education in the subject matter area as well as how computer vision applies to that subject before working as an engineer. In other industries, such as manufacturing and retail, extensive knowledge of computer vision is enough to find a role.

Become a Computer Vision Engineer

On a given day, a computer vision engineer may start developing a new model to identify defects (i.e. for an assembly line), work to improve the performance of an existing model, monitor model performance, deploy models to a new device, and more.

Computer vision is a rapidly evolving field that requires continuous learning and hands-on experience. To excel in this field, stay updated on the latest advancements, experiment with different models and datasets, and develop strong problem-solving skills. Engage with the computer vision community by attending workshops and conferences.

Building a strong portfolio, networking with industry professionals, seeking mentorship, and pursuing relevant certifications can help nurture your career. Along the way, contributing to open-source projects and staying active on platforms like GitHub can showcase your expertise in this field. Build practical skills, create real-time solutions for real-world problems, and start your journey toward becoming a computer vision engineer!

Cite this Post

Use the following entry to cite this post in your research:

Contributing Writer. (Oct 30, 2024). How to Become a Computer Vision Engineer. Roboflow Blog: https://blog.roboflow.com/how-to-become-a-computer-vision-engineer/