Moondream 2 is a “tiny vision language model” developed by vikhyat. The model comes in two sizes: 2B and 0.5B. The 2B model offers strong performance on a range of general vision tasks like VQA and object detection, whereas the 0.5B model is ideal for use on ‘.

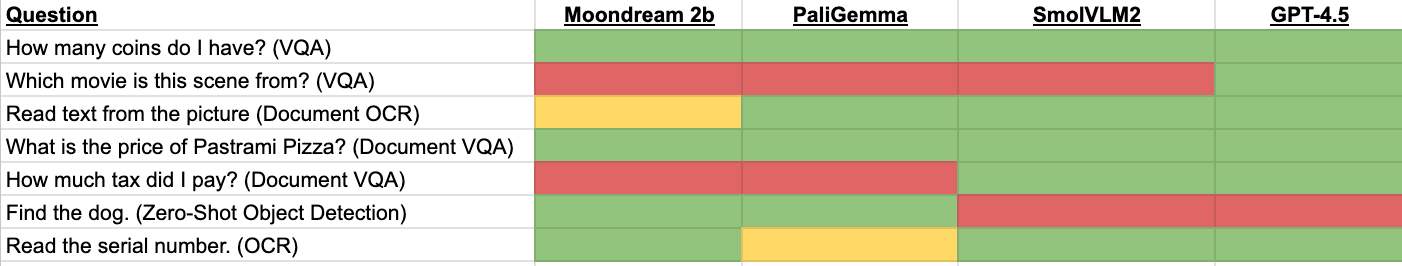

We evaluated Moondream 2 on the qualitative set of tests we use to evaluate multimodal vision models. In this guide, we are going to walk through the results of our analysis. Curious to see a summary of our results? Here is a table showing how Moondream 2 compares to other vision language models on our tests:

Moondream passed four of the seven tests we ran. Moondream did not pass the movie VQA and receipt OCR tasks. It missed one letter from the document in our document OCR task.

What is Moondream 2?

Moondream 2 is the latest model in the Moondream series of “tiny vision language models”. The model, developed by vikhyat, is trained to perform a wide range of tasks, from VQA to image captioning to object detection and calculating x-y points of regions in an image. The model is licensed under an Apache 2.0 license.

Moondream 2 can be run on both CPU and GPUs. You can run the model with the moondream Python package or through the Hugging Face Transformers Python package. The moondream Python package does not support GPUs at the time of writing this guide according to the project repository, although this may change in the future.

The Moondream transformers implementation has four modes of inference:

- caption() (Image captioning)

- query() (VQA)

- detect() (Object detection)

- point() (Calculate x/y coordinates of a region in an image)

At the time of writing this post, the latest model checkpoint was released on 2025-01-09.

Evaluating Moondream 2 for Multimodal Tasks

We evaluated Moondream 2’s multimodal support on several tasks, including:

- Zero-shot object detection

- Object counting

- Visual question answering (VQA)

- Document OCR

- Document VQA

- Real-world OCR

Below, we show our results from each test.

We run our analysis on a T4 GPU in Google Colab using the 2025-01-09 checkpoint of the vikhyatk/moondream2 project via the Hugging Face transformers package.

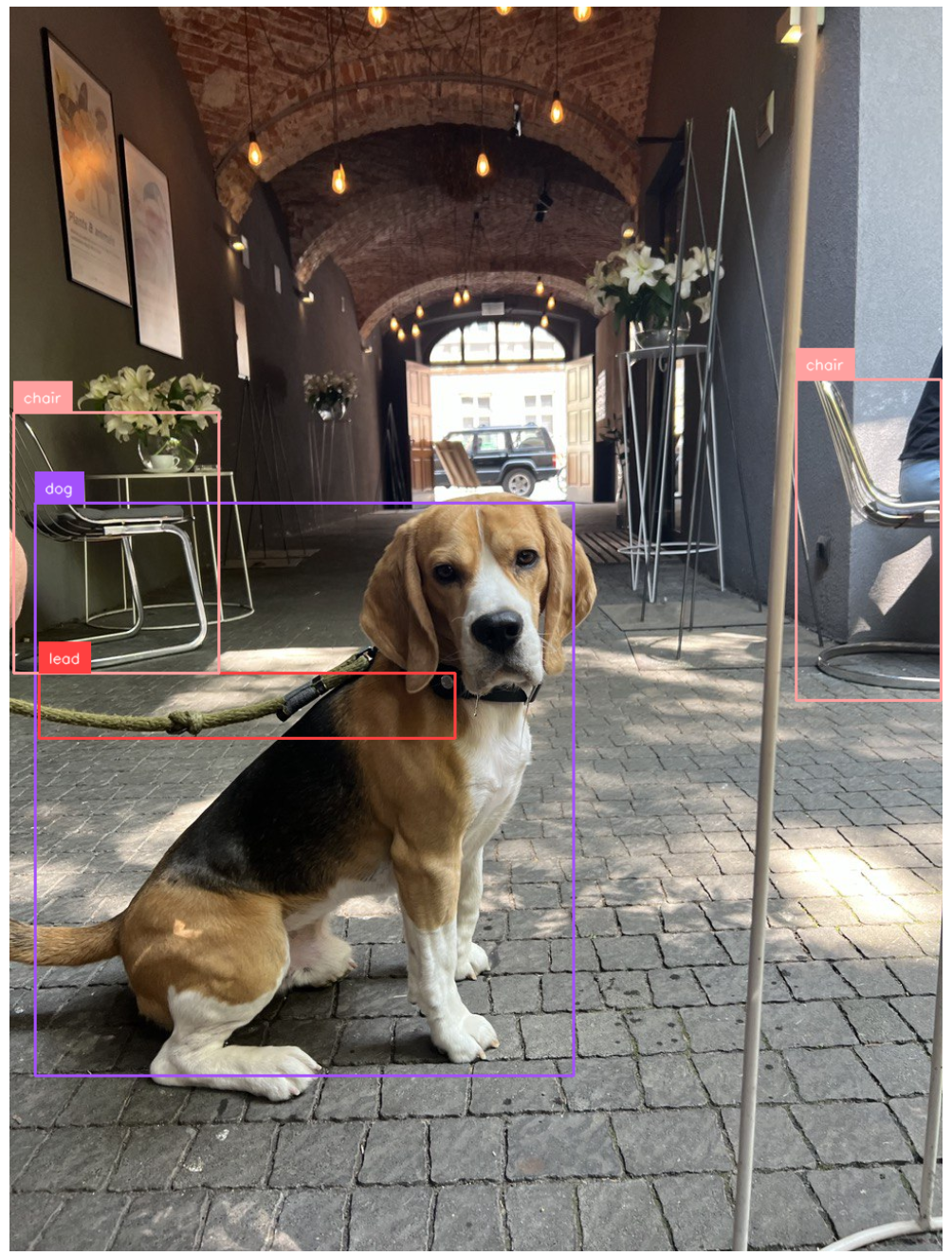

Moondream 2 Zero-shot object detection

Moondream 2 has impressive zero-shot object detection capabilities. Whereas many multimodal language models we have tested have struggled with zero-shot detection – including OpenAI o3-mini, SmolVLM 2.0, and Claude 3 Opus – Moondream was designed with detection in mind.

We provided Moondream an image and asked the model to identify the following objects:

- dog

- lead

- Chair

You need to make one detect() call per class that you want to identify. You cannot yet provide multiple prompts in the same detect() call. With that said, we run detect() calls for each class, merge the results, and plot them on our input image.

Here is the image we provided:

Moondream returned the following results:

dog [{'x_min': 0.02783203125, 'y_min': 0.39892578125, 'x_max': 0.60498046875, 'y_max': 0.85888671875}]

lead [{'x_min': 0.0322265625, 'y_min': 0.53515625, 'x_max': 0.4775390625, 'y_max': 0.587890625}]

chair [{'x_min': 0.84326171875, 'y_min': 0.2998046875, 'x_max': 0.99853515625, 'y_max': 0.5576171875}, {'x_min': 0.00439453125, 'y_min': 0.326171875, 'x_max': 0.22412109375, 'y_max': 0.53515625}]With this information, we scaled the coordinates and plotted them on our input image with the open source supervision Python package.

Here is the result of the coordinates when plotted on the image:

The model successfully identifies the locations of the objects in the image.

For reference, here is the code we used to plot the results from our model:

import cv2

import supervision as sv

import numpy as np

image = Image.open("lmms-tests/questions/simple-doge.jpeg")

# scale coords from relative to absolute

scaled = []

class_ids = []

classes_to_find = ["dog", "lead", "chair"]

for cls in classes_to_find:

result = model.detect(image, cls)["objects"]

for r in result:

x_min = int(r["x_min"] * image.width)

y_min = int(r["y_min"] * image.height)

x_max = int(r["x_max"] * image.width)

y_max = int(r["y_max"] * image.height)

scaled.append([x_min, y_min, x_max, y_max])

class_ids.append(classes_to_find.index(cls))

detections = sv.Detections(xyxy=np.array(scaled), class_id=np.array(class_ids))

labels = [

f"{classes_to_find[class_id]}"

for class_id in detections.class_id

]

box_annotator = sv.BoxAnnotator()

label_annotator = sv.LabelAnnotator()

annotated_image = box_annotator.annotate(

scene=image, detections=detections)

annotated_image = label_annotator.annotate(

scene=annotated_image, detections=detections, labels=labels)

annotated_imageMoondream 2 object counting

We started by asking Moondream 2 to count the number of coins in an image. We provided the following image and asked ”How many coins do I have?”:

The model returned:

There are four coins in the image.

This is the correct answer.

Moondream 2 Visual question answering (VQA)

We then asked Moondream 2 to identify the scene in a screenshot taken from a popular movie. We provided the following image and asked “Which movie is this scene from?”:

The model replied “This scene is from the movie "The Kid." Some other open source models we have tested have struggled with this test, such as SmolVLM2 and PaliGemma.

We then asked the model to answer a question about a menu. We provided the following screenshot of a digital menu and asked the model “What is the price of Pastrami Pizza?”:

Moondream 2 returned:

$27

The model successfully answered the question.

Moondream 2 Document OCR

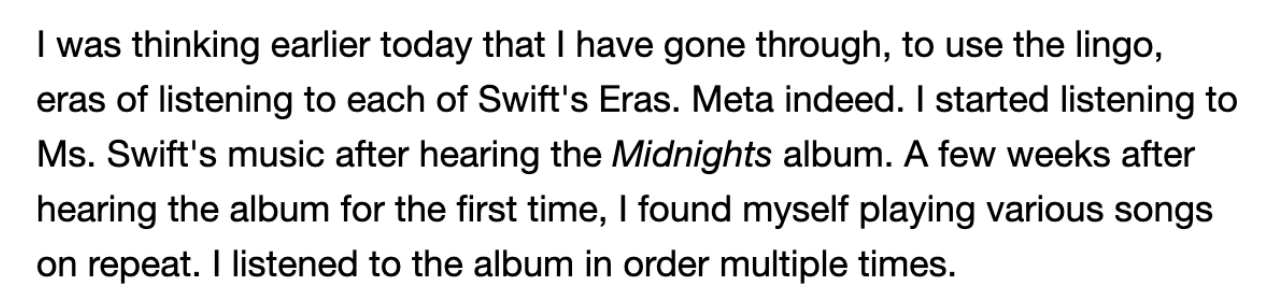

We then tested Moondream 2 on a document OCR task. We provided the following screenshot of a text document and asked Moondream to “Read the text”:

Moondream 2 returned:

I was thinking earlier today that I have gone through, to use the lingo, eras of listening to each of Swift's Eras. Meta indeed. I started listening to Ms. Swift's music after hearing the Midnight album. A few weeks after hearing the album for the first time, I found myself playing various songs on repeat. I listened to the album in order multiple times.

The model was almost correct. It missed the “s” in the word “Midnights”. It also did not italicise the word Midnights. The original image contains the word Midnights in italics.

Moondream 2 Document question answering

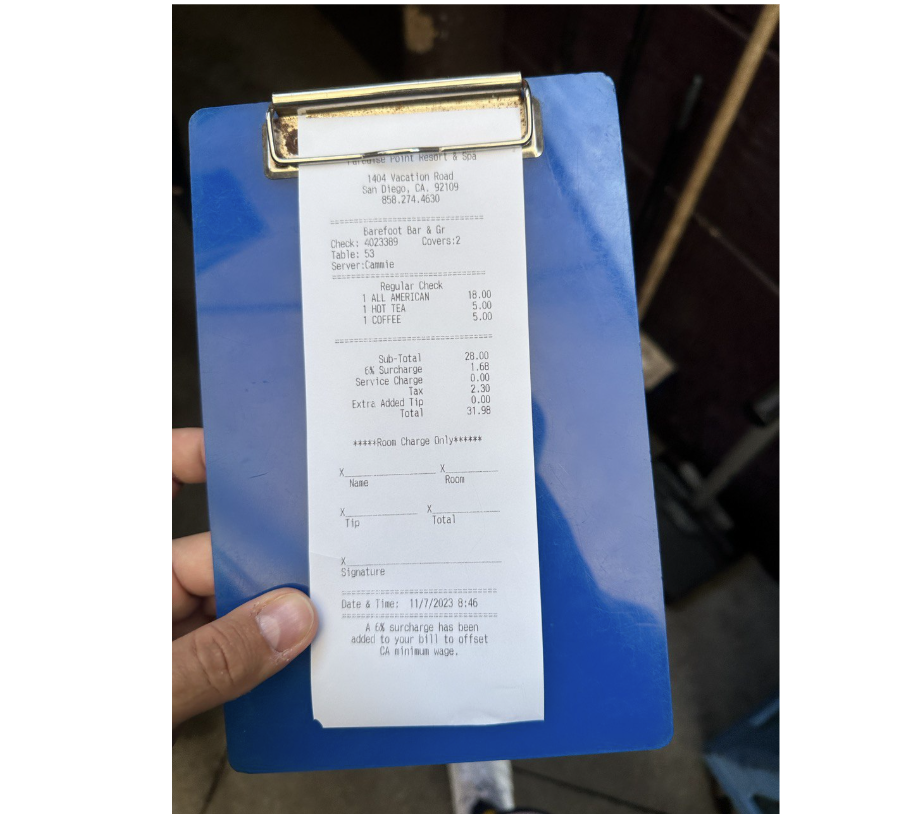

We then challenged Moondream 2 to identify a number on a receipt. We provided the following image and asked “How much tax did I pay?”:

In query() model, Moondream 2 returned $0.00. The model failed the test.

With that said, we were curious to see what the caption mode would return.

In the short caption mode, Moondream returned:

A blue clipboard holds a receipt from Barefoot Bar & Grill, displaying a total of $31.98, with a 5% surcharge and a 6% minimum wage added.

The model correctly identified the business from which the receipt was issued, and the total. The model hallucinated the “5% surcharge and a 6% minimum wage added” part of the response. The receipt contains a 6% surcharge but no “minimum wage” value.

The caption did not contain the tax due, but this is reasonable because we were unable to prompt for this information specifically.

Moondream 2 Real-world OCR

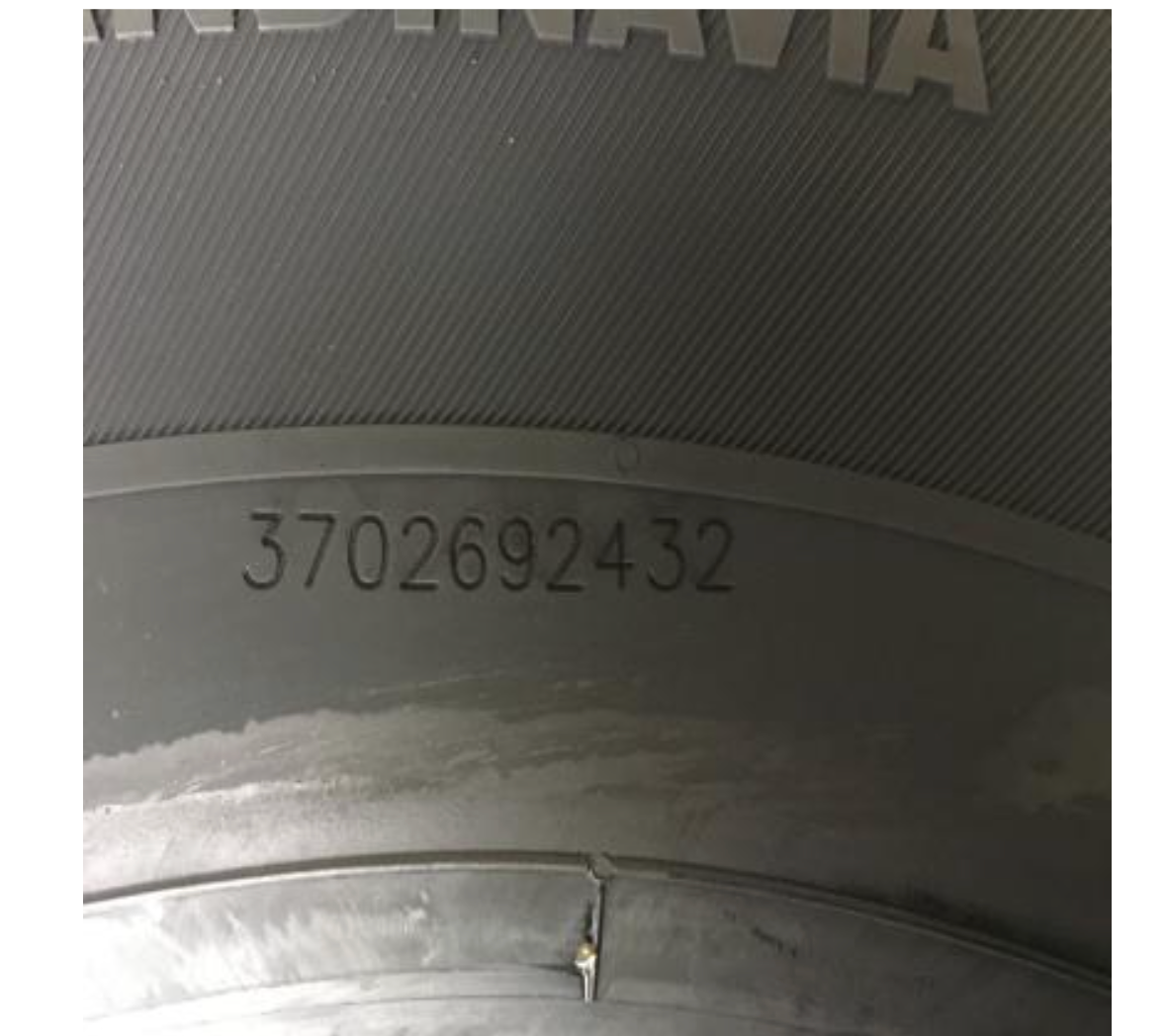

Finally, we tested Moondream 2 on a real-world OCR task. We provided the following image of a tire and asked the model to “Read the serial number”:

The model returned:

3702692432

Our model successfully returned the serial number.

Conclusion

Moondream 2 is a family of tiny, multimodal vision models. You can use Moondream 2 for visual question answering, image captioning, zero-shot object detection, and x-y point region calculation.

In this guide, we tested Moondream 2 on a wide range of tasks, including object detection, VQA, receipt OCR, and real-world OCR. Moondream 2 passed four of our seven tests. The model was particularly impressive at zero-shot detection, a task at which other multimodal models struggle.

Curious to learn more about how other multimodal models perform on our tests? Check out our list of multimodal model blog posts in which we analyze the capabilities of various new and state-of-the-art multimodal models.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Mar 11, 2025). Moondream 2: Multimodal and Vision Analysis. Roboflow Blog: https://blog.roboflow.com/moondream-2/