On January 31st, 2025, OpenAI released o3-mini, the latest model in their reasoning model series. OpenAI o3-mini has been "optimised for STEM reasoning" and achieves "clearer answers, with stronger reasoning abilities" than the O1 model series released last year.

When the model was released, input methods were restricted to text. But, on February 12th, various Redditors noticed that OpenAI started to quietly roll out multimodal support for the OpenAI o3-mini model series. OpenAI then confirmed the update on X. This support means that you can now upload an image to an O3 model and retrieve a result. Note that as of this writing, vision capabilities are not available via API.

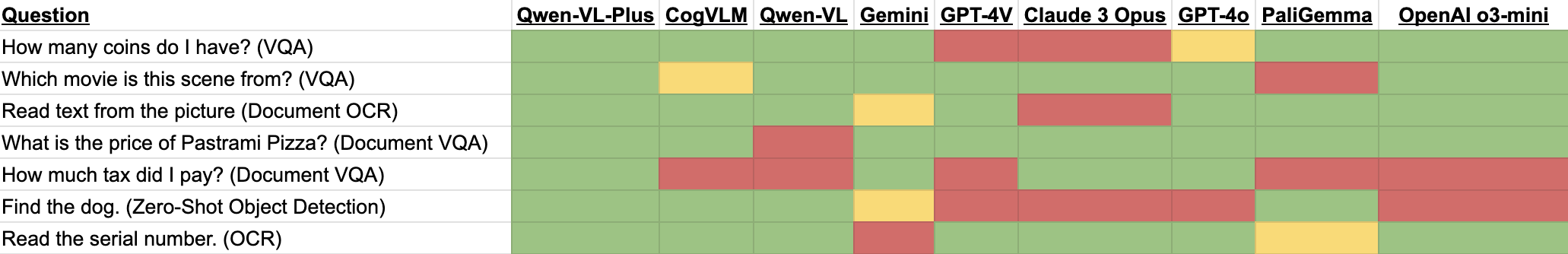

The Roboflow team has tested OpenAI o3-mini on several standard tasks we use to evaluate the breadth of tasks with which a multimodal model may be able to be used. In summary, O3 passed all five of seven of our tests. It failed at document VQA on a receipt and zero-shot object detection of a common object.

Here are our results in context of other models we have tested:

Our results with analysis below.

o3-mini Models and Support

o3-mini comes in three versions:

- O3 Low

- O3 Medium

- O3 High

All of these models are designed for complex reasoning, where the model will use chains of thought to analyze a question before rendering an answer. This can lead to more thoughtful answers than the output offered by models.

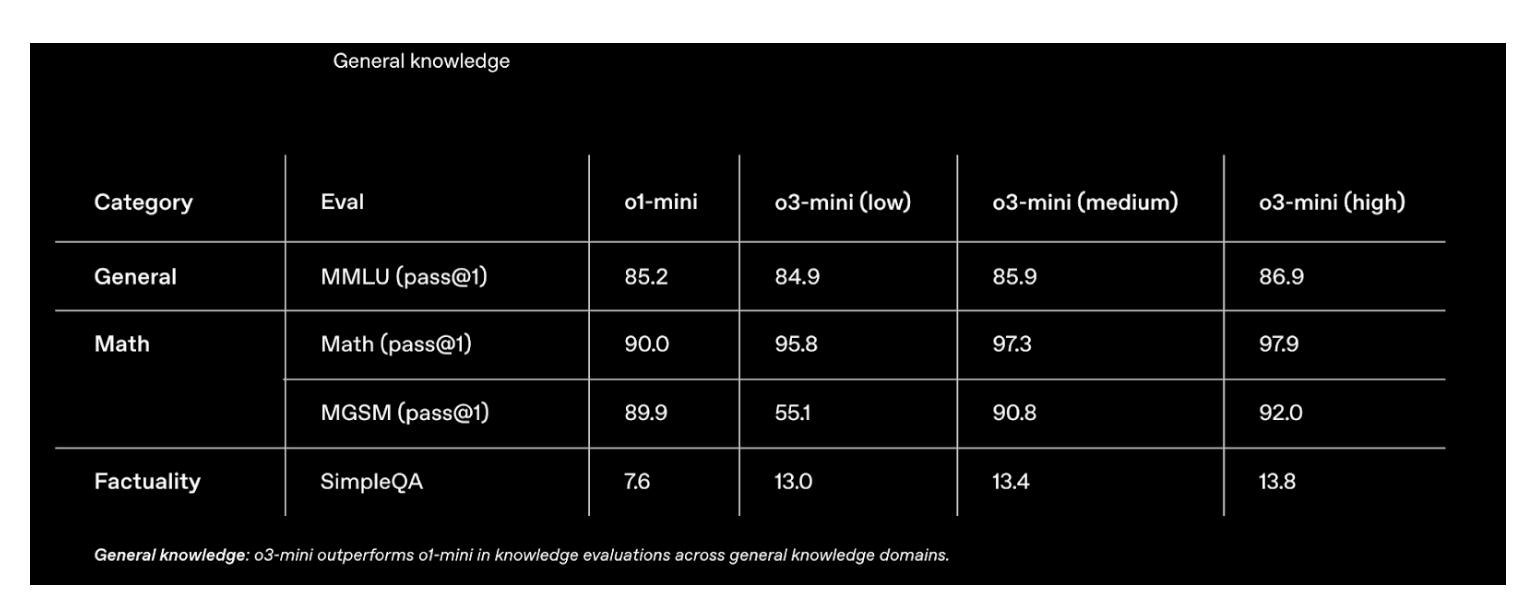

Each model achieves progressively better scores on the benchmarks OpenAI used to evaluate the model. You can read more about the benchmarks run on the model in OpenAI’s o3-mini launch post. Here is an example showing 03-mini High achieve better performance than o1-mini on all tested tasks.

All of these model versions now support multimodal input from the ChatGPT web interface (although support may still be rolling out at the time of publishing this guide).

With that said, in our tests of the OpenAI API, we were not able to get O3 to accept image inputs. When testing o3-mini, we received this error:

openai.BadRequestError: Error code: 400 - {'error': {'message': 'Invalid content type. image_url is only supported by certain models.', 'type': 'invalid_request_error', 'param': 'messages.[0].content.[1].type', 'code': None}}

We expect this will change as the model rolls out completely.

03-mini should not be confused with O3, another reasoning model by OpenAI but one that has not been released yet. O3 is expected to become part of a “unified” model release in the future.

OpenAI o3-mini Multimodal Tests

We evaluated OpenAI o3-mini’s multimodal support on several tasks, including:

- Object counting

- Visual question answering (VQA)

- Document OCR

- Document VQA

- Zero-shot object detection

- Real-world OCR

Below, we show our results from each test.

Our tests were run using “o3-mini”, which we assume to be the base OpenAI o3-mini. We used the ChatGPT web interface for our testing.

Object counting with OpenAI o3-mini

A task with which multimodal models often struggle is object counting. To probe at how OpenAI o3-mini does with this task, we asked the o3-mini model “How many coins do I have?” with the following input image:

The model answered:

It looks like there are four coins in the photo.

The model successfully responded to the question and answered correctly.

Visual question answering (VQA) with OpenAI o3-mini

A common use case for multimodal models like OpenAI o3-mini is answering questions about the contents of an image. To test o3-mini for visual question answering, we asked “What movie is this from?” with the following image from Home Alone:

The model reasoned for 11 seconds and returned:

It’s a still from the original Home Alone (1990).

The model correctly answered the question.

Document OCR with OpenAI o3-mini

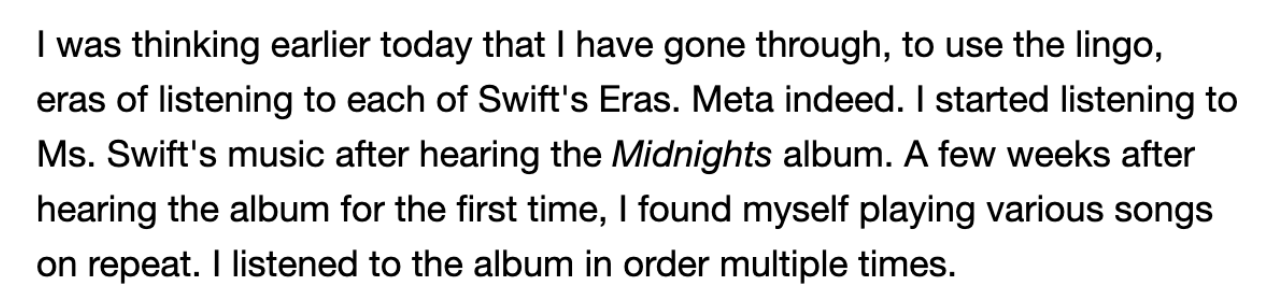

Multimodal models have proven effective for a wide range of document OCR use cases. Curious to see how OpenAI o3-mini performs, we uploaded the following screenshot with the prompt “Read text from image.”:

The model returned the text verbatim, except for missing the italics for the “Midnights” word.

Document question answering with OpenAI o3-mini

Another common use case for multimodal models is document question answering. This involves providing a document as an input and asking for a specific piece of information from the document.

To test OpenAI o3-mini at this task, we provided the following input image and asked “How much does Pastrami Pizza cost?”:

The model “reasoned for a couple of seconds” and returned:

According to the menu in the image, the Pastrami Pizza costs $27.

The model provided the correct answer. Of note, the model both acknowledged the type of document that had been uploaded – a menu – as part of its answer. This hints at the degree to which the model understood the type of document with which it was working.

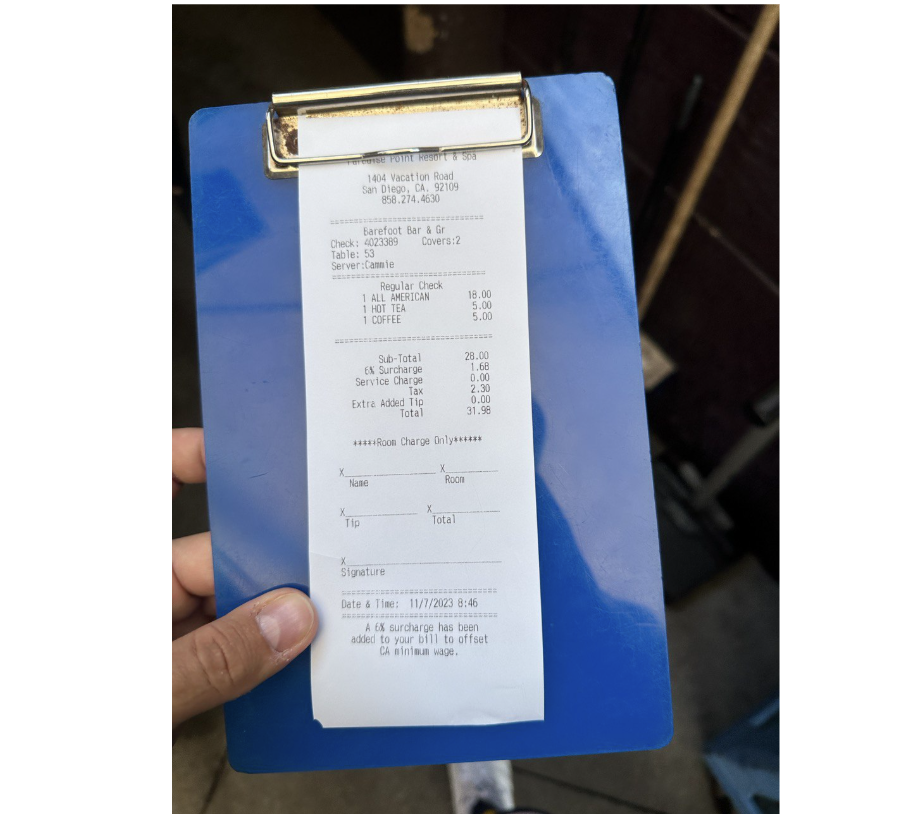

We also evaluated how the model performed at answering a question about a receipt. We uploaded the following receipt and asked “How much tax did I pay?”:

After reasoning for 32 seconds, the model answered:

> From the line labeled “Tax” on your receipt, it shows $2.18 in tax.

The model was incorrect. The correct answer was $2.30. This is a task at which some multimodal models struggle. For instance, we noticed Claude 3 struggled with this asks at its launch. GPT4-V could not complete this task at launch. PaliGemma struggled with receipts without fine-tuning.

Real-world OCR with OpenAI o3-mini

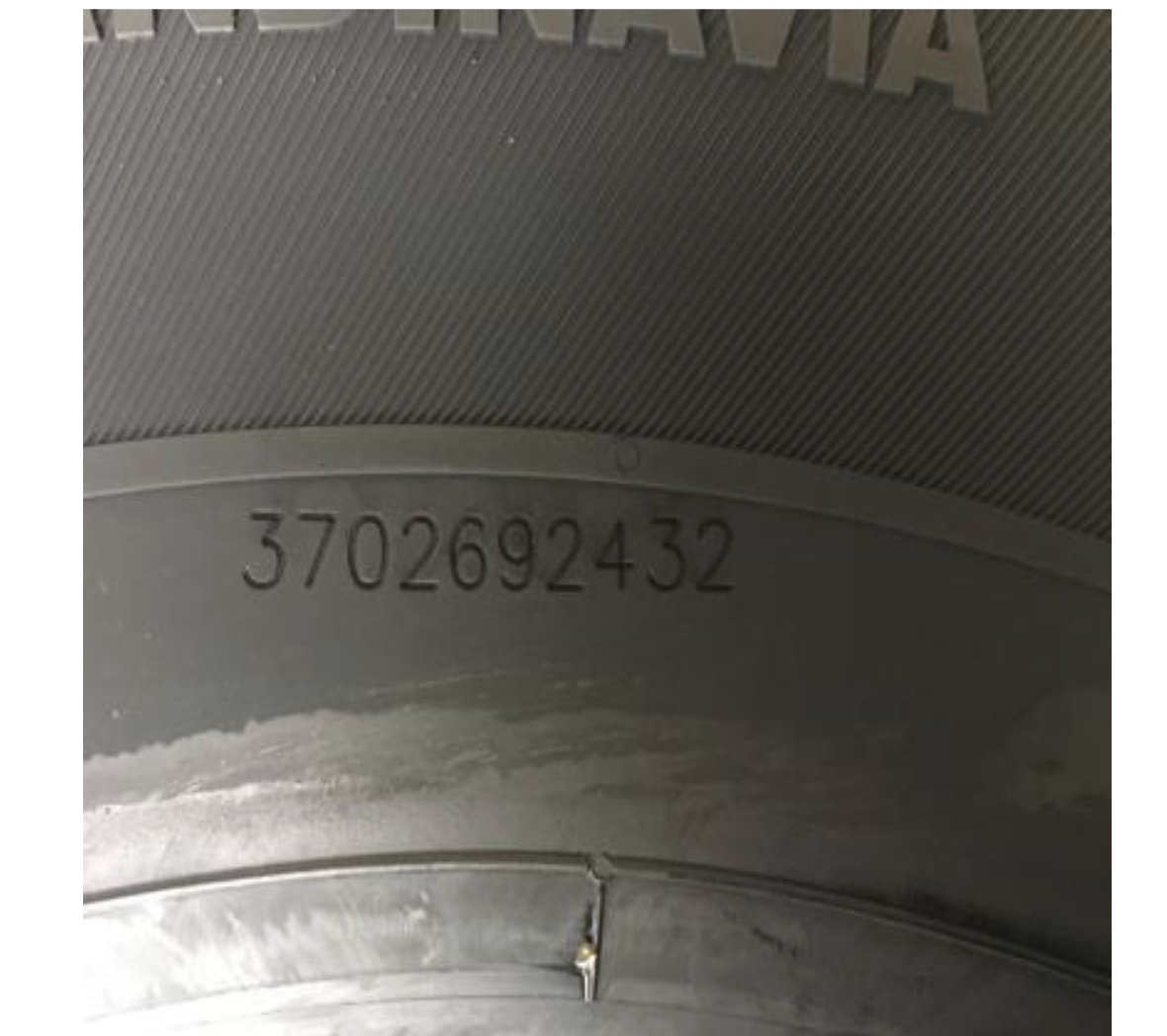

We also tested OpenAI o3-mini at reading serial numbers, an example of a “real-world” OCR task similar to the receipt reading example we explored in the last section.

We provided the following image to OpenAI o3-mini and asked “Read the serial number.”:

The model reasoned for “a couple of seconds” and returned:

It appears the serial number shown in the image is 3702692432.

O3 successfully read all the digits in the serial number.

Zero-shot object detection with OpenAI o3-mini

Zero-shot object detection involves using a model to identify the location of an object without fine-tuning the model. This is a task with which many multimodal models struggle out-of-the-box, unless they have been designed specifically for object detection.

We have found previous OpenAI models to have limited zero-shot object detection capabilities. Of note, you can fine-tune GPT-4o for object detection which lets you achieve better performance. But, we still find fine-tuning a smaller model dedicated to object detection – for example, YOLO11 – to be more effective.

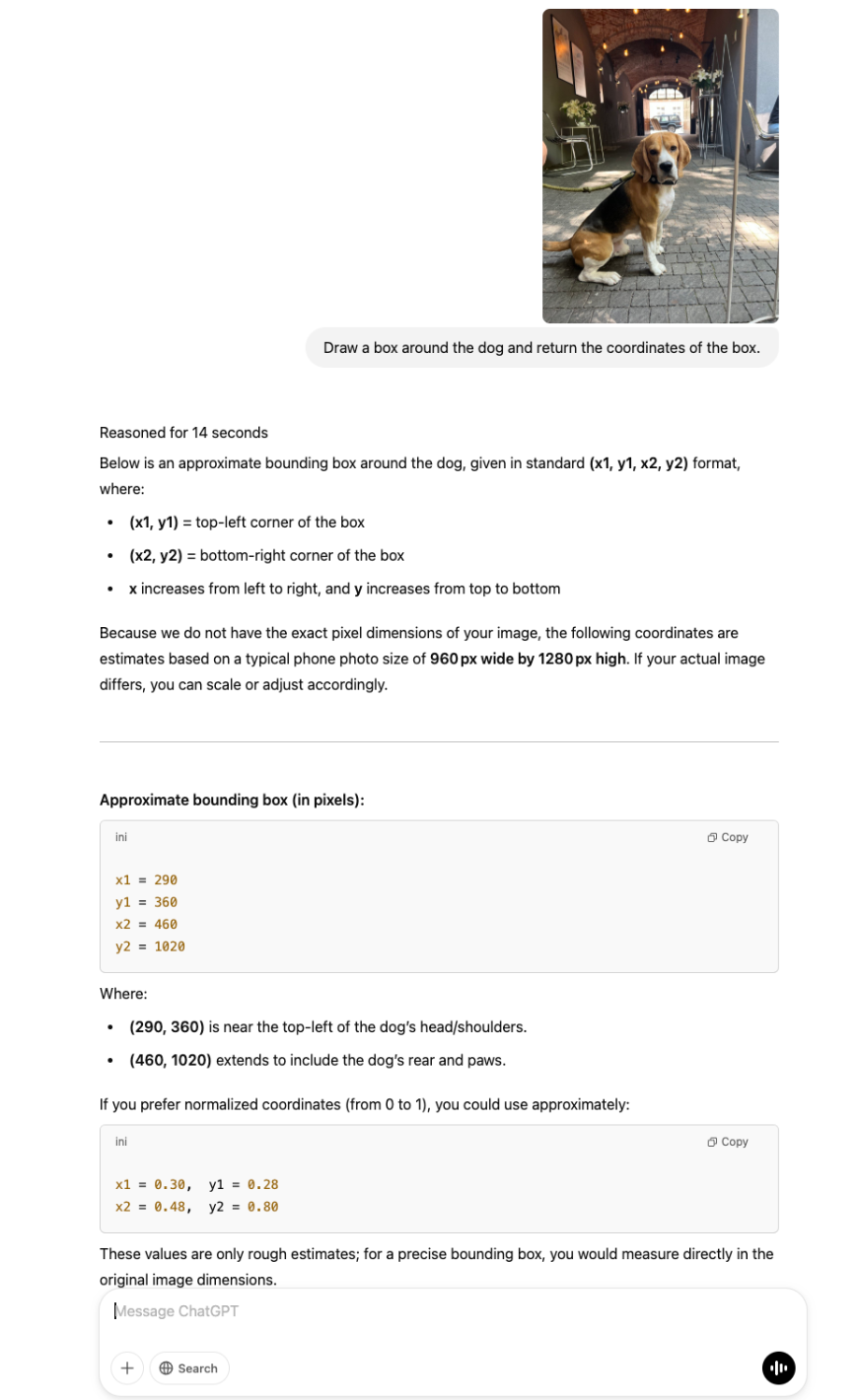

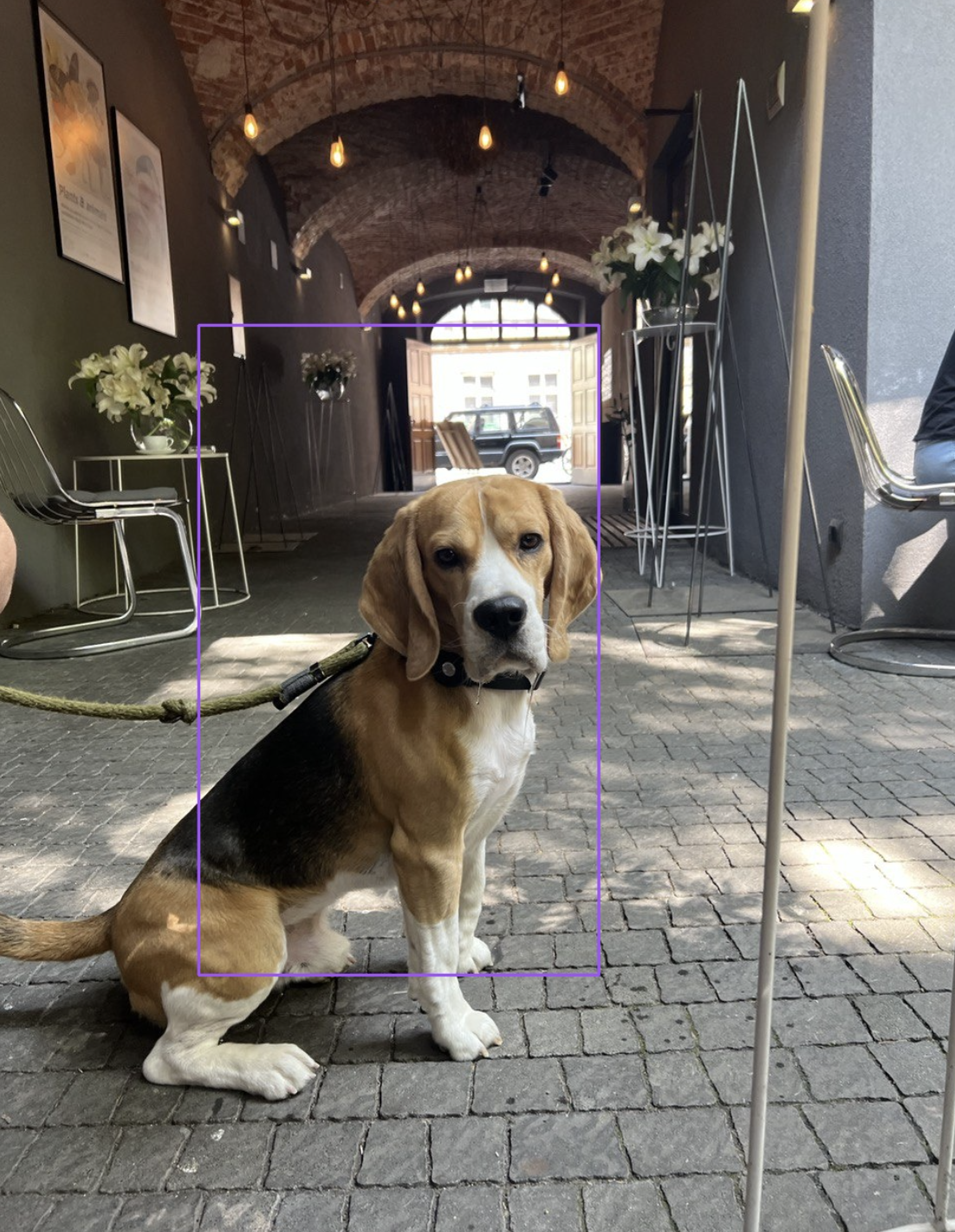

We provided the following image asked “Draw a box around the dog and return the coordinates of the box”:

O3 reasoned for 14 seconds and returned a long response walking through the structure of bounding box coordinates it returned, and more.

The model acknowledged that because it did not have the image size, it would estimate the size of the image. This shows that OpenAI’s model must not pass in image resolution during the reasoning process.

The full result was:

We plotted the x1, y1, x2, y2 coordinates on an image and got the following:

While the model was able to identify the general location of the dog in the image, the bounding box coordinates are not precise; they miss most of the dog, and include additional background that does not relate to the dog.

We then used the prompt:

Draw a box around the dog and return the coordinates of the box. The image resolution is:

height 1280

width 960

This prompt includes the exact resolution of the image.

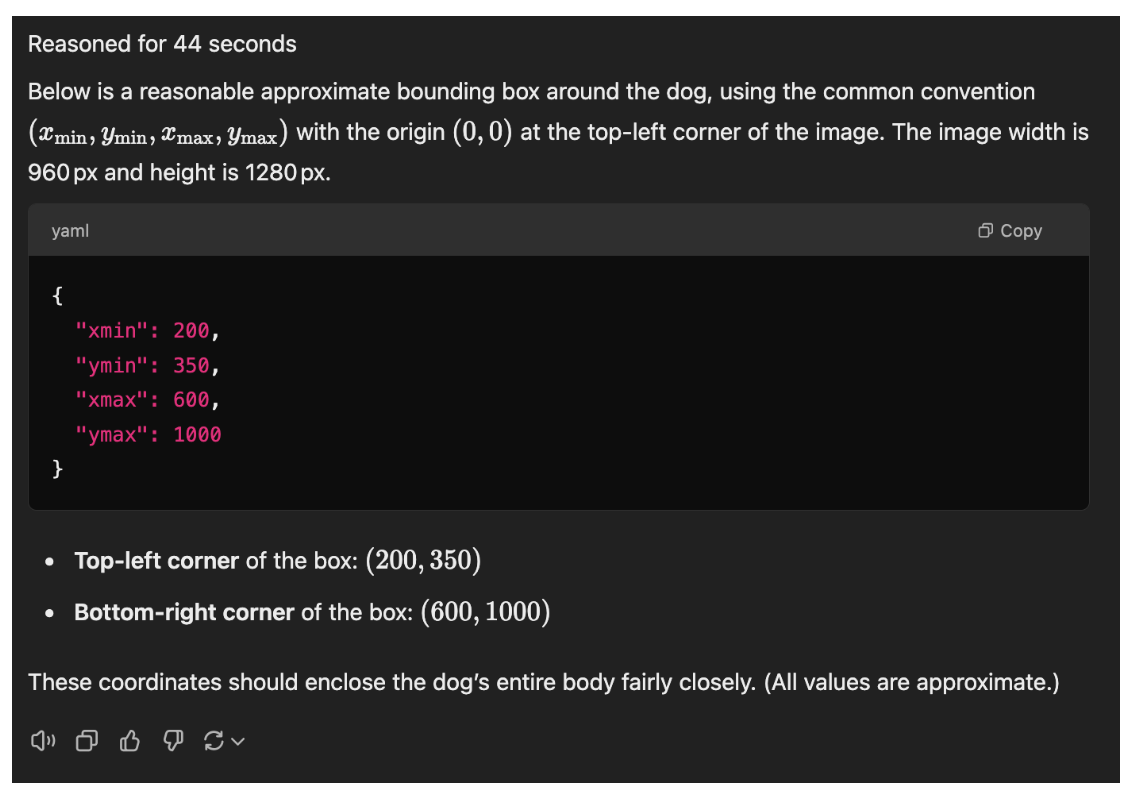

The model reasoned for 43 seconds then returned:

Here are how the coordinates look when plotted on the input image:

The bounding box encapsulates more of the dog but is still not placed correctly. This demonstrates the limitations of O3 for object detection.

We also tested o3-mini High on this task and achieved similar results.

Conclusion

Released in January 2025, o3-mini is the latest in OpenAI’s series of reasoning models. The model is now rolling out with support for image inputs. This means you can ask a question about an image and have the model reason before returning an answer.

In this guide, we demonstrated the breadth of multimodal tasks that OpenAI o3-mini can help with, from document VQA to receipt reading to object counting. The model thrived at all of the tasks we sent except for object detection, a task that multimodal models that have not been designed specifically for object detection tend to struggle.

For object detection, we recommend training a fine-tuned model with a custom dataset using an architecture designed for object detection. These architectures include YOLO11 and Florence-2. Object detection architectures can usually be run at several FPS, in contrast to large multimodal models like those in the GPT series that may take seconds to respond to a query.

In the case of receipt VQA, the model returned an accurate response but in a much longer period of time than it would take to use another model without reasoning capabilities. This shows that one should ask: Do I need reasoning for my task? before rushing to use a reasoning model.

As is always the case when a new model comes out, we recommend testing the model to see where it works for your use case. Then, you can make a plan as to when the model can be used in your workflow.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Feb 13, 2025). OpenAI o3-mini: Vision and Multimodal Features. Roboflow Blog: https://blog.roboflow.com/o3-mini-multimodal/