The Open Images Dataset was released by Google in 2016, and it is one of the largest and most diverse collections of labeled images. Since then, Google has regularly updated and improved it. The latest version of the dataset, Open Images V7, was introduced in 2022.

Researchers and developers worldwide use the Open Images Dataset to train and evaluate computer vision models. Unlike many other datasets, it supports multiple types of annotations and can be used for a variety of computer vision tasks. It has over nine million images, with image-level labels spanning more than 20,000 categories.

In this article, we’ll walk through the features of the Open Images Dataset and how you can use it in your computer vision projects. Let’s dive right in!

What is the Open Images Dataset?

The Open Images Dataset is a vast collection of just over nine million annotated images. It is divided into a training set of over nine million images, a validation set of 41,620 images, and a test set of 125,436 images.

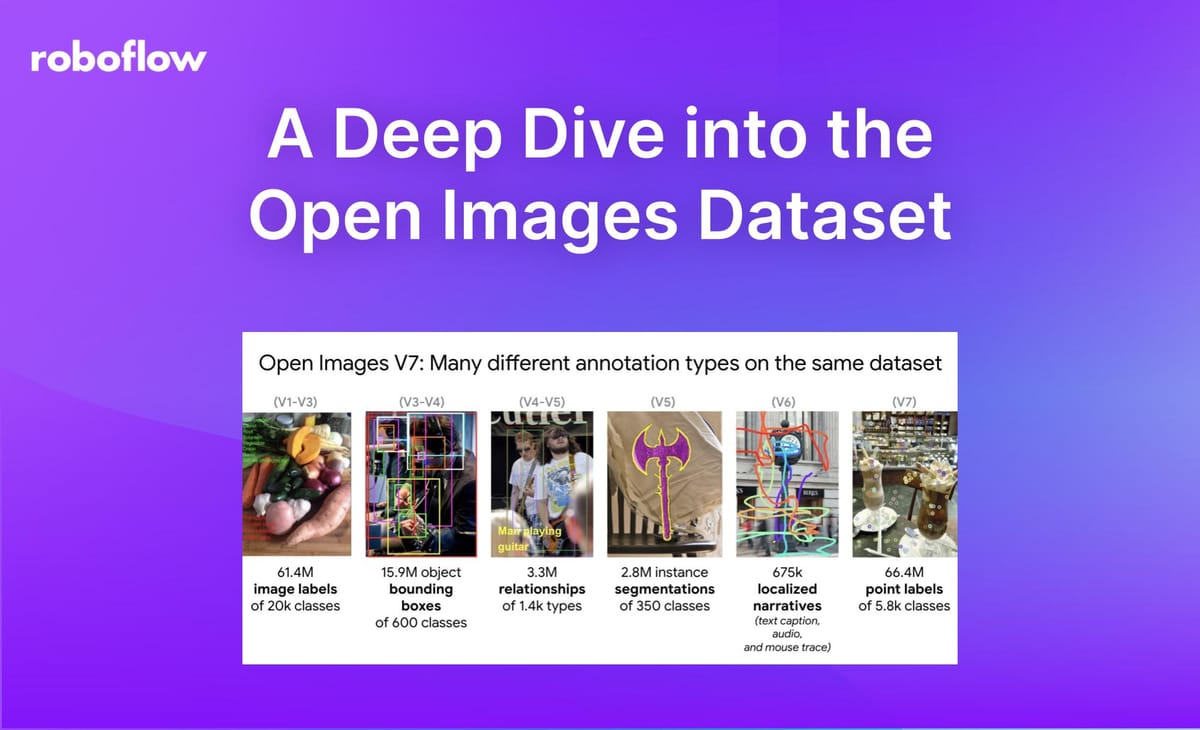

One of its standout features is its comprehensive annotations. It includes image-level labels, object bounding boxes, object segmentation masks, visual relationships, and localized narratives. Using just this dataset, you can test multiple approaches to solving a computer vision problem. That breadth is unusual, since few datasets offer such a wide range of annotation types in one place.

Why is the Open Images Dataset Useful?

The Open Images Dataset is incredibly valuable to the computer vision community for a variety of reasons. For starters, the diversity and scale of the dataset help models trained on it be more robust and better at handling different real-world scenarios. The annotations in the Open Images Dataset are also of very high quality. Most of the bounding boxes are manually drawn by professional annotators.

The dataset's extensive annotations make it suitable for numerous applications across various industries. For instance, in manufacturing and logistics, the dataset can be used to develop models that understand and monitor worker locations on a manufacturing floor, enhancing safety and operational efficiency. In retail, it helps in creating systems for automated inventory management and visual search. The dataset's richness and detail also make it ideal for developing advanced AI applications in areas such as robotics, augmented reality, and smart city technologies.

Components of the Open Images Dataset

To give you a better sense of its scale and richness, let’s take a closer look at the types of annotations that the dataset includes.

Image-Level Labels

The dataset contains 61.4 million image-level labels across 20,638 classes. These labels help classify images into various categories by providing a general description of the content present in each image. Image-level labels are useful for tasks such as image classification, where the goal is to identify an image's main subject or theme.
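To make this more concrete, here is a minimal sketch of grouping image-level labels by image. It assumes the labels come as CSV rows with `ImageID`, `Source`, `LabelName`, and `Confidence` columns, where `LabelName` is a machine ID rather than a readable name. The sample rows, the IDs, and the `CLASS_NAMES` mapping are made up for illustration and are not real dataset entries.

```python
import csv
import io
from collections import defaultdict

# Hypothetical sample rows in the assumed CSV layout (not real dataset entries).
SAMPLE_CSV = """ImageID,Source,LabelName,Confidence
img_a,verification,/m/class_dog,1
img_a,verification,/m/class_cat,0
img_a,machine,/m/class_grass,0.7
img_b,verification,/m/class_cat,1
"""

# Hypothetical mapping from machine IDs to readable class names.
CLASS_NAMES = {
    "/m/class_dog": "Dog",
    "/m/class_cat": "Cat",
    "/m/class_grass": "Grass",
}


def positive_labels(csv_text, min_confidence=1.0):
    """Return {image_id: sorted class names} for labels at or above min_confidence."""
    labels = defaultdict(set)
    for row in csv.DictReader(io.StringIO(csv_text)):
        if float(row["Confidence"]) >= min_confidence:
            name = CLASS_NAMES.get(row["LabelName"], row["LabelName"])
            labels[row["ImageID"]].add(name)
    return {image_id: sorted(names) for image_id, names in labels.items()}


print(positive_labels(SAMPLE_CSV))
```

Lowering `min_confidence` lets machine-generated labels through as well, which may be useful when you want broader but noisier coverage for a classification task.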

Bounding Boxes

The dataset has about 16 million bounding boxes covering 600 object classes on 1.9 million images. Bounding boxes are rectangular annotations that define the location and size of objects within an image. They are crucial for object detection tasks, where the objective is to identify and locate specific objects in an image.
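As a rough illustration of working with box annotations, the sketch below converts a box to pixel coordinates. It assumes the box is stored as `XMin`, `XMax`, `YMin`, `YMax` values normalized to the range 0 to 1, so it has to be scaled by the image size before drawing or cropping. The `PixelBox` type, the `to_pixel_box` helper, and the sample values are hypothetical.

```python
from typing import NamedTuple


class PixelBox(NamedTuple):
    left: int
    top: int
    right: int
    bottom: int


def to_pixel_box(x_min, x_max, y_min, y_max, image_width, image_height):
    """Scale normalized [0, 1] box coordinates to integer pixel coordinates."""
    for value in (x_min, x_max, y_min, y_max):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"normalized coordinate out of range: {value}")
    if x_min > x_max or y_min > y_max:
        raise ValueError("min coordinate must not exceed max coordinate")
    return PixelBox(
        left=round(x_min * image_width),
        top=round(y_min * image_height),
        right=round(x_max * image_width),
        bottom=round(y_max * image_height),
    )


# Illustrative values only: a box around the center of a 640x480 image.
box = to_pixel_box(0.25, 0.75, 0.25, 0.75, image_width=640, image_height=480)
print(box)
```

Keeping coordinates normalized until the last step means the same annotation stays valid if the image is resized during pre-processing.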

Segmentation Masks

Version 5 of the dataset introduced segmentation masks for 2.8 million objects in 350 classes. These masks outline objects, providing detailed spatial information by marking the exact pixels that belong to each object. Segmentation masks help with instance segmentation, where individual instances of objects are distinguished at the pixel level.
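To show why a mask carries more spatial detail than a box, here is a small sketch that treats a mask as a grid of 0s and 1s, counts the object pixels, and derives the tight box around them. The toy mask and the helper names are made up for illustration; real masks are full-resolution images.

```python
def mask_area(mask):
    """Count the pixels marked as belonging to the object."""
    return sum(sum(row) for row in mask)


def mask_to_box(mask):
    """Return the tight (left, top, right, bottom) box, inclusive, or None if the mask is empty."""
    rows = [y for y, row in enumerate(mask) if any(row)]
    cols = [x for row in mask for x, value in enumerate(row) if value]
    if not rows:
        return None
    return (min(cols), min(rows), max(cols), max(rows))


# A toy 5x6 binary mask (1 = object pixel).
MASK = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 1, 1, 0, 0],
    [0, 1, 1, 1, 1, 0],
    [0, 0, 1, 1, 0, 0],
    [0, 0, 0, 0, 0, 0],
]

print(mask_area(MASK), mask_to_box(MASK))
```

In this toy case the object covers 8 pixels, while its tight box spans 4 by 3, or 12 pixels. The difference is the background that a bounding box includes but a mask excludes.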

Relationship Annotations

The dataset includes 3.3 million annotations that capture relationships between objects. It details 1,466 unique relationship triplets, object properties, and human actions. These annotations describe how objects in an image are related to each other (e.g., "person riding a bike") and are valuable for scene understanding and visual relationship detection tasks.
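The gap between the number of annotations and the number of unique triplets can be illustrated with a short sketch. It models each annotation as a (subject, predicate, object) tuple and counts the distinct triplets. The `Relationship` type and the sample annotations are hypothetical and not taken from the dataset.

```python
from collections import Counter
from typing import NamedTuple


class Relationship(NamedTuple):
    subject: str
    predicate: str
    obj: str


# Made-up annotations for illustration; not taken from the dataset.
ANNOTATIONS = [
    Relationship("person", "riding", "bike"),
    Relationship("person", "holding", "cup"),
    Relationship("person", "riding", "bike"),
    Relationship("cup", "on", "table"),
]


def unique_triplets(annotations):
    """Count how often each distinct (subject, predicate, object) triplet occurs."""
    return Counter(annotations)


def with_predicate(annotations, predicate):
    """Select the annotations that use the given predicate."""
    return [a for a in annotations if a.predicate == predicate]


counts = unique_triplets(ANNOTATIONS)
print(len(ANNOTATIONS), "annotations,", len(counts), "unique triplets")
```

Many annotations share the same triplet, which is why millions of annotations can reduce to a much smaller vocabulary of relationships.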

Localized Narratives

Version 6 added 675,000 localized narratives, combining voice, text, and mouse traces to describe objects in images. Localized narratives provide rich contextual information about objects and their interactions within an image, using a combination of spoken descriptions, textual annotations, and spatial references. This annotation type is useful for tasks requiring comprehensive scene descriptions, such as image captioning and visual storytelling.

Point-Level Labels

Version 7 introduced 66.4 million point-level labels across 1.4 million images, covering 5,827 classes. Each annotation marks a single point in an image and records whether that point belongs to a given class, which provides sparse pixel-level localization. These labels are particularly useful for tasks like zero/few-shot semantic segmentation.

How to Use the Open Images Dataset

The Open Images Dataset is a great resource for training and evaluating computer vision models. Here’s a simple guide to accessing the dataset, training an object detection model on it, and deploying that model with Roboflow.

Accessing the Dataset

You can manually download a subset of the Open Images Dataset in a few simple steps. First, download the `downloader.py` script from the Open Images Dataset repository.

You can do this by running:

wget https://raw.githubusercontent.com/openimages/dataset/master/downloader.py

Next, create a text file with the IDs of the images you want to download. You can use the Explore tab of the Open Images Dataset website to browse the images in the dataset and pick the ones you are interested in. Since we are going to train an object detection model, we are interested in images with bounding box annotations.

Each line of the text file should look like $SPLIT/$IMAGE_ID, where $SPLIT is "train", "test", "validation", or "challenge2018".

For example:

train/f9e0434389a1d4dd
train/1a007563ebc18664
test/ea8bfd4e765304db

Then, you can run the script you downloaded as follows:

python downloader.py $IMAGE_LIST_FILE --download_folder=$DOWNLOAD_FOLDER --num_processes=5

You can also download the entire dataset in multiple compressed files from the Open Images Dataset GitHub Repository. The dataset’s official documentation offers a couple of other download options.
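If you are building the image list from code rather than by hand, a small helper can catch malformed lines before you run the downloader. The sketch below checks each entry against the `$SPLIT/$IMAGE_ID` format and writes the list to a file. It assumes image IDs are lowercase hexadecimal strings, as in the examples above. The helper names and the temporary output path are hypothetical.

```python
import re
import tempfile
from pathlib import Path

VALID_SPLITS = {"train", "test", "validation", "challenge2018"}
# Assumption: image IDs are lowercase hexadecimal strings, as in the examples above.
IMAGE_ID_PATTERN = re.compile(r"^[0-9a-f]+$")


def is_valid_entry(line):
    """Check that a line has the form $SPLIT/$IMAGE_ID with a known split."""
    split, sep, image_id = line.strip().partition("/")
    return bool(sep) and split in VALID_SPLITS and bool(IMAGE_ID_PATTERN.match(image_id))


def write_image_list(entries, path):
    """Write validated entries to a text file, one per line, and return the count."""
    invalid = [e for e in entries if not is_valid_entry(e)]
    if invalid:
        raise ValueError(f"invalid entries: {invalid}")
    Path(path).write_text("\n".join(entries) + "\n", encoding="utf-8")
    return len(entries)


entries = ["train/f9e0434389a1d4dd", "train/1a007563ebc18664", "test/ea8bfd4e765304db"]
list_file = Path(tempfile.mkdtemp()) / "image_list.txt"
print(write_image_list(entries, list_file), "entries written to", list_file)
```

The resulting file can then be passed to `downloader.py` as `$IMAGE_LIST_FILE`.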

Uploading to Roboflow

Once you’ve downloaded your dataset, you can use Roboflow to train your model. Start by creating a Roboflow account, signing in, and creating a new project for object detection on your dashboard.

Then, you can upload the images and annotations in your dataset by dragging and dropping them into the project. Since your object detection dataset is a subset of the Open Images dataset, it already includes annotations, and you can skip the annotation step. If your dataset lacks annotations or you want to add more, you can annotate it using Roboflow's tools.

Create a Dataset Version

After uploading your dataset, you can generate a dataset version. For the initial version, skip pre-processing and augmentation steps so that you get an accurate baseline for how your annotated data performs. Click "Generate" to create the dataset version. This may take a few minutes, depending on the size of the dataset. Once it is ready, you can start training your model.

Training Your Model

Next, navigate to the dataset version page and click "Train a Model." Choose the "Fast" training option and follow the on-screen instructions. Roboflow will allocate a cloud-hosted computer to handle your training job.

During the training process, a graph will dynamically update, showing the model's performance metrics. Once the training is complete, you will receive an email notification.

Deploying Your Model

With your object detection model trained, the next step is to deploy it. Roboflow provides flexible deployment options, allowing you to deploy the model on the cloud, locally, or on an edge device. Here, we’ll look at how to deploy the model using Roboflow Inference.

The Roboflow Inference Server is an HTTP microservice interface that supports many different deployment targets via Docker and is optimized to route and serve requests from edge devices or via the cloud in a standardized format.

We’ll start by installing the Inference SDK. Open your terminal or command prompt and run:

pip install inference-sdk

Then, you can use the following code to run inference on a local image with your deployed model. Be sure to replace "YOUR_IMAGE.jpg" with the path to your local image file, "YOUR_MODEL_ID" with your model's ID, and "YOUR_API_KEY" with your Roboflow API key. This code sets up the InferenceHTTPClient with the necessary API URL and key and then performs inference on the specified image.

from inference_sdk import InferenceHTTPClient

# Configure the client with the hosted API URL and your API key.
CLIENT = InferenceHTTPClient(
    api_url="https://detect.roboflow.com",
    api_key="YOUR_API_KEY"
)

# Run inference on a local image with your trained model.
result = CLIENT.infer("YOUR_IMAGE.jpg", model_id="YOUR_MODEL_ID")

By following these steps, you can use the Open Images Dataset to train and deploy computer vision models, drawing on its comprehensive annotations and rich data to achieve accurate and robust results.
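Once inference returns, you will usually want to filter and reshape the predictions. The sketch below assumes the result is a dictionary with a "predictions" list, where each entry has "x" and "y" as the box center along with "width", "height", "class", and "confidence". That response shape is an assumption here, so check it against what your model actually returns. `SAMPLE_RESULT` is made up and stands in for a real call to `CLIENT.infer`.

```python
def to_corner_boxes(result, min_confidence=0.5):
    """Convert center-format predictions to (class, confidence, left, top, right, bottom)."""
    boxes = []
    for pred in result.get("predictions", []):
        if pred["confidence"] < min_confidence:
            continue  # Drop low-confidence detections.
        half_w, half_h = pred["width"] / 2, pred["height"] / 2
        boxes.append((
            pred["class"],
            pred["confidence"],
            pred["x"] - half_w,
            pred["y"] - half_h,
            pred["x"] + half_w,
            pred["y"] + half_h,
        ))
    return boxes


# Made-up response used in place of a real inference call (assumed shape).
SAMPLE_RESULT = {
    "predictions": [
        {"x": 100.0, "y": 80.0, "width": 40.0, "height": 20.0,
         "class": "person", "confidence": 0.9},
        {"x": 30.0, "y": 30.0, "width": 10.0, "height": 10.0,
         "class": "person", "confidence": 0.2},
    ]
}

print(to_corner_boxes(SAMPLE_RESULT))
```

Corner-format boxes are often easier to pass to drawing or cropping utilities, and the confidence threshold is a knob you can tune for your use case.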

Limitations of the Open Images Dataset

Despite its extensive and detailed annotations, the Open Images Dataset has some limitations to keep in mind.

Data Volume

One of the biggest challenges is the sheer amount of data. With around nine million images and a wealth of annotations, managing this dataset requires a lot of storage and processing power. Smaller research teams or organizations with limited resources may find it difficult to handle such large datasets. Working with this data requires high-performance hardware, significant storage capacity, and efficient data management strategies.

Annotation Quality

Even though the Open Images Dataset is known for its high-quality annotations, maintaining consistency and accuracy across millions of annotations is tough. Despite the use of professional annotators and advanced tools, some errors and inconsistencies can still slip through. For example, bounding boxes might not perfectly fit the objects, or segmentation masks might lack precise detail. These issues can affect the performance and reliability of the models trained on this data.

Narrowing Performance Gains

As modern benchmark datasets like Open Images push the limits of what’s possible, the performance differences among top models become minimal. For instance, the top five teams in the 2019 Open Images Detection Challenge had less than a 0.06 difference in mean average precision (mAP). While the community continues to develop new techniques, the improvements are becoming minor, suggesting a potential slowdown in performance gains from current datasets and models.
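For context, detection mAP is typically built on intersection over union (IoU), which measures how well a predicted box overlaps a ground-truth box. The sketch below is a minimal IoU computation for two boxes given as (left, top, right, bottom). It is not the challenge's official evaluation code, and the sample boxes are made up.

```python
def box_iou(box_a, box_b):
    """Intersection over union of two (left, top, right, bottom) boxes."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


# Two 10x10 boxes that overlap on half their width.
print(box_iou((0, 0, 10, 10), (5, 0, 15, 10)))
```

A prediction usually counts as correct only when its IoU with a ground-truth box passes a threshold, so small changes in box placement can move a model's mAP up or down.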

Conclusion

The Open Images Dataset is an excellent tool for exploring computer vision. Its vast and varied collection of annotated images makes it perfect for research. Open Images Dataset’s detailed annotations help in creating more accurate and reliable models. If you are interested in computer vision and AI, use this dataset to explore new possibilities and push the limits of what can be achieved in computer vision!

Keep Reading

- Learn more about Roboflow Inference

- Find out more about Improving Computer Vision Datasets and Models

- Explore Different Computer Vision Tasks

Cite this Post

Use the following entry to cite this post in your research:

Contributing Writer. (Jul 16, 2024). What is the Open Images Dataset? A Deep Dive. Roboflow Blog: https://blog.roboflow.com/open-images-dataset/