Roboflow now has an integrated solution to help our customers leverage third-party outsourcing services for image labeling. This solution allows you to get the labels you need to train high-quality models without having to set up an internal team for labeling.

Today, we will explore how Roboflow’s customers can use our vetted third-party partners to outsource image labeling, resulting in improved scalability, cost-effectiveness, and accelerated development.

The Importance of Image Labeling in Computer Vision

Image labeling is an important process in the development of computer vision models. It involves assigning relevant tags or annotations to images, enabling algorithms to recognize and understand visual elements. Accurate labels across large amounts of data are essential for training computer vision systems to detect objects, recognize patterns, and perform tasks such as object classification, object detection, and semantic segmentation. Labeling your data well sets your model up for success.

While there are large amounts of public data available for use in models, it is hard to find data specific to your use case (e.g., identifying a particular defect in a product). For all models, having annotated images tailored to your use case is crucial for building a high-performance model.

With our outsourced labeling solution, you can request detailed annotations for the images you need to train computer vision models.

Challenges Faced When You Label Your Own Images

Labeling images in-house often requires allocating significant internal resources. You need to find contributors who can label data, appoint owners to oversee the process, and more.

This approach presents several challenges, including time constraints, scalability limitations, and potential biases introduced by internal labeling teams. As the demand for more labeled data grows, it can become increasingly difficult for you to maintain the required scale, consistency, and efficiency in-house.

Outsource Image Labeling with Roboflow

To overcome these challenges, you can now use Roboflow’s preferred labeling partners to label images for your projects. These services provide access to a vast workforce of skilled annotators and advanced labeling tools, enabling you to label large datasets quickly and accurately. By partnering with Roboflow’s third-party providers, you can achieve:

- Scalability: Outsourcing image labeling allows you to handle large volumes of data without compromising quality or speed. Third-party providers can effortlessly scale their workforce to meet demanding project requirements, ensuring a seamless labeling process.

- Cost-effectiveness: Building and maintaining an in-house image labeling team can be expensive, requiring infrastructure, recruitment, training, and ongoing management. Outsourcing image labeling reduces costs significantly, as you only pay for the labeled data you require, without incurring additional overhead expenses.

- Expertise and Quality: Third-party image labeling providers have extensive experience in annotating diverse datasets and possess domain-specific expertise. Their annotators are trained to follow specific guidelines and maintain consistency, resulting in high-quality labeled data that improves the performance of computer vision models.

- Faster Development Cycles: By leveraging outsourcing, you can accelerate your development cycles. You can rapidly iterate and fine-tune your models by accessing large volumes of labeled data in shorter periods of time, ultimately leading to faster time-to-market for your products or services.

- Bias Mitigation: Internal labeling teams can unintentionally introduce biases due to various factors. Outsourcing to third-party providers introduces an additional layer of objectivity and diversity, reducing the risk of biased annotations and enhancing the overall fairness and inclusivity of the computer vision models.

Get Started With Outsourced Data Labeling Today

By partnering with Roboflow’s third-party providers, you can harness the power of a skilled workforce, advanced tools, and specialized expertise to label large datasets quickly and accurately. This approach not only accelerates the development of computer vision models but also enhances their accuracy, fairness, and overall performance.

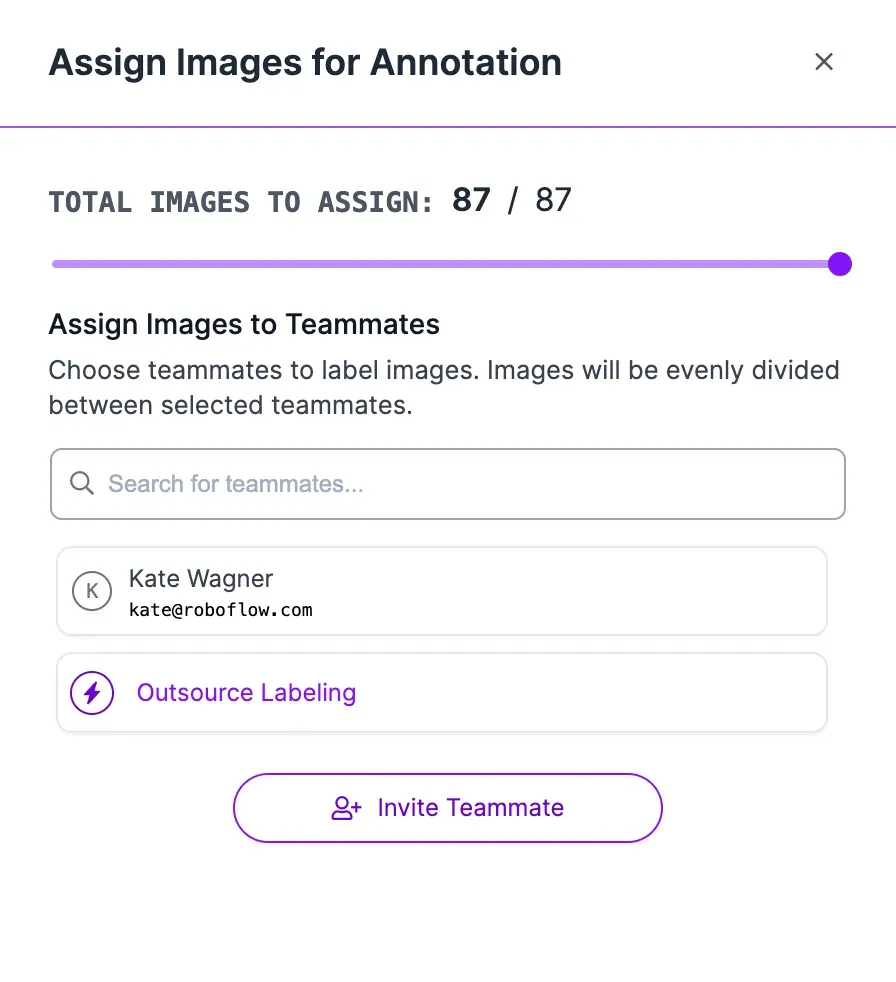

To get started, select the "Outsourced Labeling" button in your Roboflow workspace, located under "Assign Images" in your annotations tab.

If you have additional questions, contact us and a member of our team will get back to you shortly.

How to Provide Detailed Labeling Instructions to Outsourced Labelers

When working with Roboflow’s Outsource Labeling team, providing instructions to labelers is a critical part of the workflow and has a direct effect on the quality of your dataset. The best practices below will help you give labelers a high-quality set of instructions to reference throughout their work. Detailed instructions help labelers meet your expectations given the ontology you have in mind for your project.

Tip #1: Provide Positive Examples

Examples of well-annotated images are the most informative way to explain to other labelers how to annotate your data. When you provide examples of what the correct outcome should look like, labeling teams have a source of truth they can reference at any time.

As a general rule of thumb, the larger that source of truth is, the less confusion and need for back-and-forth communication there will be during the labeling process.

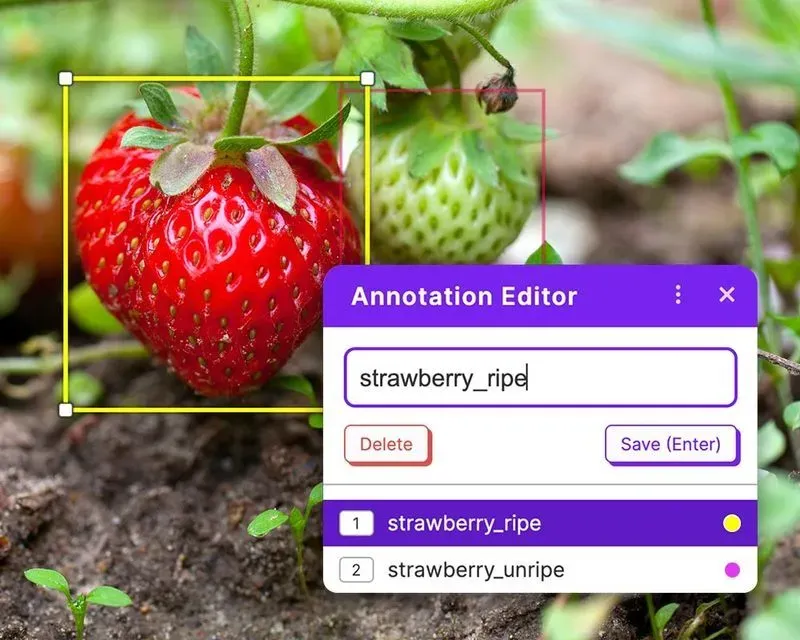

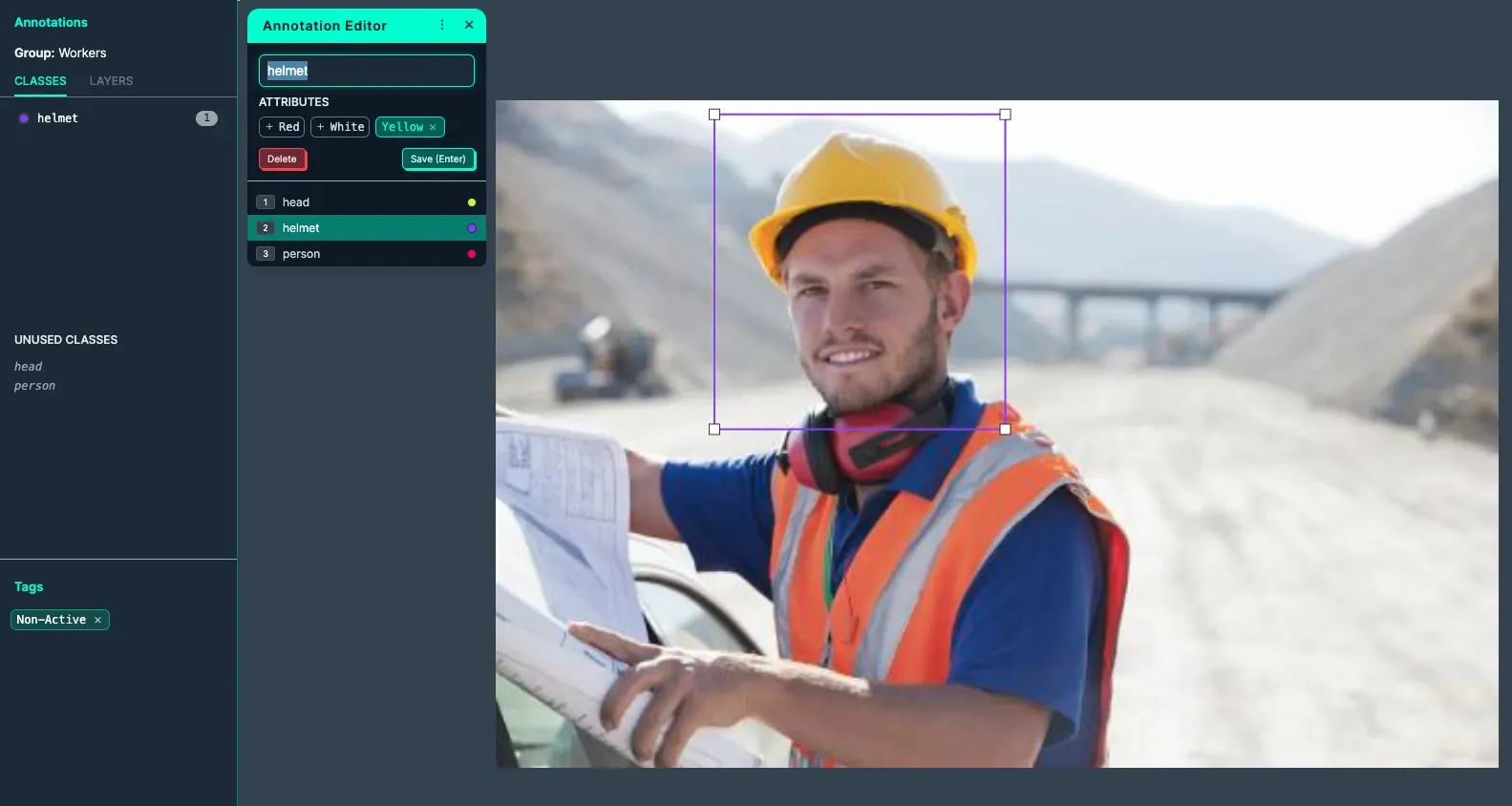

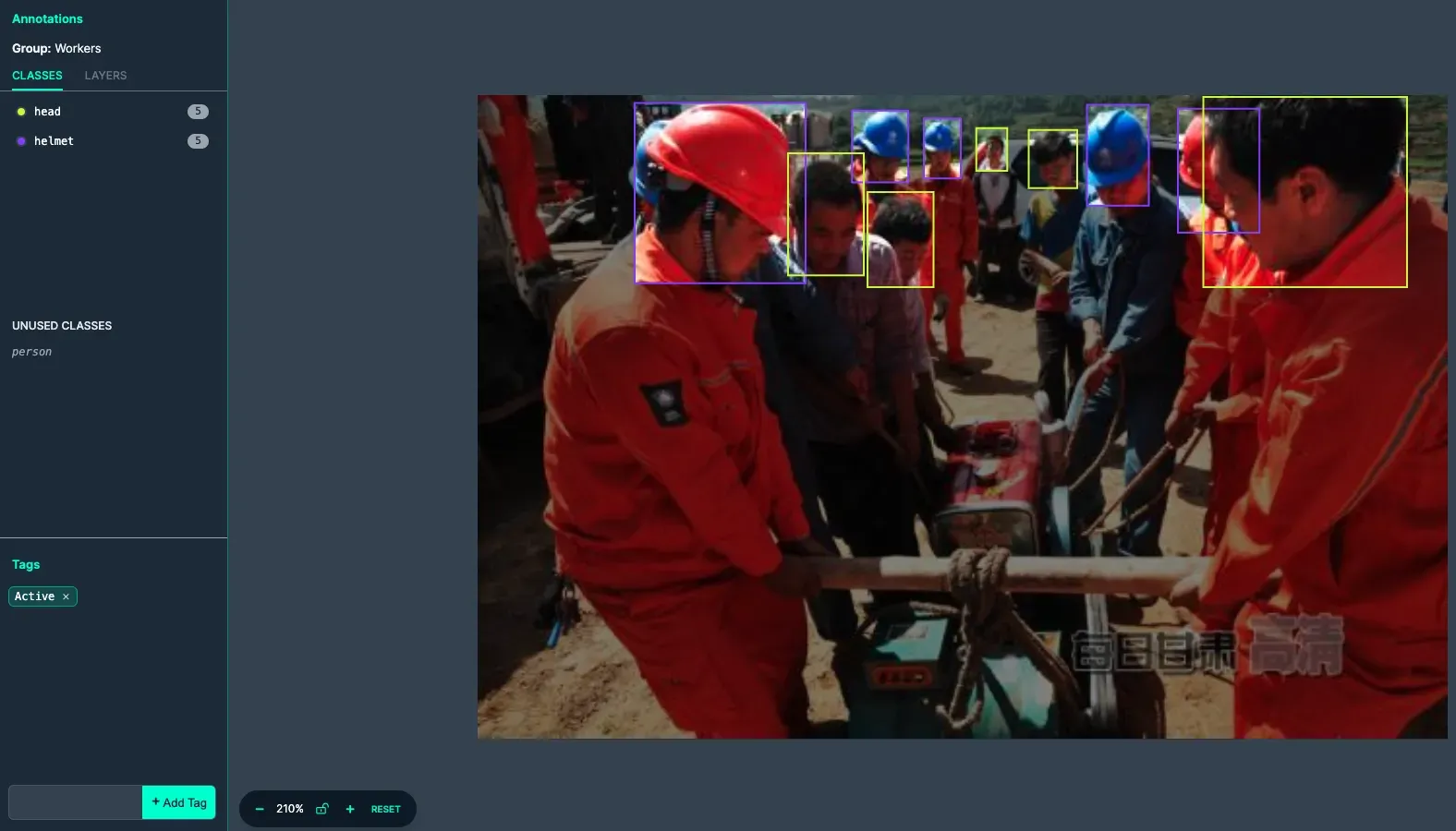

If you do not have any examples of pre-annotated data, you can label example images in Roboflow Annotate within your project. Here are some examples of well-annotated images:

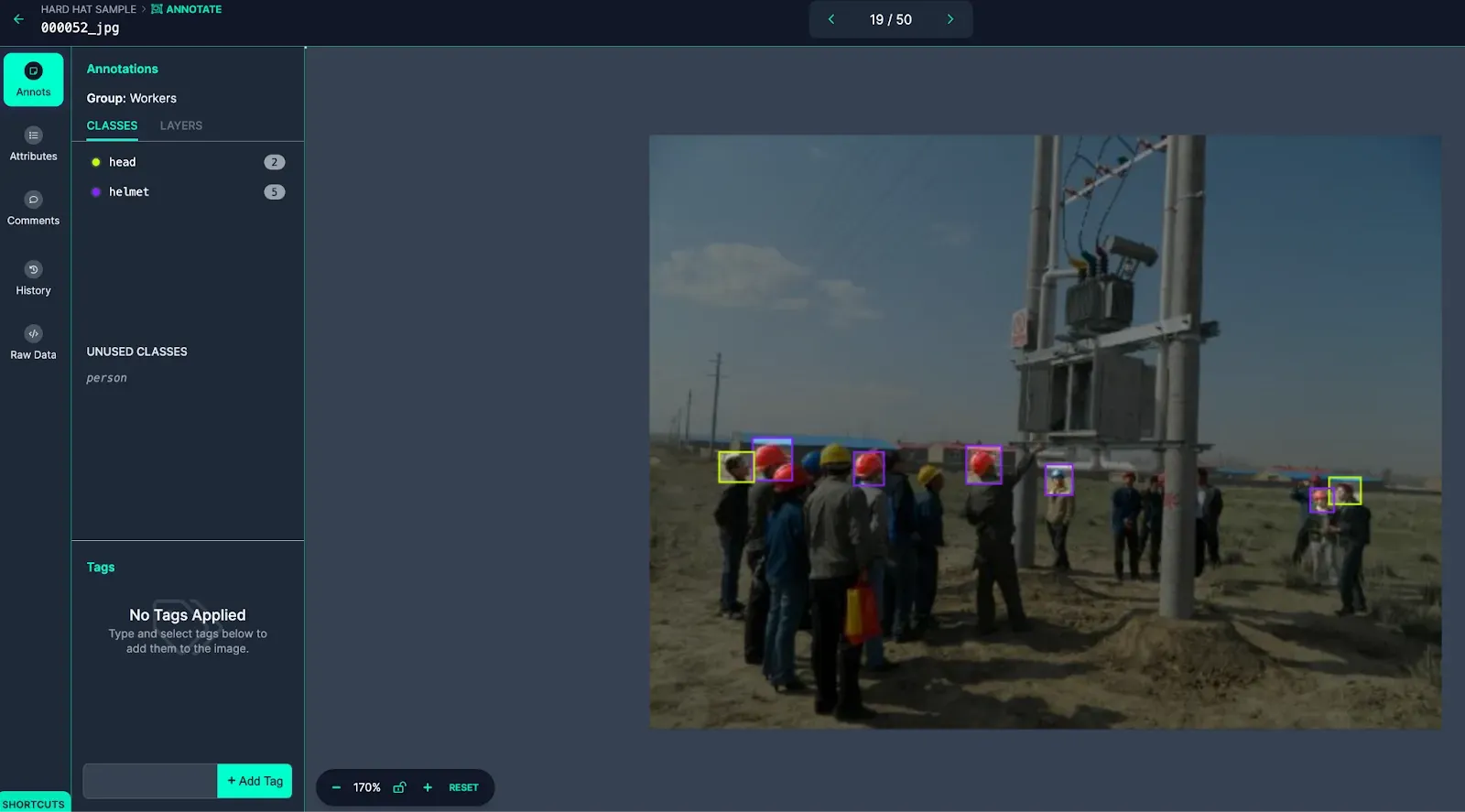

The image above has all its classes labeled properly. The image is also tagged to help with filtering and organization once added to the dataset.

The image above is labeled with an Annotation Attribute to help increase labeling granularity (Yellow) within the class (Helmet). The image is also tagged to help with filtering and organization once added to the dataset.

This image includes multiple classes that are all labeled correctly. The image is also tagged to help with filtering and organization once added to the dataset.

Tip #2: Provide Negative Examples

Negative examples, where you feature an image that has been annotated incorrectly, can also help labelers navigate annotation jobs. Negative examples are particularly useful when the classes involve an element of subjectivity that lends itself to mislabeling. If there are many objects in an image that could be missed, including examples of insufficiently labeled images is also helpful.

Here are some examples of poorly annotated images:

The image above is missing annotations of visible objects, making it an insufficiently labeled image.

The image above is labeled incorrectly as both people pictured are wearing helmets, despite only one of them being labeled as Helmet. This image would be rejected and not added to the dataset.

Regardless of whether the examples are positive or negative, explaining why they are good or bad examples is just as important as the images themselves.

Tip #3: Provide Guidance on Unannotated Images

As you lay the foundation for labeling your data through positive and negative examples, including unannotated images in the instructions helps labelers confirm that they understand how the data should be interpreted. If you provide positive and negative examples and labelers still have questions about how to label the unannotated data, that is a strong signal that the instructions are not yet clear enough.

Ultimately, the better a labeler understands how you view the data, the more smoothly the labeling process will go. Unannotated examples can serve as an initial litmus test of the quality of the instructions.
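One way to make this litmus test concrete is to label the unannotated images yourself, keep your labels private, and compare them with what labelers produce after reading your instructions. The sketch below is illustrative only and is not part of the Roboflow product or API: the box format, the `iou` and `agreement` helpers, and the 0.5 overlap threshold are all assumptions chosen for the example.

```python
from typing import List, Tuple

# Assumed box format: (class_name, x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[str, float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes (class is ignored)."""
    overlap_w = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    overlap_h = max(0.0, min(a[4], b[4]) - max(a[2], b[2]))
    intersection = overlap_w * overlap_h
    area_a = (a[3] - a[1]) * (a[4] - a[2])
    area_b = (b[3] - b[1]) * (b[4] - b[2])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def agreement(reference: List[Box], submitted: List[Box],
              threshold: float = 0.5) -> float:
    """Fraction of reference boxes matched by a same-class submitted box.

    A submitted box can match at most one reference box, and a match
    requires IoU >= threshold (0.5 is an arbitrary starting point).
    """
    if not reference:
        return 1.0 if not submitted else 0.0
    unmatched = list(submitted)
    hits = 0
    for ref in reference:
        best, best_score = None, threshold
        for candidate in unmatched:
            if candidate[0] != ref[0]:
                continue  # class mismatch never counts as a match
            score = iou(ref, candidate)
            if score >= best_score:
                best, best_score = candidate, score
        if best is not None:
            unmatched.remove(best)
            hits += 1
    return hits / len(reference)


# Your own labels for one litmus-test image: two people wearing helmets.
reference = [("Helmet", 0, 0, 10, 10), ("Helmet", 20, 20, 30, 30)]
# A labeler's attempt that only found one of the helmets.
submitted = [("Helmet", 0, 0, 10, 10)]
print(agreement(reference, submitted))  # 0.5
```

A low score on these images suggests the instructions need more examples or clearer explanations before the full labeling job starts.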

Tip #4: Detail Context for Your Project

By providing labeling instructions, you are teaching labelers how to walk in your shoes when it comes to annotating data. Providing context at a high level regarding the problem you are solving with computer vision can fill in the “why” behind the project.

This allows labelers to look at annotations through the lens of not only what is being annotated but also why it is being annotated. This may spark more informed questions throughout the labeling process and help ensure details are not missed.

Adding project context can be as simple as explaining “we are labeling whether or not workers at construction sites are wearing hard hats to help improve worker safety and reduce workplace injury”.
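If it helps to organize the four tips before you write them up, the sketch below models a set of instructions as a small Python structure with a completeness check. Everything in it is hypothetical: the `Example` and `LabelingInstructions` classes, the `missing_pieces` method, and the file names are made up for illustration and do not correspond to any Roboflow API or required format.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Example:
    image_path: str        # hypothetical path to an example image
    explanation: str = ""  # why this image is a good or bad example


@dataclass
class LabelingInstructions:
    project_context: str                                            # Tip #4
    positive_examples: List[Example] = field(default_factory=list)  # Tip #1
    negative_examples: List[Example] = field(default_factory=list)  # Tip #2
    unannotated_images: List[str] = field(default_factory=list)     # Tip #3

    def missing_pieces(self) -> List[str]:
        """Return a list of gaps to fix before sending instructions."""
        problems = []
        if not self.project_context.strip():
            problems.append("project context is empty")
        if not self.positive_examples:
            problems.append("no positive examples")
        if not self.negative_examples:
            problems.append("no negative examples")
        if not self.unannotated_images:
            problems.append("no unannotated images")
        for example in self.positive_examples + self.negative_examples:
            if not example.explanation.strip():
                problems.append(f"{example.image_path} has no explanation")
        return problems


instructions = LabelingInstructions(
    project_context=(
        "We are labeling whether or not workers at construction sites are "
        "wearing hard hats to help improve worker safety."
    ),
    positive_examples=[
        Example("good-01.jpg", "Every visible helmet is labeled."),
    ],
    negative_examples=[
        Example("bad-01.jpg"),  # explanation left out on purpose
    ],
)
for problem in instructions.missing_pieces():
    print(problem)
```

Here the check would flag the missing unannotated images and the negative example that lacks an explanation, which mirrors the point above that explaining why an image is an example matters as much as the image itself.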

Outsourcing Data Labeling Solution

Outsourcing image labeling with Roboflow's trusted partners gives you the flexibility to scale your computer vision projects quickly, affordably, and with confidence. Our integrated solution removes the operational burden of managing an internal labeling team, so you can focus on building better models, faster. Get started today.

Cite this Post

Use the following entry to cite this post in your research:

Kate Wagner. (Jun 27, 2023). Launch: Outsourced Data Labeling in Roboflow. Roboflow Blog: https://blog.roboflow.com/outsourced-labeling-roboflow/