We recommend following the Roboflow Inference documentation to set up inference on a Raspberry Pi. The Inference documentation is kept up to date with new features and changes.

See the Quickstart to get started.

At Roboflow, our goal is to simplify all of the parts of the computer vision process from collecting images, to choosing which ones to label, to annotating datasets, to training and deploying models so that you can focus on solving the problems that are unique to your business and creating value instead of building redundant CV infrastructure.

One major component of simplifying deployment is support for the myriad of platforms and edge devices our customers want to use. Roboflow supports deploying into the cloud, onto a web browser, to smartphone apps, or directly to edge devices like NVIDIA Jetson and Luxonis OAK. And, starting today, we've added support for deploying to the Raspberry Pi via our Inference Server.

This means you can use all of your existing application code that works with our Hosted API or other supported edge devices and it will work seamlessly with your Raspberry Pi. Read the full Roboflow Raspberry Pi Documentation here.

Before You Deploy

This post assumes you have a model ready to deploy to your Raspberry Pi, and only walks through deployment of that model. If you're starting from images and have no trained model, follow this guide: how to deploy computer vision on a Raspberry Pi.

System Requirements

You'll need a Raspberry Pi 4 (or Raspberry Pi 400) running the 64-bit version of Ubuntu. To verify that you're running a compatible system, type arch into your Raspberry Pi's command line and check that it outputs aarch64.
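If you would rather run the same check from a setup script, the sketch below does it in Python. It assumes that `platform.machine()` reports the same string as the `arch` command on your system, which is the usual behavior on 64-bit ARM Linux; the helper name `is_supported_arch` is made up for this example.

```python
import platform


def is_supported_arch(machine: str) -> bool:
    """Return True when the reported machine type is 64-bit ARM (aarch64)."""
    return machine.strip().lower() == "aarch64"


if __name__ == "__main__":
    # platform.machine() usually matches the output of the `arch` command.
    machine = platform.machine()
    if is_supported_arch(machine):
        print(f"{machine}: 64-bit ARM detected, OK to continue")
    else:
        print(f"{machine}: expected aarch64, check that you installed 64-bit Ubuntu")
```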

Setup and Installation

Our inference server runs as a microservice via Docker. You'll need to install Docker on your Raspberry Pi; we recommend using the Docker Ubuntu convenience script for installation.

curl -fsSL https://get.docker.com -o get-docker.sh

sudo sh get-docker.sh

Then pull our inference server from Docker Hub. It will automatically detect that you're running on a Raspberry Pi and install the correct version.

sudo docker pull roboflow/inference-server:cpu

Then run the inference server (and pass through your network card):

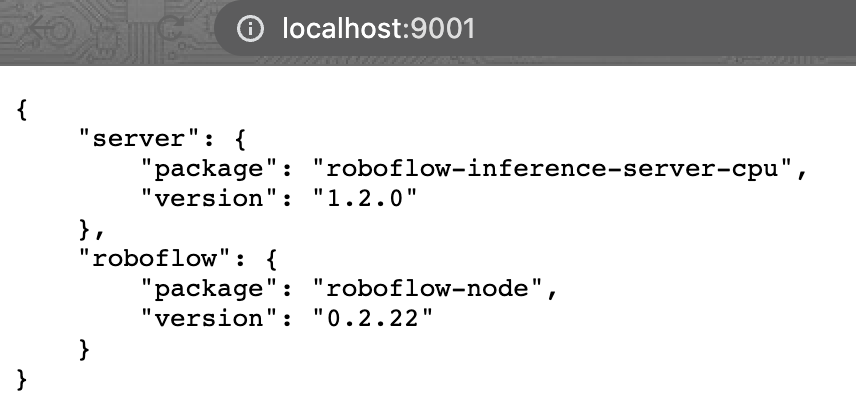

sudo docker run --net=host roboflow/inference-server:cpu

You can now verify that the inference server is running on port 9001 by visiting http://localhost:9001 in your browser on the Raspberry Pi (or curling it if you're running in headless mode). If everything's running correctly, you'll get a welcome message.
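For headless setups where you want your application to wait for the server, the check can also be scripted. This is a minimal sketch using only the Python standard library; it treats any 2xx response from the root URL as "up" and does not inspect the welcome message, since its exact contents are not specified here. The function name `server_is_up` is made up for this example.

```python
import urllib.error
import urllib.request


def server_is_up(base_url: str = "http://localhost:9001", timeout: float = 5.0) -> bool:
    """Return True if an HTTP GET to base_url succeeds with a 2xx status."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, or a non-2xx HTTP error.
        return False


if __name__ == "__main__":
    if server_is_up():
        print("Inference server is reachable on port 9001")
    else:
        print("Inference server is not reachable; is the Docker container running?")
```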

Using it With Your Application

The Roboflow Inference Server for Raspberry Pi is compatible with the API spec of our Hosted API and other inference servers, so you can use your existing code by swapping out https://detect.roboflow.com for http://localhost:9001.
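One way to make that swap painless is to keep the base URL in a single place. The sketch below assumes the request URL has the form `/{model}/{version}?api_key=...`, which is how Hosted API URLs are typically written; confirm the exact path and parameters against the API documentation for your model. The helper `inference_url` and the placeholder values are hypothetical.

```python
from urllib.parse import urlencode

HOSTED_API = "https://detect.roboflow.com"
LOCAL_SERVER = "http://localhost:9001"


def inference_url(base_url: str, model: str, version: int, api_key: str) -> str:
    """Build an inference URL; only base_url differs between hosted and local."""
    query = urlencode({"api_key": api_key})
    # Assumed path layout: /{model}/{version}?api_key=...
    return f"{base_url.rstrip('/')}/{model}/{version}?{query}"


if __name__ == "__main__":
    # Same model, version, and key; only the base URL changes.
    print(inference_url(HOSTED_API, "your-model", 1, "YOUR_API_KEY_HERE"))
    print(inference_url(LOCAL_SERVER, "your-model", 1, "YOUR_API_KEY_HERE"))
```

Reading the base URL from an environment variable or config file would let the same application run against either target without code changes.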

Additionally, our Roboflow pip package now supports local inference servers, so if you're writing a Python application, using your model is as simple as running pip install roboflow and then:

from roboflow import Roboflow

rf = Roboflow(api_key="YOUR_API_KEY_HERE")

version = rf.workspace("your-workspace").project("YOUR_PROJECT").version(1, local="http://localhost:9001")

prediction = version.model.predict("YOUR_IMAGE.jpg")

print(prediction.json())

Putting it Through its Paces

You can try using our Server Benchmarker to see how fast your model runs.

- Clone the repo
- Run npm install in the top level directory of the repo
- Add your Roboflow API Key in a file called .roboflow_key
- Edit the configuration in benchmark.js to point server to http://localhost:9001 and reference your Roboflow workspace and model
- Run node benchmark.js

It will use your Pi to get predictions for the images in your valid set and report the mAP and speed. We saw inference speeds of about 1.3 fps on our Raspberry Pi, which is plenty for many use cases, like security camera monitoring, entry control, occupancy counting, retail inventory, and more.
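If you want a rough speed number from inside your own Python application, a simple timing loop is enough. This sketch is not the Server Benchmarker and does not compute mAP; it only times a `predict` callable that you supply (for example, a wrapper around `version.model.predict`). The name `measure_fps` is made up for this example.

```python
import time
from typing import Callable, Iterable


def measure_fps(predict: Callable[[str], object], image_paths: Iterable[str]) -> float:
    """Call predict once per image and return the average frames per second."""
    count = 0
    start = time.perf_counter()
    for path in image_paths:
        predict(path)
        count += 1
    elapsed = time.perf_counter() - start
    if count == 0 or elapsed <= 0:
        raise ValueError("need at least one image and a measurable elapsed time")
    return count / elapsed


if __name__ == "__main__":
    # Stand-in for a real model call; replace with your own predict function.
    def fake_predict(path: str) -> None:
        time.sleep(0.05)

    fps = measure_fps(fake_predict, ["a.jpg", "b.jpg", "c.jpg"])
    print(f"{fps:.2f} fps, {1 / fps:.2f} s per frame")
```

For reference, 1.3 fps works out to roughly 0.77 seconds per frame.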

If you need faster speed, consider using an OAK Camera with your Pi.

Next Steps

If you have images already, create a dataset and train a model. Otherwise, all 7000+ Pre-Trained models on Roboflow Universe are now supported on Raspberry Pi, so you can browse the models other users have already shared to find one that suits your fancy and jump right to building a vision-powered application.