Computer vision can enable robots to intelligently adapt to dynamic environments. With Roboflow and a Luxonis OAK, you can develop and run powerful computer vision models on your robots.

Throughout this guide, we’ll use the FIRST Robotics Competition (FRC) as an example, but the same setup applies to many other robotics platforms and applications.

Getting Started:

Here are some things you’ll need to follow along with this guide:

- Raspberry Pi 4

- Luxonis OAK camera (such as the OAK-D-Lite or OAK-D Pro)

- Peripherals, such as a keyboard and mouse, to set up your Raspberry Pi.

Set up your Raspberry Pi with the latest version of Raspberry Pi OS (formerly called Raspbian) by following the official documentation. Your Raspberry Pi also needs a working internet connection so you can install dependencies later.

Building a Robotics Dataset:

Roboflow provides a convenient platform to annotate training data, create datasets, and train powerful machine learning models. ⚡

Head over to roboflow.com to create an account. You’ll see an option to create a workspace – this is where we’ll be storing and managing our images.

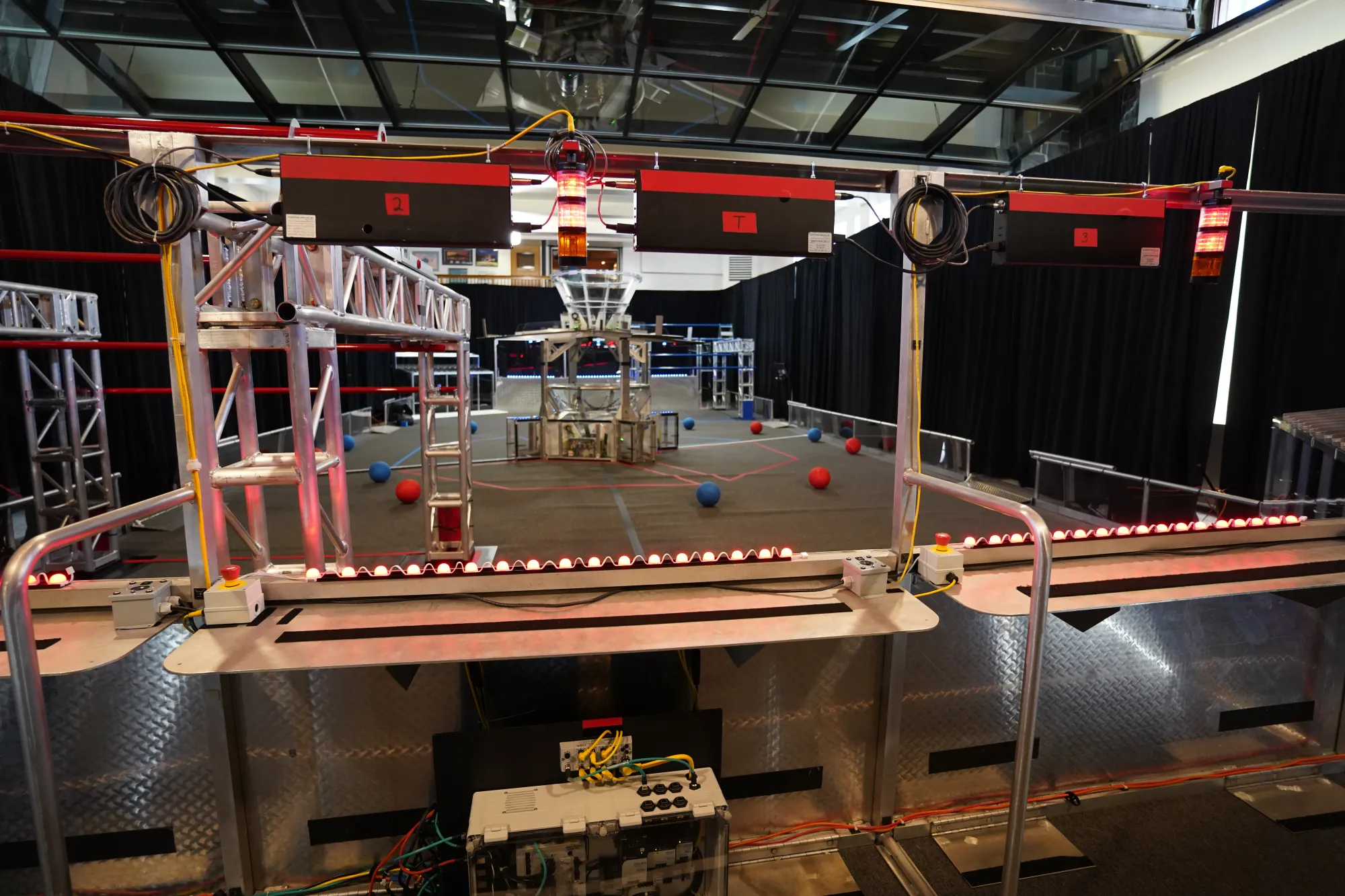

Our models learn to detect objects of interest by training on labeled examples. To collect images, take photos of the objects in real-world scenarios and upload them to your Roboflow workspace. For the 2022 FRC game, for example, this could mean photographing cargo staged in various locations around the field.

Another source of images is Roboflow Universe, a growing collection of thousands of public datasets and models, including several public FIRST datasets.

Here are some photos we took and uploaded to Roboflow:

Capturing pictures of the objects in a variety of placements and lighting conditions helps the model learn how to detect the object in a variety of situations.
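One way to make sure you actually cover that variety is to plan your shots up front. The short Python sketch below is our own illustration, not a Roboflow feature: it enumerates every combination of a few hypothetical placements, lighting conditions, and distances into a checklist you can work through while photographing. Swap in the conditions your robot will really encounter.

```python
from itertools import product

# Hypothetical capture conditions; replace them with the placements,
# lighting, and distances your robot will actually see on the field.
PLACEMENTS = ["hub", "terminal", "open field", "against wall"]
LIGHTING = ["overhead", "dim", "backlit"]
DISTANCES_M = [1, 3, 5]


def shot_list(placements, lighting, distances):
    """Return one checklist entry for every combination of conditions."""
    return [
        f"{place} | {light} | {dist} m"
        for place, light, dist in product(placements, lighting, distances)
    ]


if __name__ == "__main__":
    shots = shot_list(PLACEMENTS, LIGHTING, DISTANCES_M)
    print(f"{len(shots)} shots planned")  # 4 placements * 3 lighting * 3 distances
    for shot in shots:
        print("[ ]", shot)
```

The number of combinations grows multiplicatively, so a few values per condition is usually enough to keep the list manageable.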

Expanding a Robotics Dataset using Augmentation and Preprocessing

Preprocessing and augmentation help computer vision models become more robust. Under the “Generate” tab, you can select transformations to apply to your data.

Here are some augmentations you can do to help your model train:

- Flip: This helps your model be insensitive to subject orientation.

- Grayscale: This forces models to learn to classify without color input.

- Noise: Adding noise helps models become more resilient to camera artifacts.

- Mosaic: Joins several training images together and can improve accuracy on smaller objects.
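To make the first three transformations concrete, here is a minimal, dependency-free Python sketch of generic versions of them, operating on an image stored as rows of (r, g, b) tuples. This is not Roboflow’s implementation; the function names and the ITU-R BT.601 grayscale weights are our own assumptions. Note that a horizontal flip must also mirror every bounding box, which is why hflip takes the boxes along with the image.

```python
import random

Pixel = tuple[int, int, int]
Image = list[list[Pixel]]
Box = tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), pixel edge coordinates


def hflip(image: Image, boxes: list[Box]) -> tuple[Image, list[Box]]:
    """Mirror the image left-to-right and mirror each box to match."""
    width = len(image[0])
    flipped = [list(reversed(row)) for row in image]
    # A box spanning [x0, x1] ends up spanning [width - x1, width - x0].
    new_boxes = [(width - x1, y0, width - x0, y1) for (x0, y0, x1, y1) in boxes]
    return flipped, new_boxes


def to_grayscale(image: Image) -> Image:
    """Replace each pixel with its luma, keeping three identical channels."""
    out = []
    for row in image:
        new_row = []
        for r, g, b in row:
            y = round(0.299 * r + 0.587 * g + 0.114 * b)  # BT.601 luma weights
            new_row.append((y, y, y))
        out.append(new_row)
    return out


def add_noise(image: Image, sigma: float, seed: int = 0) -> Image:
    """Add Gaussian noise to every channel, clamped to the 0-255 range."""
    rng = random.Random(seed)  # seeded so the result is reproducible

    def clamp(value: float) -> int:
        return max(0, min(255, round(value)))

    return [
        [tuple(clamp(c + rng.gauss(0, sigma)) for c in px) for px in row]
        for row in image
    ]
```

Grayscale keeps three channels so the model’s expected input shape does not change; Mosaic is omitted here because it also involves resizing and cropping several images at once.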

With Roboflow augmentations, you can generate datasets up to 3x the size of your training data for free.
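Here is a quick sketch of that arithmetic. It assumes each training image yields up to three augmented outputs and that validation and test images are left unaugmented, which is our understanding of how Roboflow applies augmentation; the function name is hypothetical.

```python
def generated_dataset_size(train: int, valid: int, test: int,
                           outputs_per_example: int = 3) -> dict:
    """Estimate split sizes after augmentation.

    Assumes every training image produces `outputs_per_example` images
    and that validation/test images are copied through unchanged.
    """
    if outputs_per_example < 1:
        raise ValueError("outputs_per_example must be at least 1")
    new_train = train * outputs_per_example
    return {
        "train": new_train,
        "valid": valid,
        "test": test,
        "total": new_train + valid + test,
    }


if __name__ == "__main__":
    # 100 labeled images split 70/20/10
    print(generated_dataset_size(70, 20, 10))
```

With 100 labeled images split 70/20/10, this gives 210 training images and 240 images in total, so the full dataset grows by less than 3x because only the training split is multiplied.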

Once your dataset has been generated, it will appear under the “Versions” tab. From there, you can train a robotics computer vision model with one click; this is the model we’ll deploy onto the Raspberry Pi and Luxonis OAK camera. After training finishes, you can visualize the model’s results.

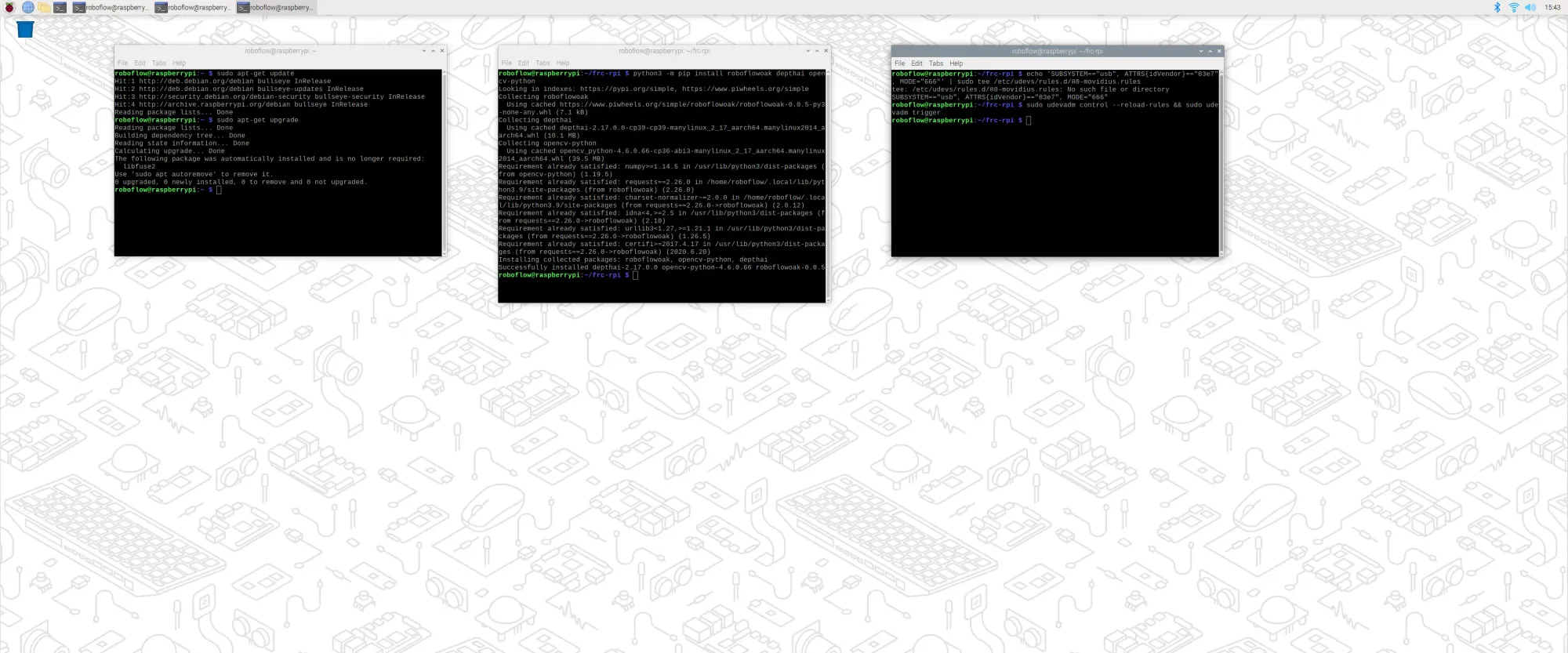

Installing dependencies

To interact with a Luxonis OAK camera, the Raspberry Pi needs some additional software packages, or dependencies. Install them by running the following commands in the terminal:

sudo apt-get update

sudo apt-get upgrade

python3 -m pip install roboflowoak==0.0.5 depthai opencv-python
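After installing, you can confirm that Python can find each package. This is a small sketch using only the standard library; the module names are our assumptions (opencv-python is imported as cv2, and we assume roboflowoak and depthai import under their package names).

```python
import importlib.util

# pip package name -> module name used in `import` statements (assumed).
REQUIRED_MODULES = {
    "roboflowoak": "roboflowoak",
    "depthai": "depthai",
    "opencv-python": "cv2",
}


def check_dependencies(modules=REQUIRED_MODULES) -> dict:
    """Map each pip package name to True if its module can be located."""
    return {pkg: importlib.util.find_spec(mod) is not None
            for pkg, mod in modules.items()}


if __name__ == "__main__":
    for pkg, found in check_dependencies().items():
        status = "OK" if found else f"MISSING (python3 -m pip install {pkg})"
        print(f"{pkg:15} {status}")
```

find_spec only locates a module without importing it, so the check runs quickly and doesn’t touch the camera.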

The Luxonis OAK camera also needs a udev rule on the Raspberry Pi so that it can be accessed over USB without root privileges. To add the rule, run the following commands in the terminal:

echo 'SUBSYSTEM=="usb", ATTRS{idVendor}=="03e7", MODE="0666"' | sudo tee /etc/udev/rules.d/80-movidius.rules

sudo udevadm control --reload-rules && sudo udevadm trigger
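To double-check that the rule was written, here is a minimal Python sketch that looks for the vendor ID and permission mode from the command above in the rules file. The function name is hypothetical, and it only checks the file contents; it does not confirm that udev has reloaded the rule.

```python
from pathlib import Path

RULE_PATH = Path("/etc/udev/rules.d/80-movidius.rules")
VENDOR_ID = "03e7"  # USB vendor ID from the udev rule above


def udev_rule_installed(path: Path = RULE_PATH, vendor_id: str = VENDOR_ID) -> bool:
    """Return True if the rules file exists and contains the expected rule."""
    try:
        text = path.read_text()
    except OSError:  # missing or unreadable file
        return False
    # Doubled braces produce literal { } in the f-string.
    return f'ATTRS{{idVendor}}=="{vendor_id}"' in text and 'MODE="0666"' in text


if __name__ == "__main__":
    print("udev rule found" if udev_rule_installed() else "udev rule missing")
```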

Running your Trained Model on the Luxonis OAK

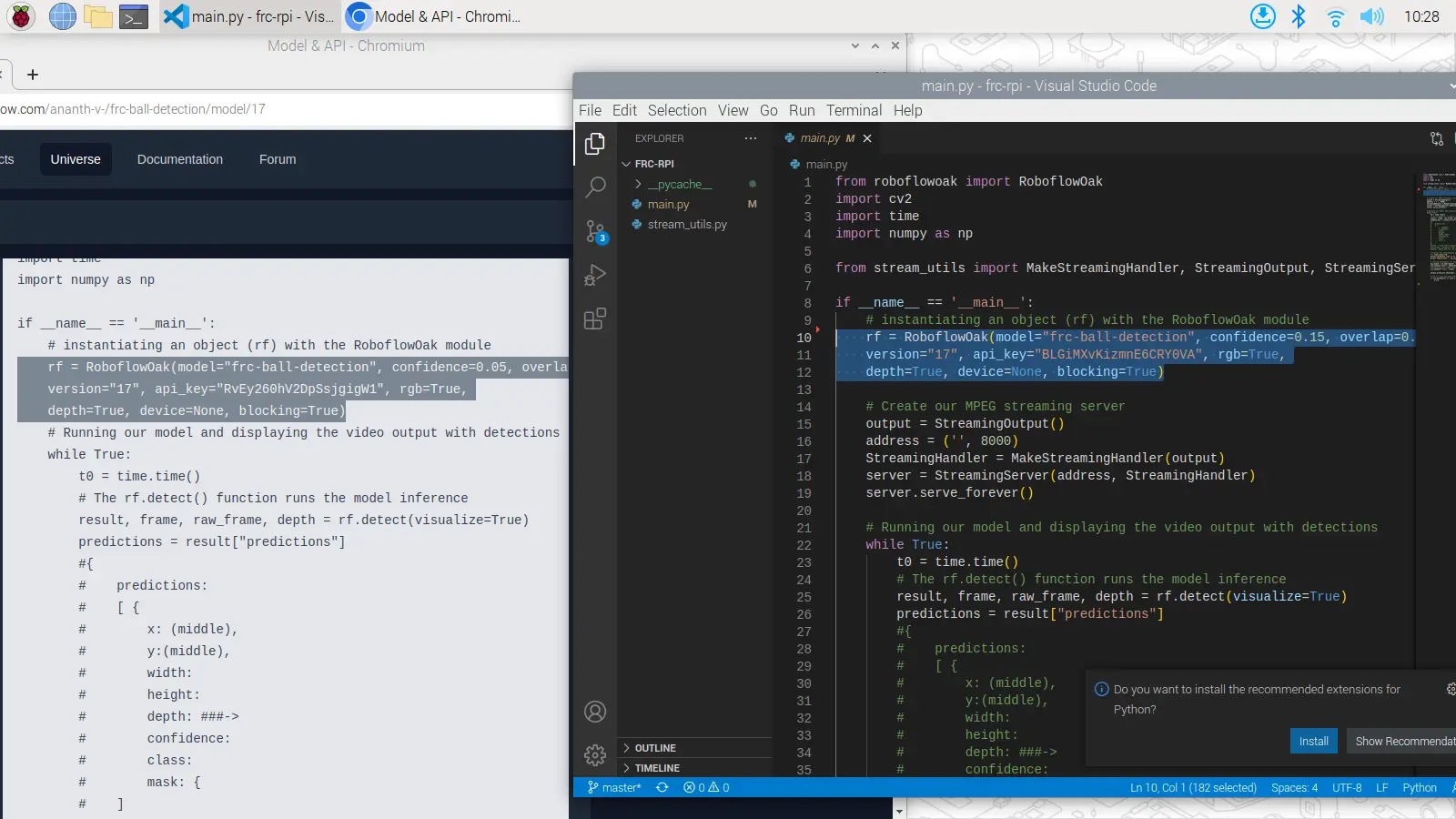

We’ve made a GitHub repository with handy snippets. Clone it by running the following commands:

git clone https://github.com/roboflow-ai/roboflow-api-snippets.git

cd roboflow-api-snippets/Python/LuxonisOak

Next, open main.py in that directory and edit lines 10 through 12 to specify the model, the model version, and your API key. You can find these values by clicking the “Luxonis OAK” tab under “Use with” on the model’s test page. We also encourage you to experiment with the other deployment options Roboflow offers.
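If you plan to commit your robot code to a repository, you may prefer not to hard-code the API key. One option is to read these three values from environment variables. The sketch below is our own suggestion; the variable names ROBOFLOW_MODEL, ROBOFLOW_VERSION, and ROBOFLOW_API_KEY are hypothetical, not something main.py reads by default.

```python
import os
from collections.abc import Mapping
from dataclasses import dataclass

# Hypothetical environment variable names.
REQUIRED_VARS = ("ROBOFLOW_MODEL", "ROBOFLOW_VERSION", "ROBOFLOW_API_KEY")


@dataclass(frozen=True)
class ModelConfig:
    model: str    # model ID from the "Luxonis OAK" deploy tab
    version: str  # model version, e.g. "1"
    api_key: str


def load_config(env: Mapping[str, str] = os.environ) -> ModelConfig:
    """Build a ModelConfig from environment variables, failing loudly if any are unset."""
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError(f"set these environment variables: {', '.join(missing)}")
    return ModelConfig(env["ROBOFLOW_MODEL"], env["ROBOFLOW_VERSION"],
                       env["ROBOFLOW_API_KEY"])
```

You would then use config.model, config.version, and config.api_key wherever main.py previously had the hard-coded values.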

To start running detections, run:

python3 ./main.py

In the terminal output, we see inference running at approximately 10 frames per second.
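If you want to track frame rate yourself, for example to compare settings, a rolling average over recent frames is steadier than timing a single frame. This is a generic sketch, not code from the snippets repository; the class name is hypothetical, and the injectable clock exists only to make it easy to test.

```python
import time
from collections import deque


class FPSCounter:
    """Rolling frames-per-second estimate over the last `window` frames."""

    def __init__(self, window: int = 30, clock=time.perf_counter):
        self._clock = clock
        self._stamps = deque(maxlen=window)  # oldest timestamps drop off automatically

    def tick(self) -> float:
        """Record one frame and return the current FPS (0.0 until two frames are seen)."""
        self._stamps.append(self._clock())
        if len(self._stamps) < 2:
            return 0.0
        elapsed = self._stamps[-1] - self._stamps[0]
        # N timestamps span N - 1 frame intervals.
        return (len(self._stamps) - 1) / elapsed if elapsed > 0 else 0.0
```

Create one FPSCounter before main.py’s inference loop and call tick() once per result; printing the value every few frames keeps the terminal readable.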

Building on top of this

By editing the main.py script, you can add your own functionality for your robot. For example, you can use a NetworkTables library such as pynetworktables to send the coordinates of detected objects over the local network to other devices.
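Raw pixel coordinates are often less useful to a drive controller than an angle to turn. Below is a minimal sketch that picks the most confident detection of one class and converts its horizontal center to a yaw angle using a pinhole camera model. Assumptions: each detection is a dict with "class", "confidence", and a center "x" in pixels (check the actual result format main.py gives you), and you supply your camera’s horizontal field of view; all function names are hypothetical.

```python
import math


def horizontal_angle_deg(center_x: float, image_width: int, hfov_deg: float) -> float:
    """Yaw from the camera axis to a point (pinhole model); positive means right of center."""
    focal_px = (image_width / 2) / math.tan(math.radians(hfov_deg) / 2)
    return math.degrees(math.atan((center_x - image_width / 2) / focal_px))


def best_target(detections, target_class):
    """Return the highest-confidence detection of `target_class`, or None."""
    matches = [d for d in detections if d["class"] == target_class]
    return max(matches, key=lambda d: d["confidence"], default=None)


def vision_message(detections, target_class, image_width, hfov_deg) -> dict:
    """Build the values a robot might read: whether a target exists and its yaw."""
    target = best_target(detections, target_class)
    if target is None:
        return {"has_target": False, "yaw_deg": 0.0}
    yaw = horizontal_angle_deg(target["x"], image_width, hfov_deg)
    return {"has_target": True, "yaw_deg": yaw}
```

With pynetworktables, each entry of the returned dict could then be published with a table’s putNumber or putBoolean method so the robot code can read it.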

By using the Raspberry Pi as a vision co-processor, you can easily integrate this system into your existing robotics control system.

Our Roboflow, Raspberry Pi, and Luxonis OAK system in action.

Cite this Post

Use the following entry to cite this post in your research:

Ananth Vivekanand. (Aug 3, 2022). Use Raspberry Pi and Luxonis OAK to Deploy Vision Models in Robotics. Roboflow Blog: https://blog.roboflow.com/raspberry-pi-luxonis-oak-computer-vision/