According to the Center for Construction Research and Training, struck-by accidents are a leading cause of death on construction sites. The Center cites OSHA saying that “the four most common struck-by hazards are being struck-by a flying, falling, swinging, or rolling object.”

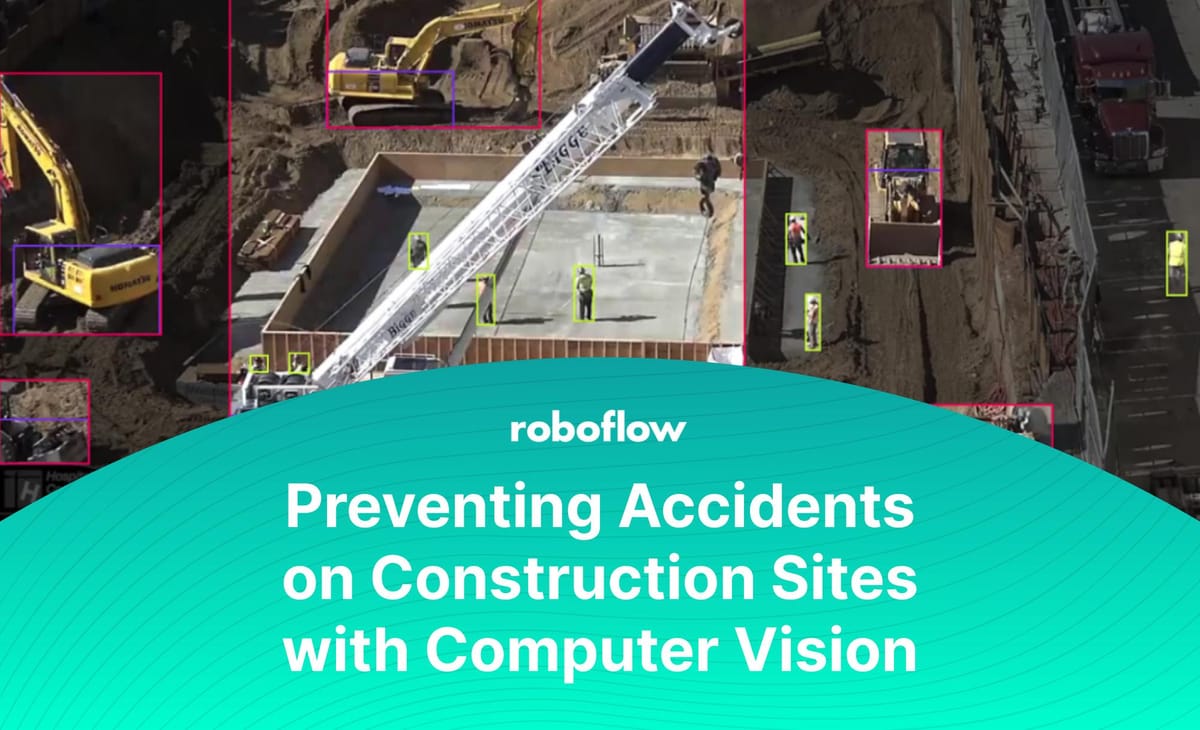

Governments and safety organizations have strict recommendations about how to prevent struck-by hazards from causing injury and harm on construction sites. Computer vision could reduce accident rates further by using cameras to alert people when they get close to a moving vehicle.

In this guide, we are going to discuss a project by a Roboflow community member, Troy, that tracks how close people are to moving machinery on a construction site. Information gathered by the model could be used to build a warning system for workers who are in danger zones.

Here is a video showing the model in action:


Understanding the problem and theorizing a solution

All computer vision projects begin with a problem statement. This project theorizes that computer vision could be used to identify workers who are dangerously close to construction equipment on work sites. This application could feature an early warning system to alert drivers that they are close to a person on the construction site, thus helping to avoid accidents.

To solve this problem, a model is built to identify machinery and people on a worksite. With help from a camera and an object detection model, computers can monitor a construction site’s safety as efficiently as a safety officer.

This can be done by using an object detection algorithm that detects each person, the base of a piece of equipment (the part that a person can touch from the ground, such as wheels), and an entire piece of equipment (including the top of a crane). Calculating the base of equipment was important for this use case as videos are recorded in 2D.

If the entirety of a piece of equipment were used to determine proximity to a vehicle, workers at the back of a construction site may appear close to the top of a crane in the foreground of the camera feed, depending on the position of the camera.
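The effect can be illustrated with a small sketch. The `Box` type, the `box_gap` helper, and all pixel coordinates below are hypothetical values chosen for illustration; they are not taken from the project's code or dataset.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned box in pixel coordinates (top-left and bottom-right corners)."""
    x1: float
    y1: float
    x2: float
    y2: float


def box_gap(a: Box, b: Box) -> float:
    """Shortest pixel distance between two boxes; 0.0 if they touch or overlap."""
    dx = max(a.x1 - b.x2, b.x1 - a.x2, 0.0)
    dy = max(a.y1 - b.y2, b.y1 - a.y2, 0.0)
    return (dx * dx + dy * dy) ** 0.5


# Hypothetical frame: a crane in the foreground whose boom reaches across the
# image, and a worker standing far behind it, near the top of the frame.
crane_full = Box(100, 50, 900, 700)   # whole crane, boom included
crane_base = Box(100, 500, 400, 700)  # the part reachable from the ground
worker = Box(800, 120, 830, 200)      # worker in the background

gap_to_full = box_gap(worker, crane_full)  # worker box lies inside the full crane box
gap_to_base = box_gap(worker, crane_base)  # measured against the base, the worker is far away
print(gap_to_full, gap_to_base)
```

Measured against the whole crane, the worker appears to be in contact with the machine; measured against the base, the same worker is hundreds of pixels away. This is the reason the base gets its own class.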

A script then compares the coordinates of each machine (specifically, the “bounding box” that identifies the location of the machine) with those from previous frames in the video to see if they have changed.

If the coordinates of the bounding box have changed between frames in the video, then the script can mark that equipment as active. Within the same script, we can use the coordinates of the bounding box around a person to calculate the distance between them and the base of the equipment.

Preparing data for and training the model

With a clear problem statement in mind and an idea of a solution, the next step is preparing data for the computer vision model. To gather data, a video of a construction site from YouTube was uploaded to Roboflow. Then Roboflow’s platform is used to annotate the video, drawing boxes around all of the objects of interest in the video: people, the base of equipment, and the entirety of a piece of equipment. You can access the full construction site dataset and model if you'd like to follow along and build this project.

Here is an example of an annotated image for the project:

This annotation features boxes around every object of interest in the frame: a crane, five diggers, and 10 people.

After annotating the images, the dataset is ready for training. Before training, an auto-orient preprocessing step is applied to prevent the orientation of images from affecting the training process. A range of augmentation techniques, listed below, is also applied to increase the sample size of the dataset. These augmentations also help the model perform better in different lighting conditions, from different camera angles, and with noise present in the data (as might be the case when, for example, it is raining).

Here are the augmentations added:

With a working model, the next step is to write a script that identifies workers who are dangerously close to equipment.

Writing a script to identify workers dangerously close to equipment

Let's begin with writing code to capture frames from different construction videos. These frames could then be annotated with results from the Roboflow API and altered to include labels that indicate whether a worker is too close to equipment.

The code first divides a construction video into a range of different frames. In production, this stage would not be necessary because the model could be hooked up to a live video camera. Then, each frame is sent to the Roboflow API. The API returns a JSON object that shows a list of predictions featuring any people and equipment featured in the image of the construction site.
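A minimal sketch of handling one such response is shown below. It assumes each prediction carries `x`, `y`, `width`, `height`, `class`, and `confidence` fields, with `x` and `y` marking the center of the box. The class names (`person`, `base`), the `Detection` type, the `parse_predictions` helper, and the confidence cutoff are assumptions made for illustration, not details confirmed by the project.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    label: str
    confidence: float
    cx: float      # box center, in pixels
    cy: float
    width: float
    height: float

    @property
    def corners(self) -> tuple[float, float, float, float]:
        """Convert center/size to (x1, y1, x2, y2) corners."""
        half_w, half_h = self.width / 2, self.height / 2
        return (self.cx - half_w, self.cy - half_h, self.cx + half_w, self.cy + half_h)


def parse_predictions(raw: str, min_confidence: float = 0.4) -> dict[str, list[Detection]]:
    """Group the detections in one response by class label, dropping weak ones."""
    grouped: dict[str, list[Detection]] = {}
    for p in json.loads(raw).get("predictions", []):
        if p["confidence"] < min_confidence:
            continue
        det = Detection(p["class"], p["confidence"], p["x"], p["y"], p["width"], p["height"])
        grouped.setdefault(det.label, []).append(det)
    return grouped


# Hand-written sample response (assumed field layout, made-up values).
sample = json.dumps({"predictions": [
    {"x": 320, "y": 410, "width": 40, "height": 110, "class": "person", "confidence": 0.91},
    {"x": 600, "y": 450, "width": 300, "height": 160, "class": "base", "confidence": 0.88},
    {"x": 150, "y": 90, "width": 30, "height": 80, "class": "person", "confidence": 0.22},
]})
detections = parse_predictions(sample)
print({label: len(items) for label, items in detections.items()})
```

Grouping by class up front keeps the later steps simple, since people and equipment bases are handled differently.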

This preparatory work is then used to build a system that identifies the proximity of each worker to different pieces of equipment. The script keeps track of the coordinates of all workers and pieces of machinery in a list. If a person is close to a piece of equipment, a record is taken to note that a worker may be in danger.
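One way to sketch this step is to compare every worker with every equipment base and record the pairs that fall under a distance threshold. The article does not say which distance measure or threshold the project uses, so the center-to-center distance, the `Tracked` type, the `find_close_pairs` helper, the names, and the 150-pixel threshold below are all assumptions.

```python
from dataclasses import dataclass
from math import hypot


@dataclass(frozen=True)
class Tracked:
    name: str   # hypothetical identifier, e.g. "worker-1" or "digger-1"
    cx: float   # bounding box center, in pixels
    cy: float


def find_close_pairs(workers, bases, max_distance):
    """Return (worker, base, distance) for each pair closer than max_distance pixels."""
    pairs = []
    for w in workers:
        for b in bases:
            distance = hypot(w.cx - b.cx, w.cy - b.cy)
            if distance < max_distance:
                pairs.append((w.name, b.name, round(distance, 1)))
    return pairs


# Made-up coordinates for a single frame.
workers = [Tracked("worker-1", 300, 400), Tracked("worker-2", 900, 150)]
bases = [Tracked("digger-1", 360, 480), Tracked("crane-1", 100, 600)]

records = find_close_pairs(workers, bases, max_distance=150)
print(records)
```

A fixed pixel threshold ignores perspective, so a real deployment would likely need a threshold that depends on the camera position or on the apparent size of the equipment.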

At this stage, the model would raise an alarm any time a worker was close to equipment. This would return a lot of false positives, as a worker mounting a crane to begin their work would be flagged as dangerously close to a piece of equipment. That’s where the next stage of work comes in: only flag workers if they are too close to a piece of equipment that is in motion.
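The filtering rule itself is small. The sketch below assumes the script already has a list of (worker, equipment) pairs that are close to each other and a set of equipment currently considered to be in motion; the `caution_labels` helper and all names are hypothetical.

```python
def caution_labels(close_pairs, active_equipment):
    """Return the workers whose nearby equipment is currently moving."""
    return sorted({worker for worker, equipment in close_pairs if equipment in active_equipment})


# Made-up data: three workers are near equipment, but only the digger is moving.
close_pairs = [("worker-1", "digger-1"), ("worker-2", "crane-1"), ("worker-3", "digger-1")]
active_equipment = {"digger-1"}

flagged = caution_labels(close_pairs, active_equipment)
print(flagged)
```

The worker next to the stationary crane is left out, which is the behavior needed to avoid flagging someone who is simply climbing into a parked machine.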

To identify whether a piece of equipment is in motion, the script keeps track of the predictions in the last four frames. If a piece of equipment has changed position more than a certain number of pixels between frames, the script adds a label to the equipment to indicate “Active”.

This use case has to consider “what happens if the coordinates of a bounding box have moved slightly?” This is a crucial consideration because the trained model may return slightly varying coordinates indicating the position of a person or a piece of equipment. Troy explained he used the following approach to identify whether an object is moving:

To determine if a machinery is active, we save coordinate data from previous frames and use this data with current data. If we go through each bounding box’s coordinates from the current and previous frames and find the ones with a close center, we can determine that these two are the same object. If these centers are within an acceptable distance of each other to determine they are the same object, yet are far enough to say they have moved, then we can determine this object as an active object.
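The approach described above can be sketched with two thresholds on the distance between box centers. This is an illustrative reading of the description, not Troy's actual script: the threshold values, the `is_active` helper, and the sample coordinates are assumptions, while the four-frame history follows the text.

```python
from collections import deque
from math import hypot

MIN_MOVE = 5.0    # smaller shifts are treated as detector jitter (assumed value)
MAX_MATCH = 40.0  # larger shifts are treated as a different object (assumed value)
HISTORY = 4       # number of previous frames to remember


def is_active(center, history):
    """True if the nearest earlier center is the same object and it has moved."""
    for past_centers in history:
        if not past_centers:
            continue
        nearest = min(hypot(center[0] - p[0], center[1] - p[1]) for p in past_centers)
        if MIN_MOVE <= nearest <= MAX_MATCH:
            return True
    return False


# Made-up equipment centers over four frames: the first machine only jitters
# by a pixel, the second one moves 12 pixels to the right every frame.
frames = [
    [(100, 100), (500, 300)],
    [(101, 100), (512, 300)],
    [(100, 101), (524, 300)],
    [(100, 100), (536, 300)],
]

history = deque(maxlen=HISTORY)
for centers in frames:
    flags = [is_active(c, history) for c in centers]
    history.append(centers)  # the deque drops the oldest frame automatically

print(flags)  # flags for the final frame
```

The lower threshold absorbs the small coordinate variations the model produces for stationary objects, and the upper threshold stops two different machines from being mistaken for one machine that jumped across the frame.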

Then, the script draws a bounding box around each detected object in each frame. This is a crucial step because it lets you visualize how the model performed on a video recording.

Further, a table is added in the top right corner of the image feed to show how many workers are in the frame, how many machines are active, and how many times workers have been dangerously close to equipment, among other pieces of information. This tracker summarizes what has been seen in a scene thus far.

Workers who were close to a piece of equipment were marked with a label called “CAUTION”, as seen below:

In the image above, one worker is close to a moving piece of machinery. The movement in the machinery is denoted by the “Active” label. The worker is given an orange “CAUTION” label to indicate they are too close to the vehicle. Another worker is close to the top of a crane and is also marked with the “CAUTION” label. Other vehicles are in the background, but they are not labeled as active because they have not moved in recent frames.

The annotated frames are then recomposed into a video and saved to a file for later analysis. As mentioned earlier, this step would not be necessary in a model deployed to production on a construction site. The model could be run live to analyze the site and flag safety issues in real time.

Conclusion

Monitoring the proximity of workers to moving equipment is a large step toward an alert system that notifies drivers when there are people too close to their vehicle. In production, a model such as this could be used to send notifications to monitors in construction vehicles.

For example, a red danger icon could appear on a screen in a crane to indicate there is someone close to the base. This way, a driver has an additional system to monitor for workers near their equipment in case they haven’t noticed someone.

Incidents could be recorded in a record book that is kept up to date with qualitative information about an incident so that a construction company knows exactly what happened when a worker got close to moving equipment.

If you are interested in training your own computer vision model, check out Roboflow. We have created an end-to-end computer vision platform that can assist you with all of your needs when building a model, from preparing your data to training and deploying multiple versions of your model.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Dec 7, 2022). Preventing Accidents on Construction Sites with Computer Vision. Roboflow Blog: https://blog.roboflow.com/preventing-accidents-on-construction-sites-with-computer-vision/