Performing real-time video inference is crucial for many applications like autonomous vehicles, security systems, logistics, and more. However, setting up a robust video inference pipeline can be time-consuming. You need a trained computer vision model, inference code, and visualization tools to annotate each frame. That's a lot of overhead just to run a model on a video!

In this post, I'll show how to run scalable video inference with pre-trained models from Roboflow Universe using just a few lines of Python.

We'll use a logistics object detection model from Roboflow Universe, then use the Supervision library to draw custom annotations such as box masks, labels, and tracker IDs on top of the detected objects.

Here is a quick overview of the steps we'll cover:

- Getting started

- Load a custom model from Roboflow Universe

- Run inference on a video

- Apply custom annotations

- Annotate frames

- Write the annotated video with Supervision

Use Case: Logistics Monitoring

Let's look at a use case in logistics: monitoring inventory in warehouses and distribution centers. Tracking inventory and assets accurately is critical, but it is often a manual process. Instead, we can record video feeds inside warehouses and use an object detection model to identify and locate important assets like pallets, boxes, carts, and forklifts. Finally, we can add custom overlays such as labels, box outlines, and tracking to build an inventory monitoring system.
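Per-frame detection counts can overstate inventory, because the same pallet shows up in many consecutive frames. Once a tracker assigns persistent IDs (this post uses Supervision's `ByteTrack` for that), counting distinct IDs per class gives a better estimate. The sketch below assumes a simplified, hypothetical input of one list of `(tracker_id, class_name)` pairs per frame; the `count_unique_assets` helper is illustrative and not part of Roboflow or Supervision.

```python
from collections import defaultdict


def count_unique_assets(frames):
    """Count distinct tracked objects per class.

    `frames` is a list of frames; each frame is a list of
    (tracker_id, class_name) pairs. This input shape is a hypothetical
    simplification of tracked detections, used only for illustration.
    """
    seen = defaultdict(set)  # class_name -> set of tracker IDs seen so far
    for frame in frames:
        for tracker_id, class_name in frame:
            seen[class_name].add(tracker_id)
    return {class_name: len(ids) for class_name, ids in seen.items()}


# Hypothetical tracker output for three frames: pallet #1 appears in every frame.
frames = [
    [(1, "pallet"), (2, "forklift")],
    [(1, "pallet"), (2, "forklift"), (3, "pallet")],
    [(1, "pallet"), (3, "pallet")],
]
print(count_unique_assets(frames))  # {'pallet': 2, 'forklift': 1}
```

Naively summing the per-frame detections would report seven objects here, while the tracked count reports two pallets and one forklift.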

Getting Started

First, install the dependencies (for example, `pip install roboflow supervision opencv-python`) and import them:

```python
import os

import cv2
import numpy as np
import supervision as sv
from roboflow import Roboflow
```

The `roboflow` package lets us load a Universe model and submit videos to Roboflow's hosted video inference, which runs as a background job on Roboflow's servers. Supervision provides annotators that let us customize how detections are drawn on the output video.
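Roboflow's detection predictions describe each box by its center point plus its width and height (`x`, `y`, `width`, `height`, in pixels), while Supervision stores boxes as corner coordinates (`xyxy`). `sv.Detections.from_inference` performs this conversion for us later on; the sketch below only spells out the arithmetic. The `center_to_xyxy` helper and the sample prediction are hypothetical.

```python
def center_to_xyxy(pred):
    """Convert a Roboflow-style center box to (x_min, y_min, x_max, y_max).

    `pred` is assumed to hold `x`, `y` (the box center), `width`, and
    `height` in pixels, matching the fields of a Roboflow prediction.
    """
    half_w = pred["width"] / 2
    half_h = pred["height"] / 2
    return (
        pred["x"] - half_w,
        pred["y"] - half_h,
        pred["x"] + half_w,
        pred["y"] + half_h,
    )


# Hypothetical prediction for a pallet centered at (320, 240).
pallet = {"x": 320, "y": 240, "width": 100, "height": 60, "class": "pallet", "confidence": 0.91}
print(center_to_xyxy(pallet))  # (270.0, 210.0, 370.0, 270.0)
```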

Load Custom Model from Roboflow Universe

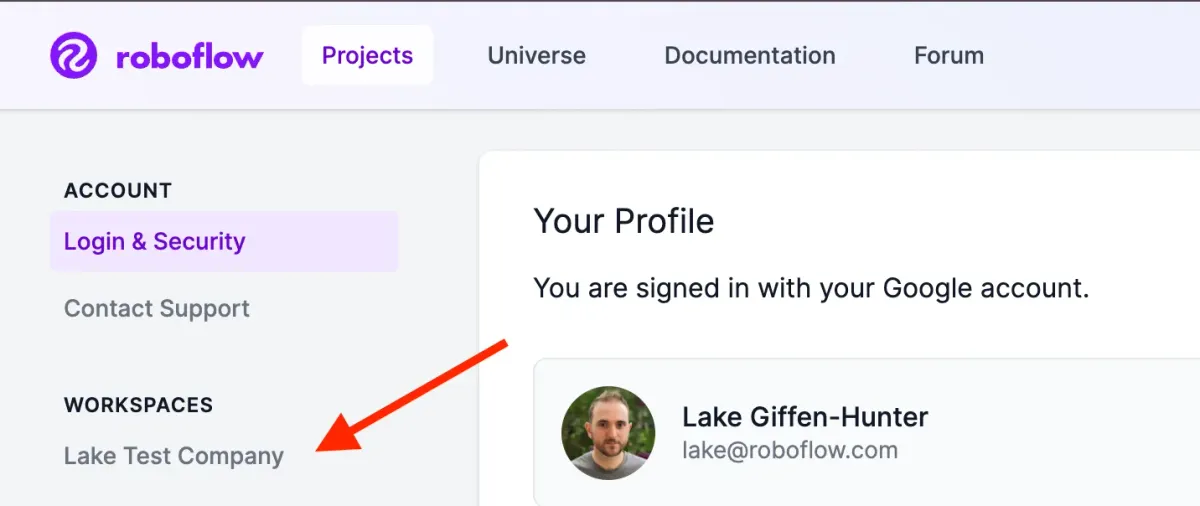

Next, we configure our Roboflow project. You can pick any deployed model from Roboflow Universe.

```python
PROJECT_NAME = "logistics-sz9jr"
VIDEO_FILE = "example.mp4"
ANNOTATED_VIDEO = "output.mp4"

# Read the API key from an environment variable rather than hard-coding it.
rf = Roboflow(api_key=os.environ["ROBOFLOW_API_KEY"])
project = rf.workspace().project(PROJECT_NAME)
model = project.version(2).model
```

Run Video Inference

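Next, we call `predict_video` to submit the video to Roboflow's hosted inference, sampling it at 5 frames per second (`fps=5`). The call returns a job ID, and `poll_until_video_results` waits for that job to finish and returns the predictions: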

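```python
# Submit the video for hosted batch inference, sampled at 5 frames per second.
job_id, signed_url, expire_time = model.predict_video(
    VIDEO_FILE,
    fps=5,
    prediction_type="batch-video",
)

# Block until the inference job completes, then fetch its results.
results = model.poll_until_video_results(job_id)
```

Apply Custom Annotations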

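Supervision ships a range of annotators. In this example, we use `BoxMaskAnnotator` to fill each detected box with a translucent color and `LabelAnnotator` to draw a label next to it. We also create a `ByteTrack` tracker so each object keeps a consistent ID across frames. The commented-out lines show other annotators you can swap in: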

```python
box_mask_annotator = sv.BoxMaskAnnotator()
label_annotator = sv.LabelAnnotator()
tracker = sv.ByteTrack()

# Alternative annotators you can swap in:
# box_annotator = sv.BoundingBoxAnnotator()
# halo_annotator = sv.HaloAnnotator()
# corner_annotator = sv.BoxCornerAnnotator()
# circle_annotator = sv.CircleAnnotator()
# blur_annotator = sv.BlurAnnotator()
# heat_map_annotator = sv.HeatMapAnnotator()
```

Annotate Frames

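To annotate the video, we'll define a function called `annotate_frame` that uses the results from video inference. Because inference ran at 5 frames per second, which is usually lower than the source video's frame rate, many frames have no result of their own. For each frame, the function:

- Converts the frame number to a timestamp and picks the inference result with the closest time offset

- Converts that result into Supervision `Detections` and updates the tracker

- Overlays box masks and labels on a copy of the frame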
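The first step is the subtle one, so here it is on its own. `annotate_frame` finds the closest entry in `results['time_offset']` with a linear `min(...)` scan on every frame. Assuming the offsets are sorted in increasing time order (worth verifying for your own results), a binary search with Python's `bisect` module returns the same index in logarithmic time. The `nearest_result_index` helper and the sample offsets, one every 0.2 seconds as you might expect from `fps=5`, are hypothetical.

```python
from bisect import bisect_left


def nearest_result_index(time_offsets, t):
    """Return the index of the offset closest to t (the earlier one on ties).

    Assumes `time_offsets` is non-empty and sorted in ascending order.
    """
    i = bisect_left(time_offsets, t)  # first position with offset >= t
    if i == 0:
        return 0
    if i == len(time_offsets):
        return len(time_offsets) - 1
    before, after = time_offsets[i - 1], time_offsets[i]
    # Prefer the earlier sample on an exact tie, matching min()'s behavior.
    return i - 1 if t - before <= after - t else i


# Hypothetical: a 30 fps video whose inference results arrive every 0.2 s.
video_fps = 30.0
time_offsets = [0.0, 0.2, 0.4, 0.6, 0.8]
print([nearest_result_index(time_offsets, n / video_fps) for n in (0, 4, 7, 29)])  # [0, 1, 1, 4]
```

With these numbers, roughly six consecutive video frames reuse the same inference result, which is why the tracker and annotators see repeated detections between samples.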

```python
# Read the source video's frame rate so frame numbers can be converted to seconds.
cap = cv2.VideoCapture(VIDEO_FILE)
frame_rate = cap.get(cv2.CAP_PROP_FPS)
cap.release()


def annotate_frame(frame: np.ndarray, frame_number: int) -> np.ndarray:
    try:
        # Pick the inference result whose timestamp is closest to this frame.
        time_offset = frame_number / frame_rate
        closest_time_offset = min(results["time_offset"], key=lambda t: abs(t - time_offset))
        index = results["time_offset"].index(closest_time_offset)
        detection_data = results[PROJECT_NAME][index]

        # Wrap the predictions in the structure sv.Detections.from_inference expects.
        roboflow_format = {
            "predictions": detection_data["predictions"],
            "image": {"width": frame.shape[1], "height": frame.shape[0]},
        }
        detections = sv.Detections.from_inference(roboflow_format)
        detections = tracker.update_with_detections(detections)

        # Build labels from the tracked detections so they stay aligned with them:
        # the tracker can drop or reorder detections relative to the raw predictions.
        labels = [
            f"#{tracker_id} {class_name}"
            for tracker_id, class_name in zip(detections.tracker_id, detections.data["class_name"])
        ]
    except (IndexError, KeyError, ValueError) as e:
        print(f"Exception in processing frame {frame_number}: {e}")
        detections = sv.Detections.empty()
        labels = []

    annotated_frame = box_mask_annotator.annotate(frame.copy(), detections=detections)
    annotated_frame = label_annotator.annotate(annotated_frame, detections=detections, labels=labels)
    return annotated_frame
```

Write Output Video

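Finally, we pass `annotate_frame` to `sv.process_video`, which reads each frame of `VIDEO_FILE`, runs the callback on it, and writes the annotated frames to `ANNOTATED_VIDEO`: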

```python
sv.process_video(
    source_path=VIDEO_FILE,
    target_path=ANNOTATED_VIDEO,
    callback=annotate_frame,
)
```

Conclusion

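Combining Roboflow's hosted video inference with Supervision's trackers and annotators gives an end-to-end pipeline for adding visual intelligence to video feeds, from detection to tracking to an annotated output video. The same pattern is a practical starting point for inventory management, warehouse monitoring, and more.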

The full code is available on Colab. Happy inferring!

Cite this Post

Use the following entry to cite this post in your research:

Arty Ariuntuya. (Nov 27, 2023). Roboflow Video Inference with Custom Annotators. Roboflow Blog: https://blog.roboflow.com/roboflow-video-inference-with-custom-annotators/