In May, Google released PaliGemma, a new lightweight and open Vision Language Model (VLM). PaliGemma is relatively small (three billion parameters), has terms that permit commercial use, and can be fine-tuned. Combined, these factors make PaliGemma an exciting model for computer vision tasks.

As someone who builds computer vision applications, I was curious which real-world tasks this model could improve. Let's build an application with PaliGemma and find out.

What We're Building

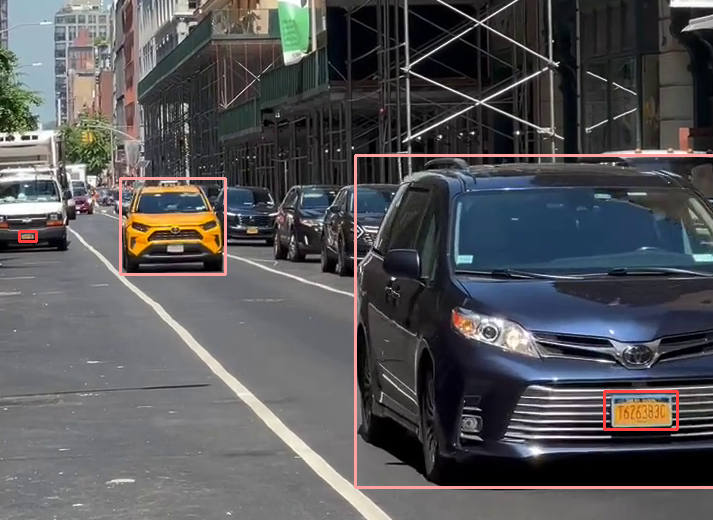

To see some of the benefits PaliGemma has to offer, let's build a vehicle analytics application. Our application will detect vehicles, identify each vehicle's make, color, and type, read its license plate, and save this information to a spreadsheet for later review. Here is a little sneak peek of the running application.

To build this application, let's break the problem down into smaller steps and talk through some requirements of the system.

1. Detect vehicles and license plates.
2. Crop and save unique detections.
3. Classify vehicle color, brand, and type.
4. Read the license plate.
5. Save results to a CSV file.

We also want the application to run in real time and to be self-hosted on our own hardware.
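Before wiring up any models, it can help to pin down what one processed vehicle looks like. The sketch below is a hypothetical record type. `VehicleRecord` and `to_row` are illustrative names and are not part of Roboflow's tooling. Its keys match the CSV columns used later in this post.

```python
from dataclasses import dataclass


# Hypothetical record for one processed vehicle; field names are illustrative
# and mirror the CSV columns ("Color", "Brand", "Car Type", "Plate") used later.
@dataclass
class VehicleRecord:
    color: str
    brand: str
    car_type: str
    plate: str

    def to_row(self) -> dict:
        """Return a dict keyed by the CSV column names, ready for csv.DictWriter."""
        return {
            "Color": self.color,
            "Brand": self.brand,
            "Car Type": self.car_type,
            "Plate": self.plate,
        }


# Made-up example values, for illustration only.
record = VehicleRecord(color="white", brand="toyota", car_type="suv", plate="ABC1234")
print(record.to_row())
```

Keeping the four answers together in one object makes it harder to mix up columns when a row is written.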

Detect vehicles and license plates

For this application, we'll use a fine-tuned YOLOv8 model that detects license plates and vehicles. I trained the model in 30 minutes, using Roboflow to auto-label my data, and then used Inference to run it on a video stream. We'll also use the Supervision package, our computer vision Swiss Army knife, to draw bounding boxes, and OpenCV (`cv2`) to display the annotated frames.

```python
from inference import InferencePipeline
import supervision as sv
import cv2

# Draws a box around each detection (renamed sv.BoxAnnotator in newer supervision releases).
bb = sv.BoundingBoxAnnotator()


def call_back(results, frame):
    # Convert the raw model output into a supervision Detections object.
    detections = sv.Detections.from_inference(results)
    annotated_frame = bb.annotate(
        scene=frame.image.copy(),
        detections=detections,
    )
    cv2.imshow("Vehicle Analytics", annotated_frame)
    cv2.waitKey(1)


pipeline = InferencePipeline.init(
    model_id="vehicle-recognition-z5mpj/4",
    api_key="ROBOFLOW_API_KEY",  # replace with your Roboflow API key
    video_reference=["output.MOV"],
    on_prediction=call_back,
)

pipeline.start()
pipeline.join()
```
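The application needs to keep up with a live stream, so it can be useful to measure how many frames per second the callback actually handles. Here is a minimal sketch of a rolling FPS counter. `FpsMeter` is a hypothetical helper and is not part of Inference or Supervision. The optional `now` argument exists only so the class can be exercised with fixed timestamps.

```python
import time
from collections import deque
from typing import Optional


class FpsMeter:
    """Rolling frames-per-second estimate over the last `window` frames (hypothetical helper)."""

    def __init__(self, window: int = 30):
        # Only the most recent `window` timestamps are kept.
        self._stamps = deque(maxlen=window)

    def tick(self, now: Optional[float] = None) -> float:
        """Record one frame and return the current FPS (0.0 until two frames are seen)."""
        self._stamps.append(time.perf_counter() if now is None else now)
        if len(self._stamps) < 2:
            return 0.0
        elapsed = self._stamps[-1] - self._stamps[0]
        # N timestamps span N - 1 frame intervals.
        return (len(self._stamps) - 1) / elapsed if elapsed > 0 else 0.0
```

One way to use it is to create a single meter at module level and call `meter.tick()` once at the top of `call_back`, then print the returned value. A windowed average smooths out the jitter of individual frames.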

Crop and save unique detections

This part of the application is the most complex, so let's work through it step by step.

First, we need a mechanism to track unique vehicles and trigger the classification and OCR tasks on those specific detections. To give PaliGemma the best chance at accurate OCR and classification, we want to grab a frame of each vehicle when it is clearly visible. For this, we'll use ByteTrack to record unique vehicles as they enter a polygon zone at the far right of the frame, where vehicles are closest to the camera.

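```python
import numpy as np
import threading

...  # imports and setup from earlier

# ByteTrack assigns a persistent tracker_id to each object across frames.
tracker = sv.ByteTrack()

# Narrow strip at the far right of the frame, where vehicles are closest to the camera.
detection_polygon = np.array([[717, 6], [717, 1270], [681, 1271], [683, 9], [714, 9]])
detection_zone = sv.PolygonZone(polygon=detection_polygon, triggering_anchors=(sv.Position.BOTTOM_RIGHT,))

# Tracker IDs of vehicles that have already been processed.
unique_cars = set()


def background_task(results, frame):
    # Placeholder; filled in below.
    print(results, frame)


def call_back(results, frame):
    ...  # detection and annotation code from earlier

    tracked_detections = tracker.update_with_detections(detections)
    # Keep only vehicles (class_id 2 is assumed to be the vehicle class in this model).
    detected_cars = tracked_detections[tracked_detections.class_id == 2]
    cars_in_zone = detected_cars[detection_zone.trigger(detected_cars)]

    for car in cars_in_zone.tracker_id:
        if car not in unique_cars:
            unique_cars.add(car)
            # Run the slow classification and OCR work off the video thread.
            background_thread = threading.Thread(target=background_task, args=(results, frame))
            background_thread.start()

    ...
```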

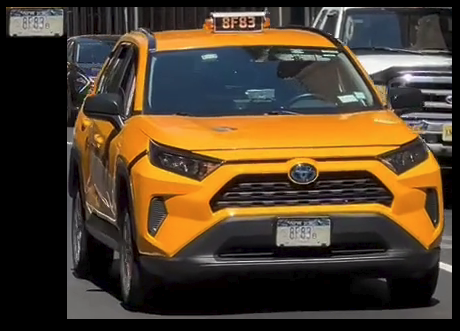

Now that we have a frame in which a unique vehicle and its license plate are clearly visible, we need to isolate the vehicle and plate closest to the camera. We'll do this in a background task, using a second polygon zone that is slightly larger than the detection zone. Then we'll crop the license plate and vehicle regions and display them to the user.
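Both zones use `triggering_anchors=(sv.Position.BOTTOM_RIGHT,)`, so a detection counts as inside a zone based on a single corner of its bounding box. The sketch below is a rough mental model of that check: a plain even-odd ray-casting test, not Supervision's actual implementation. `point_in_polygon` and `bottom_right_in_zone` are hypothetical names.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]


def point_in_polygon(point: Point, polygon: Sequence[Point]) -> bool:
    """Even-odd ray casting: count polygon edges crossed by a ray going right from `point`."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does this edge straddle the horizontal line through the point?
        if (y1 > y) != (y2 > y):
            # x coordinate where the edge crosses that line.
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def bottom_right_in_zone(xyxy: Tuple[float, float, float, float], polygon: Sequence[Point]) -> bool:
    """Test only the box's bottom-right corner (x2, y2) against the polygon."""
    _, _, x2, y2 = xyxy
    return point_in_polygon((x2, y2), polygon)


# Detection strip from the post.
DETECTION_POLYGON = [(717, 6), (717, 1270), (681, 1271), (683, 9), (714, 9)]
print(bottom_right_in_zone((600, 500, 700, 600), DETECTION_POLYGON))
```

Anchoring on one corner means a large vehicle triggers as soon as its leading edge reaches the strip, even though most of the box is still outside it.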

```python
from PIL import Image

...

# Wider zone covering the right-hand part of the frame, used to pick out
# the closest vehicle and its license plate.
filter_polygon = np.array([[718, 5], [373, 4], [392, 1267], [714, 1266], [712, 14]])
filter_zone = sv.PolygonZone(polygon=filter_polygon, triggering_anchors=(sv.Position.BOTTOM_RIGHT,))

...


def get_crops(detections, image):
    """Group RGB crops of each detection by class name."""
    result = {}
    for bbox, class_name in zip(detections.xyxy, detections.data["class_name"]):
        x1, y1, x2, y2 = bbox
        crop = image[int(y1):int(y2), int(x1):int(x2)]
        # OpenCV frames are BGR; PIL expects RGB.
        crop = cv2.cvtColor(crop, cv2.COLOR_BGR2RGB)
        result.setdefault(class_name, []).append(crop)
    return result


def background_task(results, frame):
    detections = sv.Detections.from_inference(results)
    car_features = detections[filter_zone.trigger(detections)]
    crops = get_crops(car_features, frame.image)

    # Skip this frame if either the plate or the vehicle is missing from the zone.
    if "license-plate" not in crops or "vehicle" not in crops:
        return

    plate_img = Image.fromarray(crops["license-plate"][0])
    vehicle_img = Image.fromarray(crops["vehicle"][0])

    # Paste the plate and the vehicle side by side into one image.
    total_width = plate_img.width + vehicle_img.width
    max_height = max(plate_img.height, vehicle_img.height)
    combined_img = Image.new("RGB", (total_width, max_height))
    combined_img.paste(plate_img, (0, 0))
    combined_img.paste(vehicle_img, (plate_img.width, 0))
    combined_img.show()

    ...
```
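`get_crops` slices the frame directly with `int()` box coordinates. A negative index in a NumPy slice counts from the end of the array, so a box that pokes slightly past the top or left edge could produce an empty or wrong crop. A defensive option is to clamp each box to the image size first. `clamp_box` is a hypothetical helper, assuming boxes in `xyxy` pixel order as Supervision uses.

```python
from typing import Optional, Tuple


def clamp_box(
    xyxy: Tuple[float, float, float, float], width: int, height: int
) -> Optional[Tuple[int, int, int, int]]:
    """Convert a float xyxy box to integer pixel bounds inside a width x height image.

    Returns None if the clamped box has no area.
    """
    x1, y1, x2, y2 = xyxy
    # int() truncates toward zero; then clamp each coordinate into the image.
    x1 = min(max(int(x1), 0), width)
    y1 = min(max(int(y1), 0), height)
    x2 = min(max(int(x2), 0), width)
    y2 = min(max(int(y2), 0), height)
    if x2 <= x1 or y2 <= y1:
        return None
    return x1, y1, x2, y2
```

Inside `get_crops`, you could compute `h, w = image.shape[:2]`, call `clamp_box(bbox, w, h)`, skip the detection when it returns `None`, and otherwise slice `image[y1:y2, x1:x2]`.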

Using PaliGemma

Now for the fun part! We'll use Inference to load the PaliGemma weights and use the model for our classification and OCR tasks. First, make sure the correct dependencies are installed by following the setup steps in the Roboflow PaliGemma training notebook. Because PaliGemma is a VLM, we interact with it by passing it an image and a text prompt. Note that the first run takes a few minutes while the weights download.

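```python
from inference.models.paligemma.paligemma import PaliGemma

...

# Loads the PaliGemma weights (downloaded on first use).
pg = PaliGemma(api_key="ROBOFLOW_API_KEY")


def call_pali(prompt, image):
    """Ask PaliGemma `prompt` about `image` and return the first element of its response."""
    response = pg.predict(image, prompt)
    return response[0]

...
```

PaliGemma for Classification and OCR Tasks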

Now that we have a way to call PaliGemma, let's look at the classification and OCR tasks. We pass the vehicle and license plate crops to `call_pali`, each with a task-specific prompt. Feel free to experiment with the prompts, but the ones below worked well for this use case.
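PaliGemma answers in free text. Even a prompt that lists candidate labels, such as the `car;van;suv;truck` prompt in the snippet below, can come back with extra whitespace, different casing, or a word outside the list. A small post-processing step can map each answer onto the allowed set before it is saved. `normalize_label` and the `"unknown"` fallback are assumptions of this sketch and are not part of the PaliGemma or Inference APIs.

```python
from typing import Iterable


def normalize_label(answer: str, labels: Iterable[str], fallback: str = "unknown") -> str:
    """Map a free-text model answer onto one of the allowed labels.

    Matches case-insensitively, first on the whole answer and then on
    individual words; returns `fallback` if nothing matches.
    """
    allowed = [label.lower() for label in labels]
    cleaned = answer.strip().lower()
    if cleaned in allowed:
        return cleaned
    for word in cleaned.replace(",", " ").split():
        if word in allowed:
            return word
    return fallback


# The candidate labels from the car type prompt.
CAR_TYPES = "car;van;suv;truck".split(";")
```

For example, `normalize_label(car_type, CAR_TYPES)` would keep the CSV's "Car Type" column limited to a fixed vocabulary.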

```python
def background_task(results, frame):
    ...  # code from earlier

    # Classification prompts on the vehicle crop.
    color = call_pali("What color is the car?", vehicle_img)
    brand = call_pali("car make", vehicle_img)
    car_type = call_pali("car;van;suv;truck", vehicle_img)
    # OCR prompt on the license plate crop.
    plate = call_pali("read", plate_img)

    print(color, brand, car_type, plate)
```

Saving Our Results

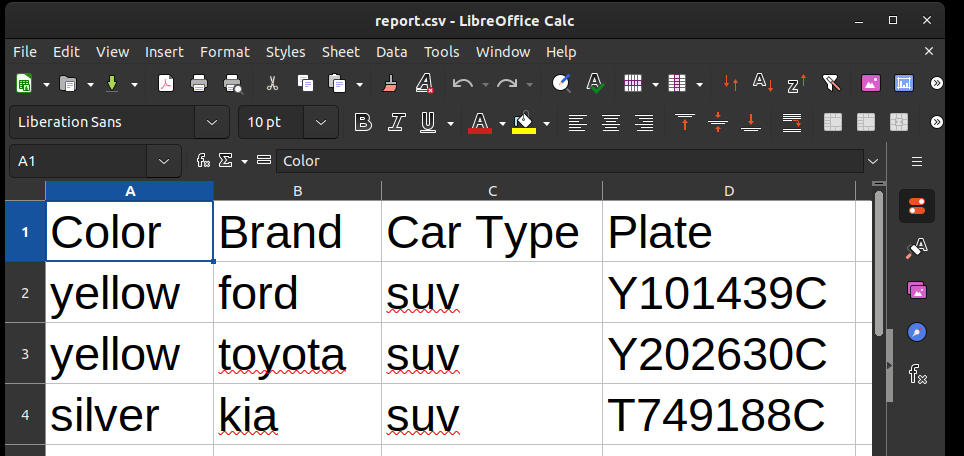

Finally, we'll save each combined crop to a directory named `vehicles` and record the results in a CSV file.

```python
import csv
import os

report = "path_to_csv.csv"
fieldnames = ["Color", "Brand", "Car Type", "Plate"]

# Create the output directory and write the CSV header once at startup.
os.makedirs("./vehicles", exist_ok=True)
with open(report, mode="w", newline="") as f:
    writer = csv.DictWriter(f, fieldnames=fieldnames)
    writer.writeheader()


def background_task(results, frame):
    ...  # code from earlier

    # Append one row per vehicle.
    with open(report, mode="a", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        writer.writerow({"Color": color, "Brand": brand, "Car Type": car_type, "Plate": plate})

    # Save the side-by-side crop, named after the model's answers.
    file_name = f"./vehicles/{color}-{brand}-{car_type}-{plate}.jpg"
    combined_img.save(file_name)
```
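`color`, `brand`, `car_type`, and `plate` are free-text model outputs. They can contain spaces, slashes, or newlines that break the `./vehicles/...jpg` path. One option is to sanitize each part before building the file name. `safe_part` and `vehicle_file_name` are hypothetical helpers. They keep letters, digits, dots, underscores, and hyphens, and replace everything else with `_`.

```python
import re


def safe_part(text: str, max_len: int = 40) -> str:
    """Make a model answer safe to use as one segment of a file name."""
    # Replace each run of disallowed characters with a single underscore.
    cleaned = re.sub(r"[^A-Za-z0-9._-]+", "_", text.strip())
    # Drop leading/trailing dots and underscores (avoids hidden files like ".jpg").
    cleaned = cleaned.strip("._")
    return cleaned[:max_len] or "unknown"


def vehicle_file_name(color: str, brand: str, car_type: str, plate: str) -> str:
    """Build the ./vehicles/<color>-<brand>-<type>-<plate>.jpg path from sanitized parts."""
    parts = (safe_part(p) for p in (color, brand, car_type, plate))
    return "./vehicles/" + "-".join(parts) + ".jpg"
```

Two vehicles with identical answers would still map to the same file name. Appending the tracker ID is one way to keep them apart.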

Summary

There we have it: a locally hosted, real-time vehicle analytics application. Before PaliGemma, I might have reached for CLIP for the classification tasks and a separate OCR model to read the license plate.

With PaliGemma, we can reduce the number of models we use while also simplifying our logic. I also think it's pretty incredible that this demo runs entirely on my own hardware, in near real time, on an NVIDIA RTX 3090. My prediction is that VLMs like this will keep getting better and the hardware cheaper, unlocking enterprise and hobby applications that are slightly out of reach today.

These are exciting times to be building with computer vision, and the Roboflow suite of tools and platform makes it drastically easier. What will you build with PaliGemma?

Cite this Post

Use the following entry to cite this post in your research:

Nick Herrig. (Jun 21, 2024). Building a Vehicle Analytics Application with PaliGemma. Roboflow Blog: https://blog.roboflow.com/vehicle-analytics/