This article was contributed to the Roboflow blog by Abirami Vina.

When you look at a person, you can instinctively tell whether they are sitting, standing, slouching, or walking around. Your brain has an intuitive understanding of the human body and the different postures associated with it. Can a machine also be taught to identify poses in images? Yes! That’s exactly what pose estimation is.

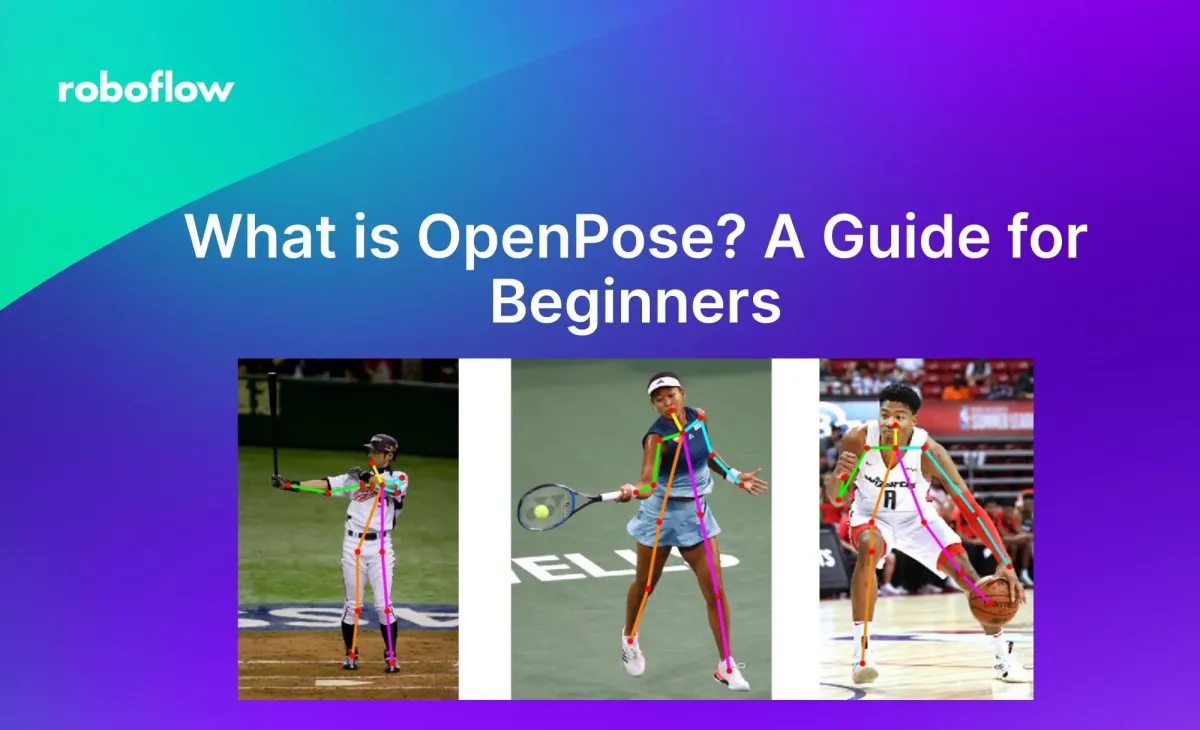

Pose estimation is a computer vision technique that pinpoints the key body joints of a person in images and videos to understand their pose. OpenPose is a popular real-time computer vision system designed for multi-person keypoint detection. It can identify various parts of the human body, including the body, feet, face, and hands, in images and videos.

In this article, we’ll take a closer look at what OpenPose is and what you can do with it. Let’s get right to it!


What is OpenPose?

OpenPose is a pose estimation system developed by researchers at Carnegie Mellon University (CMU) that detects the key points of the human body in real time. Its core output is 2D, with optional 3D reconstruction from multiple camera views. It is well known for being the first real-time multi-person pose estimation system to jointly detect human body, hand, facial, and foot key points (a total of 135 key points) on single images.

Okay, but what does that really mean?

That means if you show the system an image of a ballerina dancing, it returns the coordinates of her joints, which describe her pose mathematically. A downstream rule or classifier can then convert those coordinates into a human-readable label, such as the name of the ballet pose.

In the image above, OpenPose pinpoints the pose of the people in the image by mapping the body's key points, from limb alignment to finger positioning. With this information, we can analyze a dancer's form to help her improve her performance and prevent injuries.

How OpenPose Works

OpenPose analyzes the input image with a Convolutional Neural Network (CNN), a deep learning algorithm suited for processing visual data. This initial step extracts what are known as "feature maps" from the image. Feature maps are essentially detailed layers of the image that highlight various aspects, such as edges, textures, or specific shapes. After the feature maps are extracted, OpenPose uses a specialized, multi-stage CNN pipeline.

The pipeline processes the feature maps to produce two key outputs: Part Confidence Maps and Part Affinity Fields. Part Confidence Maps are heatmaps, one per body part, that indicate the likelihood of that part (like an elbow, a knee, or the nose) being at each location in the image. Part Affinity Fields are 2D vector fields that encode the location and orientation of limbs. They help the system understand which detected body parts are connected to each other.
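To make the role of the Part Affinity Fields more concrete, here is a minimal NumPy sketch of how a candidate limb between two detected key points might be scored: sample the field along the segment and average its dot product with the segment's unit direction. The function name `paf_score`, the array layout (separate x and y component maps), and the number of samples are assumptions made for illustration, not OpenPose's actual implementation.

```python
import numpy as np

def paf_score(paf_x, paf_y, p_from, p_to, num_samples=10):
    """Average alignment between a PAF and the segment p_from -> p_to.

    paf_x, paf_y: 2D arrays (height x width) holding the x and y components
    of the affinity field for one limb type (assumed layout).
    p_from, p_to: (x, y) pixel coordinates of two candidate key points.
    """
    p_from = np.asarray(p_from, dtype=float)
    p_to = np.asarray(p_to, dtype=float)
    direction = p_to - p_from
    length = np.linalg.norm(direction)
    if length == 0:
        return 0.0
    unit = direction / length

    # Sample evenly spaced points along the candidate limb.
    ts = np.linspace(0.0, 1.0, num_samples)
    xs = np.rint(p_from[0] + ts * direction[0]).astype(int)
    ys = np.rint(p_from[1] + ts * direction[1]).astype(int)

    # Dot product between the field vector and the limb direction at each sample.
    dots = paf_x[ys, xs] * unit[0] + paf_y[ys, xs] * unit[1]
    return float(dots.mean())

# Toy field: a horizontal "limb" along row 5 pointing in the +x direction.
paf_x = np.zeros((10, 10))
paf_y = np.zeros((10, 10))
paf_x[5, 2:8] = 1.0

aligned = paf_score(paf_x, paf_y, (2, 5), (7, 5))     # follows the field
misaligned = paf_score(paf_x, paf_y, (2, 2), (7, 8))  # mostly crosses empty space
```

A pair of key points that lies along the field gets a score close to 1, while a pair that cuts across it scores much lower. That difference is what lets the system prefer plausible limbs over implausible ones.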

Finally, OpenPose uses a greedy bipartite matching algorithm to bring it all together. We can call it "greedy" because the algorithm makes the most immediate optimal choice at each step in the hopes of finding a global optimum for pose estimation. It helps with identifying individual poses in images that contain multiple people.
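The greedy idea can be sketched in a few lines of Python. This is a simplified illustration under assumed inputs: `scores[i][j]` is a hypothetical association score between candidate `i` of one body part and candidate `j` of another, and `greedy_match` is a name chosen here, not a function from OpenPose.

```python
def greedy_match(scores, min_score=0.0):
    """Greedily pair candidates from two sets of key points.

    scores[i][j] is the association score between candidate i of the first
    body part (e.g. necks) and candidate j of the second (e.g. right shoulders).
    Returns a list of (i, j, score) with each candidate used at most once.
    """
    candidates = [
        (score, i, j)
        for i, row in enumerate(scores)
        for j, score in enumerate(row)
        if score > min_score
    ]
    # Highest-scoring connections are accepted first.
    candidates.sort(reverse=True)

    used_from, used_to, matches = set(), set(), []
    for score, i, j in candidates:
        if i in used_from or j in used_to:
            continue  # one of the endpoints already belongs to a limb
        used_from.add(i)
        used_to.add(j)
        matches.append((i, j, score))
    return matches

# Two necks and two right shoulders detected in an image with two people.
scores = [
    [0.9, 0.2],
    [0.3, 0.8],
]
matches = greedy_match(scores, min_score=0.1)
```

Here the best-scoring pair is accepted first, and any later pair that reuses one of its endpoints is skipped, so each neck ends up connected to exactly one shoulder.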

Evolution of OpenPose

OpenPose is the result of extensive research on pose estimation techniques over the years. Check out our blog post on pose estimation techniques to get a detailed understanding of how they have evolved from the 1990s to now.

OpenPose itself has also changed over time. Here are the major milestones:

- April 2017: Launch of OpenPose with basic body key point detection capabilities.

- June 2017: Introduction of face keypoint detection and enhancements for Windows 10 compatibility.

- July 2017: Addition of hand keypoint detection.

- November 2017: Enhanced processing for images of varying aspect ratios and IP camera support.

- May 2019: Significant processing speed improvements and the introduction of a single-person tracker.

- April 2020: Advancements in multi-camera (3D) functionality for asynchronous processing modes.

- November 2020: Update for compatibility with newer CUDA and cuDNN versions, alongside improvements to the Python API.

Each update has expanded OpenPose's capabilities and applications in various fields, such as sports analytics and healthcare monitoring.

Core Features and Technologies

Now that we’ve covered what OpenPose is, let’s understand its core features and technologies.

2D and 3D Keypoint Detection

For 2D keypoint detection, OpenPose can estimate 15, 18, or 25 key points for the body and feet, including 6 key points for the feet. The time taken for this prediction does not change with the number of people in the image. It can also estimate 21 key points for each hand (42 in total) and 70 key points for the face, and the time taken for these estimations does depend on how many people are detected in the image.

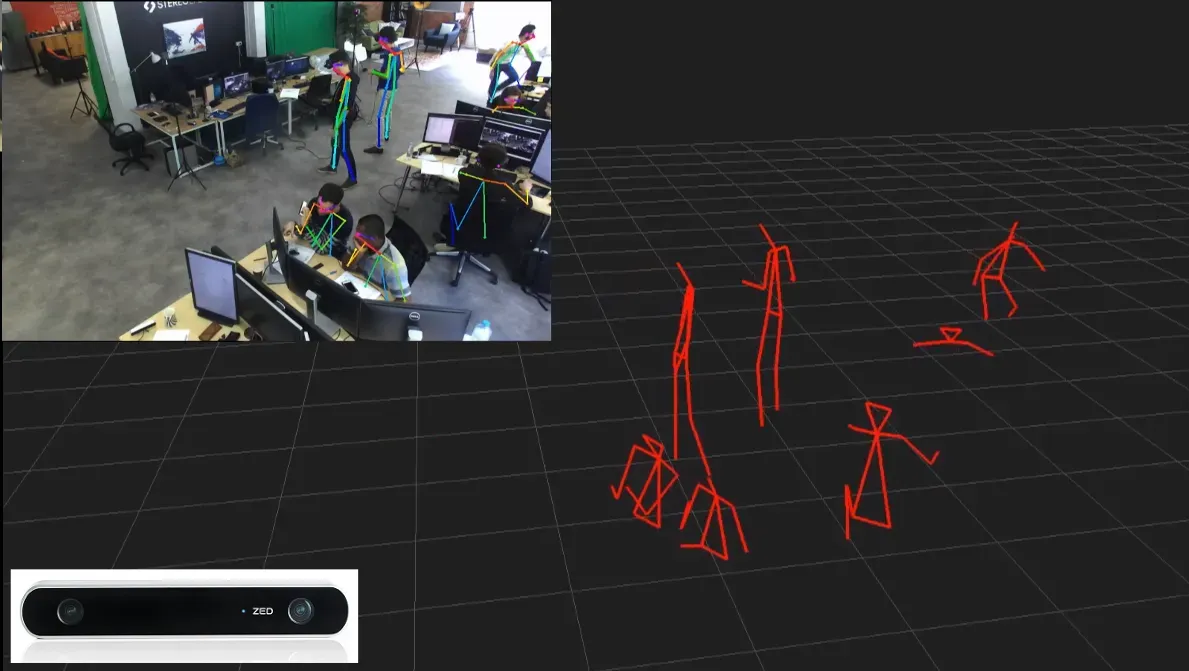

OpenPose can detect key points in 3D for a single person in real time by triangulating points from multiple camera views. To do this, it handles the synchronization of the cameras, and it works with both Flir and Point Grey cameras. The Calibration Toolbox can help estimate distortion, intrinsic, and extrinsic camera parameters. Separately, single-person tracking can improve the processing speed and smoothness of the visual output.
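As a rough illustration of what triangulating from multiple views involves, the sketch below recovers a 3D point from its 2D projections in two calibrated cameras using the standard linear (DLT) method. The camera matrices, the `triangulate` and `project` helpers, and the sample point are all made up for the example; this is not OpenPose's own 3D module.

```python
import numpy as np

def triangulate(projections, points_2d):
    """Linear (DLT) triangulation of one 3D point from two or more views.

    projections: list of 3x4 camera projection matrices.
    points_2d: list of (x, y) pixel observations, one per camera.
    """
    rows = []
    for P, (x, y) in zip(projections, points_2d):
        # Each view contributes two linear constraints on the homogeneous point.
        rows.append(x * P[2] - P[0])
        rows.append(y * P[2] - P[1])
    A = np.stack(rows)
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]

def project(P, point_3d):
    """Project a 3D point to pixel coordinates with projection matrix P."""
    x = P @ np.append(point_3d, 1.0)
    return x[:2] / x[2]

# Two toy cameras with identical intrinsics, the second shifted 1 unit along x.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])

wrist = np.array([0.2, -0.1, 4.0])  # hypothetical 3D key point
observations = [project(P1, wrist), project(P2, wrist)]
estimate = triangulate([P1, P2], observations)
```

With noise-free observations, `estimate` matches the original point. In practice the 2D detections are noisy, which is one reason accurate camera calibration matters.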

OpenPose Input & Output

OpenPose can take different types of input, such as images, videos, webcams, Flir/Point Grey cameras, IP cameras, and even custom-input images and videos from sources like depth cameras.

As for output, OpenPose can provide key points as 2D coordinates, 3D coordinates, or heatmap values. This flexibility lets it serve different applications and needs.
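The official demo can also save key points to JSON files through its `--write_json` option. The sketch below assumes that layout: a `people` list in which each person has a flat `pose_keypoints_2d` array of (x, y, confidence) triplets. The sample values and the `read_pose_keypoints` helper are illustrative assumptions, so check the files your own OpenPose version produces before relying on this.

```python
import json

# Illustrative sample only: one person with three key points, each stored
# as a flat (x, y, confidence) triplet.
sample = """
{
  "version": 1.3,
  "people": [
    {"pose_keypoints_2d": [320.5, 110.2, 0.93, 318.0, 180.7, 0.88, 0, 0, 0]}
  ]
}
"""

def read_pose_keypoints(text, min_confidence=0.1):
    """Return, per person, a list of (x, y, confidence) tuples or None."""
    data = json.loads(text)
    people = []
    for person in data.get("people", []):
        flat = person.get("pose_keypoints_2d", [])
        # Regroup the flat array into (x, y, confidence) triplets.
        triplets = [tuple(flat[i:i + 3]) for i in range(0, len(flat) - 2, 3)]
        # Low-confidence key points are replaced with None.
        people.append([kp if kp[2] >= min_confidence else None for kp in triplets])
    return people

keypoints = read_pose_keypoints(sample)
```

Replacing low-confidence key points with `None` mirrors what the tutorial code later in this post does with its confidence threshold.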

OS and Hardware Compatibility

OpenPose runs on several operating systems, including Ubuntu (versions 20, 18, 16, and 14), Windows (versions 10 and 8), and Mac OSX, as well as on the Nvidia TX2 embedded platform. In terms of hardware, it supports CUDA for Nvidia GPUs, OpenCL for AMD GPUs, and CPU-only builds for systems without a GPU.

OpenPose also has APIs in several popular programming languages, such as Python, C++, and MATLAB, and can be integrated with other machine learning libraries and frameworks, such as TensorFlow, PyTorch, and Caffe.

OpenPose Applications

There are many real-world applications for pose estimation models like OpenPose. Below, we talk about a few applications.

Healthcare

OpenPose can monitor patients' movements during rehabilitation exercises and provide real-time feedback to help them improve their posture. This can help doctors take better care of their patients and may help patients recover faster.

Another example is fall detection in homes where older adults live alone. Such applications can send real-time alerts to caretakers and family members as soon as a fall is detected.

Pose estimation could also be used to build exercise challenge applications that monitor how close someone is to reaching a specific position and count how long the person holds it.
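The exercise-challenge idea can be sketched with two small helpers: one that computes the angle at a joint from three key points, and one that measures how long that angle stays near a target. The function names, the sample coordinates, and the tolerance are hypothetical choices for illustration.

```python
import math

def joint_angle(a, b, c):
    """Angle in degrees at point b formed by the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        return None  # degenerate: two key points coincide
    cos_angle = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))

def longest_hold(angles, target, tolerance, fps):
    """Longest run, in seconds, where the angle stays within tolerance of target."""
    best = current = 0
    for angle in angles:
        if angle is not None and abs(angle - target) <= tolerance:
            current += 1
            best = max(best, current)
        else:
            current = 0
    return best / fps

# Hypothetical hip, knee, and ankle pixel coordinates for a right-angle knee bend.
knee_angle = joint_angle((100, 100), (100, 200), (200, 200))

# Hypothetical per-frame knee angles from a 10 fps video.
hold_seconds = longest_hold([170, 95, 92, 88, 91, 140, 90], target=90, tolerance=10, fps=10)
```

In a real application, the three points would come from the detected hip, knee, and ankle key points in each frame, and frames with missing key points would be passed in as `None`.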

Entertainment

OpenPose can change the way we see entertainment. By tracking a person’s body movements, virtual reality applications and simulations can create an immersive experience.

Motion-tracking-enabled games like Just Dance could use pose estimation in the future. These games track the player’s body movements to manipulate the game avatars. This technology can also be implemented in animation, film, and TV to capture the motion of an actor’s body and facial expressions to create realistic and expressive digital characters.


How to Use OpenPose

You can try running inference with a TensorFlow implementation of OpenPose on an image of yourself in less than 5 minutes. The same code is also available as a Google Colab notebook.

First, clone the following repository using this command:

git clone https://github.com/misbah4064/human-pose-estimation-opencv.git

Then cd into the folder you cloned:

cd human-pose-estimation-opencv/

Then, install the opencv-python package using pip install opencv-python and run the following code. Be sure to edit the file path to your input image in the code:

# Import the necessary libraries
import cv2 as cv
import numpy as np
from google.colab.patches import cv2_imshow  # Display helper that only works in Google Colab

# Define a dictionary mapping human body parts to their corresponding indices in the model's output
BODY_PARTS = {
    "Nose": 0, "Neck": 1, "RShoulder": 2, "RElbow": 3, "RWrist": 4,
    "LShoulder": 5, "LElbow": 6, "LWrist": 7, "RHip": 8, "RKnee": 9,
    "RAnkle": 10, "LHip": 11, "LKnee": 12, "LAnkle": 13, "REye": 14,
    "LEye": 15, "REar": 16, "LEar": 17, "Background": 18
}

# Define a list of pairs representing the body parts that should be connected to visualize the pose
POSE_PAIRS = [
    ["Neck", "RShoulder"], ["Neck", "LShoulder"], ["RShoulder", "RElbow"],
    ["RElbow", "RWrist"], ["LShoulder", "LElbow"], ["LElbow", "LWrist"],
    ["Neck", "RHip"], ["RHip", "RKnee"], ["RKnee", "RAnkle"], ["Neck", "LHip"],
    ["LHip", "LKnee"], ["LKnee", "LAnkle"], ["Neck", "Nose"], ["Nose", "REye"],
    ["REye", "REar"], ["Nose", "LEye"], ["LEye", "LEar"]
]

# Specify the input dimensions for the neural network
width = 368
height = 368
inWidth = width
inHeight = height

# Load the pre-trained OpenPose model from a file
net = cv.dnn.readNetFromTensorflow("graph_opt.pb")
thr = 0.2  # Set a confidence threshold for detecting keypoints

# Define a function to detect poses in an input frame
def poseDetector(frame):
    frameWidth = frame.shape[1]
    frameHeight = frame.shape[0]

    # Prepare the input for the model by resizing and mean subtraction
    net.setInput(cv.dnn.blobFromImage(frame, 1.0, (inWidth, inHeight), (127.5, 127.5, 127.5), swapRB=True, crop=False))
    out = net.forward()
    out = out[:, :19, :, :]  # Keep the first 19 channels, which are the body part heatmaps

    # Ensure the number of heatmaps matches the predefined BODY_PARTS
    assert len(BODY_PARTS) == out.shape[1]

    points = []  # Initialize a list to hold the detected keypoints

    # Iterate over each body part to find the keypoints
    for i in range(len(BODY_PARTS)):
        # Extract the heatmap for the current body part
        heatMap = out[0, i, :, :]

        # Find the point with the maximum confidence
        _, conf, _, point = cv.minMaxLoc(heatMap)

        # Scale the point's coordinates back to the original frame size
        x = (frameWidth * point[0]) / out.shape[3]
        y = (frameHeight * point[1]) / out.shape[2]

        # Add the point to the list if its confidence is above the threshold, otherwise add None
        points.append((int(x), int(y)) if conf > thr else None)

    # Draw lines and ellipses to represent the pose in the frame
    for pair in POSE_PAIRS:
        partFrom = pair[0]
        partTo = pair[1]

        # Ensure the body parts are in the BODY_PARTS dictionary
        assert partFrom in BODY_PARTS
        assert partTo in BODY_PARTS

        idFrom = BODY_PARTS[partFrom]
        idTo = BODY_PARTS[partTo]

        # If both keypoints are detected, draw the line and keypoints
        if points[idFrom] and points[idTo]:
            cv.line(frame, points[idFrom], points[idTo], (0, 255, 0), 3)
            cv.ellipse(frame, points[idFrom], (3, 3), 0, 0, 360, (0, 0, 255), cv.FILLED)
            cv.ellipse(frame, points[idTo], (3, 3), 0, 0, 360, (0, 0, 255), cv.FILLED)

    t, _ = net.getPerfProfile()  # Optional: Retrieve the network's performance profile (unused here)

    return frame  # Return the frame with the pose drawn

# Load an input image (cv.imread returns None if the file cannot be read)
image = cv.imread("path_to_your_input_image.jpg")
if image is None:
    raise FileNotFoundError("Could not read the input image; check the file path")

# Pass the image to the poseDetector function
output = poseDetector(image)

# Display the output image with the detected pose
cv2_imshow(output)

Below is an example of an output image with the pose detected:

We’ve showcased the TensorFlow version of detecting the pose of a single person using OpenPose here because it is more straightforward and user-friendly. It’s perfect for quick experiments on platforms like Google Colab.

The official CMU OpenPose Caffe version is known for its precision and robustness but has a more involved setup process. For anyone interested in trying it out, here’s the official GitHub repository, which offers comprehensive guides and tools for installation and exploration: CMU OpenPose GitHub Repository.

Future Trends and Impacts

OpenPose and pose estimation techniques are vital parts of computer vision. They can help obtain a more detailed understanding of people and objects. As these technologies evolve, they're set to transform different human-centric fields like healthcare.

A major trend in the future is going to be the integration of pose estimation with Edge AI technologies. For instance, Lightweight OpenPose is a fast and efficient pose estimation model designed for edge devices. This means that advanced pose estimation can now be used in everyday gadgets, from smartphones to smart home devices, bringing immediate, real-time interaction without compromising on privacy or speed.

Challenges with Pose Estimation

Real-time pose estimation systems can be expensive to run because they consume a lot of processing power. Training data is another concern: these models need large amounts of annotated data, which can be difficult to obtain.

Accuracy is another major challenge. OpenPose is great but not perfect. For example, there are known concerns regarding foot keypoint failures.

There are also privacy concerns, as pose estimation systems like OpenPose can collect sensitive data about individuals, such as their appearance and motion. A large community of developers continues to work on these challenges.

Conclusion

OpenPose is one of the most popular libraries for pose estimation. It is capable of real-time multi-person pose detection and analysis. We have learned that OpenPose can be applied in a variety of fields, such as motion capture, virtual reality, and human-computer interaction. It can handle minor occlusions and changes in lighting while estimating the body's pose in real-time applications.

As we look ahead, OpenPose showcases the remarkable achievements in artificial intelligence and computer vision. It also paves the way for future innovations, encouraging new research and applications that hold the potential to transform our interaction with technology.

Continue Your Learning

Here are some more resources to help you get started with OpenPose:

- The Official Paper on OpenPose

- Check out this OpenPose tutorial

- A Google Colab notebook with demonstration code


Cite this Post

Use the following entry to cite this post in your research:

Contributing Writer. (Apr 3, 2024). What is OpenPose? A Guide for Beginners. Roboflow Blog: https://blog.roboflow.com/what-is-openpose/