This article was contributed to the Roboflow blog by Abirami Vina.

Recent advances in AI, including tools such as Google's MediaPipe, make it possible to create experiences that blend the digital and physical worlds. MediaPipe is an open-source framework for building machine learning pipelines, and it is especially useful for developers who work with video and images.

Whether you're making an app with fun filters for your face or one that can identify different sounds, MediaPipe has everything you need.

In this article, we’ll look at how MediaPipe has evolved over the years, some of its core features, and how it can be used in different applications. We’ll also walk through a simple code example that uses MediaPipe to track your hands. Let’s get started!

What is MediaPipe?

Google developed MediaPipe as an open-source framework for building and deploying machine-learning pipelines. These pipelines can process data such as text, video, and audio in real time. Thanks to its modular architecture and graph-based design, you can combine its pre-built components, known as "Calculators," to create computer vision pipelines.

MediaPipe works like a dataflow programming framework. Data moves through a series of connected calculators, each performing a specific task on the data before passing it to the next one. The graph above shows these calculators as nodes connected by data streams. Each stream carries a series of data packets. This graph setup makes it easier to process data step by step and helps your machine-learning pipeline run efficiently.
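To make the dataflow idea concrete, here is a toy sketch in plain Python. It does not use MediaPipe's real graph API: the `run_graph` helper and the three calculator functions are hypothetical names invented for illustration, and a "frame" is just a short list of RGB tuples. Each calculator receives a packet, transforms it, and hands the result to the next node.

```python
from typing import Any, Callable, Iterable, List

# In this sketch, a "calculator" is a function that takes one packet
# and returns one packet. This is an illustration, not MediaPipe's API.
Calculator = Callable[[Any], Any]


def run_graph(calculators: List[Calculator], stream: Iterable[Any]) -> List[Any]:
    """Push every packet in the stream through the calculators in order."""
    outputs = []
    for packet in stream:
        for calculator in calculators:
            packet = calculator(packet)
        outputs.append(packet)
    return outputs


def to_grayscale(frame):
    # Average the three channels of each (r, g, b) pixel.
    return [sum(pixel) // 3 for pixel in frame]


def threshold(frame):
    # Mark a pixel as bright (1) if its gray value is at least 128.
    return [1 if value >= 128 else 0 for value in frame]


def count_bright(frame):
    # Reduce the frame to the number of bright pixels.
    return sum(frame)


# A made-up stream of two tiny frames.
frames = [
    [(255, 255, 255), (0, 0, 0)],
    [(200, 200, 200), (130, 130, 130)],
]
results = run_graph([to_grayscale, threshold, count_bright], frames)
print(results)
```

Because each step only depends on the packet it receives, you can reorder, replace, or add calculators without touching the others, which is the main appeal of the graph-based design.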

Pipelines created using MediaPipe can run smoothly on web apps, smartphones (Android and iOS), and even small embedded systems. Its cross-platform capabilities help developers create immersive and responsive applications for any device.

MediaPipe Over the Years

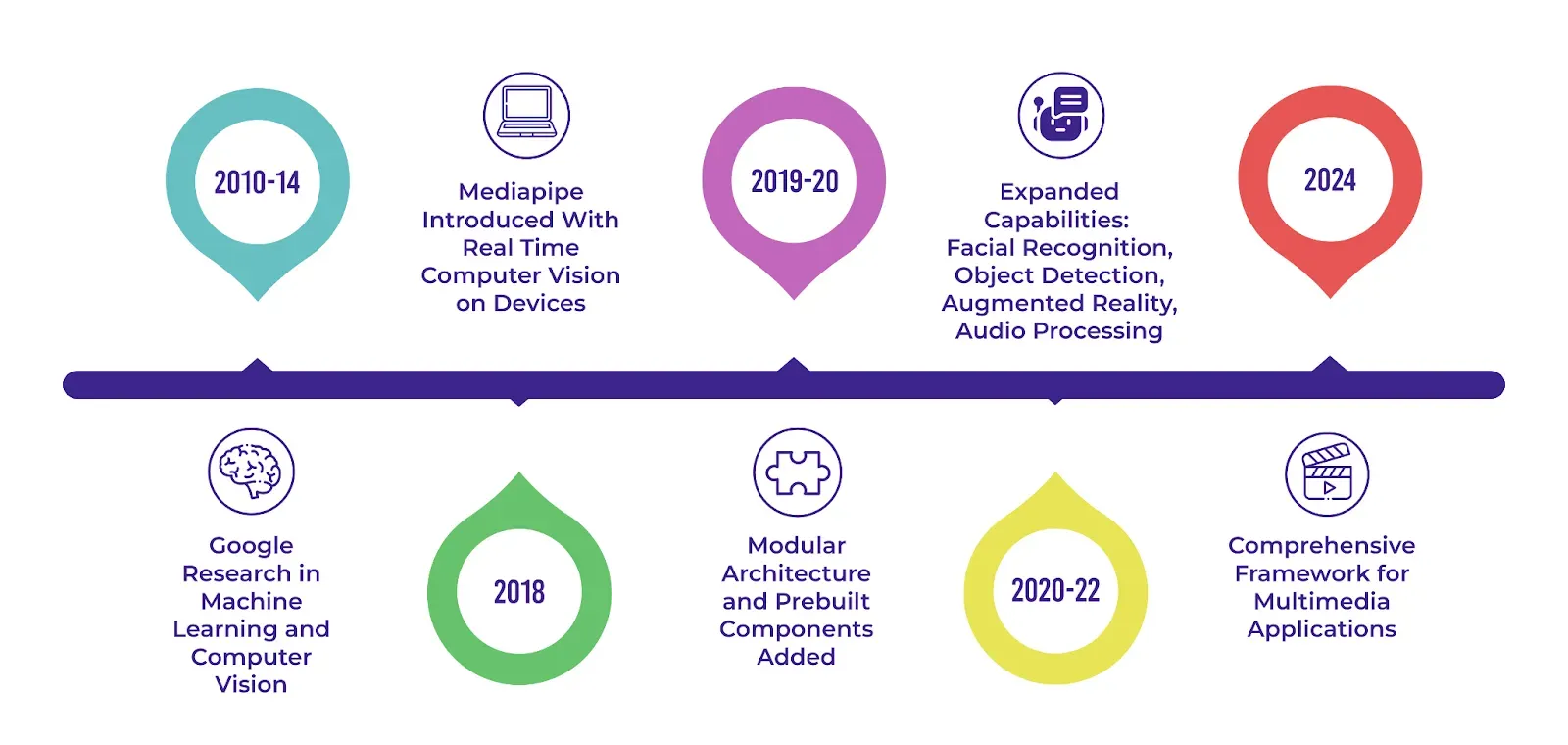

MediaPipe's roots go back to the early 2010s, when Google was working on improving machine learning and computer vision technologies. It was first used in 2012 to analyze video and audio in real time on YouTube.

In 2018, MediaPipe began tackling the challenge of running complex computer vision models on devices like smartphones and small computers. By 2020, there was a growing need for a fast and efficient way to process multimedia, and MediaPipe was updated to meet it. Today, MediaPipe remains a strong framework for developers who want to build innovative, high-performing multimedia apps.

Core Features and Technologies

MediaPipe comes with many exciting features. For one, it can tap into graphics processing units (GPUs) for faster processing. By offloading compute-heavy work to the GPU, MediaPipe can handle demanding multimedia tasks in real time. Thanks to parallel processing, it can also do several things at once, like processing multiple video streams or running multiple computer vision models.

But that's just the tip of the iceberg. MediaPipe also makes use of OpenCV, a powerful open-source library for computer vision with many tools and algorithms for working with images and videos. By using OpenCV, MediaPipe can add features like video capture, processing, and rendering to its pipelines. MediaPipe also integrates with TensorFlow, Google's machine learning framework, which simplifies adding pre-trained or custom models for tasks like recognizing faces or understanding speech. MediaPipe supports popular languages like C++, Java, and Python, so it's simple to add to your projects.

Here are some of the other core features of MediaPipe:

- Pre-Trained Models: Offers ready-to-run models for quick integration into applications.

- Customization with MediaPipe Model Maker: Lets you tailor models to your own data.

- Evaluation and Benchmarking: Helps you visualize, evaluate, and benchmark solutions directly in the browser.

- Efficient On-device Processing: Is optimized for on-device machine learning, delivering real-time performance without relying on cloud processing.

MediaPipe Use Cases

The features that MediaPipe boasts create lots of exciting possibilities in different areas. Let’s explore some examples that show what MediaPipe can be used for.

Human Pose Estimation

MediaPipe is making a big splash in areas like fitness, sports, and healthcare with its precise human pose estimation. Pose estimation can detect and track body joints and movements in real time. It's used in apps for exercise feedback, analyzing sports performance, and aiding in physical therapy.

MediaPipe's pose estimation abilities can be used to create virtual fitness apps for personalized coaching and form correction. These apps can enhance fitness experiences and boost overall health and wellness.
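Form correction often comes down to measuring joint angles from pose landmarks. The sketch below shows one common way to do that with plain Python. The `joint_angle` helper is a hypothetical name, and the shoulder, elbow, and wrist coordinates are made-up values rather than real MediaPipe output.

```python
import math


def joint_angle(a, b, c):
    """Return the angle at point b, in degrees, between segments b->a and b->c."""
    angle_ba = math.atan2(a[1] - b[1], a[0] - b[0])
    angle_bc = math.atan2(c[1] - b[1], c[0] - b[0])
    degrees = abs(math.degrees(angle_ba - angle_bc))
    # Fold the result into the 0-180 range so it reads like a joint angle.
    return 360.0 - degrees if degrees > 180.0 else degrees


# Hypothetical 2D landmark positions (x, y) for one arm.
shoulder, elbow, wrist = (0.0, 0.0), (1.0, 0.0), (1.0, 1.0)
elbow_angle = joint_angle(shoulder, elbow, wrist)
print(f"Elbow angle: {elbow_angle:.1f} degrees")
```

A fitness app could compare an angle like this against a target range for each exercise and give feedback when the user drifts outside it.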

Video Call Enhancements

One positive outcome of the COVID-19 pandemic was the wider adoption of remote communication and video conferencing applications. MediaPipe played a vital role in improving those technologies by adding features like dynamic frame adjustments and gesture control.

Dynamic frame adjustments make it possible to keep a person centered and visible on the screen as they move. Gesture control lets users change settings or move through presentations using hand gestures, making it feel more natural. These upgrades make remote interactions more engaging than ever before.
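As a rough illustration of dynamic framing, the sketch below computes a crop window that follows a person around the frame. It assumes a detector has already produced a bounding box in pixel coordinates and that the crop is no larger than the frame. The `centered_crop` helper and the sample numbers are hypothetical.

```python
def centered_crop(frame_w, frame_h, box, crop_w, crop_h):
    """Return (left, top, right, bottom) of a crop centered on the box.

    The crop is clamped so it never extends past the frame edges.
    Assumes crop_w <= frame_w and crop_h <= frame_h.
    """
    x_min, y_min, x_max, y_max = box
    center_x = (x_min + x_max) // 2
    center_y = (y_min + y_max) // 2
    left = min(max(center_x - crop_w // 2, 0), frame_w - crop_w)
    top = min(max(center_y - crop_h // 2, 0), frame_h - crop_h)
    return left, top, left + crop_w, top + crop_h


# Hypothetical 1280x720 frame with a person detected near the left edge.
person_box = (100, 200, 300, 600)
crop = centered_crop(1280, 720, person_box, 640, 360)
print(crop)
```

In a real application you would likely smooth the crop position over several frames so the view does not jitter as the detection moves slightly.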

Designing Augmented Reality Filters

Have you ever had fun playing around with Snapchat or Instagram filters? You can create your own similar Augmented Reality (AR)-based face filters using MediaPipe. The whole process involves more steps than you’d expect. It begins with face detection to accurately identify a person's facial features.

Developers use this data to add AR effects such as virtual masks, makeup, or animated overlays that react to facial movements and expressions. Facial filters have sparked creative ideas and let artists and brands connect with their audiences in fun and innovative ways.
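To show how facial landmarks can drive an overlay, here is a small sketch that works out where to place a pair of virtual glasses. It assumes you already have pixel coordinates for the two eyes from a face landmark model. The `overlay_placement` helper, the `width_scale` value, and the sample coordinates are all hypothetical choices for illustration.

```python
import math


def overlay_placement(left_eye, right_eye, width_scale=2.0):
    """Return (center, width, angle_degrees) for an overlay anchored on the eyes.

    The angle follows image coordinates, where y increases downward.
    """
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    eye_distance = math.hypot(dx, dy)
    center = ((left_eye[0] + right_eye[0]) / 2, (left_eye[1] + right_eye[1]) / 2)
    angle = math.degrees(math.atan2(dy, dx))
    return center, eye_distance * width_scale, angle


# Hypothetical eye positions in pixels for a level, front-facing head.
center, width, angle = overlay_placement((100, 200), (200, 200))
print(center, width, angle)
```

Recomputing the center, width, and angle on every frame is what makes the overlay appear to stick to the face as the person moves or tilts their head.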

How to Use MediaPipe with Python

Let’s check out a simple MediaPipe code example for implementing a hand-tracking application. We’ll use your webcam to detect your fingers as you wiggle them around!

You can try this out yourself in a few minutes. To get started, install the OpenCV and MediaPipe packages using pip (as shown below).

pip install opencv-python mediapipe==0.10.9

Double-check that your webcam is working, and then run the following code.

import cv2 as cv
import mediapipe.python.solutions.hands as mp_hands
import mediapipe.python.solutions.drawing_utils as drawing
import mediapipe.python.solutions.drawing_styles as drawing_styles

# Initialize the Hands model
hands = mp_hands.Hands(
    static_image_mode=False,  # Set to False for processing video frames
    max_num_hands=2,  # Maximum number of hands to detect
    min_detection_confidence=0.5,  # Minimum confidence threshold for hand detection
)

# Open the camera
cam = cv.VideoCapture(0)

while cam.isOpened():
    # Read a frame from the camera
    success, frame = cam.read()

    # If the frame is not available, skip this iteration
    if not success:
        print("Camera Frame not available")
        continue

    # Convert the frame from BGR to RGB (required by MediaPipe)
    frame = cv.cvtColor(frame, cv.COLOR_BGR2RGB)

    # Process the frame for hand detection and tracking
    hands_detected = hands.process(frame)

    # Convert the frame back from RGB to BGR (required by OpenCV)
    frame = cv.cvtColor(frame, cv.COLOR_RGB2BGR)

    # If hands are detected, draw landmarks and connections on the frame
    if hands_detected.multi_hand_landmarks:
        for hand_landmarks in hands_detected.multi_hand_landmarks:
            drawing.draw_landmarks(
                frame,
                hand_landmarks,
                mp_hands.HAND_CONNECTIONS,
                drawing_styles.get_default_hand_landmarks_style(),
                drawing_styles.get_default_hand_connections_style(),
            )

    # Display the frame with annotations
    cv.imshow("Show Video", frame)

    # Exit the loop if 'q' key is pressed
    if cv.waitKey(20) & 0xff == ord('q'):
        break

# Release the camera and close the display window
cam.release()
cv.destroyAllWindows()

When you execute the code above, a new window will pop up displaying the video feed from your camera. If hands are detected, landmarks will be drawn on them in the video. An example of the expected output is shown below.

Great! You can also customize and optimize your MediaPipe application by changing the arguments passed to mp_hands.Hands() when creating the hands object. For example, you can tweak max_num_hands to track more or fewer hands, or adjust min_detection_confidence to make hand detection more or less sensitive. You can also improve performance with techniques such as multi-threading or GPU acceleration.
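One way to apply multi-threading is to read frames on a background thread so the main loop always works on the newest frame instead of waiting on the camera. The sketch below is a minimal illustration under stated assumptions, not a tuned implementation. The `LatestFrameReader` class is a hypothetical name, and `fake_camera` stands in for a real `cam.read` call so the example runs without a webcam.

```python
import threading
import time


class LatestFrameReader:
    """Read frames on a background thread and keep only the most recent one."""

    def __init__(self, read_frame):
        # read_frame: any callable returning (success, frame),
        # for example the read method of a cv.VideoCapture object.
        self._read_frame = read_frame
        self._lock = threading.Lock()
        self._latest = None
        self._running = False
        self._thread = threading.Thread(target=self._loop, daemon=True)

    def start(self):
        self._running = True
        self._thread.start()
        return self

    def _loop(self):
        while self._running:
            success, frame = self._read_frame()
            if success:
                with self._lock:
                    self._latest = frame
            else:
                # Back off briefly so a failing source does not spin the CPU.
                time.sleep(0.001)

    def latest(self):
        with self._lock:
            return self._latest

    def stop(self):
        self._running = False
        self._thread.join()


def fake_camera():
    # Stand-in for cam.read(): pretends each frame takes 5 ms to arrive.
    time.sleep(0.005)
    fake_camera.count += 1
    return True, fake_camera.count


fake_camera.count = 0

reader = LatestFrameReader(fake_camera).start()
# Wait until the background thread has delivered at least one frame.
while reader.latest() is None:
    time.sleep(0.001)
frame = reader.latest()
reader.stop()
print("Latest frame id:", frame)
```

With a real webcam, you would pass `cam.read` to the reader and call `reader.latest()` inside the processing loop in place of `cam.read()`.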

Future of MediaPipe and Computer Vision

The future of MediaPipe and computer vision holds a lot of potential. New AI advancements and more powerful hardware continue to bring innovations to the table. For example, as the AI community publishes more research on pose estimation, we are seeing those capabilities make their way into MediaPipe. 3D pose estimation was added in 2021, after the BlazePose model came out. It’s quite likely that we’ll see more additions like this in the coming years.

Conclusion

Over the years, MediaPipe has evolved quite a bit. It now offers many new possibilities for multimedia processing. Whether you’re working on gesture control, facial recognition, pose estimation, or object tracking, MediaPipe can help you bring those ideas to life. As technology progresses, MediaPipe will keep empowering creators to discover new AI applications. The future looks bright!

Keep On Learning

Here are some resources to help you get started with MediaPipe:

- An article with easy-to-implement MediaPipe tutorials with explanations.

- Check out this MediaPipe for Dummies Tutorial that shows how to use MediaPipe's Python APIs.

- Find out how to use MediaPipe on Google Colab.

- Check out this repository to learn the steps involved in creating apps with the MediaPipe platform.