You can add vision AI capabilities to your Android app by deploying a custom YOLOv11 model directly on the device. Object detection models make it possible to build many useful features, such as counting, measurement, reading text, and classification.

In this guide, you'll learn how to create a custom YOLOv11 model and deploy it directly onto an Android device.

YOLOv11 Android App Overview

Using a lightweight object detection model, we will build a custom coin-counting app that runs directly on your device. We'll train a custom model to recognize different coin denominations, convert it to TorchScript, integrate it into an Android app, and display the detected coins and their total value for any photo you pick from your gallery.

Let's get started!

Train a YOLOv11 Detection Model

To start, we need a model that can detect coins in a photo, which you can train easily with Roboflow. I suggest following this guide on how to train a YOLOv11 object detection model. Your training data should be images or videos of a surface with some coins on it. Here's the coins dataset you can use for this tutorial.

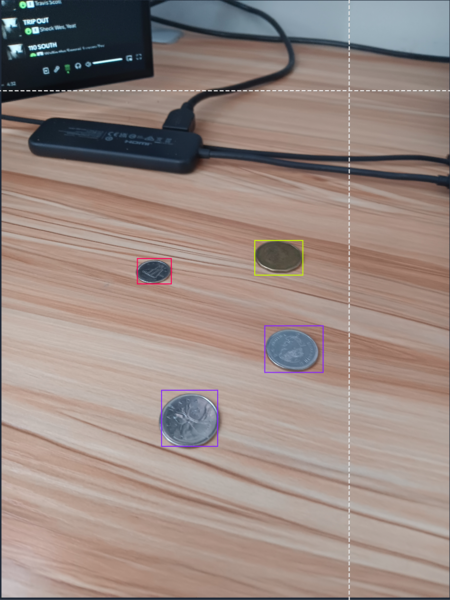

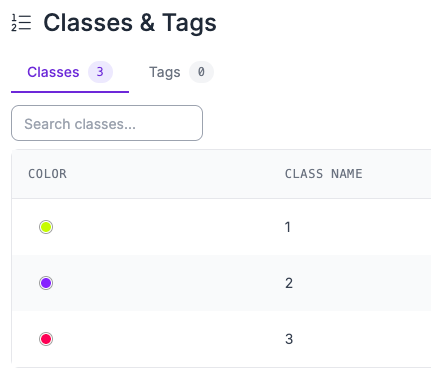

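For this project, I used numeric class names (1, 2, 3) instead of descriptive ones to speed up labeling. Whichever scheme you choose, keep your labels consistent and don't mix them up. In the frame above, the loonie is class "1", the quarters are class "2", and the dimes are class "3". The trained model reports these as class indices 0, 1, and 2, following the order of the names in the dataset's class list, and that is the mapping the app uses later.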
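Because the labels are plain numbers, the app needs a translation from the model's class index to a coin. Here is a minimal sketch, assuming your dataset's data.yaml lists the class names in the order "1", "2", "3". Check the names entry in your own export, because the index order comes from that list. The names datasetNames, coinForDatasetName and coinForClassIndex are hypothetical and used only for illustration:

```kotlin
// Hypothetical lookup, assuming data.yaml lists names as ["1", "2", "3"].
// The position in this list is the class index the model outputs.
val datasetNames = listOf("1", "2", "3")

// Numeric labels used while annotating, mapped to the coins they stand for
val coinForDatasetName = mapOf("1" to "loonie", "2" to "quarter", "3" to "dime")

// Returns the coin name for a model class index, or "unknown" if the index is out of range
fun coinForClassIndex(classId: Int): String =
    datasetNames.getOrNull(classId)?.let { coinForDatasetName[it] } ?: "unknown"
```

With this ordering, index 0 is the loonie, 1 is the quarter and 2 is the dime. This matches the classNames map in YoloModelManager.kt.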

Once you've annotated enough images (around 200 after augmentation), generate a new dataset version and train a model on it, making sure you choose a YOLOv11 model.

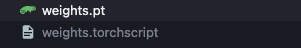

After training finishes, download the model weights and keep them somewhere handy. You should get a .pt file.

Next we'll install Android Studio.

Installing Android Studio and Preparing the Model

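If you haven't already, install Android Studio. Once it's installed, create a new empty project that uses Jetpack Compose (the code below uses the package name com.example.coincounter). The app also needs PyTorch Android (org.pytorch:pytorch_android and org.pytorch:pytorch_android_torchvision) to run the model and Coil (io.coil-kt:coil-compose) to display the selected image, so add those to your app module's Gradle dependencies.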

Next, we need to prepare the model for Android by converting the .pt model to TorchScript. A standard .pt checkpoint depends on Python code to load and cannot run on Android. TorchScript, by contrast, is a serialized, Python-independent format that PyTorch Mobile can execute on the device.

In your project's root directory, add the weights file you downloaded along with a Python script that converts it to TorchScript. The script needs the ultralytics Python package (pip install ultralytics). In the script, add:

```python
from ultralytics import YOLO

# Load the trained YOLO model (.pt file)
model = YOLO("your_model.pt")

# Export the model to TorchScript format
model.export(format="torchscript")
```

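Run the script (for example, python export.py if you saved it as export.py). It writes a new your_model.torchscript file in the same folder as the weights.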

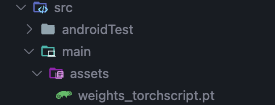
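Before you write the Android parsing code, it helps to know what the exported model returns. With default export settings, a YOLO11 detection model exported to TorchScript should return a single raw tensor shaped [1, 4 + number of classes, number of anchors]. For each anchor on the stride-8, 16 and 32 feature maps, it gives four box values (center x, center y, width, height in input pixels) followed by one score per class. Non-maximum suppression is not applied. You can confirm this by printing the output shape in Python. The sketch below computes the expected shape. It assumes a square input and those three strides, and expectedOutputShape is a hypothetical helper:

```kotlin
// Hypothetical helper: expected raw output shape of a YOLO11 detection model,
// assuming a square input and the usual detection strides of 8, 16 and 32.
fun expectedOutputShape(
    imgSize: Int,
    numClasses: Int,
    strides: List<Int> = listOf(8, 16, 32)
): List<Int> {
    // One anchor per grid cell on each feature map: (imgSize / stride)^2
    val numAnchors = strides.sumOf { stride -> (imgSize / stride) * (imgSize / stride) }
    // Channels: cx, cy, w, h, then one score per class (no separate objectness score)
    return listOf(1, 4 + numClasses, numAnchors)
}
```

For the three coin classes at 640×640, this gives [1, 7, 8400] (6400 + 1600 + 400 anchors). The app therefore has to read the tensor channel by channel and filter overlapping boxes itself.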

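Copy the exported .torchscript file into the app's assets folder (app/src/main/assets; create it if it doesn't exist). YoloModelManager.kt below loads the model by the name stored in modelFileName (weights_torchscript.pt), so either rename the file to match or update that value.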

Now we can start building our app.

Implementation

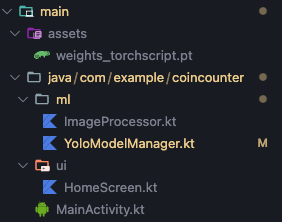

The app lets you select an image from your gallery, runs the TorchScript model on it to detect coins, and then adds up the value of the coins in the picture. The code is split across three files: HomeScreen.kt for the UI, plus YoloModelManager.kt and ImageProcessor.kt in the com.example.coincounter.ml package for inference and image handling.

In HomeScreen.kt, add:

```kotlin
package com.example.coincounter.ui

import android.content.Context
import androidx.activity.compose.rememberLauncherForActivityResult
import androidx.activity.result.contract.ActivityResultContracts
import androidx.compose.foundation.layout.*
import androidx.compose.foundation.rememberScrollState
import androidx.compose.foundation.verticalScroll
import androidx.compose.material3.*
import androidx.compose.runtime.*
import androidx.compose.ui.Alignment
import androidx.compose.ui.Modifier
import androidx.compose.ui.graphics.Color
import androidx.compose.ui.layout.ContentScale
import androidx.compose.ui.platform.LocalContext
import androidx.compose.ui.res.painterResource
import androidx.compose.ui.text.font.FontWeight
import androidx.compose.ui.unit.dp
import androidx.compose.ui.unit.sp
import coil.compose.AsyncImage
import com.example.coincounter.R
import com.example.coincounter.ml.Detection
import com.example.coincounter.ml.ImageProcessor
import com.example.coincounter.ml.YoloModelManager
import kotlinx.coroutines.Dispatchers
import kotlinx.coroutines.launch
import kotlinx.coroutines.withContext

@Composable
fun HomeScreen() {
    val context = LocalContext.current
    val scope = rememberCoroutineScope()

    // UI state
    var capturedImageUri by remember { mutableStateOf<String?>(null) }
    var detections by remember { mutableStateOf<List<Detection>>(emptyList()) }
    var totalValue by remember { mutableStateOf(0.0) }
    var isProcessing by remember { mutableStateOf(false) }
    var errorMessage by remember { mutableStateOf<String?>(null) }

    // Copies and loads the TorchScript model once for this screen
    val yoloModelManager = remember { YoloModelManager(context) }

    // Opens the system picker; uri is null if the user cancels
    val galleryLauncher = rememberLauncherForActivityResult(
        contract = ActivityResultContracts.GetContent()
    ) { uri ->
        uri?.let {
            capturedImageUri = it.toString()
            detections = emptyList()
            totalValue = 0.0
            isProcessing = true
            errorMessage = null
            scope.launch {
                processImage(it.toString(), context, yoloModelManager) { newDetections, newTotalValue, error ->
                    detections = newDetections
                    totalValue = newTotalValue
                    isProcessing = false
                    errorMessage = error
                }
            }
        }
    }

    Column(
        modifier = Modifier
            .fillMaxSize()
            .padding(16.dp)
            .verticalScroll(rememberScrollState()),
        horizontalAlignment = Alignment.CenterHorizontally
    ) {
        Spacer(modifier = Modifier.height(32.dp))
        Text(
            text = "Canadian Coin Counter",
            fontSize = 28.sp,
            fontWeight = FontWeight.Bold,
            modifier = Modifier.padding(bottom = 32.dp)
        )

        Button(
            // Only open the picker here; isProcessing is set once an image is actually chosen,
            // so cancelling the picker doesn't leave the spinner running
            onClick = { galleryLauncher.launch("image/*") },
            enabled = !isProcessing,
            modifier = Modifier
                .fillMaxWidth()
                .height(56.dp)
        ) {
            Icon(
                painter = painterResource(id = R.drawable.ic_gallery),
                contentDescription = "Gallery",
                modifier = Modifier.size(24.dp)
            )
            Spacer(modifier = Modifier.width(8.dp))
            Text("Choose from Gallery")
        }

        Spacer(modifier = Modifier.height(24.dp))

        errorMessage?.let { error ->
            Card(
                modifier = Modifier.fillMaxWidth(),
                colors = CardDefaults.cardColors(containerColor = Color(0xFFFFEBEE))
            ) {
                Text(text = error, color = Color(0xFFD32F2F), modifier = Modifier.padding(16.dp))
            }
            Spacer(modifier = Modifier.height(16.dp))
        }

        if (isProcessing) {
            CircularProgressIndicator(modifier = Modifier.size(48.dp))
            Text(text = "Processing image...", modifier = Modifier.padding(top = 8.dp))
            Spacer(modifier = Modifier.height(16.dp))
        }

        capturedImageUri?.let { uri ->
            Spacer(modifier = Modifier.height(16.dp))
            Card(
                modifier = Modifier.fillMaxWidth(),
                elevation = CardDefaults.cardElevation(defaultElevation = 4.dp)
            ) {
                AsyncImage(
                    model = uri,
                    contentDescription = "Selected image",
                    modifier = Modifier
                        .fillMaxWidth()
                        .height(300.dp),
                    contentScale = ContentScale.Crop
                )
            }
        }

        if (detections.isNotEmpty()) {
            Spacer(modifier = Modifier.height(16.dp))
            Card(
                modifier = Modifier.fillMaxWidth(),
                elevation = CardDefaults.cardElevation(defaultElevation = 4.dp)
            ) {
                Column(modifier = Modifier.padding(16.dp)) {
                    Text(
                        text = "Detection Results",
                        fontSize = 18.sp,
                        fontWeight = FontWeight.Bold,
                        modifier = Modifier.padding(bottom = 8.dp)
                    )
                    detections.forEach { detection ->
                        Row(
                            modifier = Modifier
                                .fillMaxWidth()
                                .padding(vertical = 4.dp),
                            horizontalArrangement = Arrangement.SpaceBetween
                        ) {
                            Text(
                                text = "${detection.label.capitalizeFirst()} (${(detection.confidence * 100).toInt()}%)",
                                fontWeight = FontWeight.Medium
                            )
                            // Per-coin value; keep in sync with YoloModelManager.getTotalValue()
                            Text(
                                text = "C$%.2f".format(
                                    when (detection.label.lowercase()) {
                                        "loonie" -> 1.00
                                        "quarter" -> 0.25
                                        "dime" -> 0.10
                                        else -> 0.0
                                    }
                                ),
                                color = Color.Green
                            )
                        }
                    }
                    Divider(modifier = Modifier.padding(vertical = 8.dp))
                    Row(
                        modifier = Modifier.fillMaxWidth(),
                        horizontalArrangement = Arrangement.SpaceBetween
                    ) {
                        Text(text = "Total Value:", fontWeight = FontWeight.Bold, fontSize = 16.sp)
                        Text(
                            text = "C$%.2f".format(totalValue),
                            fontWeight = FontWeight.Bold,
                            fontSize = 18.sp,
                            color = Color.Green
                        )
                    }
                }
            }
        }

        Spacer(modifier = Modifier.height(32.dp))
    }
}

// Loads the image and runs inference off the main thread, then reports the result via onComplete
private suspend fun processImage(
    imageUri: String,
    context: Context,
    yoloModelManager: YoloModelManager,
    onComplete: (List<Detection>, Double, String?) -> Unit
) {
    try {
        // Decoding reads from a content URI, so use the IO dispatcher
        val bitmap = withContext(Dispatchers.IO) {
            ImageProcessor.loadBitmapFromUri(context, imageUri)
        }
        if (bitmap == null) {
            onComplete(emptyList(), 0.0, "Failed to load image")
            return
        }
        // Resizing and inference are CPU-bound, so use the Default dispatcher
        val detections = withContext(Dispatchers.Default) {
            yoloModelManager.detectCoins(ImageProcessor.preprocessForYolo(bitmap))
        }
        if (detections.isEmpty()) {
            onComplete(emptyList(), 0.0, "No coins detected in the image")
            return
        }
        onComplete(detections, yoloModelManager.getTotalValue(detections), null)
    } catch (e: Exception) {
        e.printStackTrace()
        onComplete(emptyList(), 0.0, "Error processing image: ${e.message}")
    }
}

// Uppercases the first character of a label, e.g. "loonie" -> "Loonie"
private fun String.capitalizeFirst(): String =
    replaceFirstChar { if (it.isLowerCase()) it.titlecase() else it.toString() }
```

This code builds a simple UI that lets users pick an image from their gallery and uses a YOLOv11 model to detect Canadian coins. The main function, HomeScreen(), handles user interactions and displays the image, the detection results, and the total coin value. When an image is selected, the galleryLauncher callback calls processImage(). That function uses ImageProcessor to load and resize the image and YoloModelManager to run the model and return detections. Decoding runs on Dispatchers.IO and inference on Dispatchers.Default, so the UI stays responsive while the model works. The state variables detections, totalValue, isProcessing and errorMessage control what the screen shows as processing moves along. The button icon references R.drawable.ic_gallery, so add a drawable with that name to res/drawable (or use any icon you prefer).
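Tracking progress with several independent variables makes it easy for them to drift out of sync, for example a spinner that keeps running after the picker is cancelled. One alternative is a single sealed state type. This is a sketch of an option, not what the project does. CoinUiState, its subclasses and resultState are hypothetical names, and Detection is redeclared locally so the snippet compiles on its own:

```kotlin
// Local stand-in for com.example.coincounter.ml.Detection so this sketch is self-contained
data class Detection(val label: String, val confidence: Float)

// Hypothetical single source of truth for the screen: exactly one case is active at a time
sealed class CoinUiState {
    object Idle : CoinUiState()
    data class Processing(val imageUri: String) : CoinUiState()
    data class Success(
        val imageUri: String,
        val detections: List<Detection>,
        val totalValue: Double
    ) : CoinUiState()
    data class Error(val imageUri: String?, val message: String) : CoinUiState()
}

// Builds the state that follows an inference run, mirroring the outcomes handled in processImage()
fun resultState(imageUri: String, detections: List<Detection>?, totalValue: Double): CoinUiState = when {
    detections == null -> CoinUiState.Error(imageUri, "Failed to load image")
    detections.isEmpty() -> CoinUiState.Error(imageUri, "No coins detected in the image")
    else -> CoinUiState.Success(imageUri, detections, totalValue)
}
```

In HomeScreen(), this would replace the separate variables with one var uiState by remember { mutableStateOf<CoinUiState>(CoinUiState.Idle) }. The layout then becomes a when (uiState) over the four cases, so the screen can never show a spinner and a result at the same time.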

Next, add the following to YoloModelManager.kt:

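```kotlin
package com.example.coincounter.ml

import android.content.Context
import android.graphics.Bitmap
import org.pytorch.IValue
import org.pytorch.Module
import org.pytorch.Tensor
import org.pytorch.torchvision.TensorImageUtils
import java.io.File
import java.io.FileOutputStream
import java.io.IOException

// One detected coin; boundingBox is [x1, y1, x2, y2] in 640x640 model-input pixels
data class Detection(
    val label: String,
    val confidence: Float,
    val boundingBox: FloatArray
)

class YoloModelManager(private val context: Context) {

    private var module: Module? = null

    // Must match the TorchScript file name in app/src/main/assets
    private val modelFileName = "weights_torchscript.pt"

    // Model class index -> coin, following the order of the dataset's class names ("1", "2", "3")
    private val classNames = mapOf(
        0 to "loonie",
        1 to "quarter",
        2 to "dime"
    )

    private val inputSize = 640
    private val confidenceThreshold = 0.5f
    private val iouThreshold = 0.45f

    init {
        loadModel()
    }

    private fun loadModel() {
        try {
            val modelFile = copyAssetToInternalStorage(modelFileName)
            module = Module.load(modelFile.absolutePath)
        } catch (e: Exception) {
            // Leave module null; detectCoins() reports the failure to the caller
            e.printStackTrace()
        }
    }

    // Module.load() needs a file path, and assets aren't files, so copy the model out once.
    // An existing copy is reused, so a replaced asset is only picked up after clearing app data.
    private fun copyAssetToInternalStorage(assetName: String): File {
        val modelFile = File(context.filesDir, assetName)
        if (!modelFile.exists()) {
            try {
                context.assets.open(assetName).use { inputStream ->
                    FileOutputStream(modelFile).use { outputStream ->
                        inputStream.copyTo(outputStream)
                    }
                }
            } catch (e: IOException) {
                modelFile.delete() // don't leave a partial file behind
                throw e
            }
        }
        return modelFile
    }

    // Runs the model on a bitmap and returns coin detections after non-maximum suppression.
    // Throws if the model failed to load, so the UI shows an error instead of "no coins".
    fun detectCoins(bitmap: Bitmap): List<Detection> {
        val loadedModule = module
            ?: error("Model not loaded; check that $modelFileName is in the assets folder")
        val inputTensor = preprocessImage(bitmap)
        val output = loadedModule.forward(IValue.from(inputTensor))
        return processOutput(output)
    }

    private fun preprocessImage(bitmap: Bitmap): Tensor {
        val resized = if (bitmap.width == inputSize && bitmap.height == inputSize) bitmap
        else Bitmap.createScaledBitmap(bitmap, inputSize, inputSize, true)
        // bitmapToFloat32Tensor divides by 255 first, then applies (x - mean) / std,
        // so mean 0 and std 1 give the [0, 1] RGB input YOLO expects, shape [1, 3, 640, 640]
        return TensorImageUtils.bitmapToFloat32Tensor(
            resized,
            floatArrayOf(0f, 0f, 0f),
            floatArrayOf(1f, 1f, 1f)
        )
    }

    // Parses the raw YOLO11 output [1, 4 + numClasses, numAnchors], e.g. [1, 7, 8400]:
    // rows 0-3 are box center x, center y, width, height; the remaining rows are class scores
    private fun processOutput(output: IValue): List<Detection> {
        val outputTensor = output.toTensor()
        val shape = outputTensor.shape()
        require(shape.size == 3 && shape[1].toInt() == 4 + classNames.size) {
            "Unexpected model output shape ${shape.contentToString()}"
        }
        val numChannels = shape[1].toInt()
        val numAnchors = shape[2].toInt()
        val data = outputTensor.dataAsFloatArray

        val candidates = mutableListOf<Detection>()
        for (i in 0 until numAnchors) {
            // Data is channel-major: the value for (channel c, anchor i) is at c * numAnchors + i
            var bestClass = -1
            var bestScore = 0f
            for (c in 4 until numChannels) {
                val score = data[c * numAnchors + i]
                if (score > bestScore) {
                    bestScore = score
                    bestClass = c - 4
                }
            }
            if (bestScore < confidenceThreshold) continue

            val x = data[i]
            val y = data[numAnchors + i]
            val w = data[2 * numAnchors + i]
            val h = data[3 * numAnchors + i]
            candidates.add(
                Detection(
                    label = classNames[bestClass] ?: "unknown",
                    confidence = bestScore,
                    boundingBox = floatArrayOf(x - w / 2, y - h / 2, x + w / 2, y + h / 2)
                )
            )
        }
        return nonMaxSuppression(candidates)
    }

    // Class-agnostic non-maximum suppression: keep the most confident box and drop any box
    // that overlaps an already kept one too much, so a single coin isn't counted twice
    private fun nonMaxSuppression(candidates: List<Detection>): List<Detection> {
        val kept = mutableListOf<Detection>()
        for (candidate in candidates.sortedByDescending { it.confidence }) {
            if (kept.none { iou(it.boundingBox, candidate.boundingBox) > iouThreshold }) {
                kept.add(candidate)
            }
        }
        return kept
    }

    // Intersection over union of two [x1, y1, x2, y2] boxes
    private fun iou(a: FloatArray, b: FloatArray): Float {
        val interW = maxOf(0f, minOf(a[2], b[2]) - maxOf(a[0], b[0]))
        val interH = maxOf(0f, minOf(a[3], b[3]) - maxOf(a[1], b[1]))
        val intersection = interW * interH
        val union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - intersection
        return if (union > 0f) intersection / union else 0f
    }

    fun getTotalValue(detections: List<Detection>): Double {
        return detections.sumOf { detection ->
            when (detection.label.lowercase()) {
                "loonie" -> 1.00
                "quarter" -> 0.25
                "dime" -> 0.10
                else -> 0.0
            }
        }
    }
}
```

This code defines the YoloModelManager class. It loads the YOLO TorchScript model, runs inference on an image, and returns coin detections.

The model is first copied from the app's assets into internal storage (copyAssetToInternalStorage), because Module.load needs a file path. It is then loaded with PyTorch Mobile (loadModel).

When detectCoins() is called with a Bitmap, it converts the image into a [1, 3, 640, 640] tensor with values in [0, 1], runs it through the model, and passes the output to processOutput(). That function reads the raw YOLO11 output and keeps the best-scoring class for each candidate box above a 0.5 confidence threshold. It maps class indices to coin labels and then applies non-maximum suppression, so overlapping boxes on the same coin aren't counted twice. The 0.5 confidence and 0.45 overlap thresholds are starting points you can tune for your own model.

Finally, getTotalValue() sums the coin values based on their labels.

To prepare the image, add the following to ImageProcessor.kt: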

```kotlin
package com.example.coincounter.ml

import android.content.Context
import android.graphics.Bitmap
import android.graphics.BitmapFactory
import android.net.Uri
import java.io.IOException

object ImageProcessor {

    // Decodes the image behind a content URI; returns null if it can't be read
    fun loadBitmapFromUri(context: Context, uri: String): Bitmap? {
        return try {
            context.contentResolver.openInputStream(Uri.parse(uri))?.use { stream ->
                BitmapFactory.decodeStream(stream)
            }
        } catch (e: IOException) {
            e.printStackTrace()
            null
        } catch (e: SecurityException) {
            // Thrown if the app no longer has permission to read this URI
            e.printStackTrace()
            null
        }
    }

    fun resizeBitmap(bitmap: Bitmap, targetWidth: Int, targetHeight: Int): Bitmap {
        return Bitmap.createScaledBitmap(bitmap, targetWidth, targetHeight, true)
    }

    // Stretches (does not letterbox) the image to the 640x640 input size used by the model
    fun preprocessForYolo(bitmap: Bitmap): Bitmap {
        return resizeBitmap(bitmap, 640, 640)
    }
}
```

This code defines the ImageProcessor object, which loads and prepares images for YOLOv11 inference. The loadBitmapFromUri() function takes an image URI and decodes it into a Bitmap using Android's content resolver. resizeBitmap() scales any image to the given dimensions, and preprocessForYolo() resizes the image to 640×640 pixels, the input size expected by the YOLO model. This is a plain stretch rather than a letterbox, so it matches training images that were resized the same way (for example, a Roboflow dataset version using a "Stretch to" 640×640 resize). These utility functions ensure that images are in the right format before they are passed to the model.
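The "right format" here means RGB in channels-first (CHW) order with values scaled to [0, 1]. PyTorch Android's TensorImageUtils.bitmapToFloat32Tensor divides each channel by 255 before applying (value - mean) / std. A mean of 0 and a std of 1 therefore keep the values in [0, 1], while a std of 255 would divide twice and shrink them to roughly [0, 0.004].

The pure-Kotlin sketch below reproduces that conversion on raw ARGB pixel ints (the layout Bitmap.getPixels fills in) to make the tensor layout concrete. argbToChw is a hypothetical helper, not part of any library:

```kotlin
// Hypothetical helper: converts ARGB_8888 pixel ints (row-major, as from Bitmap.getPixels)
// into a CHW float array scaled to [0, 1], i.e. mean = 0 and std = 1 normalization.
fun argbToChw(pixels: IntArray, width: Int, height: Int): FloatArray {
    require(pixels.size == width * height) { "Expected ${width * height} pixels, got ${pixels.size}" }
    val planeSize = width * height
    val out = FloatArray(3 * planeSize)
    for (i in 0 until planeSize) {
        val c = pixels[i]
        out[i] = ((c shr 16) and 0xFF) / 255f                // red plane
        out[planeSize + i] = ((c shr 8) and 0xFF) / 255f     // green plane
        out[2 * planeSize + i] = (c and 0xFF) / 255f         // blue plane
    }
    return out
}
```

On a device, you would fill pixels with bitmap.getPixels(pixels, 0, width, 0, 0, width, height). You would then wrap the result with Tensor.fromBlob(floatArray, longArrayOf(1, 3, height.toLong(), width.toLong())), which gives the same layout the library helper produces.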

Finally, call HomeScreen() inside setContent in your MainActivity. With that, you can build and run the app in Android Studio!

Conclusion

Congratulations on deploying a custom YOLOv11 object detection model to Android!

If you have any questions about the project, you can check out the GitHub repository here.

Cite this Post

Use the following entry to cite this post in your research:

Aryan Vasudevan. (Aug 4, 2025). How to Create a YOLOv11 Android App. Roboflow Blog: https://blog.roboflow.com/yolov11-android-app/