Ampera Racing is a team formed by students from UFSC (Federal University of Santa Catarina) with the objective of developing an electric vehicle every year and promoting the mobility of the future.

In 2021, we realized that we could reach a new level by also building an autonomous vehicle, and so Ampera's driverless project started. The project is now at an advanced stage, and we intend to have one of the first autonomous racing vehicles in Latin America by the end of 2022.

In this post, you will learn how to create a low-cost self-driving vehicle pipeline using object detection.

How To Structure a Self-driving Vehicle's Architecture

In general, the software architecture of a self-driving car consists of 5 systems:

- Environment Perception: has two goals, locating the vehicle in space, and detecting and locating elements in the environment;

- Environment Mapping: creating different representations of the environment;

- Motion Planning: the perception and mapping modules are combined and used by the motion planning module to create a path through the environment;

- Controller: transforms the generated trajectory into a set of actuation commands for the vehicle;

- System Supervisor: continuously monitors all aspects of the self-driving car.
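To make the data flow between these five systems concrete, here is a minimal Python sketch. Every name in it (`Pipeline`, `step`, the stage callables) is hypothetical and only illustrates how the modules could be chained; it is not Ampera's actual code, and the supervisor behavior (skipping the cycle when unhealthy) is an assumption.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

Point = Tuple[float, float]  # (x, y) in meters


@dataclass
class Pipeline:
    """Hypothetical wiring of the five systems; each stage is a plain callable."""
    perceive: Callable[[object], List[Point]]         # sensor frame -> detected elements
    update_map: Callable[[List[Point]], List[Point]]  # detections -> environment map
    plan: Callable[[List[Point]], List[Point]]        # map -> path (list of waypoints)
    control: Callable[[List[Point]], float]           # path -> actuation command
    healthy: Callable[[], bool]                       # system supervisor check

    def step(self, sensor_frame: object) -> Optional[float]:
        # Assumed behavior: the supervisor can veto the whole cycle.
        if not self.healthy():
            return None
        detections = self.perceive(sensor_frame)
        world = self.update_map(detections)
        path = self.plan(world)
        return self.control(path)
```

Keeping each stage behind a simple callable makes it easy to swap one implementation for another, for example replacing one position estimation method with a cheaper one.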

Object Detection Tutorial for Formula Student Driverless

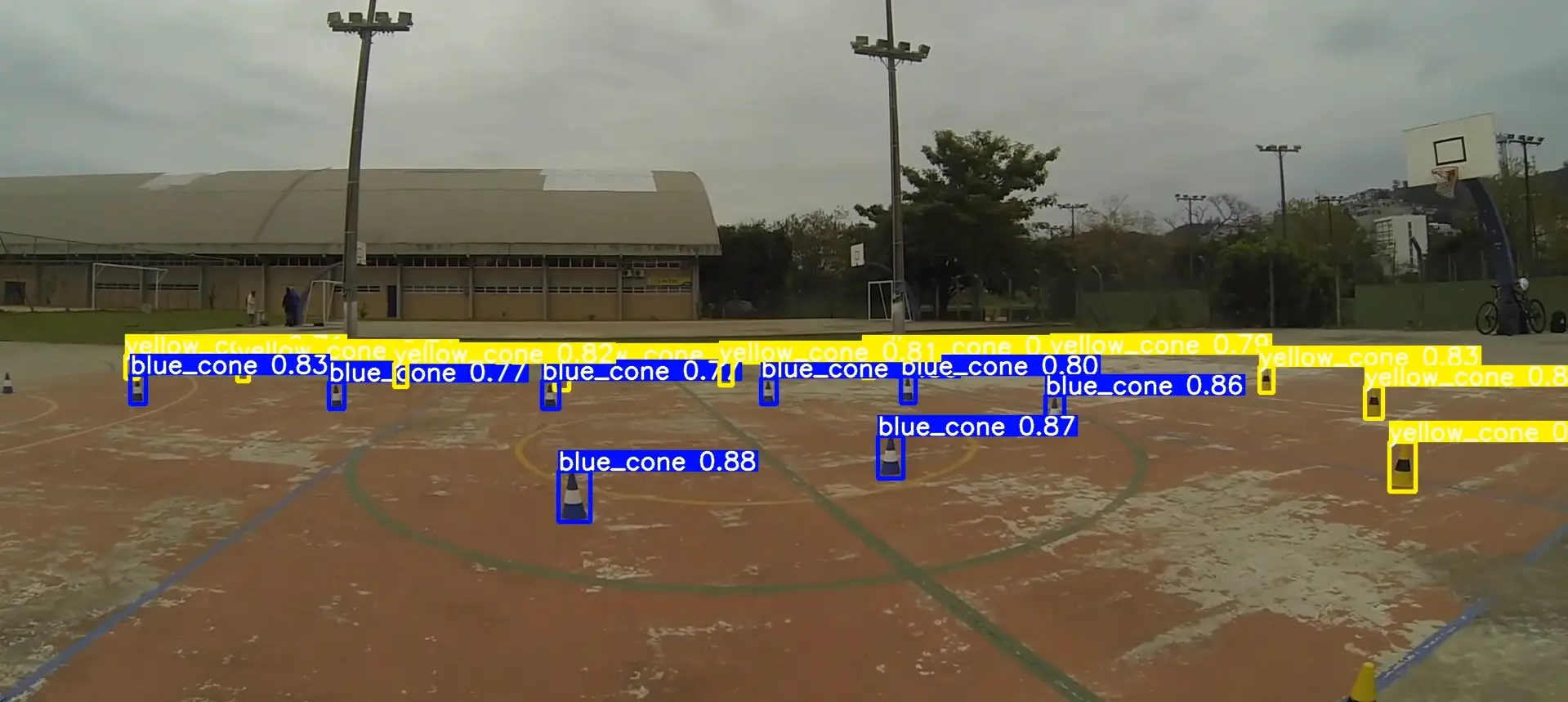

Object detection for Formula Student Driverless is much simpler than for a road-going autonomous vehicle because only cones need to be detected. For this, we use YOLOv5 as the convolutional neural network architecture. The results shown here come from a cone model obtained with the Roboflow training feature. When training a model for use on roads, datasets for self-driving cars can be used to cover other objects.
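YOLOv5 reports each detection as a row `(x1, y1, x2, y2, confidence, class)` in pixel coordinates. As a rough sketch of the step that follows detection, the helper below keeps the confident boxes and reduces each one to its center point. The function name `box_centers` and the 0.5 threshold are assumptions made for this example.

```python
from typing import Iterable, List, Sequence, Tuple


def box_centers(detections: Iterable[Sequence[float]],
                min_confidence: float = 0.5) -> List[Tuple[float, float]]:
    """Return the pixel center of every box whose confidence passes the threshold.

    Each detection is (x1, y1, x2, y2, confidence, class_id), the row layout
    YOLOv5 uses for its `xyxy` results. The 0.5 threshold is an assumed value.
    """
    centers = []
    for x1, y1, x2, y2, confidence, _class_id in detections:
        if confidence >= min_confidence:
            centers.append(((x1 + x2) / 2.0, (y1 + y2) / 2.0))
    return centers
```

For example, `box_centers([(100, 200, 140, 260, 0.9, 0)])` gives one point at pixel `(120.0, 230.0)`.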

The competition is divided into three parts: Business Plan Presentation Event, Design Highlight Challenge, and Dynamic Events. In 2020 and 2021, Ampera Racing participated in Formula Student Online, an international competition created to replace the in-person competitions canceled in 2020 due to COVID-19. We competed with the best in the world and placed 4th overall in 2020 and 3rd overall in 2021, in the electric and driverless categories, respectively.

Although there is still no driverless competition in Brazil, we are working to have this category here in the near future. In the meantime, we intend to participate in Formula Student Germany with our self-driving prototype.

Position estimation with just one monocular camera

One of the main challenges of the environment perception module is to get a good approximation of an object's distance from the vehicle once the object has been detected. This task would be simple with a Lidar sensor, which we use in our normal pipeline. However, it can also be done using just a monocular camera with object detection. At Ampera Racing, we tested two methods that gave good results: a keypoints neural network, which has a high computational cost, and a bird's-eye view, which is much faster.

Keypoints Neural Network

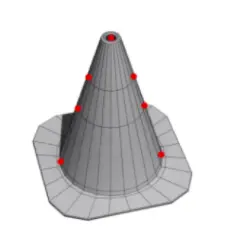

We realized that it would be possible to obtain a 3D position of the cone using the Perspective-n-Point algorithm, which estimates the position of an object from the camera parameters, the real geometry of the object, and specific points of the object in the image, called keypoints. We therefore defined seven keypoints for the cone: 2 points at its base, 4 points at the stripe, and 1 point at the apex.

Since the chosen points were similar to corner features (which have gradients in two directions instead of one, making them a distinctive feature), a convolutional neural network capable of detecting these points could be created. The following is a result of the integration between the cone detection, keypoints detection, and Perspective-n-Point:

However, the computational cost is high: this approach runs at only 0.3 FPS on a Jetson Nano.

Bird's-eye View

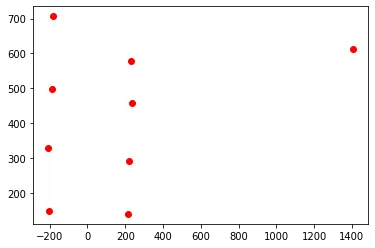

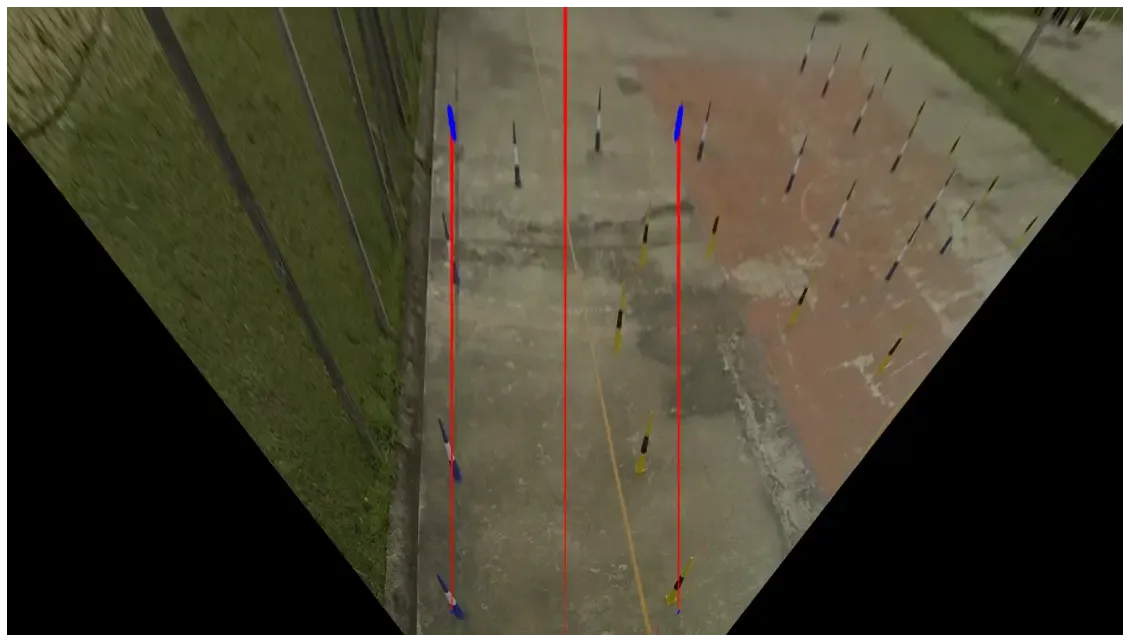

Another method is the bird's-eye view, also called inverse perspective mapping, which creates a top view of the image. It can be performed using OpenCV.

- OpenCV: Geometric Transformation of Images (see Perspective Transformation)

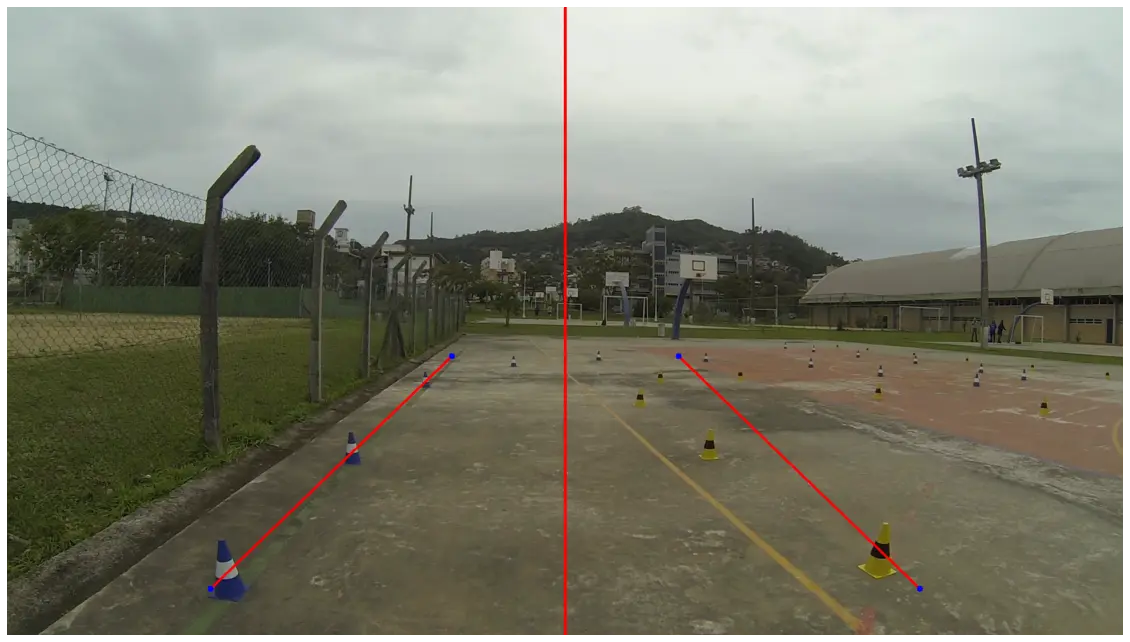

After that, we identify the centers of the cones' bounding boxes in the transformed image and, based on the real distance between the cones (approximately 5 meters in the competition), we create a scale to convert image coordinates into real-world coordinates.

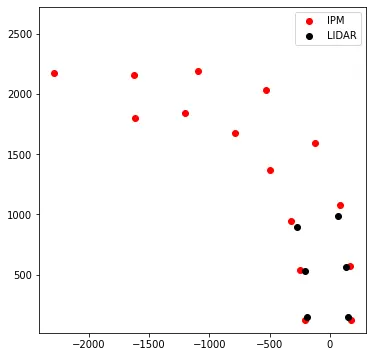

Although this method is not more accurate, its much lower computational cost makes it far more attractive than the keypoints method. Once the relationship between the coordinate system of the transformation and the global reference frame is known, it is possible to obtain surprisingly good results. Below is a comparison between the positions of the cones mapped by inverse perspective and the cones detected by a lidar, a sensor designed to obtain spatial information about its surroundings.

Path Planning

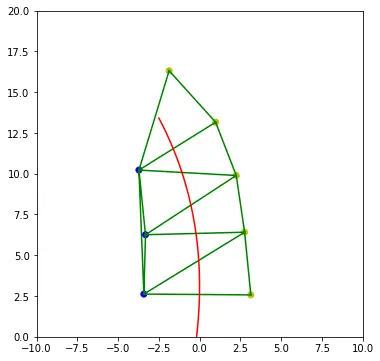

Our path planning algorithm consists of three steps. First, the space is discretized using a triangulation algorithm. Then, a tree of possible paths is built on top of this discretization. Finally, all paths are ranked by a cost function and the path with the lowest cost is chosen.

To discretize the space, Delaunay triangulation is used: the vertices of the generated triangles are the cones, and the midpoints of specific edges are connected to generate trajectories.
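The discretization step can be sketched with `scipy.spatial.Delaunay`. The text does not say which edges count as "specific", so this sketch assumes they are the edges that join a cone on the left boundary to a cone on the right boundary; that rule, and the function name `track_midpoints`, are assumptions for illustration.

```python
import numpy as np
from scipy.spatial import Delaunay


def track_midpoints(left_cones: np.ndarray, right_cones: np.ndarray) -> np.ndarray:
    """Midpoints of Delaunay edges that join a left cone to a right cone."""
    cones = np.vstack([left_cones, right_cones]).astype(float)
    is_left = np.arange(len(cones)) < len(left_cones)
    triangulation = Delaunay(cones)

    edges = set()
    for a, b, c in triangulation.simplices:
        for i, j in ((a, b), (b, c), (a, c)):
            i, j = int(i), int(j)
            if is_left[i] != is_left[j]:  # keep only edges that cross the track
                edges.add((min(i, j), max(i, j)))

    return np.array([(cones[i] + cones[j]) / 2.0 for i, j in sorted(edges)])
```

On a straight track with cones at `y = 1.5` and `y = -1.5`, every returned midpoint lies on the centerline `y = 0`, which is the kind of waypoint the path tree is built from.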

However, it is possible to generate several different trajectories, many of them inefficient and sometimes even wrong, leading to a planned path that veers off the track. From this tree of possibilities, a cost is defined for each path, which is related to the yaw change, the total length of the path, and the permanence of the car inside the track along the route. Finally, the path with the lowest cost is selected.
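As a minimal sketch of such a cost function, the code below adds up the accumulated yaw change, the path length, and a penalty for every waypoint outside the track, then picks the cheapest candidate. The weights, the `inside_track` callback, and the way off-track time is counted are hypothetical choices for this example, not our tuned values.

```python
import math
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]


def path_cost(path: Sequence[Point],
              inside_track: Callable[[Point], bool],
              w_yaw: float = 1.0,
              w_length: float = 0.1,
              w_off_track: float = 100.0) -> float:
    """Weighted sum of yaw change, length, and number of off-track waypoints."""
    length = 0.0
    yaw_change = 0.0
    previous_heading = None
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        length += math.hypot(x1 - x0, y1 - y0)
        heading = math.atan2(y1 - y0, x1 - x0)
        if previous_heading is not None:
            delta = heading - previous_heading
            # Wrap to [-pi, pi] so a turn across the +/-pi boundary stays small.
            yaw_change += abs(math.atan2(math.sin(delta), math.cos(delta)))
        previous_heading = heading
    off_track = sum(1 for p in path if not inside_track(p))
    return w_yaw * yaw_change + w_length * length + w_off_track * off_track


def best_path(paths: List[Sequence[Point]],
              inside_track: Callable[[Point], bool]) -> Sequence[Point]:
    """Pick the candidate with the lowest cost."""
    return min(paths, key=lambda p: path_cost(p, inside_track))
```

With these weights, a single off-track waypoint outweighs any reasonable difference in length or curvature, so paths that leave the track are rejected first.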

The following is a result of this trajectory planning integrated with the 3D position of the cones via inverse perspective mapping.

Lateral Control

With the path planned, this information must be passed to the vehicle's actuators as commands. In our case, only the steering angle is commanded, and the speed is kept constant. We have already used 3 methods to perform this lateral control: Pure Pursuit, Stanley Controller, and Model Predictive Control. The best known and easiest to implement is Pure Pursuit, and at lower speeds it is enough to tackle the lateral control challenge.

In this article, you can delve deeper into these lateral control methods.
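To give an idea of how little code Pure Pursuit needs, here is a minimal sketch of its steering law for a bicycle model: the steering angle is `atan(2 L sin(alpha) / l_d)`, where `L` is the wheelbase, `l_d` the distance to the lookahead point, and `alpha` the angle between the vehicle heading and that point. The function name and the convention that a positive angle steers left are assumptions; choosing the lookahead point on the path is left out.

```python
import math


def pure_pursuit_steering(x: float, y: float, yaw: float,
                          target_x: float, target_y: float,
                          wheelbase: float) -> float:
    """Steering angle (rad) that puts the rear axle on an arc through the target.

    (x, y, yaw) is the rear-axle pose; the target is the lookahead point on the path.
    """
    dx, dy = target_x - x, target_y - y
    lookahead = math.hypot(dx, dy)
    if lookahead == 0.0:
        return 0.0  # already on the target: no steering needed
    alpha = math.atan2(dy, dx) - yaw  # bearing of the target relative to the heading
    return math.atan2(2.0 * wheelbase * math.sin(alpha), lookahead)
```

A target straight ahead gives a steering angle of zero, and the angle grows as the target moves to the side or gets closer, which is why the lookahead distance is the main tuning parameter.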

How to Build Your Own Self-Driving Systems

We can implement this pipeline on the Formula Student Driverless Simulator, a community project that recreates the racing environment and simulates all commonly used sensors. We use Python and the Robot Operating System (ROS) to build our autonomous system.

With more time and resources, you can test your pipeline in the real world by building a miniature vehicle. Ampera Racing did this with a remote control car to which we added sensors such as motor encoders, GPS, an inertial measurement unit (IMU), a monocular camera, and a Lidar. A Jetson Nano and an Arduino Uno were used as the master computer and the slave computer, respectively. A monocular camera is a common type of vision sensor used in automated driving applications.

Conclusion

The challenge that we at Ampera Racing will have to face by the end of the year goes far beyond what has been covered in this post. Still, we hope the methods presented here gave you a simplified understanding of how an autonomous vehicle can locate itself, plan its movement, and execute these commands using object detection.