What is CreateML?

CreateML is a machine learning tool developed by Apple that you can use to build models that work with images, sound, text, and more. CreateML integrates with the rest of the Apple ecosystem, providing a no-code platform through which you can train models for use in your applications.

In terms of computer vision, CreateML supports both image classification ("which of a set of labels best represents the contents of an image?") and object detection ("where are different instances of an object in an image?").

While Apple's platform is no-code, you still need data to build your model.

To load data into CreateML, you'll need it in the proper format. Unfortunately, CreateML uses a proprietary JSON format that isn't widely supported by labeling tools, so most users will need to write some boilerplate code to convert their annotations.
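To give a sense of what that boilerplate looks like, here is a minimal sketch of a converter. It assumes your labeling tool gives you corner-style boxes (`xmin, ymin, xmax, ymax` in pixels) and that CreateML expects one JSON array with an entry per image, where `x` and `y` are the center of each box. The function name `to_createml`, the file name, and the label are made up for illustration.

```python
import json

def to_createml(image_name, boxes):
    """Build one CreateML-style entry for a single image.

    boxes: list of (label, xmin, ymin, xmax, ymax) tuples in pixels
    (an assumed input layout; adapt it to your labeling tool).
    """
    annotations = []
    for label, xmin, ymin, xmax, ymax in boxes:
        width = xmax - xmin
        height = ymax - ymin
        annotations.append({
            "label": label,
            "coordinates": {
                # CreateML measures x and y at the center of the box.
                "x": xmin + width / 2,
                "y": ymin + height / 2,
                "width": width,
                "height": height,
            },
        })
    return {"image": image_name, "annotations": annotations}

# Hypothetical example: one image with a single labeled box.
entries = [to_createml("photo1.jpg", [("mask", 100, 50, 200, 150)])]
print(json.dumps(entries, indent=2))
```

The center-based coordinates are the part that most often trips people up, since many other formats store the top-left corner instead.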

Luckily for us, Roboflow is the universal converter for computer vision and so by combining Roboflow with CreateML we can fully realize the vision of no-code model training.

To build a computer vision model using Apple's CreateML, we will:

- Collect data;

- Configure an object detection model in CreateML;

- Start training; and

- Evaluate model performance.

Without further ado, let's get started!

Setup to Use Apple's CreateML for Computer Vision

To complete this tutorial, you will need an Apple macOS device with CreateML installed (it comes free with Xcode, which is available in the Mac App Store).

Collect Data to Use With CreateML

You can collect data simply by taking pictures with your iPhone. Collect a variety of photos of your subject from different angles, with different backgrounds, and in different lighting. The more variation in the photos the better.

Once you've got a hundred or so images you're ready to label them. There are a variety of free tools available to do this. Take your pick from: Roboflow, CVAT, VoTT, LabelMe, or LabelImg and create bounding boxes around the objects you'd like to detect.

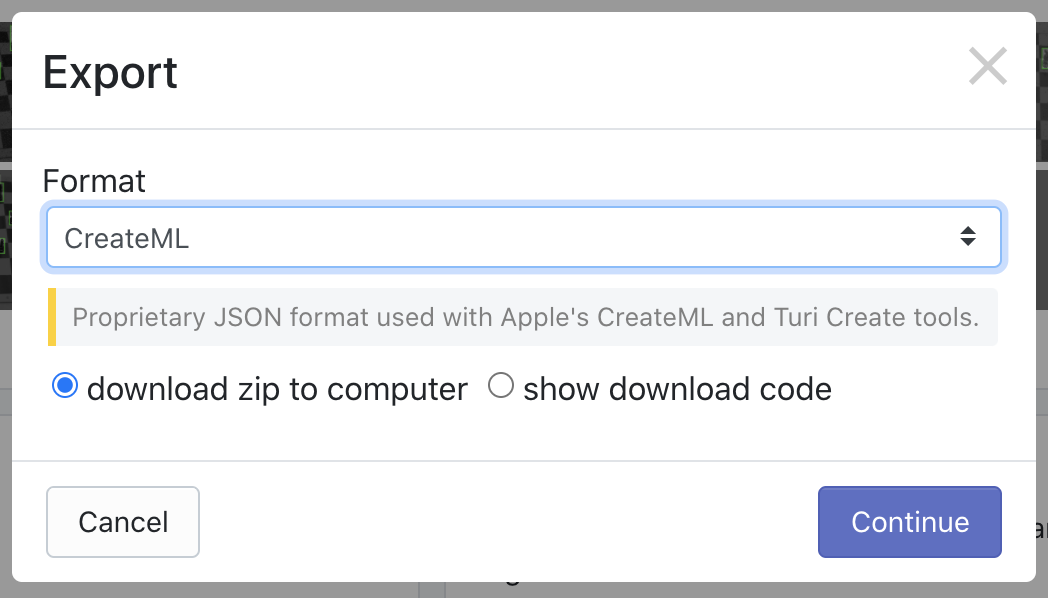

Then drop your images and annotations into Roboflow and export in CreateML format (optionally add some augmentations to increase the size of your dataset).
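To make "augmentation" a bit more concrete, here is a rough sketch of what a horizontal flip does to an annotation. This is not Roboflow's implementation, just an illustration that assumes a CreateML-style entry with center-based `x` and `y` coordinates; `flip_horizontal` and the sample values are hypothetical.

```python
import copy

def flip_horizontal(entry, image_width):
    """Return a copy of a CreateML-style entry mirrored left to right.

    Only the annotation is transformed here; the image pixels would
    need to be flipped separately with an imaging library.
    """
    flipped = copy.deepcopy(entry)
    for ann in flipped["annotations"]:
        coords = ann["coordinates"]
        # The box center mirrors across the vertical midline;
        # width, height, and y stay the same.
        coords["x"] = image_width - coords["x"]
    return flipped

# Hypothetical annotation for a 640-pixel-wide photo.
original = {
    "image": "photo1.jpg",
    "annotations": [
        {"label": "mask",
         "coordinates": {"x": 150, "y": 100, "width": 100, "height": 100}},
    ],
}
mirrored = flip_horizontal(original, image_width=640)
print(mirrored["annotations"][0]["coordinates"])
```

Every augmented copy needs its boxes transformed along with its pixels, which is why it's convenient to let a tool handle this step for you.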

If you don't want to spend the time collecting and labeling your own images, you can also choose an open source dataset from Roboflow Universe and download directly in CreateML JSON format.

Train a Computer Vision Model with CreateML

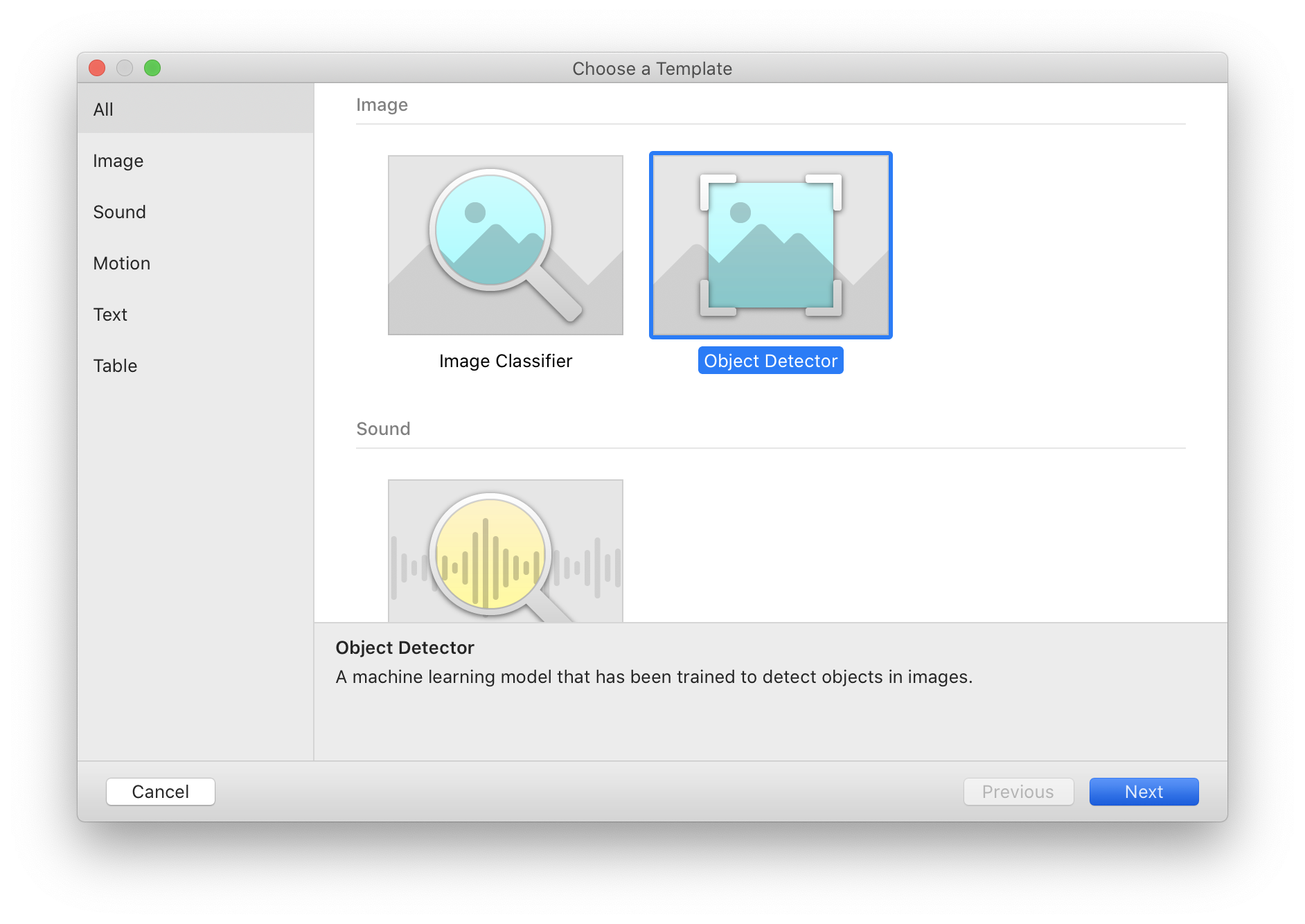

Now comes the fun part. We're ready to train a model! Fire up CreateML and create a new Object Detector project.

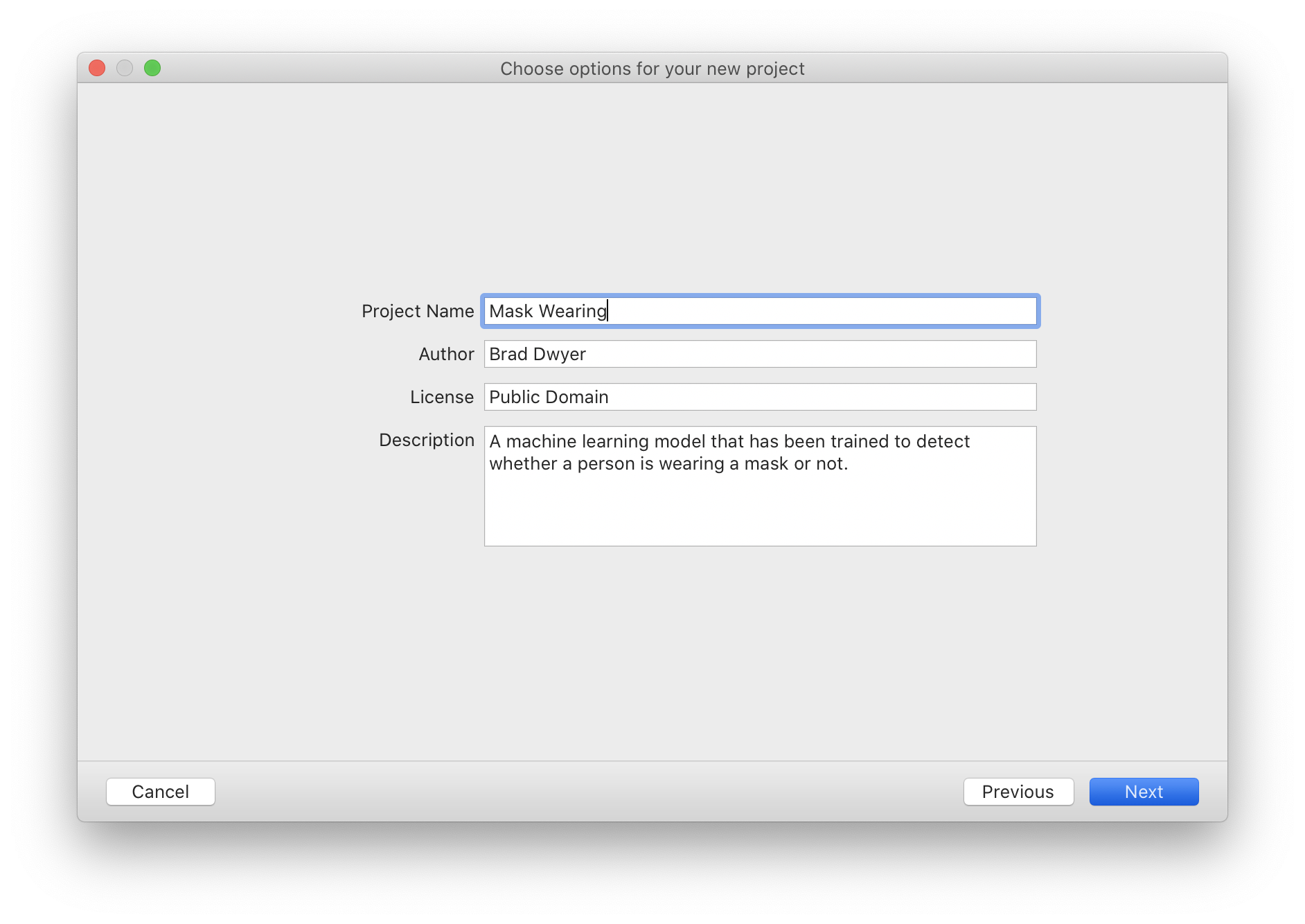

Next, give your project a name and description. I'll be training a model to detect whether a person is wearing a mask or not (based on this public mask wearing dataset).

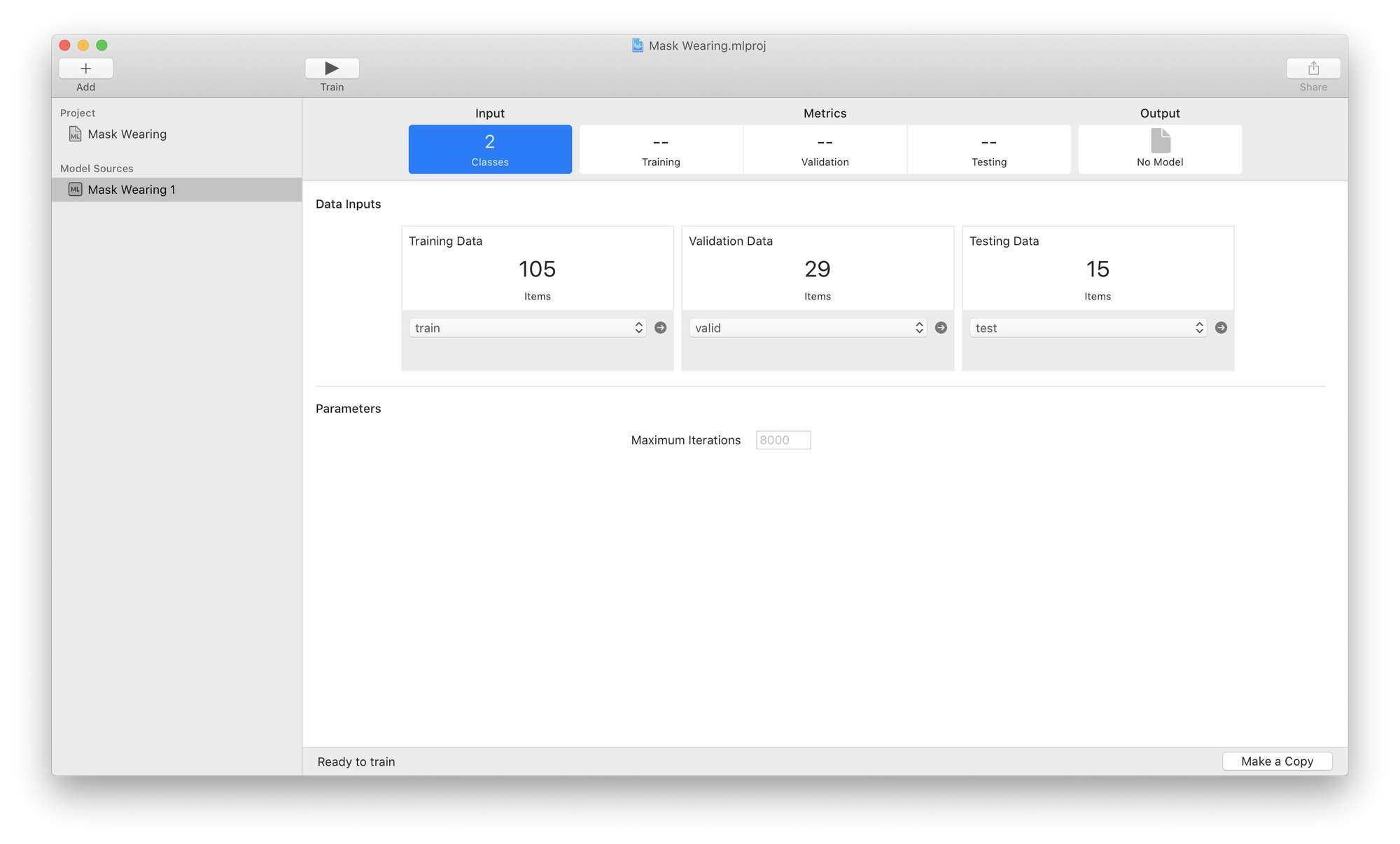

Then we'll unzip the download from Roboflow and point CreateML at the training, validation, and testing data we downloaded.
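Before pointing CreateML at a folder, it can be worth a quick sanity check that every annotated image is actually there. The sketch below assumes each split folder holds its images next to a single annotations file; the default file name `_annotations.createml.json` is my assumption about the export layout, so adjust it to match whatever your unzipped download contains.

```python
import json
from pathlib import Path

def missing_images(split_dir, annotations_name="_annotations.createml.json"):
    """List images that the annotations file references but the folder lacks.

    annotations_name is an assumed default, not a documented constant.
    """
    split_dir = Path(split_dir)
    entries = json.loads((split_dir / annotations_name).read_text())
    return [
        entry["image"]
        for entry in entries
        if not (split_dir / entry["image"]).exists()
    ]

def check_export(root, splits=("train", "valid", "test")):
    """Run the check for each split folder that exists under root.

    The split folder names are also an assumption about the export.
    """
    report = {}
    for split in splits:
        folder = Path(root) / split
        if folder.is_dir():
            report[split] = missing_images(folder)
    return report
```

Calling `check_export("path/to/unzipped/export")` returns a dictionary mapping each split to its list of missing files; empty lists mean the folder is consistent with its annotations.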

And we're ready to train. Just hit the "Train" button at the top and we're on our way. Training might take a while, so grab a coffee (or let it run overnight, depending on the size of your dataset and the number of iterations you picked).

A word of warning: your computer is going to be working in overdrive so don't expect to be able to use it for other things while the model is training.

Evaluate Model Performance in CreateML

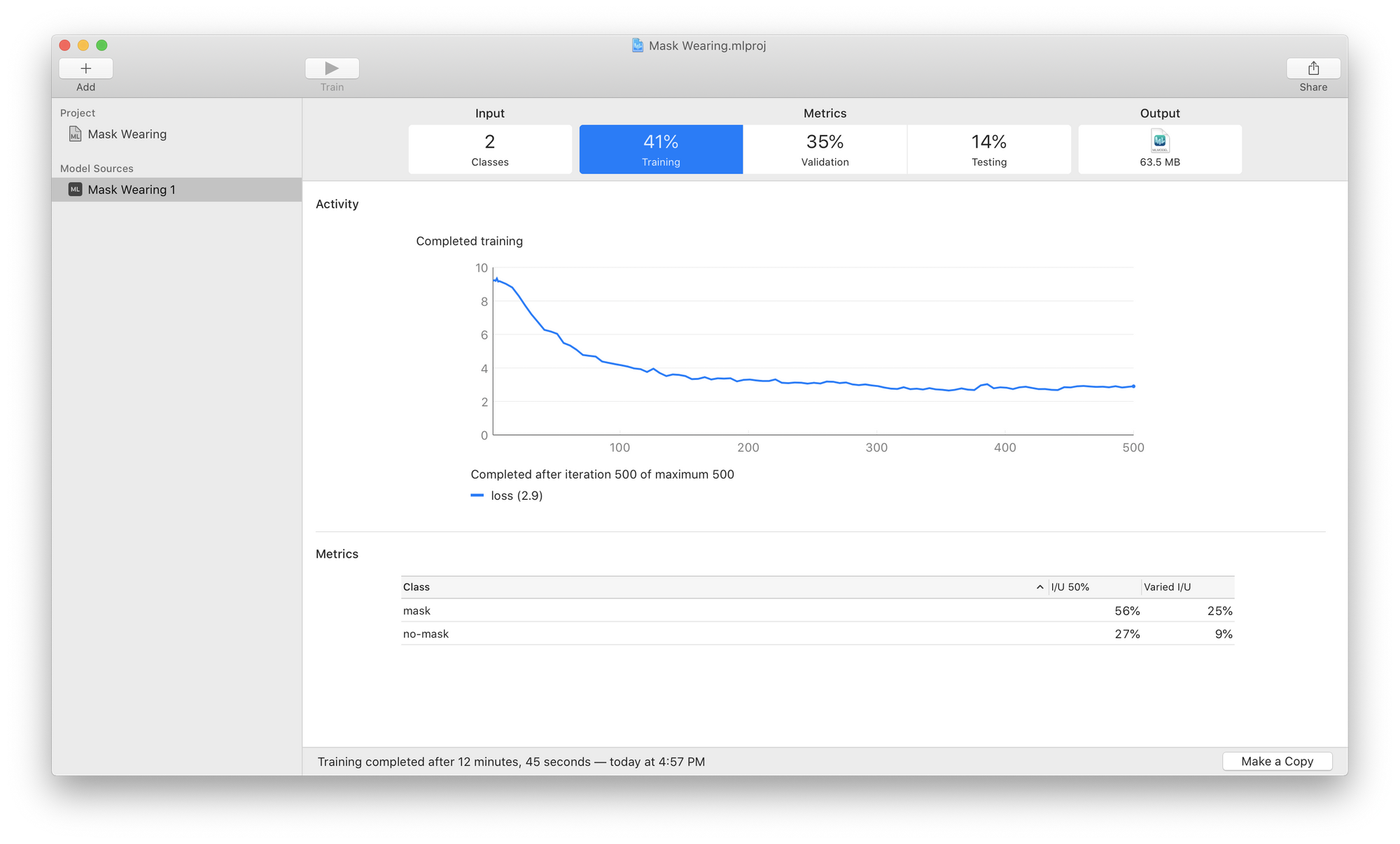

As your model trains, you'll watch the blue line tick to the right (and hopefully down). This line measures your validation loss, which is one measure of how well your model is fitting your dataset. Lower numbers are better.

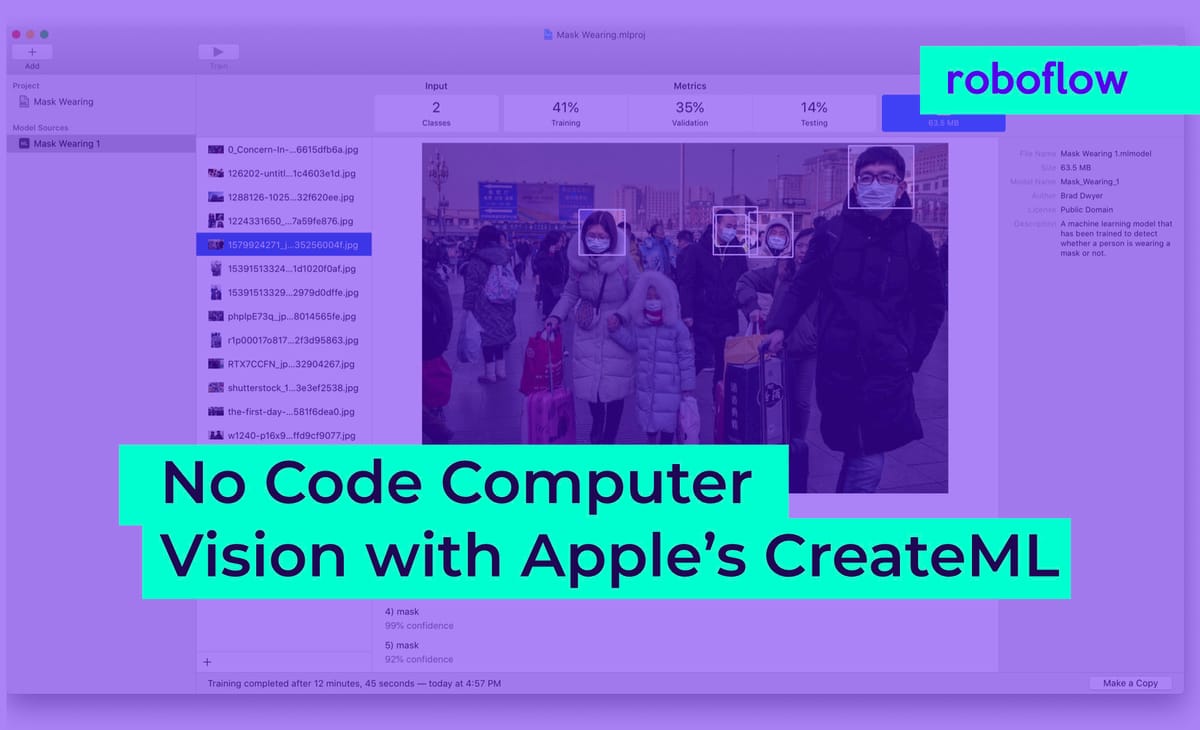

At the end of training you will see a percentage for Intersection Over Union (I/U) for each class and a Mean Average Precision for your Training, Validation, and Testing sets at the top. Higher values are better here.
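If Intersection over Union is new to you, it is simply the area where a predicted box and a ground-truth box overlap, divided by the total area the two boxes cover together. Here is a small sketch of the calculation, assuming boxes are given as `(x, y, width, height)` with `x` and `y` at the box center, as in CreateML annotations. The function `iou` is my own illustration, not something CreateML exposes.

```python
def iou(box_a, box_b):
    """Intersection over Union of two (x, y, width, height) boxes,
    where x and y are the box center."""
    # Convert center-based boxes to corner coordinates.
    ax1, ay1 = box_a[0] - box_a[2] / 2, box_a[1] - box_a[3] / 2
    ax2, ay2 = box_a[0] + box_a[2] / 2, box_a[1] + box_a[3] / 2
    bx1, by1 = box_b[0] - box_b[2] / 2, box_b[1] - box_b[3] / 2
    bx2, by2 = box_b[0] + box_b[2] / 2, box_b[1] + box_b[3] / 2

    # Overlap is zero when the boxes do not intersect.
    overlap_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    overlap_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = overlap_w * overlap_h

    union = box_a[2] * box_a[3] + box_b[2] * box_b[3] - intersection
    return intersection / union if union > 0 else 0.0

# Two 100x100 boxes offset by half their width share a third of their union.
print(iou((50, 50, 100, 100), (100, 50, 100, 100)))
```

A score of 1.0 means the prediction matches the label exactly, and 0.0 means the two boxes don't touch at all.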

From my screenshot above, we can see that the model is better at detecting faces with masks than faces without masks, and that it does much better on the training and validation data than on the testing data. This means the model did not generalize very well to data it hadn't seen before.

To address this issue I should collect or generate more training data (particularly of people not wearing masks) or add augmentations and re-export a new version from Roboflow.
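A quick way to confirm a class imbalance like this is to count how many boxes each label has in your annotations. The sketch below assumes the CreateML-style structure (a list of entries, each with an `annotations` list of labeled boxes); the label names and counts are invented for the example and are not taken from the mask dataset.

```python
from collections import Counter

def count_labels(entries):
    """Count bounding boxes per label across all CreateML-style entries."""
    return Counter(
        ann["label"]
        for entry in entries
        for ann in entry["annotations"]
    )

# Hypothetical annotations; in practice you would json.load your own file.
sample_entries = [
    {"image": "a.jpg", "annotations": [{"label": "mask"}, {"label": "mask"}]},
    {"image": "b.jpg", "annotations": [{"label": "mask"}, {"label": "no-mask"}]},
    {"image": "c.jpg", "annotations": []},
]
label_counts = count_labels(sample_entries)
for label, count in label_counts.most_common():
    print(f"{label}: {count}")
```

If one class has far fewer boxes than the others, that is a good hint about where to focus your next round of data collection.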

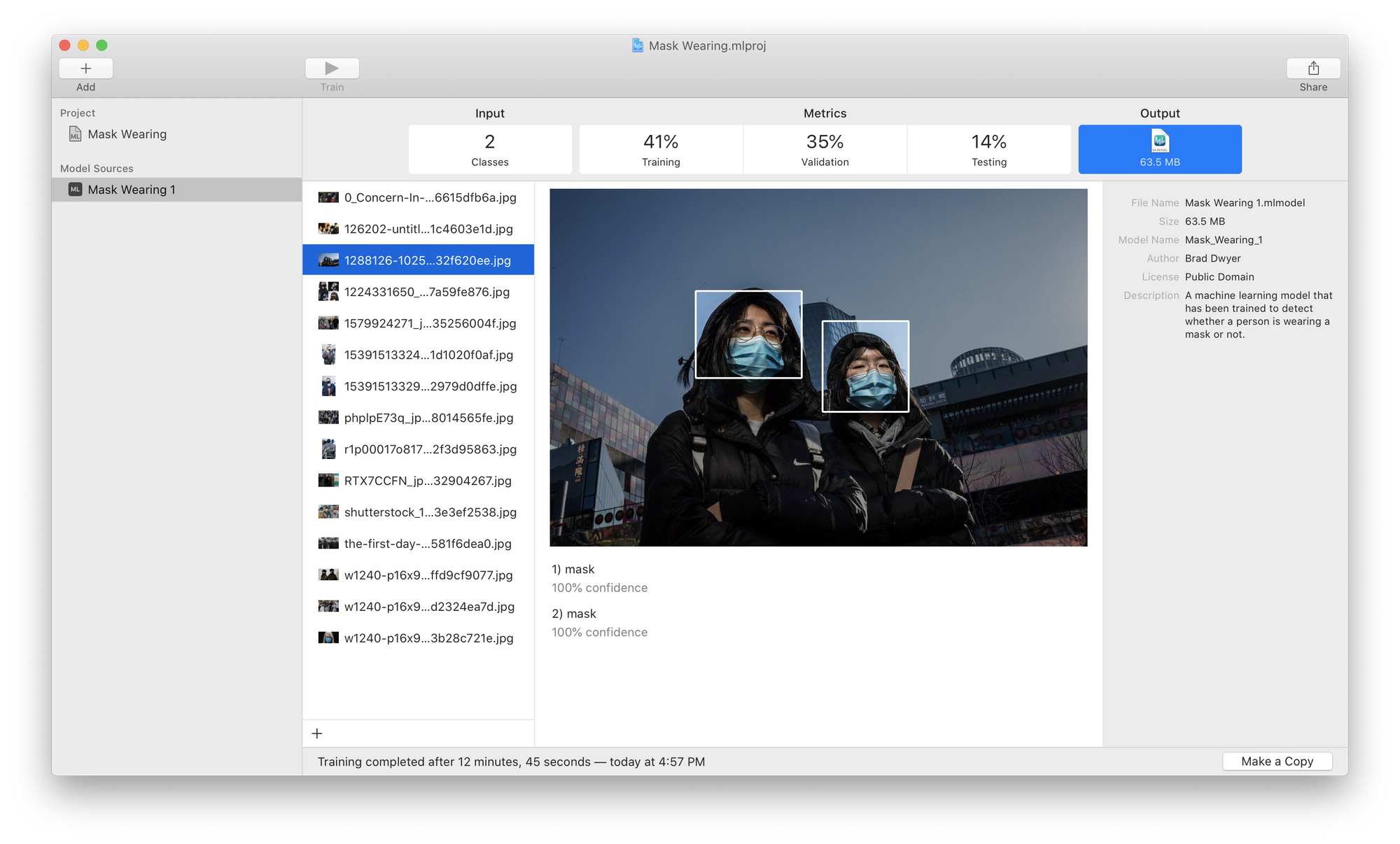

But numbers can be hard to grok. To truly see how your model performs, click on the "Output" tab and drop your test images in to see a visualization of your model prediction.

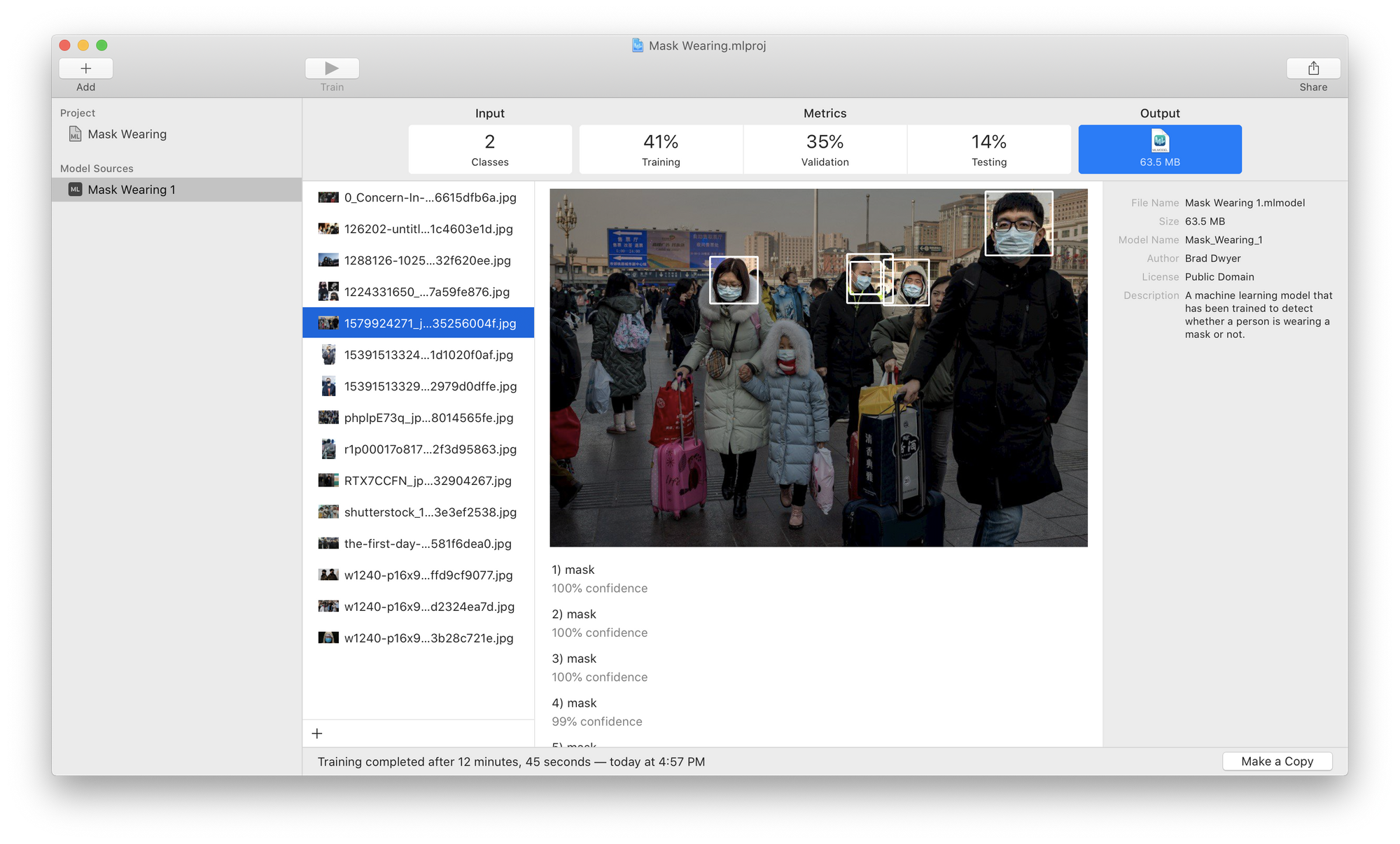

Here we can see that our model actually did quite well! It detected two people with masks. Unfortunately, it did worse on some other images; in one example, it missed the child with the mask and the lady whose face was partially obscured by the man's arm.

Use CreateML in a Sample App

This is a pretty good start! I can actually drop this CoreML file directly into the Apple sample app and run it on my iPhone's live camera feed.

But getting a model that is good enough to use in production requires experimentation and iteration. To improve this model I would go out and collect more varied training examples, add them to my dataset, and train another version of my model.

Then repeat the process of identifying where it's least accurate, adding more data, and training again until it's ready for prime time!

How did your model do? Did you find some augmentations that improved your model's performance? Tweet us, we'd love to hear!

Cite this Post

Use the following entry to cite this post in your research:

Brad Dwyer. (Sep 18, 2020). How to Build Computer Vision Models with Apple CreateML. Roboflow Blog: https://blog.roboflow.com/createml/