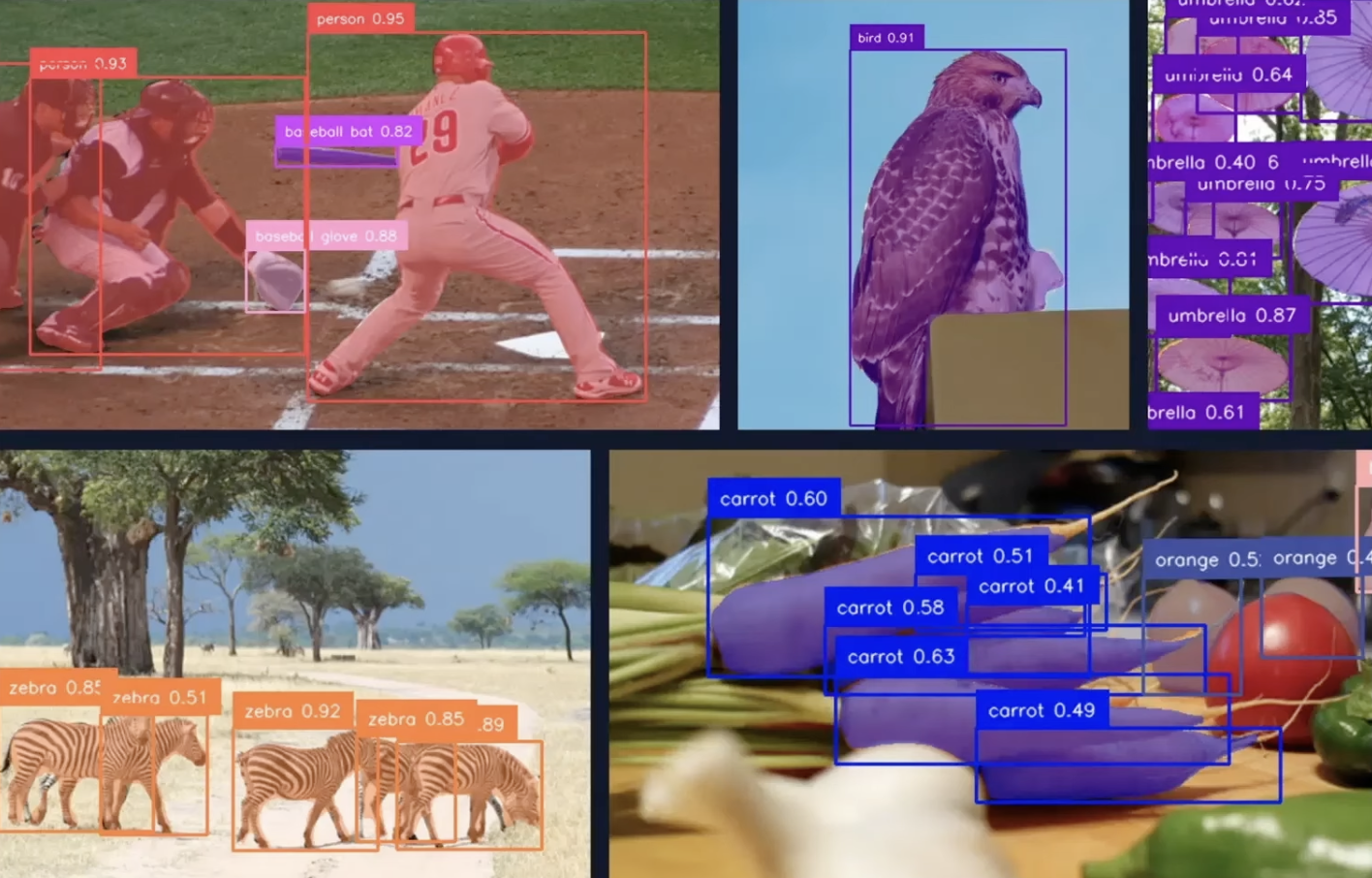

Defect detection has become essential across modern manufacturing, transforming from reactive quality inspection to proactive prevention. Today, manufacturers leverage advanced deep learning algorithms combined with high-resolution imaging to identify flaws in real time, from microscopic scratches on circuit boards to subtle structural anomalies in aerospace components. With the rapid evolution of vision AI, a new generation of optimized models makes it possible to detect and segment manufacturing defects with unprecedented precision while maintaining the speed required for production lines.

In this guide, we explore the best defect detection algorithms for manufacturing, from classical approaches to cutting-edge transformer architectures. We'll examine why instance segmentation has emerged as the superior approach for most manufacturing quality control scenarios, showcase the top performing models, and demonstrate how to deploy them efficiently across production environments.

What Is Defect Detection in Manufacturing?

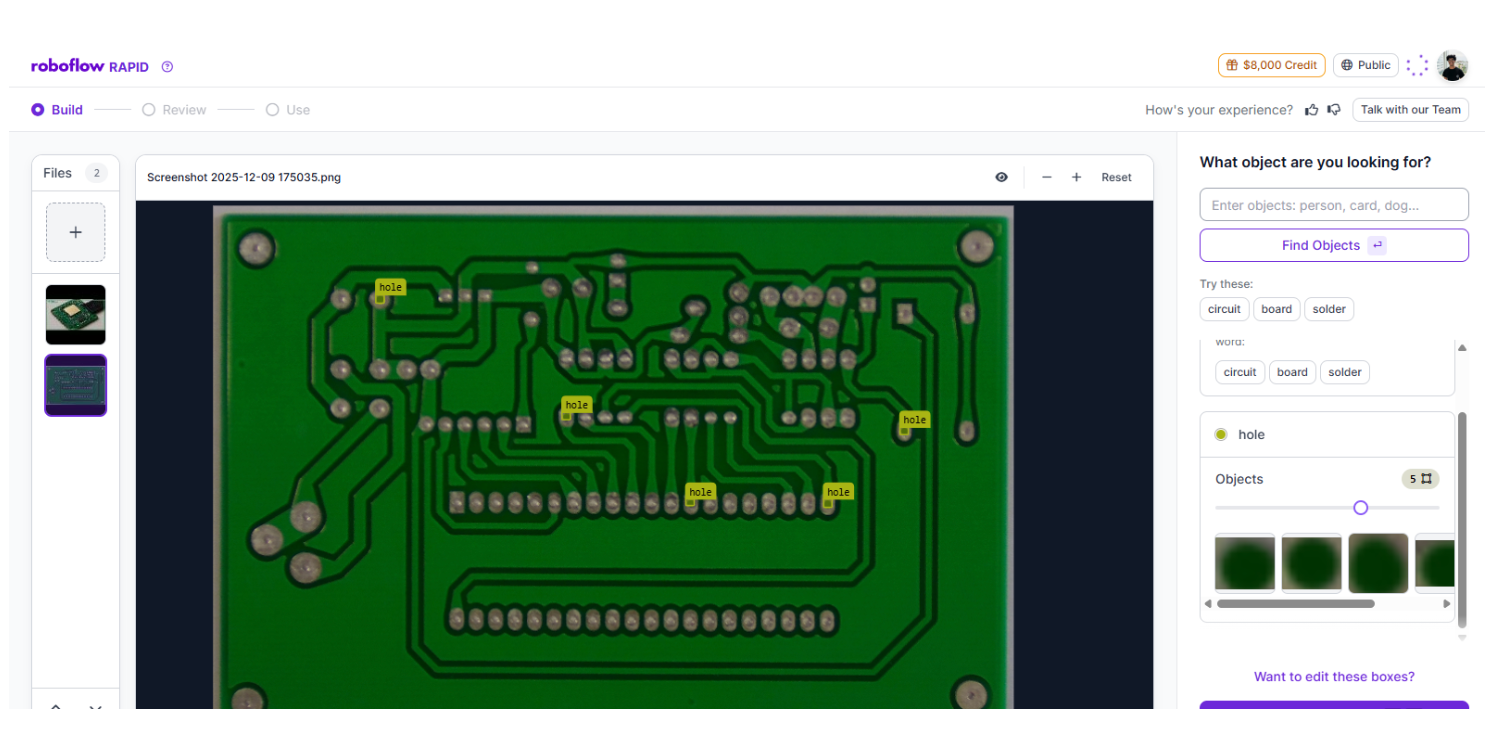

Defect detection is the automated process of identifying, localizing, and classifying manufacturing imperfections or deviations from quality standards using computer vision and deep learning. Unlike traditional manual inspection, modern defect detection systems capture high-resolution images of products at various manufacturing stages and employ AI algorithms to automatically identify anomalies invisible to the human eye.

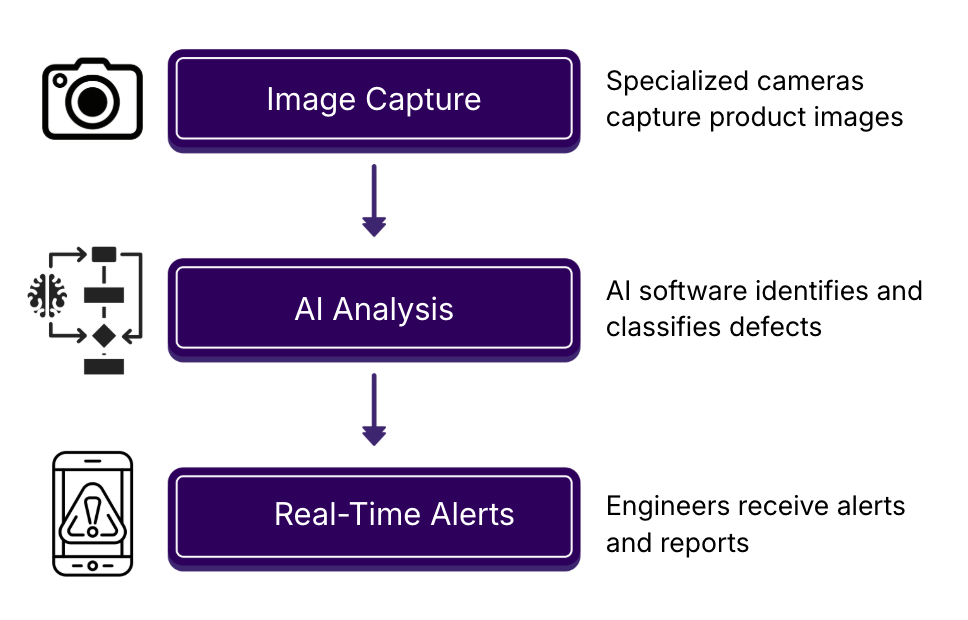

The process typically involves three stages:

- First, optical inspection systems capture detailed images of products using specialized cameras with controlled lighting.

- Second, AI software analyzes these images to identify and classify defects.

- Third, engineers receive real-time alerts and detailed reports, enabling them to quickly identify root causes and adjust manufacturing parameters to prevent future defects.

Modern defect detection extends beyond simple identification. It enables manufacturers to understand not just where a defect exists, but its precise shape, size, and location. This granular information transforms quality control from a binary pass/fail determination into a data-driven optimization tool that continuously improves production processes.

Evaluation Criteria for Manufacturing Defect Detection Models

Selecting the right defect detection algorithm requires understanding the specific demands of manufacturing environments. Here are the core criteria used to evaluate defect detection models for production deployment.

1. Accuracy Across Defect Types and Scales

Models should reliably detect diverse defect categories, from surface scratches and dimensional variations to subtle internal anomalies. Manufacturing environments present extreme variability: defects range from microscopic (sub-millimetre cracks) to large-scale (misaligned components). A robust model must achieve consistent accuracy across this spectrum, typically requiring mean Average Precision (mAP) of at least 75-80% on manufacturing-specific benchmarks.

2. Real-Time Performance on Production Lines

Inference speed is non-negotiable in manufacturing. Production lines operate continuously, often at speeds of 100+ units per minute. Defect detection systems must process images faster than products move through the inspection station, typically requiring 20-60 FPS depending on line speed and conveyor configuration. A model processing at 5 FPS creates bottlenecks; a model achieving 30+ FPS integrates seamlessly into existing workflows.

3. Precise Spatial Localization

Manufacturing quality control typically requires not just identifying that a defect exists, but understanding its exact location and boundaries. Engineers need to know whether a scratch is 2mm or 20mm long, whether it's on a critical surface, and its precise coordinates for automated remediation or sorting. Traditional bounding boxes provide insufficient detail for these decisions.

4. Robustness to Environmental Variability

Manufacturing environments present harsh conditions: inconsistent lighting, dust, reflective surfaces, product surface variations, and sensor noise. Models must maintain accuracy despite these real-world challenges without requiring extensive environmental calibration or proprietary preprocessing pipelines.

5. Quantization and Edge Deployment

Manufacturing facilities often prefer on-device processing for privacy, reduced latency, and offline operation. Models should maintain accuracy when quantized to INT8 or FP16 precision, enabling deployment on edge devices without cloud dependencies.
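To make the INT8 trade-off concrete, here is a minimal sketch of symmetric per-tensor quantization in plain Python. It is an illustration only: the weight values are made up, and real toolchains typically add calibration data and per-channel scales that this sketch omits.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to integer codes in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int8(codes, scale):
    """Map integer codes back to approximate float values."""
    return [c * scale for c in codes]


# Hypothetical weights, chosen only to show the rounding error.
weights = [0.823, -0.414, 0.057, -1.27, 0.0]
codes, scale = quantize_int8(weights)
restored = dequantize_int8(codes, scale)

# Worst-case rounding error is bounded by half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, round(max_error, 4))
```

The rounding error per value never exceeds half the scale, which is why models whose weights and activations span a narrow range tend to lose little accuracy at INT8.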

6. Scalability Across Product Variants

Modern factories produce multiple product variants on the same line. Detection models must either transfer well to new products or support rapid fine-tuning on minimal custom datasets without requiring complete retraining.

Traditional vs. Modern Defect Detection Approaches

Historical Methods: Rule-Based and Template Matching

For decades, manufacturing defect detection has relied on traditional computer vision techniques, which include edge detection algorithms that identify boundary changes, threshold-based segmentation that isolates defects from backgrounds, and template matching that compares current images to known defect libraries. These approaches were effective within narrow, controlled environments but struggled with the complexity of real-world applications.

Traditional methods required extensive manual tuning for each production line and product type. They were brittle; a slight change in lighting, camera angle, or product surface finish could cause complete detection failure. They also proved ineffective for novel defect types, as they relied on predefined templates and rules rather than learning generalizable patterns.

The Deep Learning Revolution

Beginning in the 2010s, deep learning transformed defect detection. Convolutional Neural Networks (CNNs) automatically learn features from image data rather than requiring manual feature engineering. Early CNN-based approaches like Faster R-CNN introduced object detection to manufacturing, enabling systems to locate defects more reliably than traditional methods.

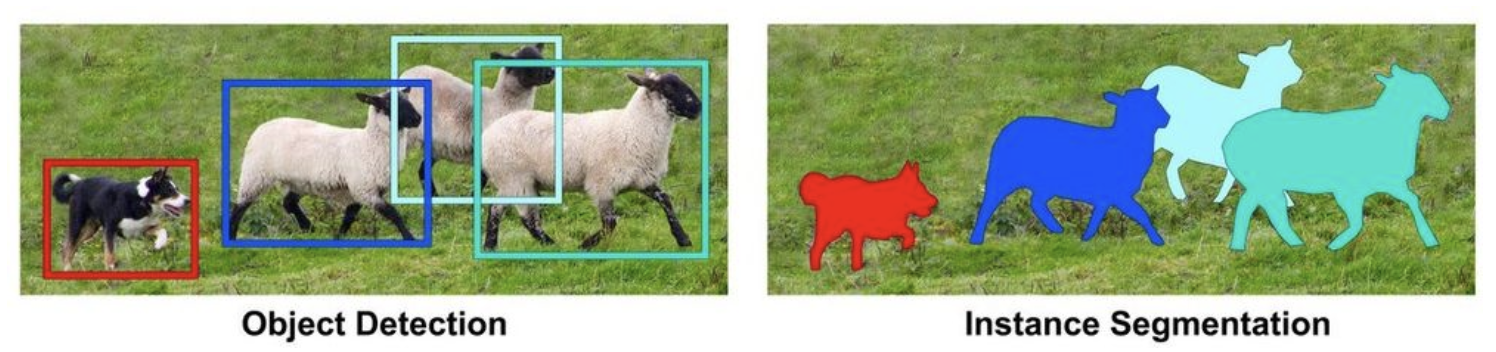

However, traditional object detection, which places bounding boxes around defects, proved insufficient for manufacturing quality control. A bounding box tells you approximately where a defect is, but doesn't reveal its precise shape or boundaries. This limitation became increasingly problematic as manufacturing precision increased.

The Instance Segmentation Advantage

Instance segmentation represents the natural evolution of defect detection for manufacturing. Rather than bounding boxes, instance segmentation generates pixel-level masks that precisely outline each defect. This approach provides the spatial precision manufacturing requires while maintaining the accuracy and generalization benefits of deep learning.

Research consistently demonstrates that instance segmentation outperforms object detection for manufacturing defect detection. A study comparing approaches found that training models to simultaneously perform defect detection and instance segmentation resulted in higher detection accuracy than training on detection alone. Another analysis in 2025 showed that combining YOLOv5's detection efficiency with Mask R-CNN's fine segmentation capability achieved mAP@[0.5:0.95] of 0.72, substantially outperforming either model independently.

The advantages of instance segmentation for manufacturing are substantial. Precise measurement capabilities enable automated systems to determine whether a defect exceeds tolerance thresholds without human judgment. Detailed shape information helps engineers understand defect morphology, enabling root cause analysis. Pixel-level masks provide data for statistical process control, tracking defect patterns over time. And most critically, instance segmentation reduces false rejections, a major cost in high-speed production, where even a 0.1% false reject rate can translate to thousands of dollars in waste.
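As a rough illustration of how a pixel-level mask turns into a tolerance decision, the sketch below measures a binary mask and compares its length to a limit. The function names, the `mm_per_pixel` calibration value, and the tolerance are all hypothetical; a real system would take the calibration from the camera setup and might measure along the defect's principal axis instead of an axis-aligned extent.

```python
def measure_defect(mask, mm_per_pixel):
    """Return area (mm^2) and axis-aligned extent (mm) of a binary defect mask."""
    pixels = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not pixels:
        return {"area_mm2": 0.0, "length_mm": 0.0, "width_mm": 0.0}
    rows = [r for r, _ in pixels]
    cols = [c for _, c in pixels]
    height = (max(rows) - min(rows) + 1) * mm_per_pixel
    width = (max(cols) - min(cols) + 1) * mm_per_pixel
    return {
        "area_mm2": len(pixels) * mm_per_pixel ** 2,
        "length_mm": max(height, width),  # longer side of the extent
        "width_mm": min(height, width),   # shorter side of the extent
    }


def exceeds_tolerance(measurement, max_length_mm):
    """True when the defect is longer than the allowed length."""
    return measurement["length_mm"] > max_length_mm


# Toy 4x6 mask of a short scratch; 1 marks defect pixels.
scratch = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 1, 0],
    [0, 0, 0, 1, 1, 0],
    [0, 0, 0, 0, 0, 0],
]
m = measure_defect(scratch, mm_per_pixel=0.5)
print(m, exceeds_tolerance(m, max_length_mm=1.5))
```

A bounding box alone would give the same extent here but not the area, which is what separates a thin scratch from a filled-in dent of the same footprint.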

How Defects Are Detected: The Technical Process

Modern manufacturing defect detection follows a well-established pipeline adapted from computer vision best practices.

1. Image Acquisition and Preprocessing

High-resolution cameras positioned at strategic points in the production line capture product images. These cameras are typically industrial-grade, capable of 4K+ resolution at production line speeds. Lighting systems, often ring lights or diffuse illumination, are carefully configured to highlight surface features and defects while minimizing reflections and shadows.

Preprocessing normalizes these images for consistent model input. This may include resizing to model-expected dimensions (typically 640x640 or 1024x1024 pixels), intensity normalization, and optional augmentation to simulate variations in lighting and product positioning. Modern defect detection systems minimize aggressive preprocessing, as excessive transformations can obscure subtle defects.
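The resizing and normalization steps can be sketched as follows. This assumes aspect-preserving "letterbox" resizing, which is a common choice but not something the text above specifies, and the helper names are hypothetical. Only the geometry is computed; actual pixel resampling would be done by an imaging library.

```python
def letterbox_params(src_w, src_h, dst=640):
    """Scale and padding needed to fit an image into a dst x dst square."""
    scale = min(dst / src_w, dst / src_h)
    new_w, new_h = round(src_w * scale), round(src_h * scale)
    pad_x, pad_y = (dst - new_w) // 2, (dst - new_h) // 2
    return scale, new_w, new_h, pad_x, pad_y


def normalize(pixels):
    """Map 8-bit intensities to the [0, 1] range."""
    return [p / 255.0 for p in pixels]


def to_source_coords(x, y, scale, pad_x, pad_y):
    """Map a point in the model input back to original image coordinates."""
    return (x - pad_x) / scale, (y - pad_y) / scale


# A 4K frame (3840x2160) resized for a 640x640 model input.
scale, new_w, new_h, pad_x, pad_y = letterbox_params(3840, 2160)
src_x, src_y = to_source_coords(320, 320, scale, pad_x, pad_y)
print(new_w, new_h, pad_x, pad_y, round(src_x), round(src_y))
```

In this example the frame shrinks by a factor of six, so a defect three pixels wide in the 4K image covers about half a pixel at model input. That is one reason aggressive downscaling can hide subtle defects, and why tiling or a larger input size is sometimes preferred.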

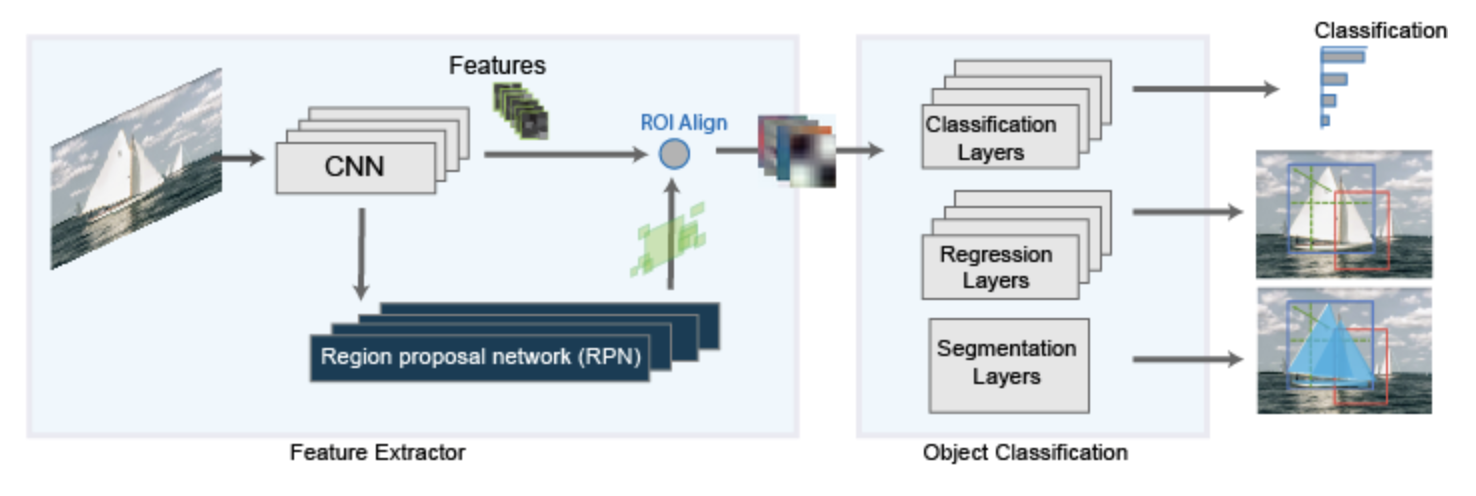

2. Feature Extraction and Spatial Understanding

The model backbone, typically a CNN or transformer, processes the preprocessed image to extract hierarchical features. Early layers capture low-level details (edges, textures), while deeper layers learn semantic information (defect patterns, material characteristics). The backbone produces feature maps at multiple scales, enabling the model to capture both microscopic defects and large-scale anomalies.

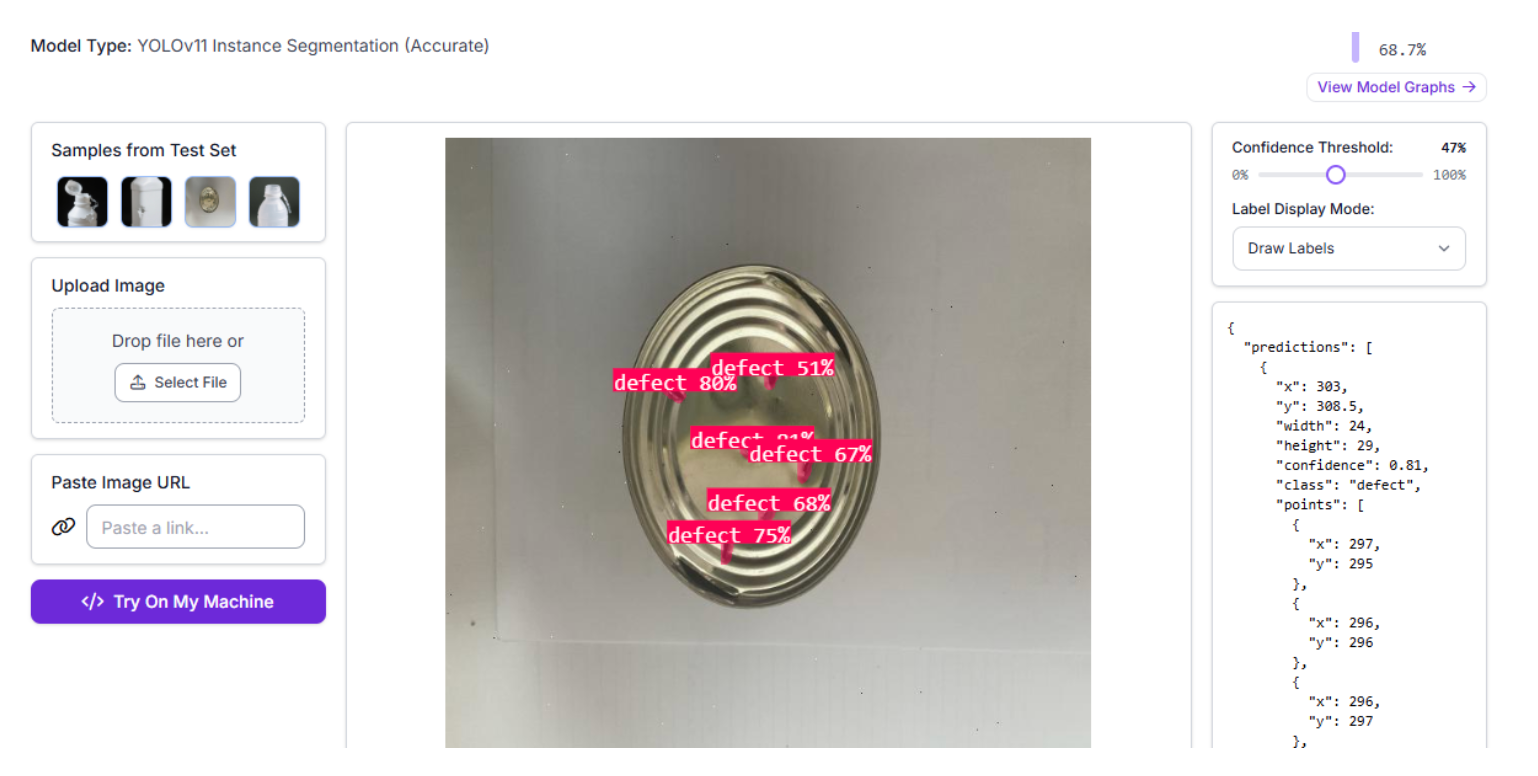

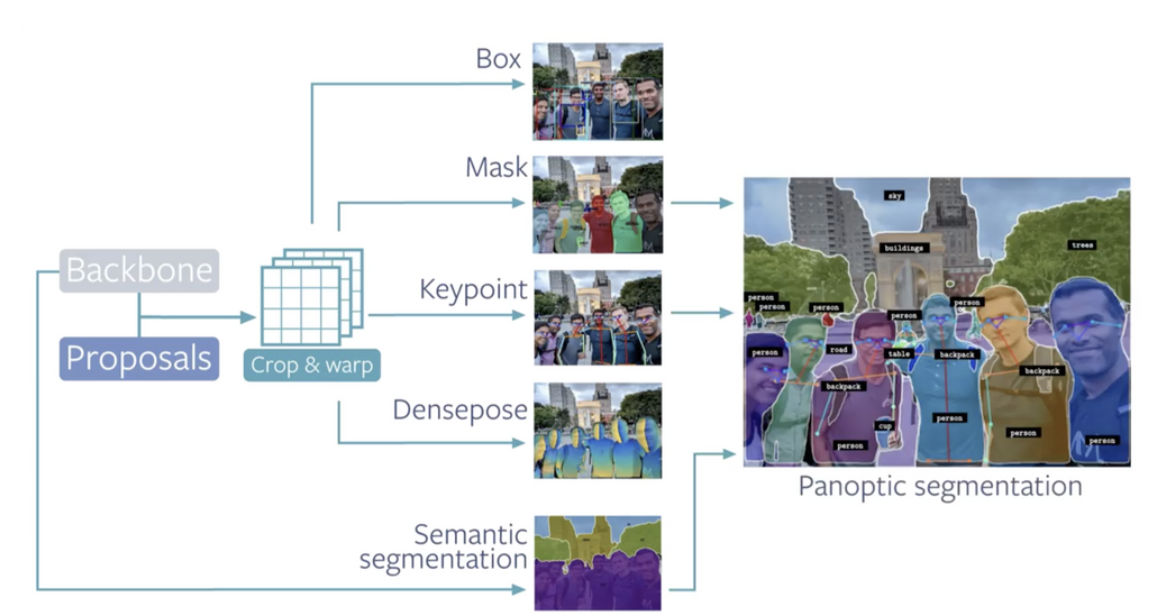

3. Instance Segmentation: Detection and Masking

For instance segmentation models, this multiscale feature representation flows to two parallel heads. The detection head predicts bounding boxes and class probabilities for each potential defect, localizing regions of interest. Simultaneously, the mask head generates pixel-level segmentation masks for each detected defect, precisely outlining its boundaries.

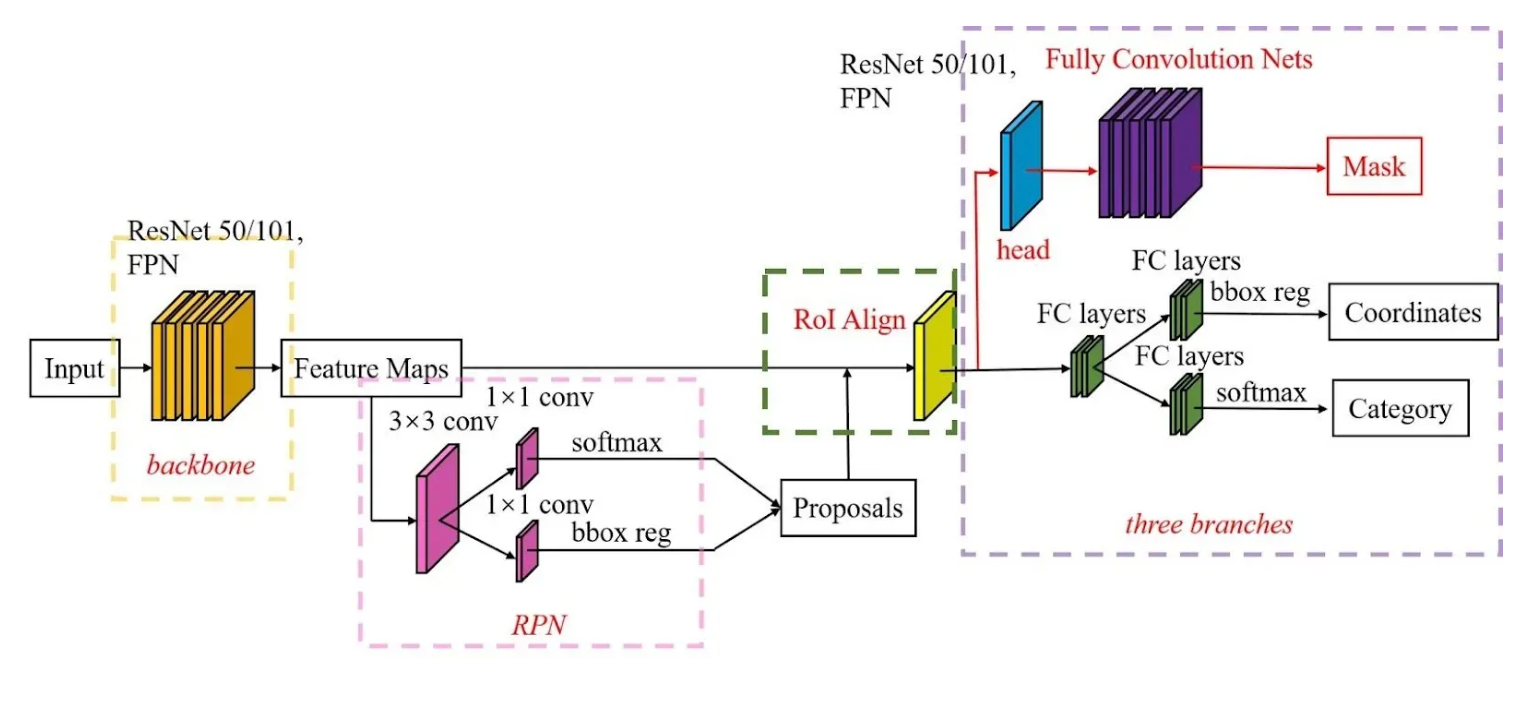

Modern architectures like Mask R-CNN use a Region of Interest (RoI) Align operation to efficiently crop features corresponding to each detected region, then apply convolution operations to generate high-resolution segmentation masks. This two-stage approach, first detecting regions, then precisely segmenting them, balances speed and accuracy effectively.

4. Post-Processing and Decision Logic

Raw model outputs include overlapping predictions and uncertain detections. Post-processing applies Non-Maximum Suppression (NMS) or more sophisticated algorithms to eliminate duplicate predictions, then applies confidence thresholds. A defect prediction with 60% confidence might require human review, while 95% confidence triggers automatic rejection or remediation routing.
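A minimal sketch of this step is shown below: greedy, class-agnostic NMS followed by confidence-based routing. The 0.60 and 0.95 thresholds mirror the example figures above, while the "ignore" outcome for lower scores, the IoU threshold of 0.5, and the sample detections are assumptions made for illustration.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(detections, iou_threshold=0.5):
    """Keep the highest-scoring box among heavily overlapping predictions."""
    kept = []
    for det in sorted(detections, key=lambda d: d["score"], reverse=True):
        if all(iou(det["box"], k["box"]) <= iou_threshold for k in kept):
            kept.append(det)
    return kept


def route(score, review_at=0.60, reject_at=0.95):
    """Turn a confidence score into a line action."""
    if score >= reject_at:
        return "auto_reject"
    if score >= review_at:
        return "human_review"
    return "ignore"


# Hypothetical raw predictions; the first two overlap heavily.
raw = [
    {"box": (10, 10, 50, 50), "score": 0.97},
    {"box": (12, 12, 52, 52), "score": 0.80},
    {"box": (100, 100, 120, 130), "score": 0.62},
    {"box": (200, 200, 210, 210), "score": 0.30},
]
kept = nms(raw)
decisions = [route(d["score"]) for d in kept]
print(decisions)
```

Production systems often run NMS per class and on masks instead of boxes, and they tune the thresholds from false reject and escape costs instead of fixing them up front.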

Many production systems implement human-in-the-loop feedback, where operators review borderline cases and provide feedback that continuously improves model calibration. This collaborative approach combines machine efficiency with human domain expertise.

Explore the Best Defect Detection Algorithms for Manufacturing

Here's our comprehensive analysis of today's leading defect detection algorithms optimized for manufacturing.

1. RF-DETR Segmentation: The Domain Adaptation Leader

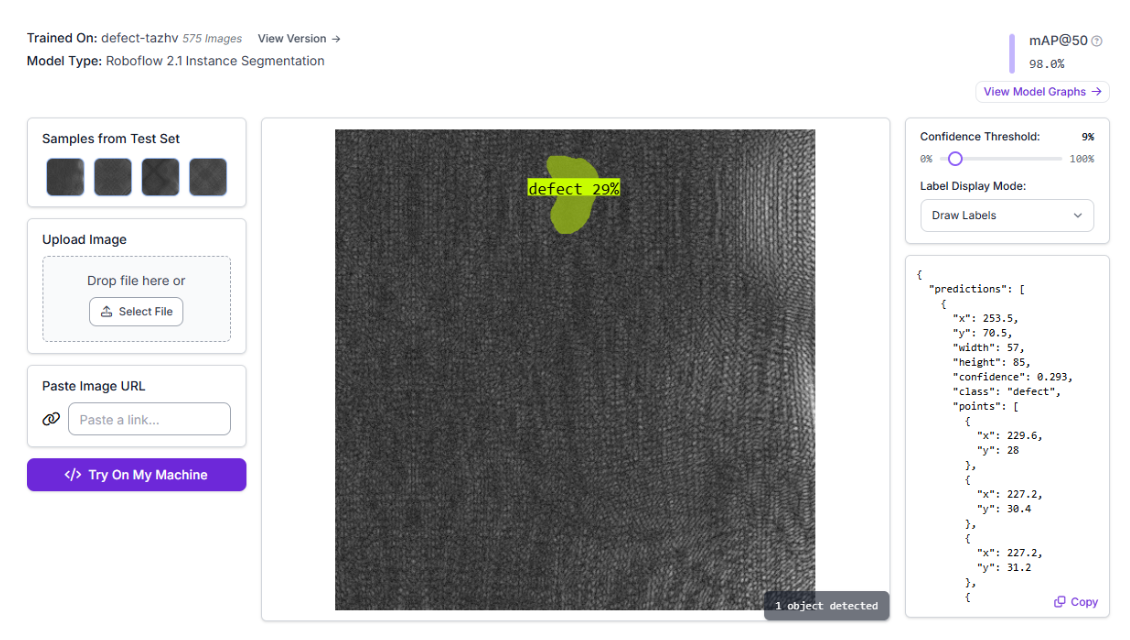

RF-DETR, Roboflow's state-of-the-art detection transformer with integrated instance segmentation (released in preview in 2025), represents the frontier of manufacturing defect detection. Built on the DINOv2 vision backbone, RF-DETR combines the domain adaptability required for dynamic manufacturing environments with the real-time speed production lines demand.

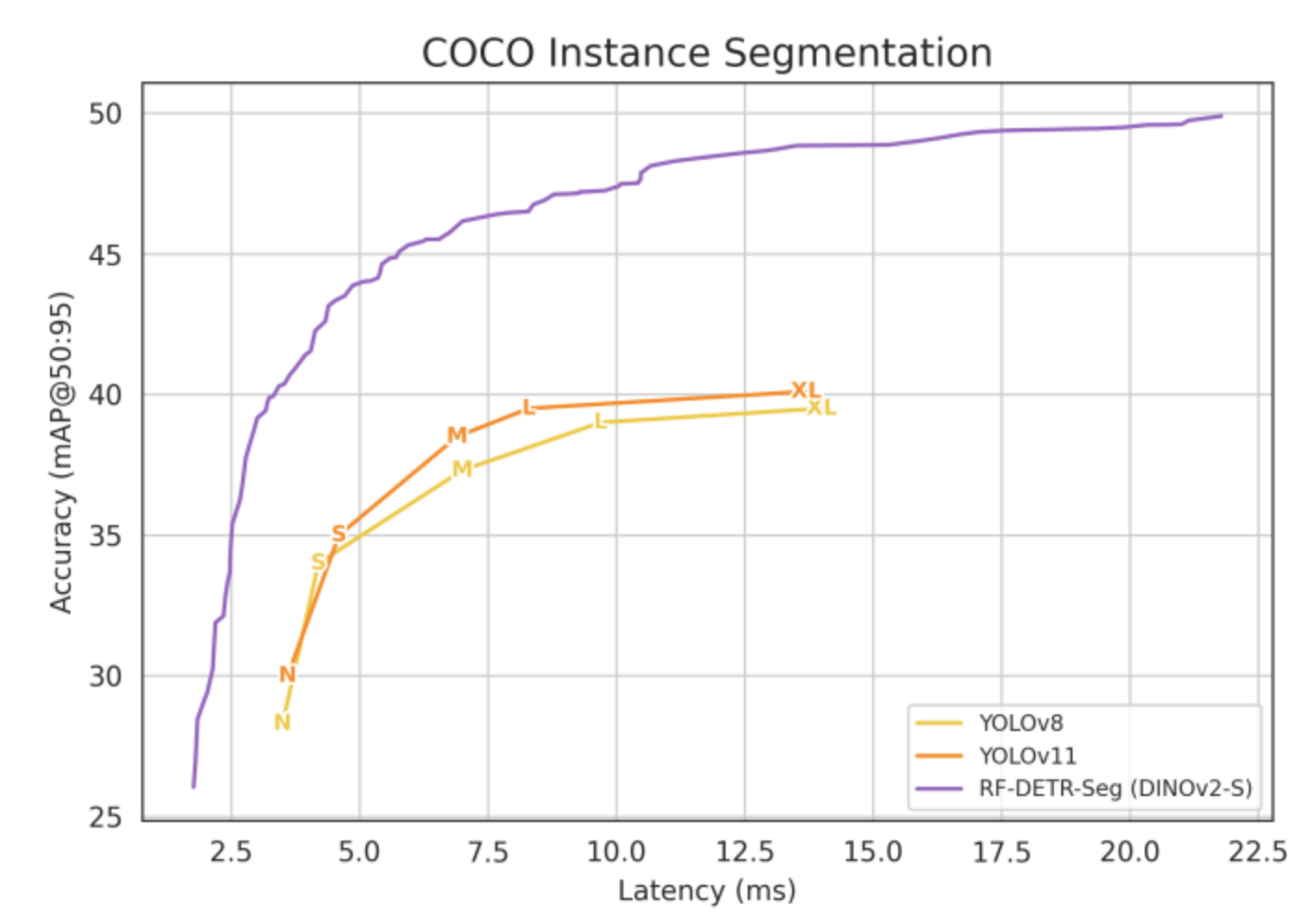

What distinguishes RF-DETR for manufacturing is its exceptional domain adaptation capability. Roboflow's official benchmarks page states that RF-DETR is the first real-time model to exceed 60 AP on the Microsoft COCO benchmark and achieves state-of-the-art performance on the RF100-VL domain-adaptation benchmark. This is critical in manufacturing, where products constantly evolve and defect types change.

Key Strengths:

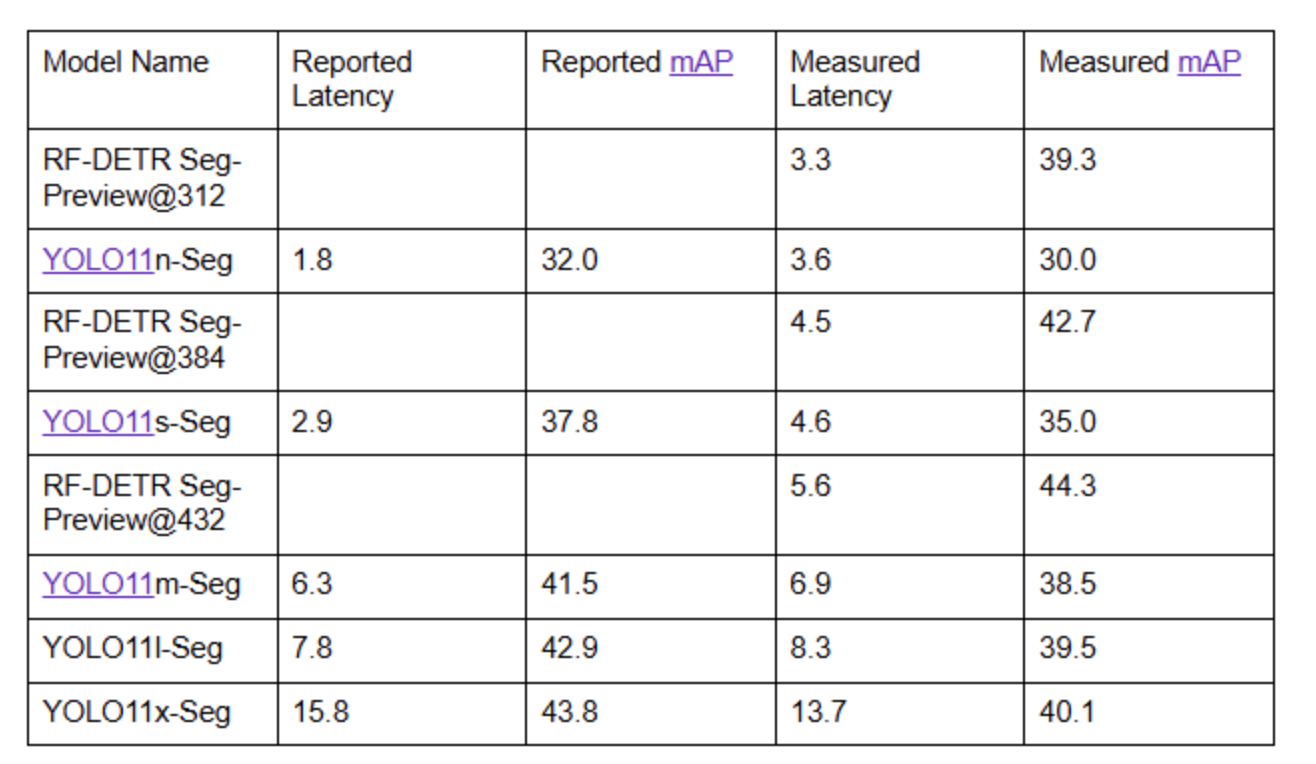

- Unprecedented Speed-Accuracy Balance: RF-DETR Segmentation is 3x faster and more accurate than the largest YOLO11 on the Microsoft COCO Segmentation benchmark, achieving over 30 FPS with 44.3 mAP@0.5:0.95 on a T4 GPU

- Domain Generalization: Excels at transferring to new manufacturing domains without extensive retraining, a crucial advantage when products or suppliers change

- End-to-End Latency: 5.6ms latency translates to 170+ FPS on T4 GPUs, enabling seamless production line integration

- Transformer Efficiency: Despite using transformers, it achieves real-time performance through architectural innovations specifically designed for on-device deployment

- Quantization-Friendly: Maintains strong accuracy when quantized to INT8 or FP16 for edge deployment

- Multiple Model Sizes: Nano, Small, Medium, and Large variants enable precise computational budgeting

Why Domain Adaptation Matters for Manufacturing:

Most manufacturing defect detection systems must adapt quickly to new products and defect types. A semiconductor manufacturer transitioning from one product family to another needs a detector that transfers well rather than requiring complete retraining. RF-DETR's domain adaptation capabilities mean that a model pre-trained on general manufacturing defects learns the specific patterns of a new product family with minimal additional training data, a substantial advantage in dynamic manufacturing environments.

Performance Benchmarks:

On the Microsoft COCO Segmentation benchmark, RF-DETR Segmentation achieves 44.3 mAP@50:95, a state-of-the-art result for real-time segmentation models. When measured with end-to-end latency including Non-Maximum Suppression (NMS) and mask generation, RF-DETR Seg-Preview@432 outperforms the reported mAP for YOLO11x-Seg while being nearly 3x faster.

Deployment Scenario: Ideal for manufacturers requiring rapid adaptation across product families, those prioritizing domain generalization over absolute speed, facilities with GPU infrastructure, and applications where model flexibility is valued.

2. Mask R-CNN: The Precision Standard

Mask R-CNN remains the gold standard for precise manufacturing defect detection, particularly for applications where accuracy is paramount. The architecture's two-stage design, region proposal followed by refinement, naturally accommodates the instance segmentation requirement critical for quality control.

Recent 2025 implementations demonstrate exceptional performance. PCB defect detection using Mask R-CNN via the Detectron2 framework achieved high precision for defects like mouse bites and missing holes, with segmentation mAP of 69.32 at IoU=0.50. The model excels at handling shape variations, complex structures, and occlusions, precisely the challenges manufacturing environments present.

Key Strengths:

- Pixel-Level Precision: Generates detailed segmentation masks enabling precise defect measurement and characterization

- Mature Ecosystem: Detectron2 provides production-ready implementations with extensive optimization and deployment support

- Proven Track Record: Years of real-world manufacturing deployment have established best practices and optimization techniques

- Flexible Backbones: Supports ResNet-50, ResNet-101, and other proven architectures for accuracy-speed tradeoffs

- Feature Pyramid Network (FPN): Built-in multi-scale feature extraction for handling defects at various scales

Mask R-CNN Architecture Components:

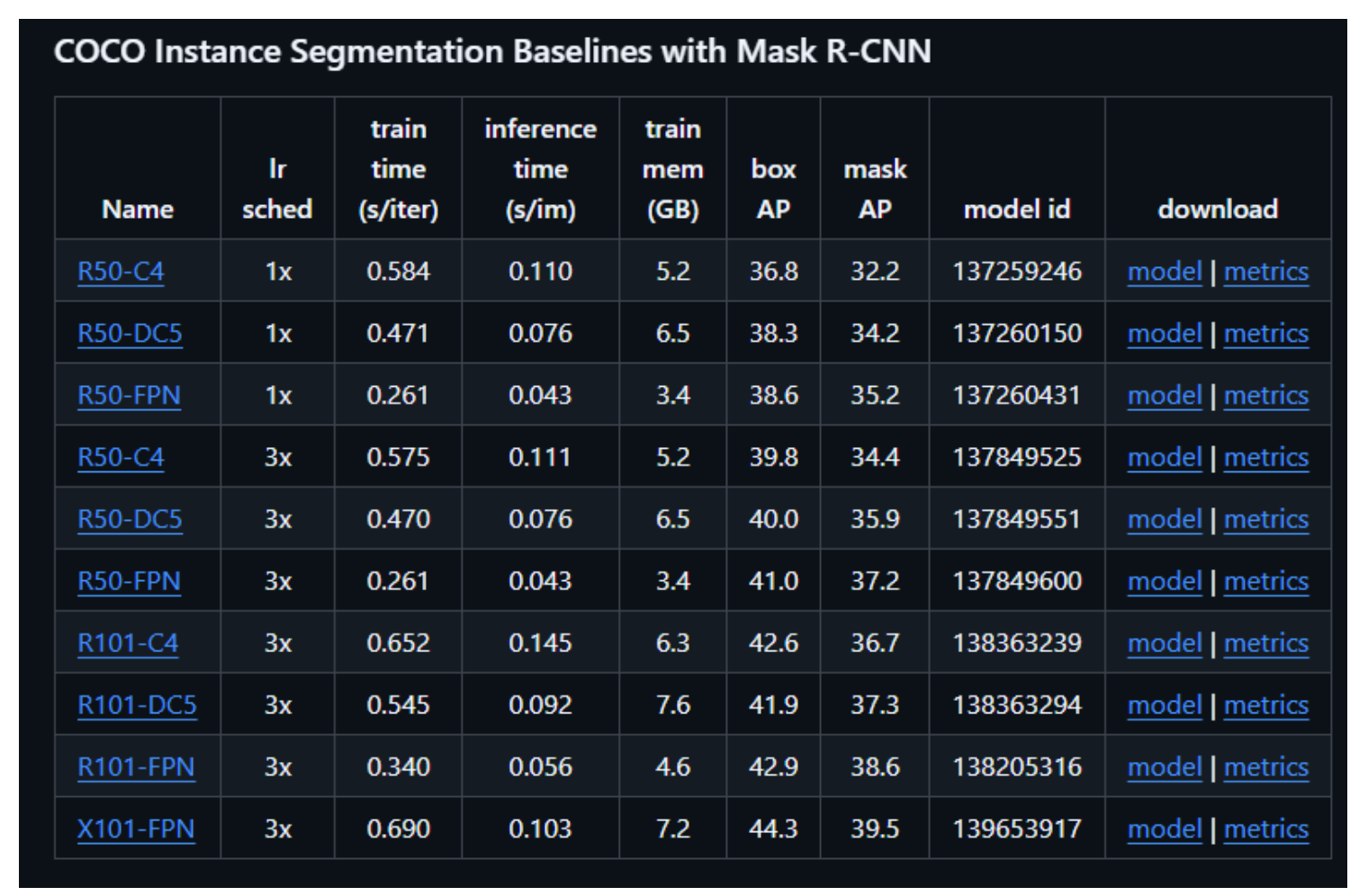

The Mask R-CNN architecture builds upon Faster R-CNN with the addition of a mask head branch for pixel-wise segmentation. ROIAlign replaces traditional ROI pooling, using bilinear interpolation to preserve spatial information, leading to improved segmentation accuracy for small objects.
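The bilinear interpolation at the heart of ROIAlign can be sketched in a few lines. This shows only the sampling of one fractional location on a single-channel feature map; the full operation averages several such samples per output bin, and the function name and toy values here are illustrative.

```python
import math


def bilinear_sample(feature_map, y, x):
    """Sample a 2D feature map at a fractional (y, x) location."""
    h, w = len(feature_map), len(feature_map[0])
    # Clamp the location to the valid range of the map.
    y = min(max(y, 0.0), h - 1)
    x = min(max(x, 0.0), w - 1)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    # Interpolate along x on the two neighbouring rows, then along y.
    top = feature_map[y0][x0] * (1 - dx) + feature_map[y0][x1] * dx
    bottom = feature_map[y1][x0] * (1 - dx) + feature_map[y1][x1] * dx
    return top * (1 - dy) + bottom * dy


feature_map = [
    [0.0, 10.0],
    [20.0, 30.0],
]
center = bilinear_sample(feature_map, 0.5, 0.5)
print(center)
```

Snapping the same location to the nearest lower grid cell, as quantized ROI pooling effectively does, would read 0.0 instead of the interpolated 15.0. For a defect only a few feature cells wide, that kind of misalignment is a large fraction of its size.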

Considerations:

Mask R-CNN is computationally intensive, typically requiring GPU acceleration. Inference speeds of 1-3 FPS are common for high-resolution images, creating throughput constraints on high-speed production lines unless multiple GPUs are deployed. The architecture requires more training data than single-stage detectors, and fine-tuning requires careful hyperparameter tuning for optimal results.

Deployment Scenario: Ideal for batch inspection, offline quality analysis, or production lines where inspection windows of roughly 0.3-1 second per image (matching 1-3 FPS throughput) are acceptable. Commonly used in semiconductor, PCB, and precision optics manufacturing.

3. SAM 3: The Zero-Shot Concept Segmentation Pioneer

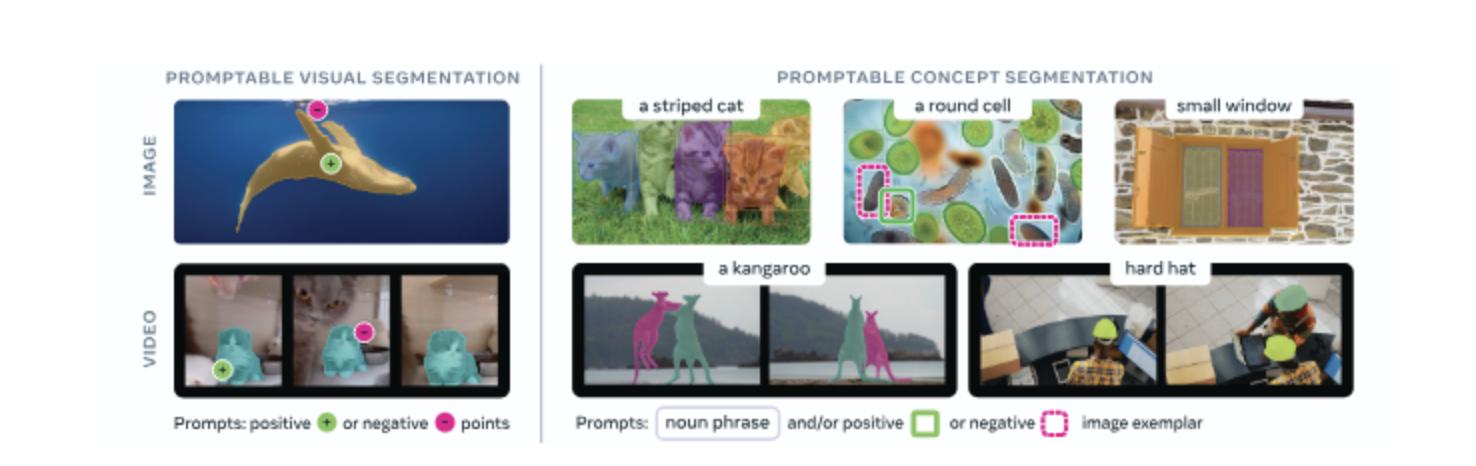

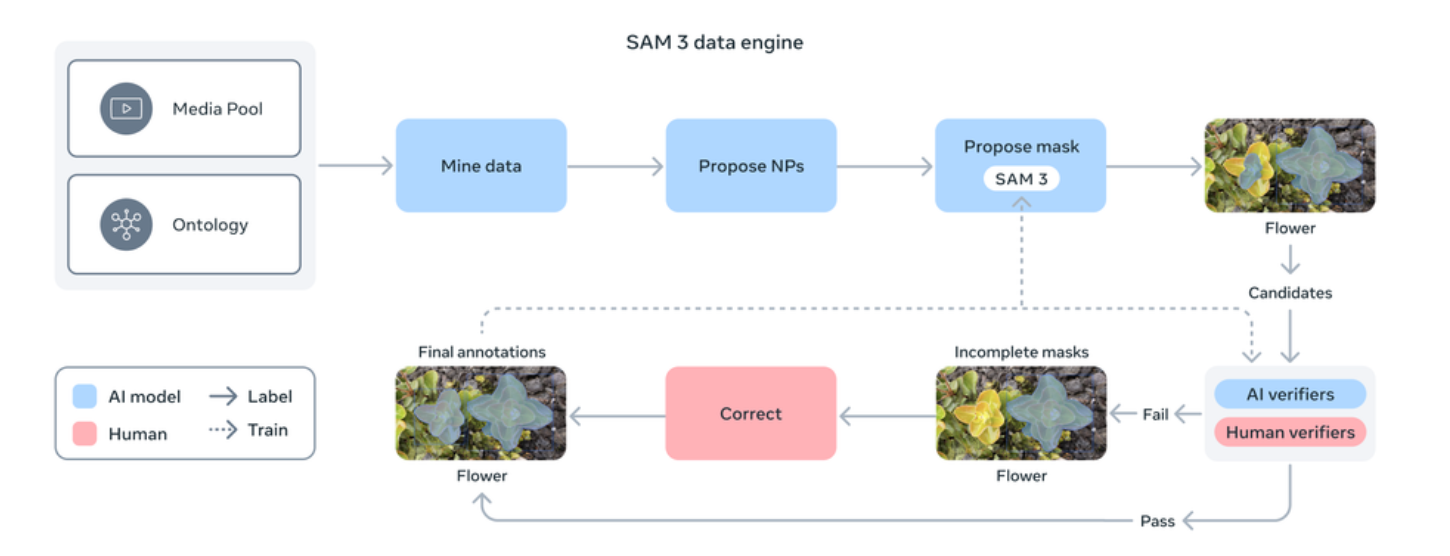

Segment Anything Model 3 (SAM 3), released by Meta in November 2025, introduces a paradigm shift for manufacturing defect detection through Promptable Concept Segmentation (PCS). Unlike traditional models trained on fixed defect classes, SAM 3 enables open-vocabulary segmentation using natural language prompts, fundamentally changing how manufacturers can adapt detection systems to new products and defect types without extensive retraining.

SAM 3 represents a unified model for detection, segmentation, and tracking across both images and videos, addressing a critical manufacturing challenge: the constant evolution of product lines and defect patterns. Rather than retraining for each new product or defect type, engineers can simply describe the defect in natural language, and SAM 3 automatically detects all instances matching that description.

Key Strengths:

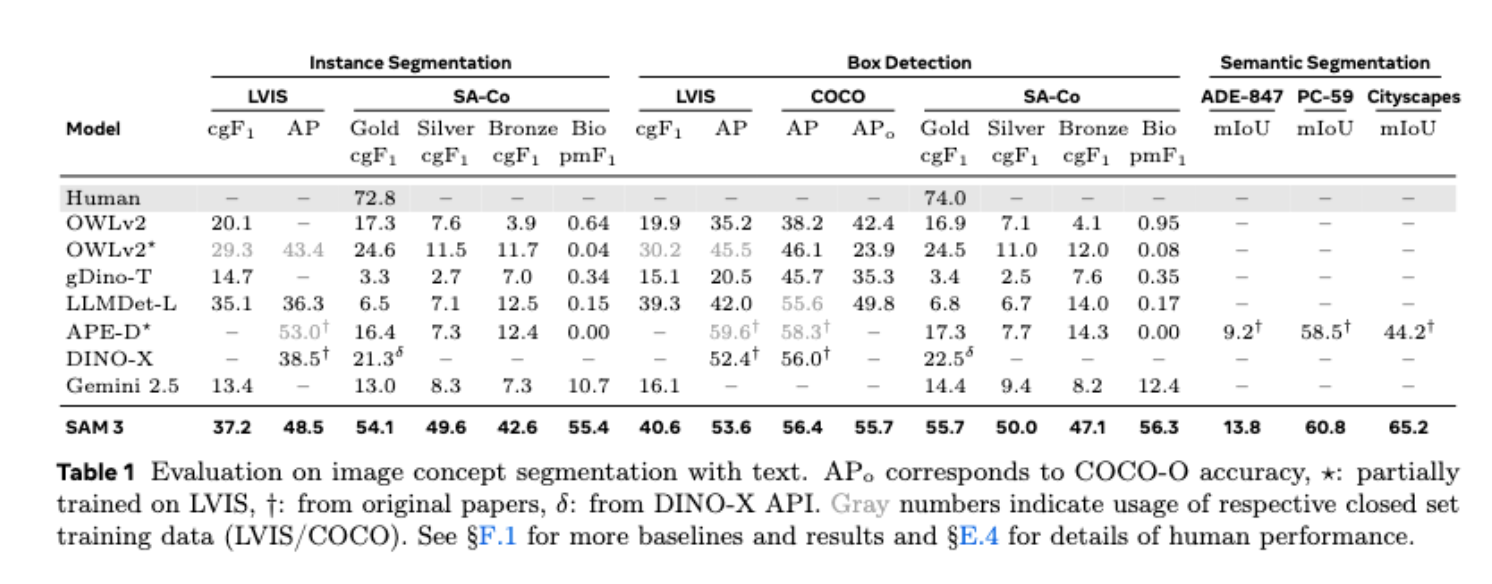

- Near-Human Performance: Achieves 88% of human lower bound performance on the SA-Co/Gold benchmark, demonstrating reliability comparable to expert human inspection

- Comprehensive Pixel-Level Segmentation: Generates exhaustive instance masks for all matching concepts in a single inference pass, not just one object per prompt

- Interactive Refinement: Users can provide positive and negative exemplars to refine detections on the fly (a reported +18.6 CGF1 improvement after 3 exemplar prompts), essential for handling novel or ambiguous defect types

- Unified Image-Video Operation: A single model handles both static images and video streams, enabling real-time tracking of defects across production line sequences

- Versatile Prompt Types: Supports text prompts, visual exemplars (positive/negative examples), points, boxes, and masks, enabling human-in-the-loop refinement workflows ideal for manufacturing quality control

- Exceptional Zero-Shot Performance: Achieves 47.0 LVIS zero-shot mask AP (22% improvement over the previous best of 38.5) and 2× better performance on the SA-Co benchmark for concept segmentation, demonstrating unprecedented generalization across diverse defect types

- Open-Vocabulary Concept Prompting: Query for any defect type using text descriptions such as "scratches," "dents," or "missing components," or more complex descriptions like "surface cracks longer than 2mm," without predefined training

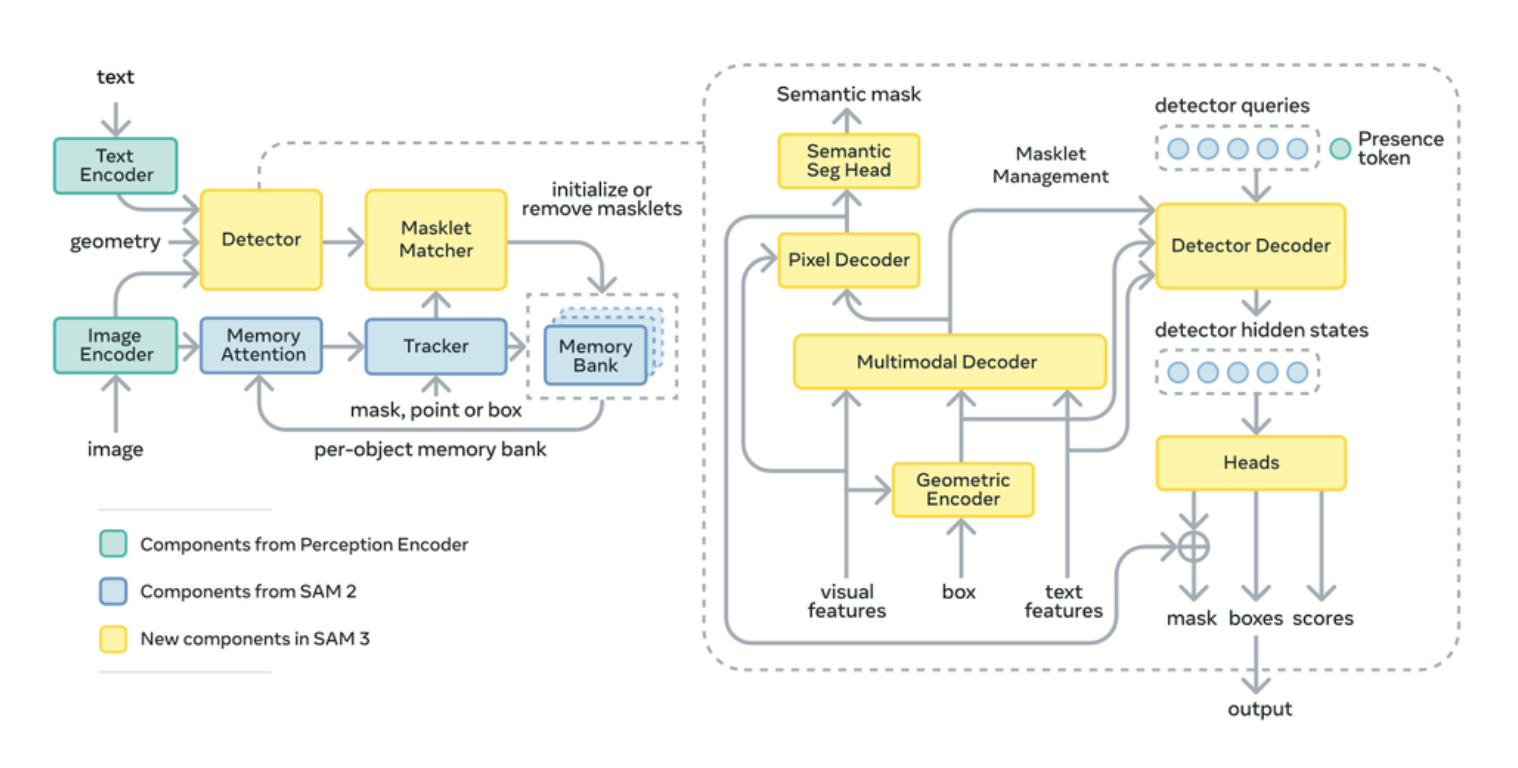

SAM 3 Architecture and Capabilities:

SAM 3 uses a dual encoder-decoder transformer architecture with 840–850M parameters that enables both Promptable Concept Segmentation (PCS) for text and exemplar prompts and Promptable Visual Segmentation (PVS) for traditional point/box/mask prompts. The architecture includes three key innovations: a presence head that predicts whether a concept exists globally in the image, a detector for localization, and a tracker for video consistency. This decoupled design balances efficiency and precision. Concepts can be checked for presence without localization overhead, then only promising regions are processed for detailed mask generation.

Inference Performance:

SAM 3 operates at approximately 30 milliseconds per image on an NVIDIA H200 GPU while handling 100+ detected objects, though this represents server-scale performance. For video, the model sustains near real-time performance for approximately five concurrent objects. While slower than YOLO11 (which achieves 2-3 ms inference), SAM 3 is substantially faster than many traditional two-stage instance segmentation models, such as Mask R-CNN, which often run at only a few frames per second on comparable hardware.

The model requires 16 GB+ GPU memory for typical deployment, fitting comfortably on modern GPUs and using less VRAM than SAM 2. For constrained environments, SAM 3 can label training data that is then used to train smaller, specialized models, combining SAM 3's zero-shot flexibility with edge device efficiency.

Manufacturing-Specific Advantages:

The open-vocabulary nature addresses a fundamental challenge in manufacturing. When a factory transitions from producing circuit boards to producing automotive components, traditional models require complete retraining. SAM 3 simply requires describing the new defect types: “missing solder joints,” “misaligned connectors,” “bent pins.” This dramatically reduces the time-to-deployment for new products from weeks (requiring data collection, labelling, and retraining) to hours.

The interactive refinement capability supports human-in-the-loop workflows that are particularly valuable in manufacturing. Quality engineers can provide a few examples of defects they care about ("these are the types of scratches that require rejection"). SAM 3 uses these exemplars as prompts, automatically detecting similar defects across the production line.

For facilities requiring regulatory traceability, SAM 3's ability to generate comprehensive pixel-level masks for all defect instances provides detailed records suitable for compliance documentation and root cause analysis.

Performance Considerations:

SAM 3's primary limitation for high-speed production lines is inference latency. At 30ms per image (measured on an H200 GPU), it reaches approximately 33 FPS, sufficient for many production scenarios but below the 50-100+ FPS achieved by optimized YOLO11 implementations. However, this comparison requires context: SAM 3 achieves this performance without task-specific training, while YOLO11 requires labelled training data for each defect type and product variant.

The model's server-scale size (840–850M parameters) makes edge deployment challenging without quantization or distillation. However, research has demonstrated successful adaptation (SAM3-UNet), reducing memory requirements to under 6 GB for specialized manufacturing tasks.

Deployment Scenario: Ideal for manufacturers frequently introducing new products or defect types, facilities without extensive labelled datasets, research and development environments requiring rapid iteration, and organizations implementing human-in-the-loop quality control workflows. Particularly valuable for semiconductor fabs, aerospace component manufacturers, and precision optics producers, where defect types are numerous and constantly evolving. Less suitable for high-speed (100+ FPS) production lines without hardware acceleration infrastructure, though emerging on-device optimization techniques are improving edge deployment possibilities.

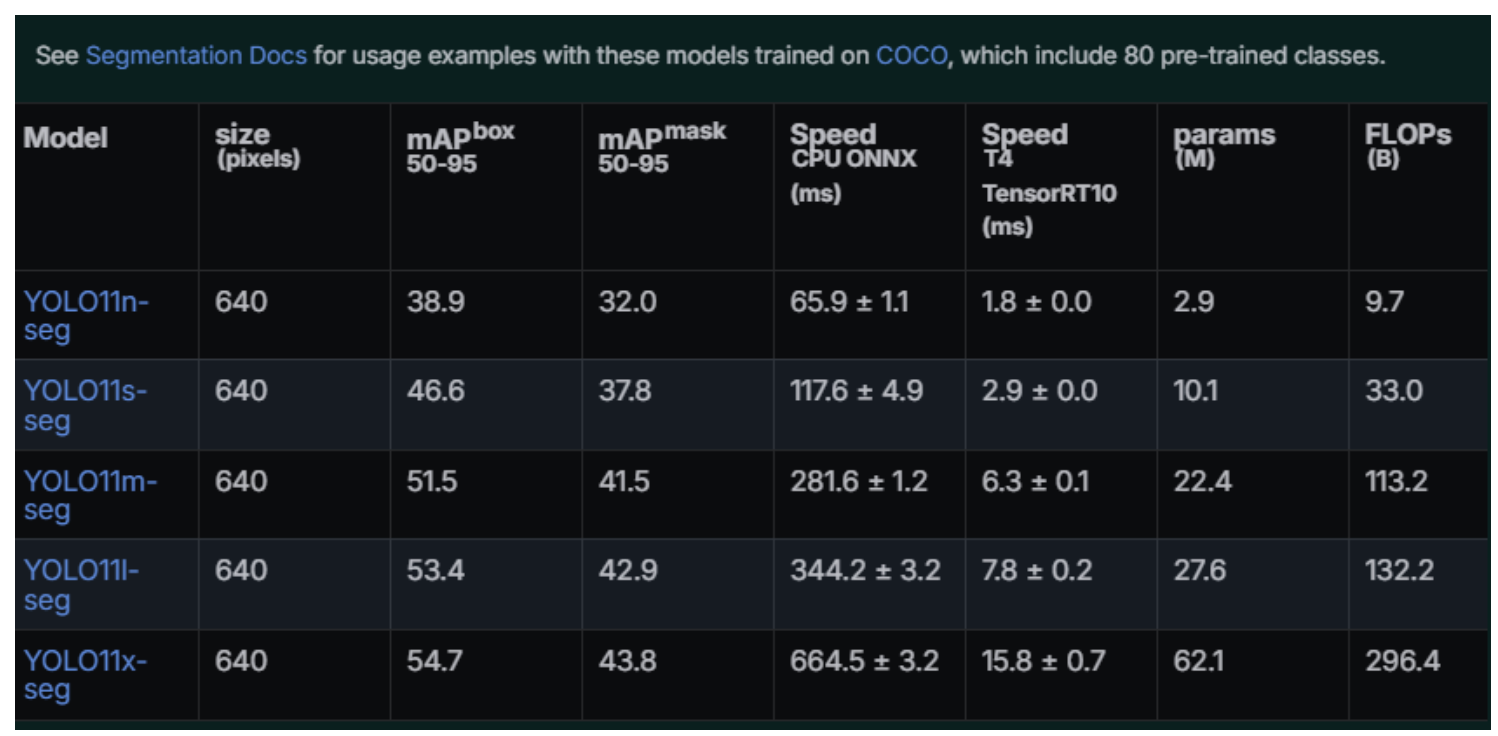

4. YOLO11 with Instance Segmentation (YOLO11-seg)

YOLO11, released in October 2024, represents the latest single-stage approach to combined detection and instance segmentation. The model's single-stage architecture, which performs detection and segmentation in one forward pass, delivers high speed while maintaining competitive accuracy with multi-stage approaches. YOLO11m uses 22% fewer parameters than YOLOv8m while delivering higher mean Average Precision, making it efficient for edge deployment. The architecture includes optimized convolution designs and streamlined feature extraction, enabling real-time segmentation on standard hardware.

Key Strengths:

- Real-Time Speed: Runs in the low single-digit millisecond range per image on NVIDIA T4 GPUs at 640 px (TensorRT), enabling high‑FPS real-time operation for production lines.

- Multiple Size Variants: Nano through XLarge models allow precise speed-accuracy tuning for your specific requirements (YOLO11n for embedded systems, YOLO11x for maximum accuracy)

- Single-Stage Efficiency: Combined detection and segmentation in one forward pass reduces latency compared to two-stage approaches

- Excellent Fine-Tuning: Supports rapid adaptation to new defect types with minimal custom training data

- Production Maturity: Extensive ecosystem support, deployment frameworks, and real-world case studies

Performance Metrics:

Implementation Example:

YOLO11-seg can be fine-tuned on manufacturing defect datasets with a straightforward configuration. YOLO-family detectors can also be paired with a two-stage segmenter: a PCB defect detection implementation combining YOLOv5 with Mask R-CNN (the Y-MaskNet architecture) achieved mAP@[0.5:0.95] of 0.72, surpassing either model independently.
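A fine-tuning run might look roughly like the sketch below, which uses the Ultralytics Python package. The dataset file `pcb_defects.yaml` and the hyperparameter values are hypothetical placeholders, and the training call is left commented out because it needs the `ultralytics` package, pretrained weights, and a labelled dataset.

```python
def build_train_args(data_yaml, epochs=100, imgsz=640, batch=16):
    """Collect the training settings passed to the Ultralytics trainer."""
    return {"data": data_yaml, "epochs": epochs, "imgsz": imgsz, "batch": batch}


def fine_tune(weights="yolo11n-seg.pt", **train_args):
    """Fine-tune a pretrained YOLO11 segmentation checkpoint."""
    # Imported lazily so the configuration helper works without the package.
    from ultralytics import YOLO

    model = YOLO(weights)      # load pretrained segmentation weights
    model.train(**train_args)  # train on the custom defect dataset
    return model


# "pcb_defects.yaml" is a hypothetical dataset config listing image paths
# and defect class names in the Ultralytics dataset format.
args = build_train_args("pcb_defects.yaml", epochs=50)
print(args)
# model = fine_tune("yolo11n-seg.pt", **args)  # uncomment to start training
```

Starting from the nano checkpoint keeps iteration fast; swapping in a larger variant is a one-argument change once the dataset and labels are validated.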

Considerations:

While faster than Mask R-CNN, YOLO11-seg requires tuning for manufacturing-specific edge cases. Because YOLO11 uses an anchor-free detection head, small defects are usually addressed through higher input resolution or multi-scale training augmentation rather than anchor tuning. The single-stage architecture, while fast, occasionally produces less precisely refined segmentation masks compared to multi-stage approaches on particularly challenging defect types.

Deployment Scenario: Best for moderate-to-high-speed production lines (10+ FPS requirement), where real-time feedback and high throughput are essential. Widely deployed in electronics assembly, quality control robotics, and automated sorting systems.

5. Detectron2 with Advanced Backbones

Detectron2, Meta's comprehensive computer vision framework, provides maximum flexibility for manufacturing defect detection. Rather than a single model, Detectron2 is a modular framework supporting multiple architectures: Mask R-CNN, Cascade R-CNN, and others, each with numerous backbone options.

This flexibility enables researchers and engineers to systematically evaluate different architectures and find optimal configurations for specific defect detection tasks. PCB defect detection implementations using Detectron2 have demonstrated how model selection impacts performance for different defect types.

Key Strengths:

- Modular Architecture: Supports rapid experimentation with different detectors, backbones, and training strategies

- Extensive Model Zoo: Pre-trained models for various tasks enable transfer learning and rapid prototyping

- Research-Oriented Design: Built for flexibility, supporting custom loss functions, augmentation strategies, and architectural innovations

- Production Deployment Support: Despite research focus, includes production-ready deployment tools through D2Go (Detectron2Go)

Framework Advantages for Manufacturing:

Detectron2 enables systematic model selection. Rather than choosing a fixed architecture, teams can train multiple Detectron2-based models on their specific defect dataset, evaluate performance across defect categories, and select the best-performing configuration. This data-driven approach is particularly valuable in manufacturing, where defect characteristics vary significantly across product types and production stages.

Considerations:

Detectron2's flexibility requires more implementation expertise than turn-key solutions. Training and deployment require more careful configuration and hyperparameter tuning. The framework prioritizes research flexibility over simplicity, making it less suitable for teams without deep computer vision expertise.

Deployment Scenario: Best for research laboratories, advanced manufacturing facilities with dedicated ML teams, and scenarios where model customization and optimization are justified by critical quality requirements.

Critical Considerations for Effective Use of Instance Segmentation Models for Defect Detection

Several factors distinguish manufacturing defect detection from other computer vision applications.

Lighting and Environmental Control

Manufacturing environments are challenging: dust, reflections, inconsistent lighting, and surface contamination obscure details. Models must be trained on representative production imagery, not controlled laboratory conditions. Consider environmental augmentation during training, simulating dust, reflections, and lighting variations, to improve robustness.

Small Defect Detection

Manufacturing defects range from microscopic (sub-millimetre cracks) to large-scale. Small object detection requires high-resolution input images and multiscale feature extraction. Models like Mask R-CNN with strong multiscale Feature Pyramid Networks naturally excel here, while single-stage detectors may require augmentation (higher resolution, pyramid pooling) to maintain small defect accuracy.

False Reject Cost Analysis

The cost of false rejections often exceeds defect-related losses in high-volume manufacturing. Establish clear decision thresholds based on cost analysis. A 0.5% false reject rate that costs $10,000 daily might be unacceptable despite high defect detection accuracy.
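The cost analysis reduces to simple arithmetic, sketched below. The daily volume and per-unit cost are hypothetical values chosen so that a 0.5% false reject rate reproduces the $10,000 daily figure; the $4,000 budget is likewise only an example.

```python
def daily_false_reject_cost(units_per_day, false_reject_rate, cost_per_unit):
    """Expected daily cost of good units that are wrongly rejected."""
    return units_per_day * false_reject_rate * cost_per_unit


def max_acceptable_rate(units_per_day, cost_per_unit, daily_budget):
    """Highest false reject rate that stays within a daily cost budget."""
    return daily_budget / (units_per_day * cost_per_unit)


# Hypothetical line: 100,000 units per day at $20 of value per rejected unit.
cost = daily_false_reject_cost(100_000, 0.005, 20.0)
limit = max_acceptable_rate(100_000, 20.0, 4_000.0)
print(round(cost), round(limit, 4))
```

Working backwards from a budget to a maximum rate gives a concrete target for choosing the confidence threshold on a validation set.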

Throughput Requirements

Production line speed determines model latency requirements. A line running at 100 units/minute leaves at most 600ms per unit, and the time each unit actually spends in view is shorter: with units spaced about 1 m apart, for example, a unit crosses a 30-cm inspection zone in only 180ms, which must cover capture, inference, and output. Models must respect these physical constraints; a model requiring 500ms per image creates bottlenecks, no matter its accuracy.
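The timing budget can be worked out explicitly. The sketch below assumes units spaced 1 m apart on the conveyor, an assumption introduced here because it reproduces the 180ms figure; the capture and output times are also hypothetical.

```python
def cycle_time_ms(units_per_minute):
    """Time between consecutive units arriving at the station."""
    return 60_000 / units_per_minute


def dwell_time_ms(units_per_minute, unit_pitch_m, zone_length_m):
    """Time a unit spends inside the inspection zone."""
    belt_speed_m_per_s = units_per_minute * unit_pitch_m / 60
    return zone_length_m / belt_speed_m_per_s * 1000


def fits_budget(capture_ms, inference_ms, output_ms, budget_ms):
    """True when the whole inspection step finishes within the budget."""
    return capture_ms + inference_ms + output_ms <= budget_ms


cycle = cycle_time_ms(100)                                      # 600 ms per unit
dwell = dwell_time_ms(100, unit_pitch_m=1.0, zone_length_m=0.30)  # about 180 ms

slow_ok = fits_budget(capture_ms=20, inference_ms=500, output_ms=10, budget_ms=dwell)
fast_ok = fits_budget(capture_ms=20, inference_ms=33, output_ms=10, budget_ms=dwell)
print(round(cycle), round(dwell), slow_ok, fast_ok)
```

Note that the 500ms model fits inside the 600ms cycle time but not inside the dwell time, so which budget applies depends on whether the decision must be made while the unit is still in the zone.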

Hardware Infrastructure

Determine whether to deploy on GPU servers, edge devices, or embedded systems in manufacturing cells. GPU servers offer maximum accuracy and flexibility but require network connectivity and centralized infrastructure. Edge devices eliminate network dependence but require model optimization. Select hardware and model based on facility infrastructure and requirements.

Regulatory and Traceability Requirements

Many manufacturing sectors (aerospace, medical devices, automotive) require documented traceability. Implement logging systems that record every inference: timestamp, product identifier, detected defects with segmentation masks and confidence scores. This creates auditable quality records required for regulatory compliance.
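One way to structure such a log is sketched below using only the Python standard library. The record fields follow the list above; the class names, the run-length encoding of masks, and the example identifiers are illustrative choices, not a regulatory format.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DefectRecord:
    label: str
    confidence: float
    mask_rle: list  # run lengths, alternating background/defect, starting with background


@dataclass
class InferenceRecord:
    product_id: str
    timestamp: str
    defects: list = field(default_factory=list)


def rle_encode(flat_mask):
    """Run-length encode a flattened 0/1 mask, starting with the count of zeros."""
    runs, current, count = [], 0, 0
    for v in flat_mask:
        if v == current:
            count += 1
        else:
            runs.append(count)
            current, count = v, 1
    runs.append(count)
    return runs


def to_log_line(record):
    """Serialize one inference as a single JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


record = InferenceRecord(
    product_id="SN-000123",  # hypothetical serial number
    timestamp=datetime.now(timezone.utc).isoformat(),
    defects=[DefectRecord("scratch", 0.97, rle_encode([0, 0, 1, 1, 1, 0]))],
)
line = to_log_line(record)
print(line)
```

Writing one JSON object per line keeps the log easy to append to and to query later, and run-length encoding keeps mask storage small compared with saving full images.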

Best Defect Detection Algorithms for Manufacturing Conclusion

For manufacturing applications specifically, instance segmentation is the clear winner over traditional object detection. The pixel-level precision enables automated decision-making, quantifiable quality metrics, and reduced false rejection rates, the key factors determining manufacturing defect detection ROI. The key to successful manufacturing defect detection is matching model selection to your specific requirements: throughput needs, accuracy demands, hardware infrastructure, and manufacturing dynamics.

For rapid iteration cycles that depend on domain adaptation, deploy RF-DETR Segmentation, which leverages its state-of-the-art performance on domain adaptation benchmarks to adapt quickly across product families.

Written by Aarnav Shah

Cite this Post

Use the following entry to cite this post in your research:

Contributing Writer. (Dec 10, 2025). Best Defect Detection Algorithms for Manufacturing. Roboflow Blog: https://blog.roboflow.com/defect-detection-algorithms-for-manufacturing/