You can extract information about objects of interest from satellite and drone imagery using a computer vision model, but a machine learning model will only tell you where an object is in an image, not where it is on the globe.

To get the objects' GPS position, you need to combine the model's output with some other information. This process is called georeferencing.

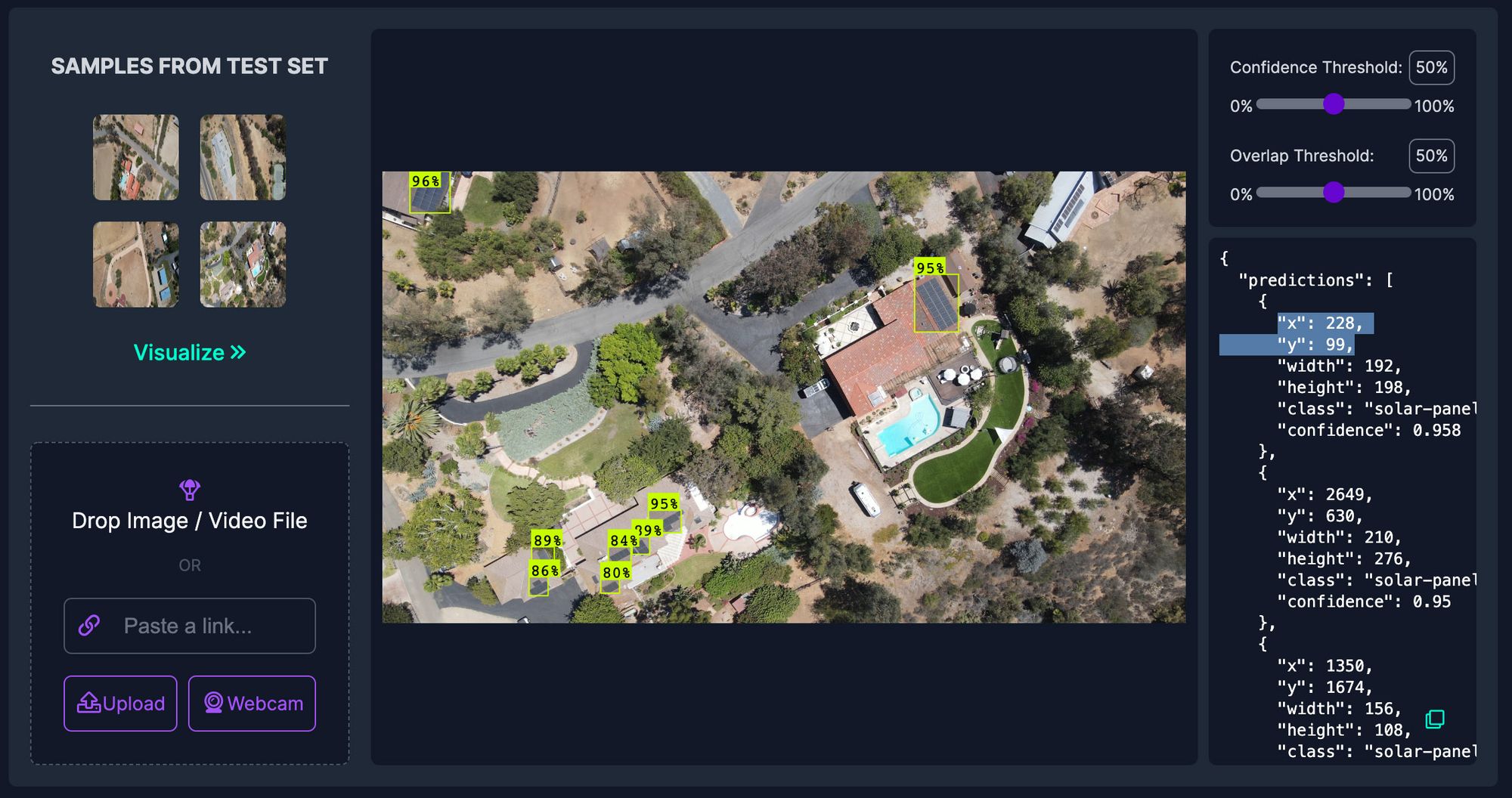

In this post, we'll show you how to use computer vision with drones: we'll train a computer vision model to detect solar panels from above, then combine its output with a video and flight log from a DJI Mavic Air 2 to plot the predictions on a map.

If you want to skip to the end, you can try out the finished project with the sample video and flight log, and the source code is available on GitHub.

Detecting Objects in Aerial Imagery

Option 1: Find a Pre-Trained Model

Roboflow Universe hosts the largest collection of aerial imagery datasets and pre-trained models, which have powered aerial computer vision use cases like preventing accidental disruptions of oil pipelines, cataloging wind turbines, and estimating Tesla factory output.

If you're looking for a common object, you can probably find a model on Universe. Even if it's not perfect for your use case, you can use it as a starting point for training a custom model that works better.

Option 2: Train a Custom Model

If you can't find a pre-trained model on Roboflow Universe that detects your object of interest, you can use Roboflow to create one.

Roboflow is an end-to-end computer vision platform that has helped over 100,000 developers use computer vision. The easiest way to get started is to sign up for a free Roboflow account and follow our quickstart guide.

The basic process is:

- Upload at least 100 images that are as similar as possible to the ones your model will see in the wild.

- Annotate your objects of interest using Roboflow Annotate.

- Choose preprocessing & augmentation options and generate a dataset.

- Train a model using Roboflow's 1-Click training.

- Use one of our Deploy options to use your model to get predictions on images and videos it's never seen before.

- Collect problem images to add to your dataset & iterate on improving your model using active learning.

For training a custom computer vision model on aerial imagery, we have a couple of additional resources you may find helpful:

- Sourcing Images from Google Earth Engine's Python API

- Image Augmentations for Aerial Imagery

- Tips for Detecting Small Objects

- Edge Tiling During Inference

Roboflow is free to use with public datasets. If you're doing a project for work and need to keep your data private or need help training a good model on your custom data, Roboflow's paid tiers offer optional onboarding with a computer vision expert; reach out to our sales team to learn more.

How to Use Computer Vision with Drones

The next thing you're probably wondering is how to use drone images for computer vision. Well, we need to do three things:

- Find out how to convert our image coordinates into a position on the globe, a process called georeferencing.

- Figure out how to get flight data from a DJI drone.

- Use your computer vision inferences to get outputs such as a map plot, object counting, object measuring, object distances, and more.

What is Georeferencing?

Georeferencing is the process of associating information contained in imagery with its position and orientation on the Earth's surface. Typically, the coordinate system we work with when dealing with images and videos is measured in pixels, but when we're dealing with images of objects that exist on the globe, we want to know their location in GPS coordinates.

The process of converting between these two spatial frames of reference by combining geospatial metadata with imagery is called georeferencing.

Getting Detected Objects' GPS Coordinates

Your computer vision model will find objects in your images and videos, but with aerial imagery, we often care much more about their position on Earth.

For the rest of this tutorial, we'll be utilizing a model trained to detect solar panels in aerial imagery and a video shot with a DJI Mavic Air 2.

Obtaining and Parsing the Drone Flight Log from a DJI Drone

Most resources on the Internet recommend extracting flight telemetry from the SRT caption files DJI can record alongside your videos, but unfortunately those files do not contain the compass heading, which is needed to accurately georeference points.

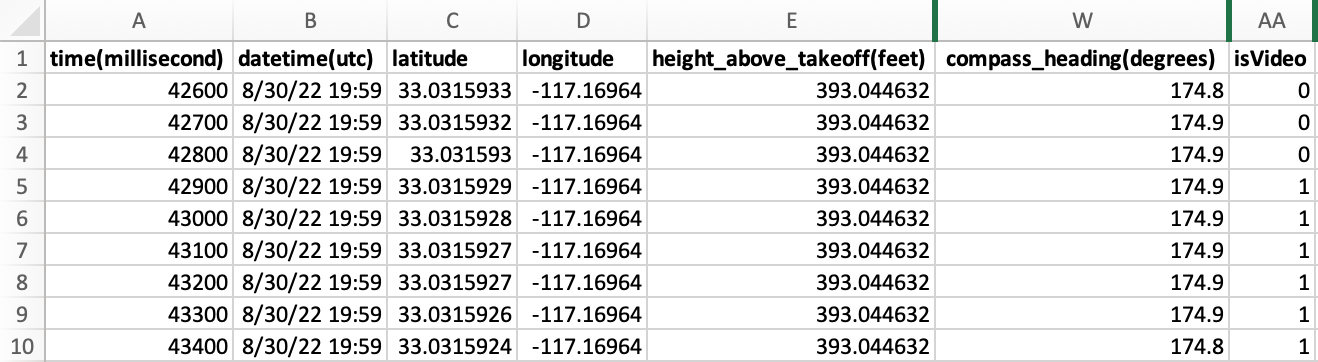

Instead, the best way I found to get data off a DJI drone is to pull the full flight log from Airdata by linking your DJI account to it. This produces a CSV file containing telemetry recorded every 100 milliseconds during a flight.

Note that the flight log records entries for the entire flight, not just while the video is recording. To sync the video with the relevant log entries, we filter down to the entries where isVideo is 1. (The code currently uses only the first contiguous block of such entries, so if you took multiple videos during your flight, you may need to split your flight log before loading it.)
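A minimal sketch of that filtering step, assuming the Airdata CSV has already been parsed into one object per row (with any CSV parser) and that each row exposes the isVideo column. The helpers firstVideoSegment and entryForVideoTime are hypothetical, not the repo's code, and the lookup further assumes that the first row of the segment lines up with the first video frame and that entries are spaced 100 ms apart.

```javascript
// Return the first contiguous run of log rows recorded while the video was
// recording (isVideo === 1). CSV parsers often yield strings, so coerce.
function firstVideoSegment(rows) {
  const start = rows.findIndex((row) => Number(row.isVideo) === 1);
  if (start === -1) return [];
  let end = start;
  // Extend until the first row where recording stops.
  while (end < rows.length && Number(rows[end].isVideo) === 1) end++;
  return rows.slice(start, end);
}

// Map a video timestamp (in seconds) to the nearest log entry, assuming one
// entry every `sampleMs` milliseconds starting at the first video frame.
function entryForVideoTime(segment, videoSeconds, sampleMs = 100) {
  if (segment.length === 0) return undefined;
  const index = Math.round((videoSeconds * 1000) / sampleMs);
  // Clamp so timestamps past the end of the log reuse the last entry.
  return segment[Math.min(Math.max(index, 0), segment.length - 1)];
}
```

For a 30 fps video, frame n sits at n / 30 seconds, so about three consecutive frames map to the same log entry. That coarse resolution is one reason repeated detections of the same object land in slightly different places.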

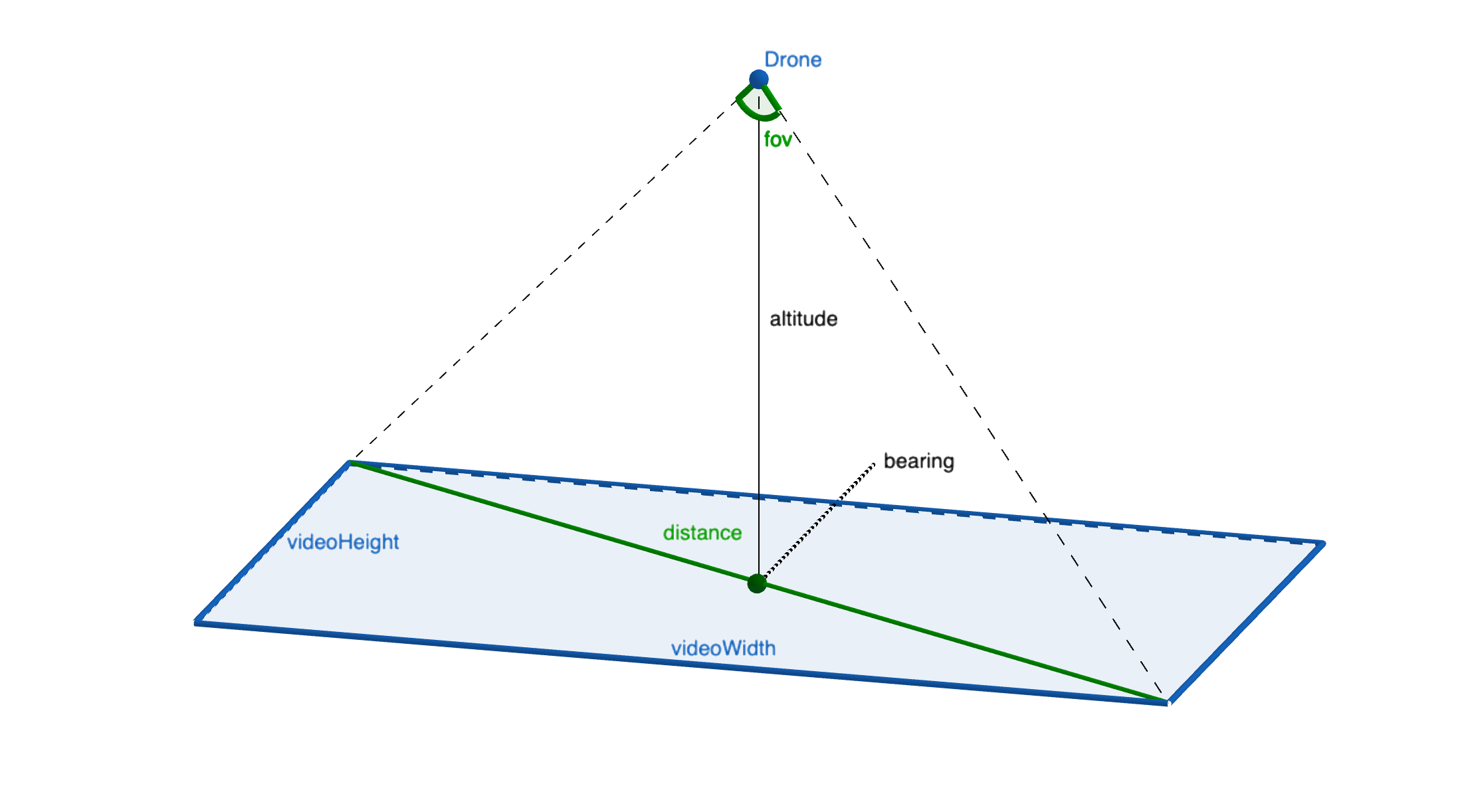

Converting Pixel Coordinates to Latitude and Longitude

In order to convert our pixel coordinates into GPS coordinates, we'll utilize the data we extracted from the drone's flight log and a little bit of trigonometry.

The three key pieces of information we need from the flight log are:

- GPS Coordinates of the Drone

- Compass Heading/Bearing

- Altitude Above Ground

We also need to know the field of view of the drone's camera while shooting video (for the Mavic Air 2, this turned out to be 60 degrees).

Using the altitude and the field of view (fov), we can get the ground distance from the center of the video frame to each of its corners by taking altitude * tan(fov), and we can get the angular offset between the bearing and the corners from the video's aspect ratio, atan(videoHeight / videoWidth).
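Written out as equations, under stated assumptions (flat ground, a nadir camera, no lens distortion, the top of the frame facing the compass heading ψ, and θ taken as the full diagonal field of view), the corner distance and corner bearings look like this. With θ as a full angle, the tangent's argument is θ/2; the post's altitude * tan(fov) corresponds to fov being measured as a half-angle, so match the formula to how your FOV value was obtained. Here α = atan(videoHeight / videoWidth) is the angle between the frame's horizontal edge and its diagonal, which is why the corners sit 90° − α either side of the heading.

```latex
% h: altitude above ground, \theta: full diagonal FOV, \psi: compass heading
% W, H: video width and height in pixels
\begin{aligned}
d_{\mathrm{corner}} &= h \tan\left(\tfrac{\theta}{2}\right), \qquad \alpha = \arctan\left(\tfrac{H}{W}\right) \\
\beta_{\mathrm{TR}} &= \psi + (90^\circ - \alpha), \qquad \beta_{\mathrm{TL}} = \psi - (90^\circ - \alpha) \\
\beta_{\mathrm{BR}} &= \psi + 90^\circ + \alpha, \qquad \beta_{\mathrm{BL}} = \psi - 90^\circ - \alpha
\end{aligned}
```

For example, a 1920×1080 video gives α = arctan(1080/1920) ≈ 29.36°, so the top corners sit about 60.64° either side of the heading; at h = 100 m with θ = 60°, d_corner = 100 · tan 30° ≈ 57.7 m.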

Using these values, we can call turf.js's rhumbDestination method to calculate each corner's GPS location (for each corner, start at the drone's GPS location, which is the center of the video frame, and travel that distance in the direction of bearing + offset), then plot the video on the map. The same method converts any point in the video to a GPS location: calculate its distance and angle relative to the center of the frame, then project from the drone's position.
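To make the projection concrete, here is a minimal, standalone JavaScript sketch of the pixel-to-GPS step. It reimplements a rhumb-line (constant-bearing) destination, the same kind of calculation turf.js's rhumbDestination performs, so it runs without dependencies. pixelToLngLat and its parameter names are hypothetical rather than the repo's renderMap.js code, and the sketch assumes flat ground, no lens distortion, a gimbal at 90°, the top of the frame facing the compass heading, and diagonalFovDeg as the full diagonal field of view (hence tan(fov / 2)).

```javascript
// Mean Earth radius in meters.
const EARTH_RADIUS_M = 6371008.8;
const toRad = (deg) => (deg * Math.PI) / 180;
const toDeg = (rad) => (rad * 180) / Math.PI;

// Travel `distanceM` meters from [lng, lat] along a constant compass bearing.
function rhumbDestination([lng, lat], distanceM, bearingDeg) {
  const delta = distanceM / EARTH_RADIUS_M; // angular distance in radians
  const theta = toRad(bearingDeg);
  const phi1 = toRad(lat);
  const lambda1 = toRad(lng);

  const dPhi = delta * Math.cos(theta);
  let phi2 = phi1 + dPhi;
  // Fold paths that would run past a pole back into the valid range.
  if (Math.abs(phi2) > Math.PI / 2) {
    phi2 = phi2 > 0 ? Math.PI - phi2 : -Math.PI - phi2;
  }
  // Difference in Mercator-projected ("stretched") latitude.
  const dPsi = Math.log(Math.tan(phi2 / 2 + Math.PI / 4) / Math.tan(phi1 / 2 + Math.PI / 4));
  // On an east-west course dPsi is ~0, so fall back to cos(latitude).
  const q = Math.abs(dPsi) > 1e-12 ? dPhi / dPsi : Math.cos(phi1);
  const dLambda = (delta * Math.sin(theta)) / q;

  // Normalize longitude into [-180, 180).
  const lng2 = ((toDeg(lambda1 + dLambda) + 540) % 360) - 180;
  return [lng2, toDeg(phi2)];
}

// Hypothetical helper: convert pixel (px, py), origin at the top-left,
// in a nadir video frame to [lng, lat].
function pixelToLngLat({ droneLngLat, altitudeM, headingDeg, diagonalFovDeg, videoWidth, videoHeight }, px, py) {
  // Ground distance from the frame center (directly below the drone) to a corner.
  const halfDiagonalM = altitudeM * Math.tan(toRad(diagonalFovDeg / 2));
  const halfDiagonalPx = Math.hypot(videoWidth, videoHeight) / 2;
  const metersPerPixel = halfDiagonalM / halfDiagonalPx;

  // Offsets from the center; image y grows downward, so flip it to point "up".
  const dx = px - videoWidth / 2;
  const dy = videoHeight / 2 - py;

  const distanceM = Math.hypot(dx, dy) * metersPerPixel;
  // atan2(dx, dy) is the clockwise angle from the top of the frame.
  const bearingDeg = headingDeg + toDeg(Math.atan2(dx, dy));
  return rhumbDestination(droneLngLat, distanceM, bearingDeg);
}

const exampleFrame = {
  droneLngLat: [-97.74, 30.27],
  altitudeM: 100,
  headingDeg: 45,
  diagonalFovDeg: 60,
  videoWidth: 1920,
  videoHeight: 1080,
};
console.log(pixelToLngLat(exampleFrame, 1600, 300));
```

Projecting the four frame corners (0, 0), (videoWidth, 0), (videoWidth, videoHeight), and (0, videoHeight) through the same function gives the footprint used to place the video on the map.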

Note: For this math to work, the video must be shot with the gimbal pointed straight down (90°). You'll usually get the best results by flying at the highest altitude allowed in your area, which maximizes the area your drone can see.

The source code for performing these calculations is available on GitHub in renderMap.js.

Verifying Our Calculations

While not strictly necessary (we could just export a CSV or JSON file of the solar panels we found), we'll verify that our calculations are correct by plotting the flight path, video, and detected solar panels on a map.

To render the map, we use mapboxgl (Mapbox GL JS), a WebGL-based mapping library. It supports plotting our flight path as a GeoJSON polygon and overlaying our video, and it also supports adding markers at specific locations.
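Mapbox GL JS accepts GeoJSON data sources, so a dependency-free way to prepare the map data is to convert the log and detections to GeoJSON first. In this minimal sketch, the helper names are hypothetical and the log rows are assumed to expose longitude and latitude fields; the flight path is represented as a LineString and the detections as Point features, which also doubles as the JSON export mentioned above.

```javascript
// Flight path as a GeoJSON LineString. GeoJSON positions are
// [longitude, latitude], in that order; Number() handles CSV strings.
function flightPathGeoJSON(entries) {
  return {
    type: "Feature",
    properties: {},
    geometry: {
      type: "LineString",
      coordinates: entries.map((e) => [Number(e.longitude), Number(e.latitude)]),
    },
  };
}

// Detected objects ({ lng, lat }) as a FeatureCollection of Points.
function detectionsGeoJSON(detections) {
  return {
    type: "FeatureCollection",
    features: detections.map((d, i) => ({
      type: "Feature",
      properties: { id: i },
      geometry: { type: "Point", coordinates: [d.lng, d.lat] },
    })),
  };
}
```

In the browser, these objects can be passed to map.addSource("flight-path", { type: "geojson", data: flightPathGeoJSON(entries) }) followed by a line layer, and individual markers can be placed with new mapboxgl.Marker().setLngLat([lng, lat]).addTo(map). Mapbox GL JS also offers a video source type that takes four corner coordinates, which is one way to overlay the footage.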

Technically, that's all we need to do to georeference our machine learning model's predictions, but there are some settings we can tweak and some additional features we can add to make our app better.

Tuning Object Detection Confidence Levels

By default, our computer vision model returns any prediction it is at least 50% confident in. A threshold this low can lead to many false-positive predictions.

Since we'll be processing many frames of video, our model gets many chances to identify each object of interest, so we can afford to wait to record a prediction until the model finds one it's very sure of.

This demo uses a 90% confidence threshold. Looking closely at the output, though, it missed a few solar panels, so a future version may benefit from lowering the threshold slightly (or, ideally, from improving the model so it becomes more confident and more resilient to changes in lighting, angle, and environment).

We can tune this parameter in the model settings located in main.js.
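The threshold itself amounts to a filter over each frame's predictions. This sketch assumes each prediction carries a confidence between 0 and 1; the prediction shape and the confidentPredictions helper are hypothetical, and the exact field names depend on which Roboflow deploy option you use.

```javascript
// The demo's threshold; the model's default is 0.5.
const CONFIDENCE_THRESHOLD = 0.9;

// Keep only predictions the model is at least `threshold` sure of.
// Assumed prediction shape: { class, confidence, x, y, width, height }.
function confidentPredictions(predictions, threshold = CONFIDENCE_THRESHOLD) {
  return predictions.filter((p) => p.confidence >= threshold);
}
```

Raising the threshold trades recall for precision; because each object appears in many frames, a missed detection in one frame is often recovered in the next.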

Combining Duplicate Object Detection Predictions

Since our model makes predictions on many frames of video, we're bound to get the same solar panel predicted multiple times. These will appear in clusters on the map due to slight fluctuations in the accuracy of the predictions' x/y positions, the drone's GPS location, and the fact that the telemetry data only has a 100ms resolution (but our drone shoots video at 24, 30, or 60 frames per second).

In order to work around this, we can combine solar panels that are predicted to be very close to each other into a single marker on the map. By averaging the predictions together we benefit from the additional data afforded by many frames of video.

This code lives in renderMap.js, and you can adjust the MIN_SEPARATION_OF_DETECTIONS_IN_METERS variable in the configuration at the top of the file.
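One way to implement the merge is a greedy pass that compares each new detection against existing clusters and folds it into a running average. This is an illustrative technique, not necessarily what renderMap.js does: the detection shape { lng, lat, frame }, the 4 m default, and the helper names are assumptions, and haversine distance is used for the separation test.

```javascript
const EARTH_RADIUS_M = 6371008.8;
const MIN_SEPARATION_OF_DETECTIONS_IN_METERS = 4; // hypothetical value

// Great-circle (haversine) distance between two { lng, lat } points in meters.
function haversineMeters(a, b) {
  const toRad = (d) => (d * Math.PI) / 180;
  const dLat = toRad(b.lat - a.lat);
  const dLng = toRad(b.lng - a.lng);
  const h =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRad(a.lat)) * Math.cos(toRad(b.lat)) * Math.sin(dLng / 2) ** 2;
  return 2 * EARTH_RADIUS_M * Math.asin(Math.sqrt(h));
}

// Greedy merge: each detection joins the first cluster whose averaged position
// is closer than the separation threshold; otherwise it starts a new cluster.
function mergeDetections(detections, minSeparationM = MIN_SEPARATION_OF_DETECTIONS_IN_METERS) {
  const clusters = [];
  for (const d of detections) {
    const match = clusters.find((c) => haversineMeters(c, d) < minSeparationM);
    if (match) {
      match.count += 1;
      // Incremental mean: update the average without storing every point.
      match.lng += (d.lng - match.lng) / match.count;
      match.lat += (d.lat - match.lat) / match.count;
      match.frames.add(d.frame);
    } else {
      clusters.push({ lng: d.lng, lat: d.lat, count: 1, frames: new Set([d.frame]) });
    }
  }
  return clusters;
}
```

Because the pass is greedy, results can depend on detection order, and averaging raw longitudes assumes a cluster never straddles the antimeridian; both are reasonable simplifications for a single drone flight.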

Ignoring Stray Object Detection Predictions

Even after tuning the confidence threshold, every once in a while, the model might still make a mistake and detect an object when there isn't really one there. This is called a "false positive" prediction.

In order to mitigate this, you can add some code to delay showing a marker on the map until it has been detected on at least two separate frames of the video.

This threshold is editable with the MIN_DETECTIONS_TO_MAKE_VISIBLE variable in renderMap.js.
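If each merged cluster remembers which video frames contributed to it, the visibility rule reduces to a filter. The cluster shape (frames as a Set of frame indices) and visibleClusters are hypothetical; the default of 2 mirrors the "at least two separate frames" rule above.

```javascript
const MIN_DETECTIONS_TO_MAKE_VISIBLE = 2;

// Assumed cluster shape: { lng, lat, frames: Set<number> }.
// Counting distinct frames (not raw detections) means two overlapping boxes
// in a single frame cannot, on their own, make a marker visible.
function visibleClusters(clusters, minFrames = MIN_DETECTIONS_TO_MAKE_VISIBLE) {
  return clusters.filter((c) => c.frames.size >= minFrames);
}
```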

Next Steps

And that's all there is to it! The code for this tutorial is on GitHub, and you can try it out on GitHub Pages with your own drone video and flight log, or with the ones used in this example.

Next, you can try it with a pre-trained aerial image model from Roboflow Universe or a model of your own by forking the repo and swapping out the model config in main.js.

And if you're in for a real challenge, you could try creating an Instance Segmentation model to measure the size of the detected solar panels (or buildings or lawns or swimming pools or anything else you can think of) in addition to just their location.

If this is of interest to you, feel free to create an issue on the repo!

⭐️ Star the Repo

If you enjoyed the post, please let us know by starring the repo!

Cite this Post

Use the following entry to cite this post in your research:

Brad Dwyer. (Sep 14, 2022). Using Computer Vision with Drones for Georeferencing. Roboflow Blog: https://blog.roboflow.com/georeferencing-drone-videos/