Early speculations on multimodal LLMs

OpenAI released GPT-4 showcasing strong multi-modal general AI capabilities in addition to impressive logical reasoning capability. It fuses information from the image domain with its knowledge of text to provide wholly-new capabilities like transforming a napkin sketch into an HTML website.

To date, computer vision has been focused on solving quantitative tasks (like localizing objects of interest and counting things); GPT-4 and multimodality extend its capabilities into unstructured qualitative understanding (like captioning, question answering, and conceptual understanding).

Are general models going to obviate the need to label images and train models? Has YOLO only lived once? How soon will general models be adopted throughout the industry? What tasks will benefit the most from general model inference and what tasks will remain difficult?

Here, we share initial speculations on the answers to these questions and reflect on where the industry is heading. Let’s dive in!

What is GPT-4?

GPT-4 is a multi-modal Large Language Model (LLM) developed by OpenAI and released on March 14th, 2023. GPT-4 has more advanced question answering, reasoning, and problem solving abilities compared to previous models in OpenAI's GPT family. GPT-4 is technically capable of accepting images as an input.

The GPT-4 Technical Report document is the de facto GPT-4 research paper, offering insights into the capabilities of the model, the announcement of visual inputs with reference to an example, how the model handles disallowed prompts, risk factors, and more.

GPT-4's Multi-Modality Capabilities

GPT-4 is multi-modal, which means it incorporates multiple input types. GPT-4 accepts text and images to provide text outputs.

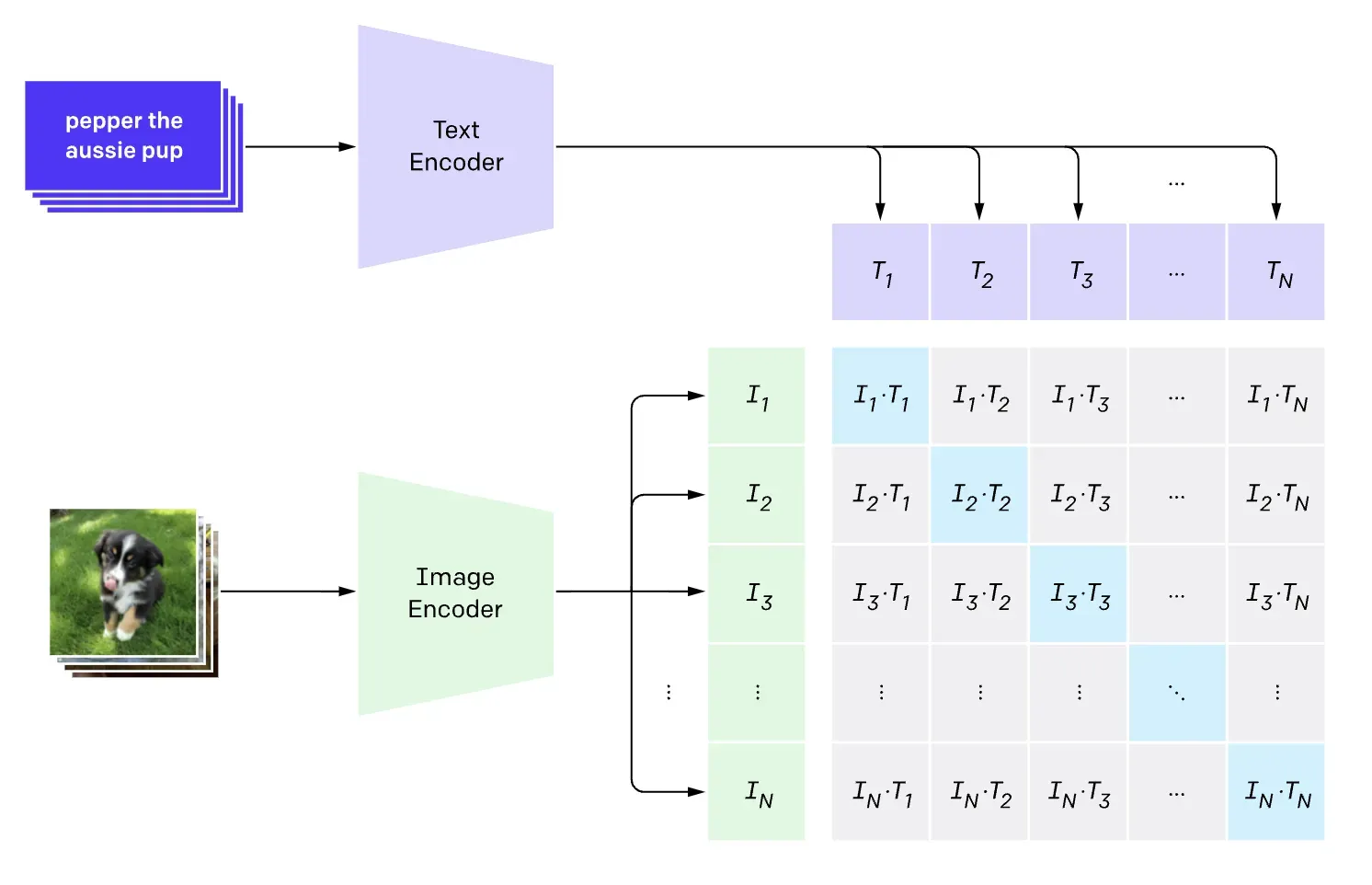

As a note, GPT-4 is not the first multimodal approach to understanding visual inputs. Flamingo, DETIC, GLIP, OWL-ViT, PALM-E, and CLIP among other research have demonstrated various approaches to leveraging language pretraining and pixels.

Multi-Modal Semantics

Apart from scaled-up compute and context windows which allow the model to retain more knowledge, improve its reasoning abilities, and maintain context for longer, the most notable change from GPT-3 to GPT-4 is the ability to understand text and images in the same semantic space. We have already seen the beginnings of multi-modal models influencing the machine learning industry with OpenAI's CLIP model, generative AI like Stable Diffusion, and research like data2vec.

Multi-modality applied to imagery is revolutionary because it brings all of the rich understanding of text pretraining routines into the world of images - a world where large pretraining routines have proved lackluster compared to their text based counterparts. Simply learning to predict the next pixel as opposed to the next text token delivers less insight to the model, as words are jammed with the meaning we have imbued them with over the years - furthermore, text is inherently linear. Pixels can be formed into concepts - but those concepts live more natively in the abstract sphere of language than the realm of pixels.

Multi-Modal APIs

OpenAI has yet to widely distribute the multi-modal APIs for GPT-4, but when they do, the APIs will accept both text context and image context. This means that you can ask the model questions about an image or instruct a model to take actions based on visual and textual context. In the GPT-4 demo, Greg Brockman demonstrated how you can take a sketch of a website and ask the model to generate the code for that website - flowing across image, text, and code concepts.

How Multi-Modal APIs Are Different

Traditional image based CV applications deploy a model that accepts an image as input and responds with a structured response. Generative multi-modal inference is more open ended, multi-turn, and zero-shot.

Open Ended - When you query the model, you receive a generated response which may take many forms. It is up to you in your application to translate that response into an actionable format. Multi-modal APIs certainly provide valuable general intelligence with a human in the loop - it is unclear how efficient they will be when integrated into applications that are running on their own - in some ways their open ended nature make it more difficult to write code surrounding their predictions, but in other ways, their open ended nature will absorb some of the downstream business logic that we used to need to write around structured predictions.

Multi-Turn - Opposed to single inference task based CV models, multi-modal API queries can be multi-turn. If you don't get the exact answer in the first query you can make a follow up one to perfect the response. This will likely be a big advantage over single frame inference in traditional CV models.

Zero-Shot - Traditional task based CV models require supervised training with a dataset to prepare your model for the task it will accomplish. Multi-modal inference is based on the models pre-training routines and no further training is required. Zero-shot inference is faster to adapt into an API, but may lack the specificity required for a given task. We will speculate more on this below.

The Impacts of GPT-4 General Knowledge

GPT-4 will obviate the need for certain traditional computer vision tasks, it will be unable to solve some of them, and it will unlock new applications that were never possible under the previous paradigm.

OpenAI’s north star is creating artificial general intelligence. That means vision is only one input in creating agents that understand the world (vision, language, sound) to reason and interact with the world like humans can.

We’re not there yet.

Thus, the best way to understand GPT-4’s visual capabilities is to approximate its intelligence relative to known computer vision task types and exploring new tasks it makes possible.

While the APIs remain in closed beta, it is difficult to assert for certain which CV tasks GPT-4 will solve. We provide some directional speculation below.

Traditional Tasks that GPT-4 Obviates

GPT-4's general domain knowledge will solve common tasks where imagery is prevalent on the web that we traditionally trained CV models to solve. A good way to think about these problems would be any problem that you could scrape images from Bing images, add them to a dataset, and train your model. The knowledge of these images is already locked into the memory of GPT-4 and no further training will be required.

Some example tasks that fall into this bucket will be:

- Classifying dogs into different species

- Determining whether there is smoke in an image

- Reading the numbers from a document and summing them

- Captioning images and answering followup questions about them

Difficult Tasks for GPT-4

GPT-4 will do a poor job of solving computer vision tasks where domain specific/proprietary knowledge is involved or a high level of precision is required from the model's prediction. Because GPT-4 is trained to make mean predictions from web based data, it will be unable to replicate proprietary knowledge that only lives within institutions. For precision, because GPT-4's response are language based, it will likely be difficult to extract extremely precise predictions for applications that need to operate with very minimal error.

Examples of proprietary, domain specific tasks that will be difficult for GPT-4 will include:

- Identifying defects in machine parts on factory floor (where only the producer knows what the parts should look like during production)

- Tagging particular species of deep sea fish under a commercial fishing ship

Examples of highly precise predictions that GPT-4 may not solve include:

- Following the location of a football player on screen

- Measuring the size of a lawn from a satellite image

Notably, many computer vision applications also run on the edge and in offline environments. GPT-4 is available via hosted API, which limits its ability to run in these conditions in real-time.

New Applications that GPT-4 Unlocks

GPT-4's open domain knowledge unlocks new CV applications that were not previously possible. For example, the first partner OpenAI has using the multi-modal APIs is building an application that allows low sight people to get another sense of the visual scene around them. We can imagine myriad other applications that are open ended, such as notifying if a given security camera sees something peculiar. And as mentioned earlier, open ended text predictions will allow applications to be made more flexibly, skipping some of the business logic that we typically write around structured model predictions.

There will also be many applications that are built as a combination of GPT-4s general senses alongside finetuned CV models.

At Roboflow in particular, we are very excited to be building tools at the intersection of general multi-modal intelligence and fine tuned models.

Unknown Areas of GPT-4 Capability

The unknown areas of GPT-4 accuracy revolve around the specificity of predictions that the model will provide. We will not know the answers to many of these questions until the APIs become generally available.

It’s not yet known how GPT-4 will do on the quantitative tasks that existing computer vision models are already good at like classification and object detection.

- Localization: It remains to be seen how good GPT-4 will be at localizing objects in images. Localization will depend on the model architecture - if OpenAI simply featurizes images before inference like in the CLIP model, localization capabilities are likely to be constrained.

- Counting: It remains to be seen how well GPT-4 can count. Perhaps generative language prediction will not provide the specificity required for accurate counts of objects.

- Pose estimation: It not yet known how precise GPT-4 will be in understanding the pose of an individual (think like a physical therapist needs: depth of pushup or angle of rotation of one’s knees).

It is worth noting that OpenAI does not yet provide evaluation of GPT-4 on tasks like object detection yet. The argument in favor of it being good at these tasks is that in NLP large language models are state of the art for things like Classification that used to be solved by purpose-built models. One argument against this primacy is that LLMs have historically been poor at spatial reasoning (text is inherently linear and pixel data spans multiple axes).

GPT-4 Adoption in Production

Assuming GPT-4 predictions have the requisite accuracy for a task, when it comes to real industry adoption of GPT-4 (and any general multi-modal successors), it will come down to the deployment costs of the model, the latency of the model's inference, and the privacy / offline requirements of its users.

GPT-4 Image Inference Cost - In many cases, invoking a GPT-4 inference for a task will be like bringing a sledge hammer in to crack a nut. The API currently costs $0.03 per ~750 words in the prompt and $0.06 per ~750 words in the response. It depends on how many tokens an image request will cost, but if we think about the input to a vision transformer at 224x224x3 pixels, which is 10x the input length cap now. We think those image requests will be featurized internally before being passed to the model and will take up at least half the input (as only one image is accepted now). It's a long shot, but this estimates image API requests at $0.12/call, which would completely disqualify many use cases, where you might as well send an image over to a human in the loop. Costs are inevitably going to come down over time, but this might be the primary blocker now to OpenAI releasing the multi-modal endpoint more generally.

GPT-4 Image Inference Latency - GPT-4 is hosted behind an inference API with response times ranging up to a few seconds depending on input and output length, so GPT-4 will not be suitable for any CV application that requires edge deployment latency.

GPT-4 Hosted Model Limitations - Since GPT-4 is hosted behind and API, enterprise will need to be comfortable calling an external API. OpenAI has indicated model inputs are not used for retraining. Computer vision applications requiring offline inference will necessarily not be able to leverage GPT-4.

Conclusion

GPT-4 has introduced general pretrained models to the world of computer vision. The GPT-4 model will change the way we solve computer vision tasks where imagery is prevalent on the web, it may struggle with domain specific applications, and it will unlock new computer vision applications that have never been possible before.

The adoption of GPT-4 will be bolstered by its strong intelligence and myriad applications, and it may be slowed by its costs to deploy, latency, and privacy/offline requirements.

At Roboflow we support over 100,000 computer vision practitioners to train and deploy domain specific models with custom datasets. We publish research, open source tooling (like supervision and notebooks), 100M+ labeled images and 10k+ pretrained models on Universe, and provide free hosted tools for developers in our core app.

We predict that the introduction of strong multi-modal AI models to the field of computer vision will further accelerate the pace of CV adoption in industry and unlock new capabilities for businesses, developers, and CV practitioners. We're excited to build GPT-4 into our product so our users can benefit from its transformative capabilities soon.

It has never been a more exciting time to be working in the world of AI applications.

Happy inference! And happy training (sometimes 😁)!

Frequently Asked Questions

How do I try GPT-4?

GPT-4 is available for use in the OpenAI web interface for everyone who subscribes to ChatGPT Plus ($20 / month). API access is being granted to selected people on the API waitlist.

Is GPT-4 be available for free?

To use GPT-4 in the web interface, you need to pay $20 / month to access ChatGPT Plus, the plan through which GPT-4 is available.

How much does the GPT-4 API cost?

GPT-4 costs $0.03 per 1k tokens for prompts and $0.06 per 1k tokens for completion responses in the 8k context version of the model. The 32k context version costs $0.06 per 1k tokens and $0.12 per 1k tokens.

Can you fine-tune GPT-4?

As of April 7th 2023, you cannot fine-tune GPT-4.