You might be wondering, "How do I monitor the performance of a deployed vision model?" Deploying an AI model into production is not the last phase of the model life cycle. It is the beginning of a new one. Real-world production environments are complex.

Lighting may change, cameras can shift, new objects may appear, and user behavior introduces edge cases the model never saw during training. Under these conditions, even a high-accuracy AI model will slowly degrade if it is not monitored and improved.

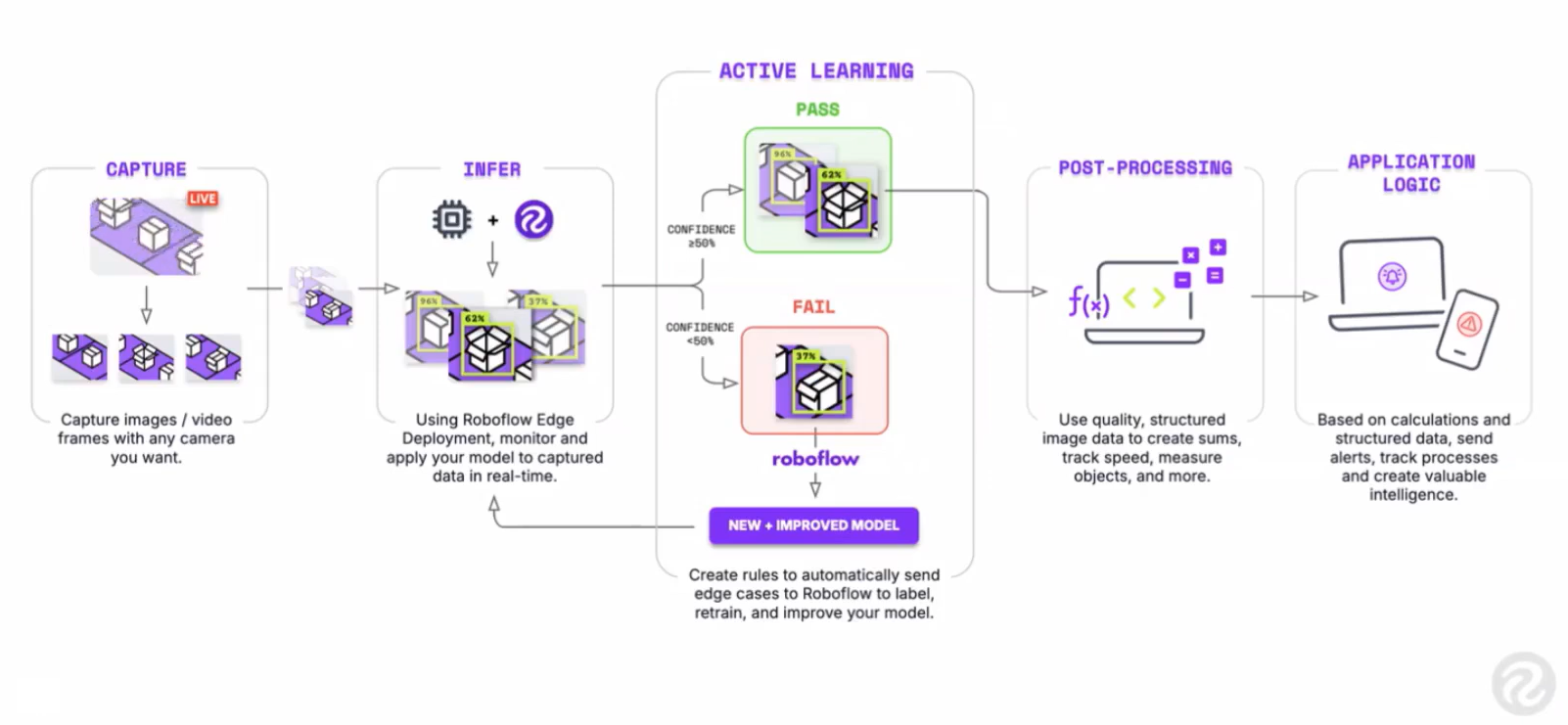

The solution is to build a feedback loop that continuously measures model performance, identifies failure cases, and improves the model over time. Supporting this cycle requires an integrated workflow that connects production monitoring with model iteration: tracking inference behavior on real data, updating datasets with the failures you find, and retraining and redeploying models as conditions change.

Here's how to monitor and continuously improve AI models once they are in production.

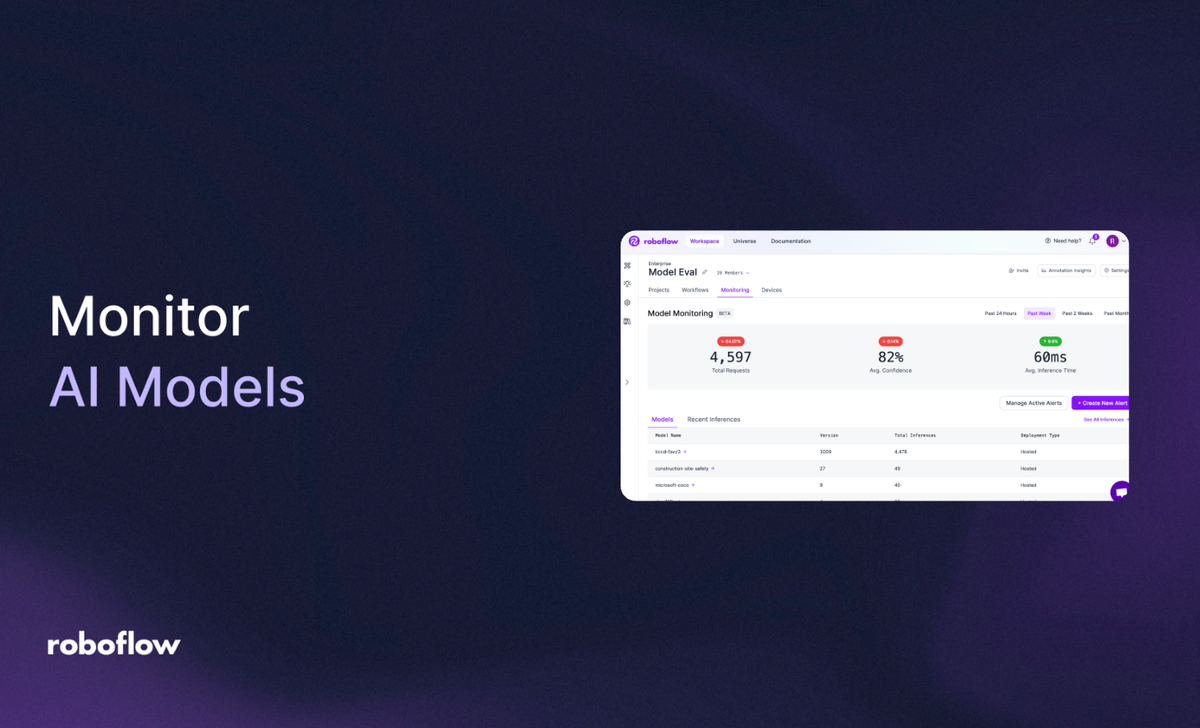

Model Monitor in Roboflow

How to Improve AI Models in Production

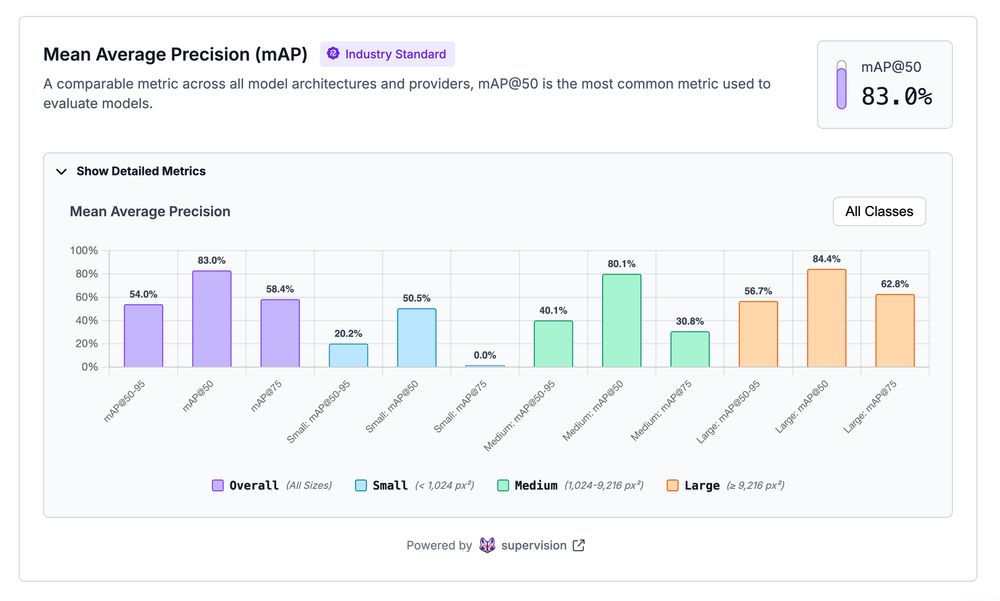

1. Track Model Performance with Verified Metrics

Once a model is deployed, how it performs on real, previously unseen data becomes far more important than its offline training accuracy. Consistent evaluation metrics are required to measure performance reliably across model versions and over time.

Analyze metrics such as Mean Average Precision (mAP), precision, recall, and confidence thresholds in a standardized way to avoid misleading conclusions caused by dataset leakage or shifting class distributions.

Set an appropriate confidence threshold to balance precision and recall. High thresholds reduce false positives but may miss objects, while low thresholds increase recall at the risk of more false alarms.
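To make the threshold trade-off concrete, here is a minimal sketch in plain Python. It assumes each prediction has already been matched against ground truth, so every entry is a `(confidence, is_true_positive)` pair. The function name, the sample values, and the ground-truth count are all hypothetical and not part of any Roboflow API.

```python
from typing import List, Tuple

def precision_recall_at(
    threshold: float,
    scored: List[Tuple[float, bool]],
    num_ground_truth: int,
) -> Tuple[float, float]:
    """Precision and recall when only predictions with confidence >= threshold are kept.

    `scored` holds (confidence, is_true_positive) pairs; matching predictions
    to ground truth is assumed to have happened upstream.
    """
    kept = [is_tp for conf, is_tp in scored if conf >= threshold]
    true_positives = sum(kept)
    # Convention used here: with nothing kept there are no false positives, so precision is 1.0.
    precision = true_positives / len(kept) if kept else 1.0
    recall = true_positives / num_ground_truth if num_ground_truth else 0.0
    return precision, recall

# Hypothetical evaluation results: 7 predictions against 5 labeled objects.
predictions = [
    (0.95, True), (0.90, True), (0.80, False), (0.70, True),
    (0.55, False), (0.40, True), (0.30, False),
]
NUM_GROUND_TRUTH = 5

for threshold in (0.3, 0.5, 0.8):
    p, r = precision_recall_at(threshold, predictions, NUM_GROUND_TRUTH)
    print(f"threshold={threshold:.1f}  precision={p:.2f}  recall={r:.2f}")
```

On this sample data, precision rises and recall falls as the threshold increases. Sweeping the threshold over your own evaluation set is one way to pick an operating point that fits your tolerance for false alarms versus missed objects.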

Use model evaluation tools to measure whether a new model version actually improves performance or simply shifts errors elsewhere.

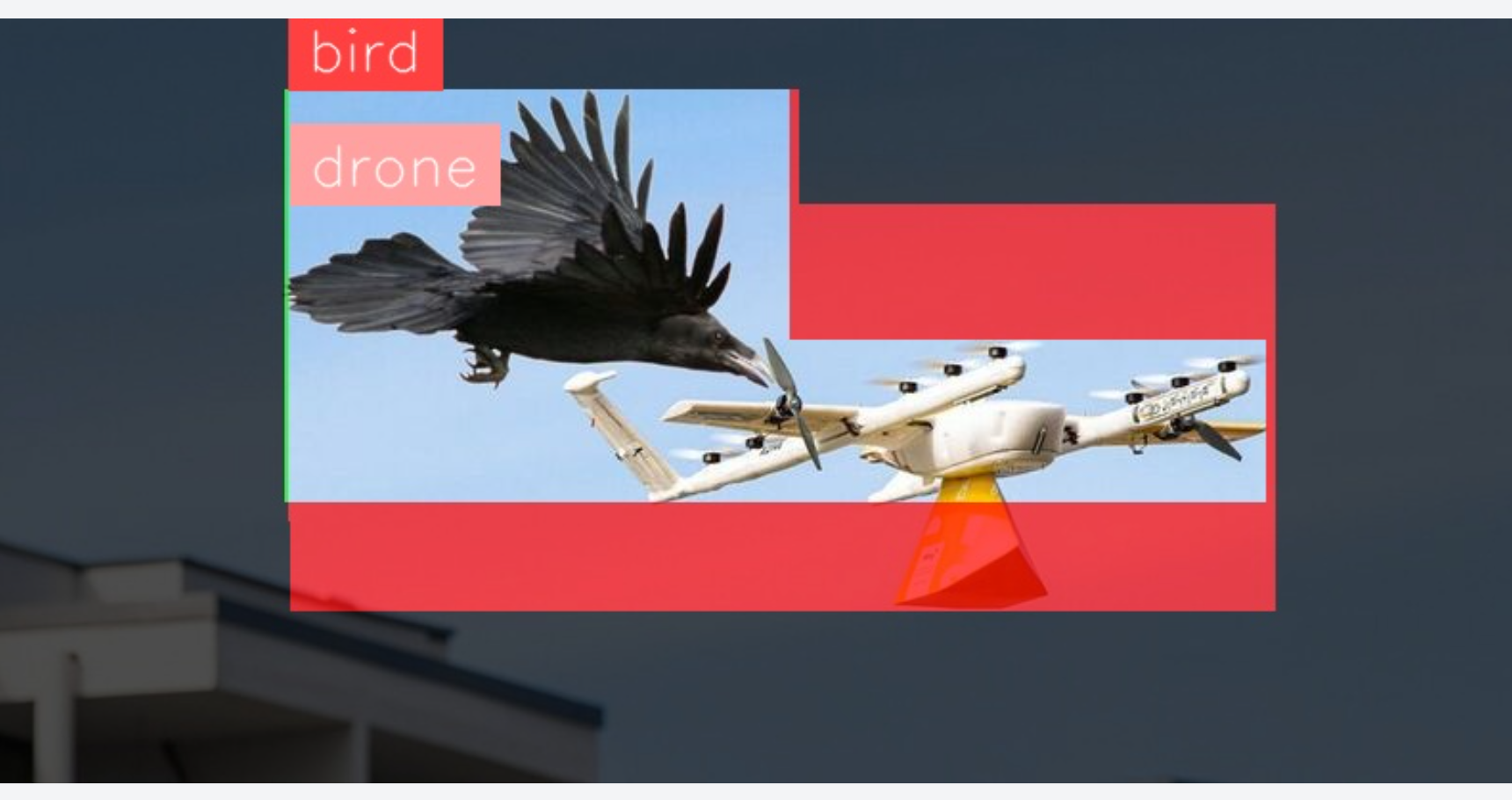

2. Visually Compare Model Predictions Across Versions

Numbers alone rarely tell the full story. Two models with similar metrics may fail in very different ways, so you should visually compare predictions from multiple models on the same images. Visual comparison is especially useful when validating retrained models before deployment, because it confirms that improvements are real and not accidental.

Roboflow’s Model Comparison Visualization block in Workflows addresses this by overlaying predictions from two models on the same image. The overlay highlights where a new model detects objects that a previous model missed and where it removes false positives.
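The same idea can be approximated offline. The sketch below is not the Workflows block itself. It is a plain-Python illustration that assumes boxes are `(x_min, y_min, x_max, y_max)` tuples and treats two boxes as the same detection when their IoU is at least 0.5. The function names and sample boxes are hypothetical, and class labels are ignored for brevity.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)

def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def diff_predictions(
    old: List[Box], new: List[Box], iou_threshold: float = 0.5
) -> Tuple[List[Box], List[Box]]:
    """Return (only_in_new, only_in_old): boxes with no overlapping counterpart.

    This is a simplification: it does not enforce one-to-one matching.
    """
    only_in_new = [n for n in new if all(iou(n, o) < iou_threshold for o in old)]
    only_in_old = [o for o in old if all(iou(o, n) < iou_threshold for n in new)]
    return only_in_new, only_in_old

# Hypothetical predictions from two model versions on the same image.
old_boxes = [(10, 10, 50, 50), (200, 200, 240, 240)]
new_boxes = [(12, 11, 52, 49), (100, 100, 140, 140)]

added, dropped = diff_predictions(old_boxes, new_boxes)
print("Detected only by the new model:", added)
print("Detected only by the old model:", dropped)
```

Boxes that appear only in the new model's output are either newly found objects or new false positives. Boxes that appear only in the old output are either removed false positives or new misses. Telling these apart still takes a visual check or ground-truth labels.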

3. Detect Data Drift Using Production Inference

Production data rarely stays static. Changes in camera hardware, lighting conditions, weather, backgrounds, or object appearance can slowly introduce data drift. Use Roboflow Inference to identify drift: it lets you explore prediction confidence, bounding boxes, masks, and class distributions for real production inputs. Monitor for:

- Low-confidence predictions, which often indicate unfamiliar inputs

- New object appearances not well represented in the training set

- Rising false positives or missed detections on specific devices or locations

These signals are the earliest indicators of data drift. Because Roboflow Inference runs consistently across cloud, edge, and on-device deployments, the same monitoring logic applies everywhere. When drift is detected, affected frames can be captured, reviewed, and added back into the dataset, closing the loop between deployment and retraining.
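The signals listed above can be turned into simple numbers. The following sketch assumes you have already exported predicted class labels and confidences from a baseline period and from recent production traffic. It compares class distributions using total variation distance (one of several reasonable choices) and computes the share of low-confidence predictions. All names, sample data, and the 0.5 cutoff are illustrative assumptions.

```python
from collections import Counter
from typing import Dict, List

def class_distribution(labels: List[str]) -> Dict[str, float]:
    """Fraction of predictions per class."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {cls: n / total for cls, n in counts.items()} if total else {}

def total_variation_distance(p: Dict[str, float], q: Dict[str, float]) -> float:
    """0.0 means identical class distributions, 1.0 means no overlap at all."""
    classes = set(p) | set(q)
    return 0.5 * sum(abs(p.get(c, 0.0) - q.get(c, 0.0)) for c in classes)

def low_confidence_rate(confidences: List[float], threshold: float = 0.5) -> float:
    """Share of predictions whose confidence falls below the threshold."""
    if not confidences:
        return 0.0
    return sum(c < threshold for c in confidences) / len(confidences)

# Hypothetical data: predicted classes at launch versus this week.
baseline = class_distribution(["car"] * 8 + ["truck"] * 2)
production = class_distribution(["car"] * 5 + ["truck"] * 2 + ["bus"] * 3)

shift = total_variation_distance(baseline, production)
print(f"class distribution shift: {shift:.2f}")
print(f"low-confidence rate: {low_confidence_rate([0.9, 0.4, 0.3, 0.8]):.2f}")
```

What counts as "too much" shift depends on the application. A practical starting point is to record these values during a period when the model is known to behave well and alert when they move noticeably away from that range.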

4. Improve Datasets with Active Learning

Not all production input data contributes to model improvement, and labeling every possible variation of an object is costly and time-consuming. Active learning prioritizes the most informative samples from production data.

Use Roboflow's active learning workflow to identify low-confidence predictions, misclassifications, and edge cases from model inference results. Select these samples for re-labeling and add them back to the training dataset.

This approach avoids labeling large amounts of redundant data and focuses annotation effort on data that directly improves model performance. Over time, this targeted dataset refinement improves generalization, reduces errors on edge cases, and leads to more robust computer vision models in production.
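As a rough illustration of how samples might be prioritized, the sketch below ranks images by a least-confidence score and keeps only as many as the labeling budget allows. It is a generic uncertainty-sampling example, not Roboflow's implementation. The log format, function names, and frame IDs are assumptions made for the example.

```python
from typing import Dict, List

def uncertainty(confidences: List[float]) -> float:
    """Least-confidence score: 1 minus the weakest prediction in the image.

    Images with no predictions score 0.0 here; catching missed detections
    needs a separate signal and is out of scope for this sketch.
    """
    return 1.0 - min(confidences) if confidences else 0.0

def select_for_labeling(inference_log: Dict[str, List[float]], budget: int) -> List[str]:
    """Return up to `budget` image IDs, most uncertain first."""
    ranked = sorted(
        inference_log,
        key=lambda image_id: uncertainty(inference_log[image_id]),
        reverse=True,
    )
    return ranked[:budget]

# Hypothetical log: image ID -> confidences of the predictions made on it.
inference_log = {
    "frame_001": [0.97, 0.91],
    "frame_002": [0.42],
    "frame_003": [0.88, 0.35],
    "frame_004": [0.99],
}

print(select_for_labeling(inference_log, budget=2))
```

With this data, `frame_003` and `frame_002` are selected because each contains a weak prediction, while the two confidently predicted frames are skipped.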

Active learning in Roboflow

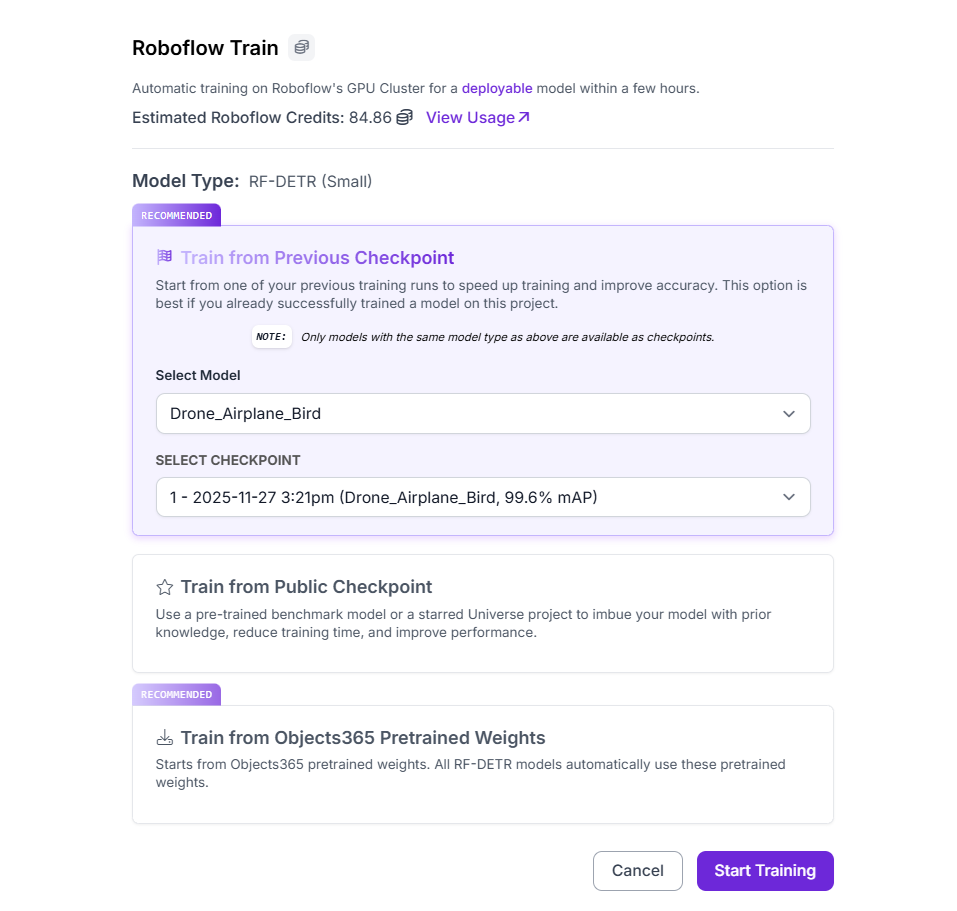

5. Re-Train Models

When new production data is available, retraining should be fast, controlled, and easy to compare. Use versioned datasets, consistent preprocessing, and controlled data augmentation with Roboflow Train.

Each dataset update creates a new version, ensuring training runs are fully reproducible and traceable. Preprocessing and augmentation steps, such as resizing, normalization, lighting variation, and geometric transforms, help models generalize to real-world conditions without changing the core dataset.

Roboflow also supports training from a previous checkpoint, allowing you to start from an earlier successful model instead of training from scratch. This speeds up convergence and improves accuracy when new data represents incremental changes rather than a completely new distribution.

Read more:

- How to Improve the Accuracy of Your Computer Vision Model: A Guide

- What Is Image Preprocessing and Augmentation?

- Getting Started with Data Augmentation in Computer Vision

6. Automate the Feedback Loop

Manual monitoring, labeling, and retraining do not scale in production environments. To remain reliable over time, vision AI systems require an automated feedback loop.

Build automated pipelines that connect production inference, data filtering, and dataset updates with Roboflow Workflows. Instead of being collected manually, inference results such as low-confidence predictions or specific failure cases are automatically captured and routed for review.

Workflows allow teams to define clear rules for which data should be saved, labeled, or retrained on. This ensures that only high-value samples enter the improvement cycle, reducing labeling effort while maximizing model gains. Once reviewed, these samples are added to a new dataset version and used in the next training run.
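To show what "clear rules" might look like in code, here is a small rule-based router in plain Python. It is only a conceptual sketch of the routing logic, not how Workflows is configured. The `Inference` and `Rule` types, the rule names, the 0.5 cutoff, and the `forklift` class are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

@dataclass
class Inference:
    image_id: str
    confidences: List[float]
    classes: List[str]

@dataclass
class Rule:
    name: str
    matches: Callable[[Inference], bool]

# Rules are checked in order; the first match decides where the sample goes.
RULES = [
    Rule("no_detections", lambda inf: not inf.confidences),
    Rule("low_confidence", lambda inf: any(c < 0.5 for c in inf.confidences)),
    Rule("rare_class", lambda inf: "forklift" in inf.classes),
]

def route(inference: Inference, rules: Sequence[Rule] = RULES) -> str:
    """Return the name of the first matching rule, or 'discard' if none match."""
    for rule in rules:
        if rule.matches(inference):
            return rule.name
    return "discard"

samples = [
    Inference("frame_010", [], []),
    Inference("frame_011", [0.92, 0.31], ["pallet", "pallet"]),
    Inference("frame_012", [0.95], ["pallet"]),
]
for sample in samples:
    print(sample.image_id, "->", route(sample))
```

Keeping the rules as data makes them easy to review and change. Anything routed to `discard` never reaches annotators, which is how the labeling effort stays focused on high-value samples.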

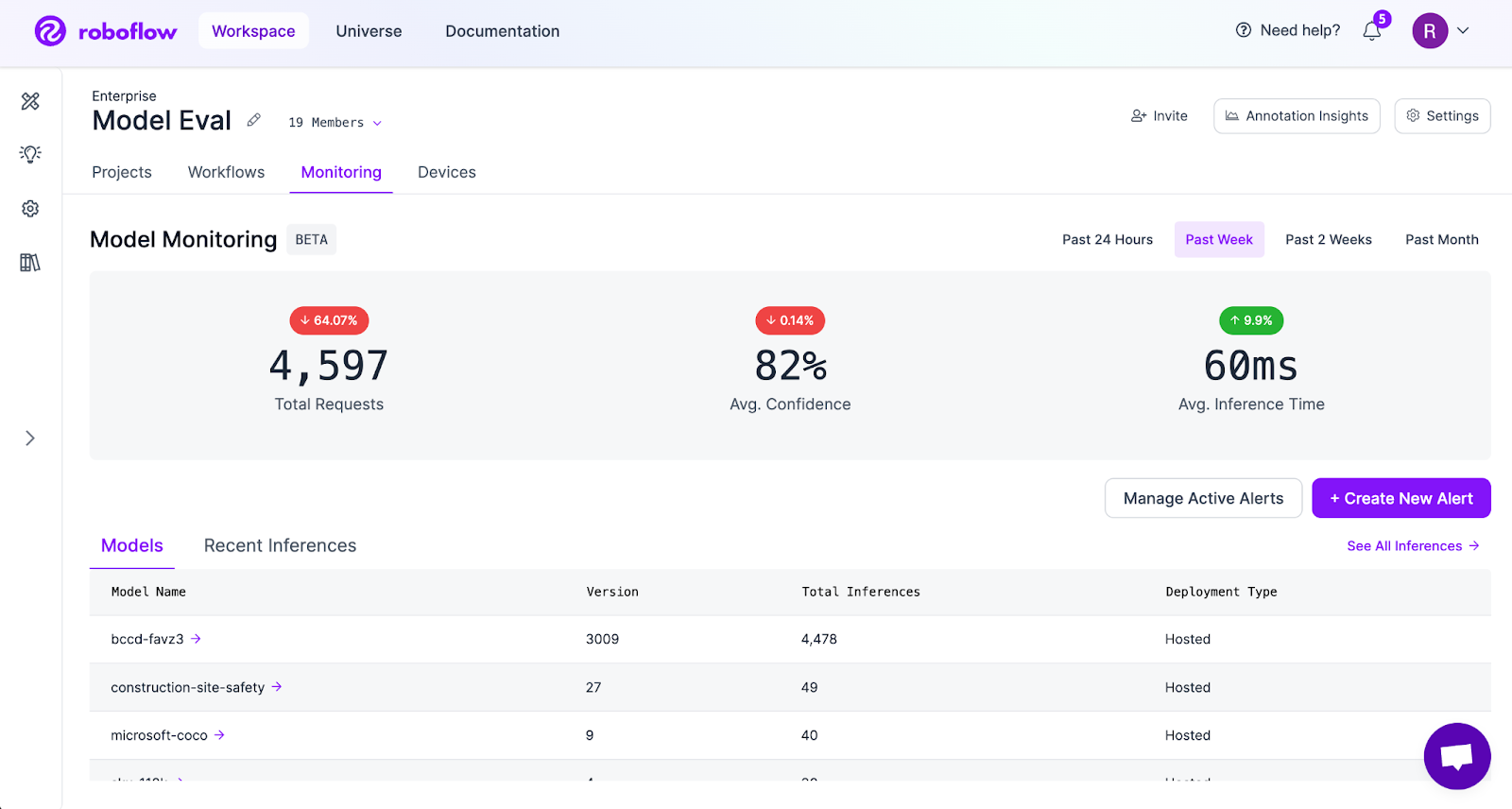

7. Monitor Models in Production

Once a model is live, use Roboflow Model Monitoring to track how it behaves on real production data and to detect drift, failures, and performance degradation. View statistics such as prediction confidence distributions, class frequency, and inference volume.

Attach metadata to each inference, such as device ID, camera location, environment, or time of day. This context helps you slice monitoring data and identify where problems occur. For example, you can easily spot a specific camera producing low-confidence predictions or a location experiencing higher error rates.
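Slicing by metadata can be as simple as a group-by. The sketch below assumes inference records have been exported as dictionaries with a `confidence` value and a `metadata` mapping. That record shape, the `camera_id` key, and the 0.6 alert cutoff are illustrative assumptions and not the format Roboflow returns.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List

def mean_confidence_by(records: List[dict], key: str) -> Dict[str, float]:
    """Average prediction confidence per value of one metadata field."""
    groups = defaultdict(list)
    for record in records:
        groups[record["metadata"][key]].append(record["confidence"])
    return {value: mean(confs) for value, confs in groups.items()}

# Hypothetical exported records with attached metadata.
records = [
    {"confidence": 0.9, "metadata": {"camera_id": "cam_a"}},
    {"confidence": 0.8, "metadata": {"camera_id": "cam_a"}},
    {"confidence": 0.4, "metadata": {"camera_id": "cam_b"}},
    {"confidence": 0.5, "metadata": {"camera_id": "cam_b"}},
]

by_camera = mean_confidence_by(records, "camera_id")
flagged = [camera for camera, avg in by_camera.items() if avg < 0.6]
print("Cameras needing attention:", flagged)
```

Here `cam_b` is flagged because its average confidence is well below that of `cam_a`. The same function works for any other metadata field, such as location or time of day.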

Read more:

- Launch: Computer Vision Model Monitoring with Roboflow

- Retrieve Statistics About Deployed Models in a Workspace

- Attach Metadata to an Inference

How to Monitor and Continuously Improve AI Models Conclusion

In production, computer vision models are living systems. Lighting changes, products evolve, cameras drift, and new edge cases appear. Without monitoring, even the best performing model will slowly lose accuracy and trust. The teams that succeed with vision AI are not the ones that deploy once, but the ones that continuously observe, learn, and adapt.

The core pattern is clear: measure real-world performance, inspect failures visually, detect drift early, prioritize the right data with active learning, retrain with control, and automate the feedback loop. When these steps are connected, model improvement becomes routine instead of reactive. Accuracy gains compound over time, labeling effort stays focused, and production failures are caught before they impact operations.

Roboflow is built around this closed-loop approach. From verified metrics and visual model comparison to production inference monitoring, active learning, retraining, and automated workflows, every part of the platform is designed to keep vision systems reliable long after deployment. Get started today.

Cite this Post

Use the following entry to cite this post in your research:

Timothy M. (Dec 16, 2025). How to Monitor and Improve AI Models in Production. Roboflow Blog: https://blog.roboflow.com/how-to-improve-ai-models/