In this post, we walk through how to train a custom object detection model on your own dataset and export it to TensorFlow Lite, so it can detect your own custom objects.

If you need a fast model on lower-end hardware, this post is for you. Mobile phones and IoT devices have limited compute, which makes optimization an especially important last step before deployment. If you're not sure which model and device to use in your pipeline, follow our guide to help figure that out.

In this tutorial, we will train an object detection model on custom data and convert it to TensorFlow Lite for deployment. We’ll conclude with a .tflite file that you can use in the official TensorFlow Lite Android Demo, iOS Demo, or Raspberry Pi Demo.

A Note about Custom Data

If you don’t have a dataset, you can follow along with the free Public Blood Cell Detection Dataset. If you have an unlabeled dataset, this post covers labeling best practices, and a free Roboflow account lets you label up to 10,000 images.

Let's dive in!

Open up this Colab Notebook to Train TensorFlow Lite Model.

What is TensorFlow Lite?

TensorFlow Lite is the official TensorFlow framework for on-device inference. It is designed for small devices, so predictions run locally instead of making a round trip to a server. This has several advantages: better support for real-time detection, greater privacy, and no need for an internet connection.

Note that TensorFlow Lite isn’t for training models; it’s for bringing them to production. Luckily, the associated Colab Notebook for this post contains all the code to both train your model in TensorFlow and bring it to production in TensorFlow Lite.

Preparing Object Detection Data

The TensorFlow object detection models used here need training data in the TFRecord format. Luckily, Roboflow converts any dataset into this format for us.
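A TFRecord file is a sequence of length-prefixed binary records, each usually holding one serialized tf.train.Example (an image plus its boxes and labels). To illustrate the container format, here is a minimal standard-library sketch that walks the record framing of an uncompressed file. It skips the CRC checks that TensorFlow's own reader performs, and the function names are hypothetical; in practice you would read these files with tf.data.TFRecordDataset.

```python
import struct
from typing import BinaryIO, Iterator


def iter_tfrecord_payloads(stream: BinaryIO) -> Iterator[bytes]:
    """Yield the raw payload of each record in an uncompressed TFRecord stream.

    Record framing:
      uint64 length (little-endian)
      uint32 masked CRC32C of the length
      byte[length] payload (typically a serialized tf.train.Example)
      uint32 masked CRC32C of the payload
    This sketch skips both CRCs instead of verifying them.
    """
    while True:
        header = stream.read(8)
        if not header:
            return  # clean end of stream
        if len(header) < 8:
            raise ValueError("truncated record length")
        (length,) = struct.unpack("<Q", header)
        stream.read(4)  # masked CRC of the length (not checked)
        payload = stream.read(length)
        if len(payload) < length:
            raise ValueError("truncated record payload")
        stream.read(4)  # masked CRC of the payload (not checked)
        yield payload


def count_records(path: str) -> int:
    """Count the records (for example, labeled images) in a TFRecord file."""
    with open(path, "rb") as f:
        return sum(1 for _ in iter_tfrecord_payloads(f))
```

For example, count_records("train/cells.tfrecord") would report how many examples ended up in a hypothetical training file.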

Note: if you have unlabeled data, you will first need to draw bounding boxes around your objects to teach the model to detect them.


Using Your Own Data

To export your own data for this tutorial, sign up for Roboflow and create a public workspace, or create a new public workspace in your existing account. If your data is private, you can upgrade to a paid plan to export it for external training routines like this one, or experiment with Roboflow's internal training solution.

Once your data is labeled, upload your dataset to Roboflow.

After uploading, you will be prompted to choose preprocessing and augmentation options.

After selecting these options, click Generate and then Download. When prompted to choose a data format for your export, choose TensorFlow TFRecord.

Note: in addition to converting data to TFRecords, Roboflow lets you check the health of your dataset, make preprocessing and augmentation decisions, and generate synthetic object detection data, which helps reduce overfitting and cuts the time you spend labeling more data.
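Whatever tool applies them, geometric augmentations must transform the labels along with the pixels. A minimal sketch for a horizontal flip, assuming boxes are stored in pixel coordinates as (xmin, ymin, xmax, ymax); the function is illustrative and is not how Roboflow implements augmentation:

```python
from typing import Tuple

# Assumed convention: (xmin, ymin, xmax, ymax) in pixels.
Box = Tuple[float, float, float, float]


def hflip_box(box: Box, image_width: float) -> Box:
    """Return the box's position after mirroring the image left to right."""
    xmin, ymin, xmax, ymax = box
    # Mirroring maps x -> width - x, so the old right edge becomes the new left edge.
    return (image_width - xmax, ymin, image_width - xmin, ymax)
```

Vertical flips work the same way on the y coordinates, using the image height.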

After export, you will receive a curl command that downloads your data into the training notebook. The notebook has two links to replace: the first is the TFRecord link you just generated; for the second, repeat the export steps with COCO JSON (listed in the dropdown as JSON > COCO).
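The curl command downloads a zip archive that is then unpacked. If you prefer to stay in Python, here is a minimal standard-library sketch of the same two steps. The url argument stands in for the export link Roboflow gives you; the helper names and the default archive filename are hypothetical.

```python
import pathlib
import urllib.request
import zipfile
from typing import List


def download_export(url: str, zip_path: str = "roboflow.zip") -> str:
    """Download the export archive (urllib follows HTTP redirects, like curl -L)."""
    urllib.request.urlretrieve(url, zip_path)
    return zip_path


def extract_export(zip_path: str, dest_dir: str) -> List[str]:
    """Unzip the archive into dest_dir and return the .tfrecord files it contained."""
    dest = pathlib.Path(dest_dir)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(dest)
    return sorted(str(p) for p in dest.rglob("*.tfrecord"))
```

Usage would look like extract_export(download_export(YOUR_EXPORT_LINK), "data"), where YOUR_EXPORT_LINK is a placeholder for your own link.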

Lastly, we map our training data files to variables for use in our training pipeline configuration.
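A minimal sketch of that mapping step, assuming the unzipped export contains train/ and valid/ folders with one .tfrecord each and a *_label_map.pbtxt next to the training records (check this against your own download; the dictionary keys are hypothetical variable names). It also counts the classes in the label map, since the training configuration needs that number:

```python
import glob
import os
import re
from typing import Dict


def map_dataset_files(root: str) -> Dict[str, str]:
    """Locate the record and label map files inside an extracted export.

    Assumed layout (hypothetical; verify against your export):
      root/train/*.tfrecord, root/valid/*.tfrecord, root/train/*label_map.pbtxt
    """
    def only_match(subdir: str, pattern: str) -> str:
        matches = sorted(glob.glob(os.path.join(root, subdir, pattern)))
        if len(matches) != 1:
            raise FileNotFoundError(
                f"expected one {pattern!r} in {subdir!r}, found {len(matches)}")
        return matches[0]

    return {
        "train_record_fname": only_match("train", "*.tfrecord"),
        "test_record_fname": only_match("valid", "*.tfrecord"),
        "label_map_pbtxt_fname": only_match("train", "*label_map.pbtxt"),
    }


def num_classes_from_label_map(pbtxt_text: str) -> int:
    """Count distinct 'id: N' entries in a label map in protobuf text format."""
    return len(set(re.findall(r"\bid\s*:\s*(\d+)", pbtxt_text)))
```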

Training Custom TensorFlow Model

Because TensorFlow Lite cannot train models, we first train a TensorFlow 1 model, MobileNet Single Shot Detector (SSD) v2, and convert it afterward.

Rather than writing the training code from scratch, this tutorial builds on a previous post, How to Train a TensorFlow MobileNet Object Detection Model, which explains why we chose the MobileNet architecture:

“This specific architecture, researched by Google, is optimized for lightweight inference, enabling it to perform well natively on compute-constrained mobile and embedded devices”

We won't go into detail here. To learn more about training, refer to the MobileNet training tutorial and the accompanying MobileNet Object Detection Colab Notebook.

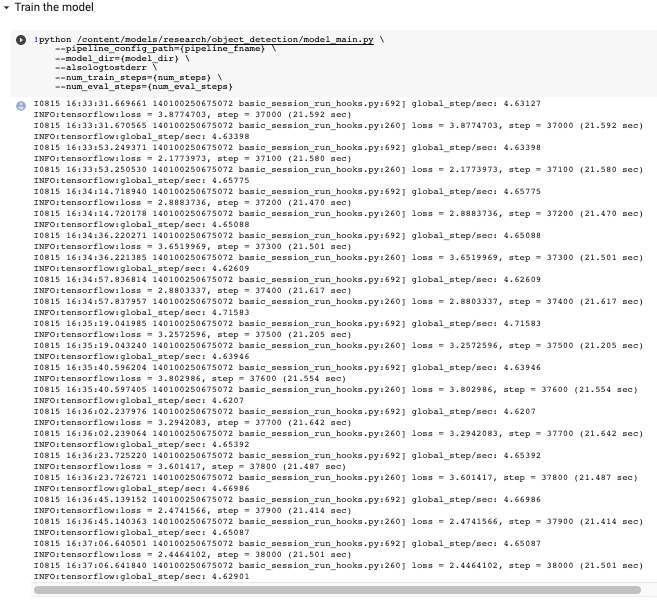

At a high level, we take the following steps in the Colab Notebook to Train TensorFlow Lite Model to train our custom object detection model:

- Install TensorFlow object detection library and dependencies

- Import dataset from Roboflow in TFRecord format

- Write custom model configuration

- Start custom TensorFlow object detection training job

- Export frozen inference graph in .pb format

- Make inferences on test images to make sure our detector is functioning
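The "write custom model configuration" step edits a pipeline.config file in the Object Detection API's protobuf text format. A minimal regex-based sketch of that edit, assuming the layout of the API's sample SSD configs (one training input reader followed by one evaluation input reader); the function name and parameters are hypothetical, and the notebook's own cell may differ:

```python
import re


def customize_pipeline_config(config_text: str, *, num_classes: int, batch_size: int,
                              num_steps: int, fine_tune_checkpoint: str,
                              train_record: str, test_record: str, label_map: str) -> str:
    """Point a text-format pipeline.config at a custom dataset.

    Assumes one train_input_reader followed by one eval_input_reader, each with
    a single input_path and a label_map_path (as in the sample SSD configs).
    """
    def set_int(text: str, field: str, value: int) -> str:
        return re.sub(rf"\b{field}:\s*\d+", lambda m: f"{field}: {value}", text)

    def set_str(text: str, field: str, value: str) -> str:
        # A function replacement avoids escape processing of backslashes in paths.
        return re.sub(rf'\b{field}:\s*".*?"', lambda m: f'{field}: "{value}"', text)

    text = set_int(config_text, "num_classes", num_classes)
    text = set_int(text, "batch_size", batch_size)
    text = set_int(text, "num_steps", num_steps)
    # Does not touch fine_tune_checkpoint_type, whose name differs before the colon.
    text = set_str(text, "fine_tune_checkpoint", fine_tune_checkpoint)
    text = set_str(text, "label_map_path", label_map)  # train and eval readers

    # The first input_path belongs to training, the second to evaluation.
    records = [train_record, test_record]
    if len(re.findall(r'\binput_path:\s*".*?"', text)) != len(records):
        raise ValueError("expected exactly one train and one eval input_path")
    remaining = iter(records)
    return re.sub(r'\binput_path:\s*".*?"',
                  lambda m: f'input_path: "{next(remaining)}"', text)
```

Regex edits are brittle but keep the sketch dependency-free; parsing the file as a protobuf (for example with the Object Detection API's config_util module) is more robust.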

Converting a SavedModel to TensorFlow Lite

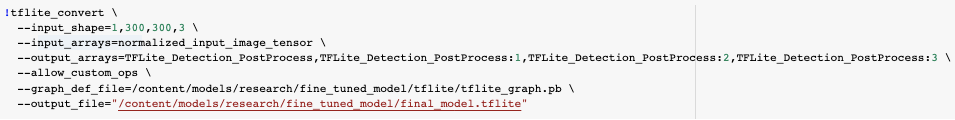

Once you have an exported model (from the provided Colab Notebook or your own source), use the TensorFlow Lite Converter. In TensorFlow 1, the converter accepts either a SavedModel or a frozen .pb graph. Google recommends using its Python API, though it also provides a command line tool that does the job.

We use the command line converter in the notebook because it’s simpler.

Converting the .pb model to .tflite with the command line converter

Be sure to set the input shape you want for deployment. Smaller input shapes run faster but can reduce accuracy.
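If you script the conversion instead of typing it into a notebook cell, here is a minimal sketch that builds the TensorFlow 1 tflite_convert command line as an argument list. It assumes the .pb was produced by the Object Detection API's export_tflite_ssd_graph.py script, whose standard input and output tensor names are used below; verify them against your own graph. The input size should match the resolution the graph was exported at (300×300 in the default SSD MobileNet v2 config). The helper name is hypothetical.

```python
from typing import List, Tuple

# Tensor names produced by export_tflite_ssd_graph.py (assumed; check your graph).
SSD_INPUT = "normalized_input_image_tensor"
SSD_OUTPUTS = [
    "TFLite_Detection_PostProcess",    # boxes
    "TFLite_Detection_PostProcess:1",  # classes
    "TFLite_Detection_PostProcess:2",  # scores
    "TFLite_Detection_PostProcess:3",  # number of detections
]


def tflite_convert_args(graph_def_file: str, output_file: str,
                        input_size: Tuple[int, int] = (300, 300)) -> List[str]:
    """Build a TF1 tflite_convert command line for an SSD detection graph."""
    height, width = input_size
    return [
        "tflite_convert",
        f"--graph_def_file={graph_def_file}",
        f"--output_file={output_file}",
        f"--input_shapes=1,{height},{width},3",  # batch, height, width, RGB channels
        f"--input_arrays={SSD_INPUT}",
        f"--output_arrays={','.join(SSD_OUTPUTS)}",
        "--inference_type=FLOAT",
        "--allow_custom_ops",  # TFLite_Detection_PostProcess is a custom op
    ]
```

You could run it with subprocess.run(tflite_convert_args("tflite_graph.pb", "detect.tflite"), check=True) in an environment where the TensorFlow 1.x tflite_convert tool is on the PATH.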

Deploying Your Custom TensorFlow Lite Model

Lastly, we download our TensorFlow Lite model from the Colab Notebook.

To deploy your model on device, check out the official TensorFlow Lite Android Demo, iOS Demo, or Raspberry Pi Demo. You can simply clone one of these repositories, drop in your .tflite file, and build according to the repo’s README.
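The demo apps handle post-processing for you, but if you write your own integration you will need to interpret the model's four outputs. Here is a minimal sketch, assuming the standard outputs of an SSD export (boxes as normalized [ymin, xmin, ymax, xmax], class indices, scores, and a detection count, with the batch dimension already dropped) and class indices that start at 0 for the first entry of your label list. Both conventions are assumptions to check against your model, and the function and its parameters are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple


def decode_detections(boxes: Sequence[Sequence[float]],
                      classes: Sequence[float],
                      scores: Sequence[float],
                      num_detections: float,
                      labels: Sequence[str],
                      image_size: Tuple[int, int],
                      score_threshold: float = 0.5) -> List[Dict[str, object]]:
    """Convert SSD post-processing outputs into labeled pixel boxes.

    Assumes normalized [ymin, xmin, ymax, xmax] boxes and 0-based class
    indices into `labels`; verify both for your model.
    """
    height, width = image_size

    def clamp(v: float) -> float:
        return min(1.0, max(0.0, float(v)))  # keep coordinates inside the image

    detections = []
    count = min(int(num_detections), len(scores))
    for i in range(count):
        if scores[i] < score_threshold:
            continue  # drop low-confidence detections
        ymin, xmin, ymax, xmax = (clamp(v) for v in boxes[i])
        detections.append({
            "label": labels[int(classes[i])],
            "score": float(scores[i]),
            # Pixel box as (xmin, ymin, xmax, ymax).
            "box": (xmin * width, ymin * height, xmax * width, ymax * height),
        })
    return detections
```

With the Python tf.lite.Interpreter, you would read the four arrays with get_tensor() and pass the first element of each, dropping the batch dimension.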

Roboflow also offers a mobile iOS SDK if your application needs to run on iPhones or iPads.

You’re ready to run on-device inference 💥

As always - happy detecting!