In this tutorial, we will train the state-of-the-art EfficientNet convolutional neural network to classify images, using a custom dataset and custom classes. To run this tutorial on your own custom dataset, you only need to change one line of code for your dataset import.

Today, we will train EfficientNet using the Keras framework in Google Colab. We train our classifier to recognize rock, paper, scissors hand gestures, but the tutorial is written generally, so you can use this approach to classify your images into any set of classes, given the right supervision in your dataset.


The Custom Classification Task

Given an image, we want to assign it to one class from a set of possible class labels. Our model extracts features from the image, passes these features through a deep neural network, and outputs one probability per class, corresponding to the likelihood that the image belongs to that class. The class with the highest output probability is taken as the model's prediction.
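To make the last step concrete, here is a minimal NumPy sketch of how raw network scores become class probabilities and a prediction. It assumes a softmax output layer, which is the usual choice for multi-class classifiers; the scores and the class order are made up for illustration.

```python
import numpy as np

def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    shifted = logits - np.max(logits)  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()

class_names = ["paper", "rock", "scissors"]  # hypothetical label order
logits = np.array([2.0, 0.5, -1.0])          # hypothetical raw scores from the network

probs = softmax(logits)
predicted = class_names[int(np.argmax(probs))]  # index of the highest probability
print(predicted, probs.round(3))
```

Softmax preserves the ranking of the scores, so taking the argmax of the probabilities picks the same class as taking the argmax of the raw scores; the probabilities are mainly useful for reporting confidence.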

In our tutorial, we will train a model to classify the rock, paper, scissors hand gestures from the popular game.

Why Use EfficientNet for Classification

Research

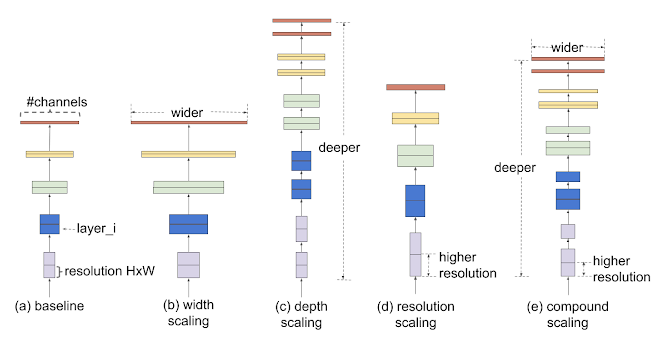

EfficientNet is a state-of-the-art convolutional neural network, released open source by Google Brain. The primary contribution of EfficientNet was to thoroughly study how to efficiently scale the size of convolutional neural networks. For example, one could make a ConvNet larger by increasing the width of its layers, its depth (number of layers), the input image resolution, or a combination of all three.
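The EfficientNet paper scales all three levers together with a single compound coefficient phi: depth grows as alpha^phi, width as beta^phi, and resolution as gamma^phi, with the constants chosen so that alpha * beta^2 * gamma^2 is about 2 (each step of phi roughly doubles compute). The sketch below uses the constants reported in the paper for the B0 baseline; note that the released B1 to B7 models use rounded, tabulated coefficients, so this is the idealized rule rather than their exact settings.

```python
# Compound scaling rule from the EfficientNet paper: for a chosen
# coefficient phi, depth, width, and resolution are scaled together.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # constants reported for the B0 baseline

def compound_scale(phi):
    depth = ALPHA ** phi       # multiplier on the number of layers
    width = BETA ** phi        # multiplier on the number of channels
    resolution = GAMMA ** phi  # multiplier on the input image side length
    return depth, width, resolution

def flops_factor(phi):
    # FLOPs grow roughly with depth * width^2 * resolution^2,
    # so each +1 in phi multiplies compute by ALPHA * BETA^2 * GAMMA^2 (about 2).
    return (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi

for phi in range(4):
    d, w, r = compound_scale(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, "
          f"resolution x{r:.2f}, FLOPs x{flops_factor(phi):.2f}")
```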

EfficientNet forms the backbone of the state-of-the-art object detector EfficientDet. Object detection goes one step further, localizing as well as classifying objects in an image. If you need localization, I recommend this tutorial on how to train EfficientDet, this YOLOv4 Tutorial, or this YOLOv5 Tutorial.

Performance

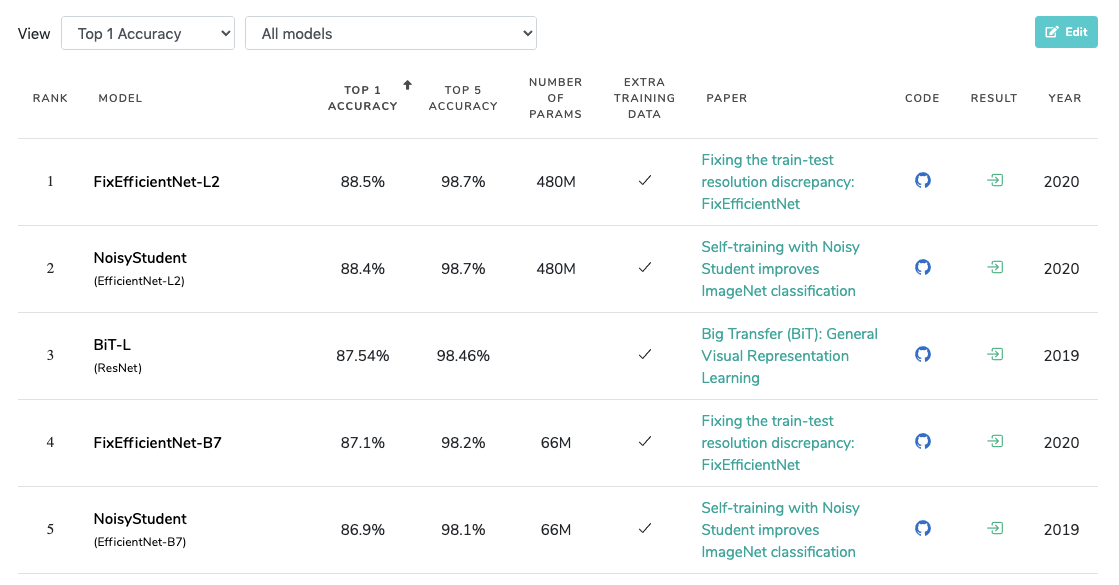

At the time of writing, EfficientNet is the top-performing convolutional neural network family for image classification. Image classifiers are typically benchmarked on ImageNet, an image database organized according to the WordNet hierarchy; its standard classification benchmark (ILSVRC-2012) contains about 1.28 million labeled training images across 1,000 classes.

As you can see in the table, four of the top five approaches to the ImageNet task are based on EfficientNet.

As a nice added bonus, the EfficientNet models we use in this tutorial have been pretrained on ImageNet, meaning that they already have a solid understanding of general features used to classify images.

Let's get started!

Import EfficientNet Dependencies

As you work through this tutorial, I recommend opening this Colab Notebook, Training EfficientNet, in tandem. The notebook is based on the original tutorial by DLology and has been updated to fix software versioning issues, and dataset import and creation now flow through Roboflow.

First, make a copy of the notebook so you can edit it, and make sure you set your Runtime Type to GPU to take advantage of the free GPU resources.

The first step in the notebook is to select the correct TensorFlow environment, because the codebase still runs on TensorFlow 1.x. We also check our Keras version; in this pass we are using Keras 2.3.1. Then we import some packages and clone the EfficientNet Keras repository.
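If you want a quick, environment-agnostic way to confirm which versions are installed, the standard-library sketch below reads package metadata (Python 3.8+). It is an alternative to the notebook's own version check, not a copy of it, and it assumes the distributions are named `tensorflow` and `keras`; some TensorFlow 1.x installs use `tensorflow-gpu` instead.

```python
from importlib import metadata

def installed_version(package):
    """Return the installed version of a package, or None if it is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None

for pkg in ("tensorflow", "keras"):
    version = installed_version(pkg)
    print(f"{pkg}: {version or 'not installed'}")

# This tutorial's code path expects TensorFlow 1.x and Keras 2.3.1.
tf_version = installed_version("tensorflow")
if tf_version is not None and not tf_version.startswith("1."):
    print("Warning: this notebook was written for TensorFlow 1.x")
```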

Import EfficientNet and Choose EfficientNet Model

The biggest contribution of EfficientNet was to study how ConvNets can be efficiently scaled up. In this notebook, you can take advantage of that fact!

In the line `from efficientnet import EfficientNetB0 as Net`, you can choose the size of model you would like to use: EfficientNetB0, EfficientNetB1, EfficientNetB2, or EfficientNetB3. Larger models generally perform better, but training slows down as the model grows, and you may run out of GPU memory on the free Colab GPUs.
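To see what "larger" means for these four variants, the sketch below lists the width and depth coefficients and native input resolutions published with the original EfficientNet models, and applies a channel-rounding rule modeled on the reference implementation (assuming a divisor of 8) to B0's 1280-channel final feature layer. The `efficientnet` package used in the notebook may differ in small details, so treat the numbers as illustrative.

```python
# (width_coefficient, depth_coefficient, native_resolution) for the variants
# named above, as published with the original EfficientNet models.
VARIANTS = {
    "EfficientNetB0": (1.0, 1.0, 224),
    "EfficientNetB1": (1.0, 1.1, 240),
    "EfficientNetB2": (1.1, 1.2, 260),
    "EfficientNetB3": (1.2, 1.4, 300),
}

def round_filters(filters, width_coefficient, divisor=8):
    """Scale a channel count by the width coefficient, rounding to a multiple of divisor."""
    filters *= width_coefficient
    new_filters = max(divisor, int(filters + divisor / 2) // divisor * divisor)
    if new_filters < 0.9 * filters:  # never round down by more than 10%
        new_filters += divisor
    return int(new_filters)

for name, (width, depth, resolution) in VARIANTS.items():
    head = round_filters(1280, width)  # B0's final feature layer has 1280 channels
    print(f"{name}: native input {resolution}px, depth x{depth}, "
          f"{head} features into the classifier head")
```

Wider variants feed more features into the classifier head, and deeper variants add more layers, which is where the extra training time and GPU memory go.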

Next, before loading the model, we choose the input resolution. We start with 150 x 150 here to conserve GPU memory and to get a feel for the classification script, but it may be worth scaling this up for your task later.
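The input resolution directly sets the size of the feature map the backbone produces. Assuming the EfficientNet backbone downsamples with five stride-2 stages and "same" padding (an overall stride of 32), a 150 x 150 input yields a 5 x 5 grid, which matches the `(None, 5, 5, 1280)` output shape in the model summary later in this post. A small sketch of that arithmetic:

```python
import math

def feature_map_size(input_size, num_stride2_stages=5):
    """Spatial size after the backbone's stride-2 stages with 'same' padding."""
    size = input_size
    for _ in range(num_stride2_stages):
        size = math.ceil(size / 2)  # 'same' padding rounds up on odd sizes
    return size

print(feature_map_size(150))  # 150 -> 75 -> 38 -> 19 -> 10 -> 5
print(feature_map_size(224))
```

Raising the resolution enlarges the feature map quadratically, which is why memory use grows quickly as you scale up.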

Creating a Custom EfficientNet Training Dataset

Now, you can import your own data and use transfer learning to teach EfficientNet to classify images into your custom classes.

If you are just following along with the tutorial, we recommend using this public rock, paper, scissors dataset.

If you are bringing your own data, we recommend uploading it to Roboflow for preprocessing and augmentation. First, sign up for a free account. Then click Create Dataset and drag and drop your images to upload them. Make sure your images are in the following folder structure:

```
folder
├── class1
│   └── images
├── class2
│   └── images
└── ...
```

Folder structure for classification dataset
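Before uploading, it can help to sanity-check that every class folder actually contains images and that the classes are reasonably balanced. The helper below is a hypothetical convenience, not part of the notebook or of Roboflow; it assumes common image extensions and searches recursively, so it works whether images sit directly in each class folder or in a nested `images` subfolder.

```python
from pathlib import Path

IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp"}  # assumed set of image types

def count_images_per_class(root):
    """Map each class folder under root to the number of image files it contains."""
    counts = {}
    for class_dir in sorted(p for p in Path(root).iterdir() if p.is_dir()):
        counts[class_dir.name] = sum(
            1
            for f in class_dir.rglob("*")
            if f.is_file() and f.suffix.lower() in IMAGE_EXTENSIONS
        )
    return counts

# Example: count_images_per_class("folder") -> {"class1": ..., "class2": ...}
```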

***Using Your Own Data***

To export your own data for this tutorial, sign up for Roboflow and make a public workspace, or create a new public workspace in your existing account. If your data is private, you can upgrade to a paid plan to export it for external training routines like this one, or experiment with Roboflow's internal training solution.

Then choose your dataset version settings, Generate the version, and Download your dataset as a curl link for import into the Colab notebook.

Once we have the download curl link, we can replace the one line in the notebook where it says "Your Link Here".
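The notebook downloads the export with curl. If you prefer to stay in Python, a standard-library sketch of the same download-and-unzip step might look like this; the function name, the `dataset` destination folder, and the temporary `export.zip` filename are hypothetical choices, and `YOUR_LINK_HERE` stands for the export link you copied from Roboflow.

```python
import urllib.request
import zipfile
from pathlib import Path

def download_and_extract(url, dest="dataset"):
    """Download a zipped dataset export and unpack it into dest."""
    dest = Path(dest)
    dest.mkdir(parents=True, exist_ok=True)
    archive = dest / "export.zip"             # hypothetical temporary filename
    urllib.request.urlretrieve(url, archive)  # fetch the archive to disk
    with zipfile.ZipFile(archive) as zf:
        zf.extractall(dest)                   # unpack the class folders
    archive.unlink()                          # remove the zip once extracted
    return dest

# download_and_extract("YOUR_LINK_HERE")  # placeholder: paste your export link
```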

For the purposes of this tutorial, we train on only a small subset of 25 images, so we filter the dataset accordingly. Of course, you will want to scale up training on your own dataset. Then we pass our data through a training generator, which prepares batches of images at training time.

Importantly, when creating the training generator we set the batch_size that the model will use during training. The higher the batch size, the more images pass through the network at once and the faster training runs, but a large batch may exceed the memory the Colab GPU can handle.
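To illustrate how batch_size trades steps for memory, here is a minimal pure-Python stand-in for a training generator. It is a hypothetical sketch, not the Keras generator the notebook uses: it shuffles the sample order once per call and yields fixed-size batches, and `steps_per_epoch` shows how many batches one pass over the data takes.

```python
import math
import random

def batch_generator(samples, batch_size, shuffle=True, seed=0):
    """Yield lists of samples of size batch_size (the last batch may be smaller)."""
    order = list(samples)
    if shuffle:
        random.Random(seed).shuffle(order)  # shuffle the sample order for this pass
    for start in range(0, len(order), batch_size):
        yield order[start:start + batch_size]

def steps_per_epoch(num_samples, batch_size):
    """Number of batches needed to see every sample once."""
    return math.ceil(num_samples / batch_size)
```

For example, 100 images with a batch size of 16 take 7 steps per epoch; doubling the batch size roughly halves the steps, but each step must hold twice as many images in GPU memory.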

✅ Dataset ready to train

Creating a Custom EfficientNet Training Job

Next, we set up the infrastructure to run a training job on our dataset. We choose the number of epochs to train for. More epochs let the model fit the training data more closely, but training takes longer and the model may start to overfit.

Next, we build the classification head on top of the network so that its output layer has one unit for each class in our dataset.
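The head that follows the backbone is small. The NumPy sketch below walks one image's features through the same sequence of layers listed in the model summary in this post (global max pooling, dropout, then a Dense layer with one unit per class). The feature map and weights are random placeholders, and the softmax on the output is an assumption about how `fc_out` is activated.

```python
import numpy as np

rng = np.random.default_rng(0)
num_classes = 3  # rock, paper, scissors

# Stand-in for the backbone output on one 150x150 image: a 5x5 grid of 1280 features.
feature_map = rng.standard_normal((5, 5, 1280)).astype(np.float32)

# Global max pooling keeps the strongest activation of each channel.
pooled = feature_map.max(axis=(0, 1))  # shape (1280,)

# Dropout only zeroes activations during training, so at inference
# the pooled features pass through unchanged.

# Dense output layer: one weight column and one bias per class.
weights = rng.standard_normal((1280, num_classes)).astype(np.float32) * 0.01
bias = np.zeros(num_classes, dtype=np.float32)
logits = pooled @ weights + bias  # shape (num_classes,)

# Softmax turns the scores into class probabilities.
exp = np.exp(logits - logits.max())
probs = exp / exp.sum()
print(pooled.shape, logits.shape, probs.round(3))
```

Changing `num_classes` only changes the width of the final Dense layer, which is why adapting the network to a new dataset touches so little of the model.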

Here is a summary of our model's architecture:

```
Model: "sequential_1"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
efficientnet-b0 (Model)      (None, 5, 5, 1280)        4049564
_________________________________________________________________
gap (GlobalMaxPooling2D)     (None, 1280)              0
_________________________________________________________________
dropout_out (Dropout)        (None, 1280)              0
_________________________________________________________________
fc_out (Dense)               (None, 3)                 3843
=================================================================
Total params: 4,053,407
Trainable params: 3,843
Non-trainable params: 4,049,564
```

EfficientNet model architecture built in this tutorial
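The parameter counts in the summary can be checked by hand. A Dense layer has one weight per input/output pair plus one bias per unit, pooling and dropout have no weights, and the trainable count equaling the head's parameters indicates the EfficientNet backbone is frozen during this stage of training.

```python
def dense_params(in_features, units):
    """A Dense layer has one weight per input-output pair plus one bias per unit."""
    return in_features * units + units

backbone_params = 4_049_564           # efficientnet-b0 row of the summary (frozen)
head_params = dense_params(1280, 3)   # fc_out: 1280 pooled features -> 3 classes
pooling_and_dropout_params = 0        # gap and dropout_out have no weights

total = backbone_params + pooling_and_dropout_params + head_params
print(f"head: {head_params:,}  total: {total:,}")
```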

Run Custom EfficientNet Training

Now that we have imported our dataset and set up the training job, we are ready to train our custom classification model!

We reference the train_generator and the number of epochs and kick off training.

```
Epoch 1/50
22/25 [=========================>....] - ETA: 1s - loss: 3.6326 - acc: 0.2632Epoch 1/50
25/25 [==============================] - 3s 101ms/step - loss: 4.7799 - acc: 0.1978
25/25 [==============================] - 12s 470ms/step - loss: 3.5314 - acc: 0.2727 - val_loss: 4.7799 - val_acc: 0.1978
Epoch 2/50
21/25 [========================>.....] - ETA: 0s - loss: 3.2819 - acc: 0.3205Epoch 1/50
25/25 [==============================] - 1s 51ms/step - loss: 4.8272 - acc: 0.2308
25/25 [==============================] - 3s 114ms/step - loss: 3.0902 - acc: 0.3407 - val_loss: 4.8272 - val_acc: 0.2308
Epoch 3/50
24/25 [===========================>..] - ETA: 0s - loss: 2.4715 - acc: 0.3214Epoch 1/50
25/25 [==============================] - 1s 55ms/step - loss: 4.4176 - acc: 0.2418
25/25 [==============================] - 3s 109ms/step - loss: 2.4724 - acc: 0.3068 - val_loss: 4.4176 - val_acc: 0.2418
Epoch 4/50
24/25 [===========================>..] - ETA: 0s - loss: 2.0287 - acc: 0.4253Epoch 1/50
25/25 [==============================] - 1s 56ms/step - loss: 4.1211 - acc: 0.2527
25/25 [==============================] - 3s 120ms/step - loss: 2.0432 - acc: 0.4066 - val_loss: 4.1211 - val_acc: 0.2527
Epoch 5/50
23/25 [==========================>...] - ETA: 0s - loss: 2.2813 - acc: 0.3625Epoch 1/50
25/25 [==============================] - 1s 54ms/step - loss: 4.1572 - acc: 0.2527
25/25 [==============================] - 3s 103ms/step - loss: 2.2516 - acc: 0.3750 - val_loss: 4.1572 - val_acc: 0.2527
Epoch 6/50
23/25 [==========================>...] - ETA: 0s - loss: 2.6184 - acc: 0.3976Epoch 1/50
25/25 [==============================] - 1s 54ms/step - loss: 4.0033 - acc: 0.2527
25/25 [==============================] - 3s 118ms/step - loss: 2.6760 - acc: 0.3736 - val_loss: 4.0033 - val_acc: 0.2527
```

Running classification training ⏰

If training hangs for more than 10 minutes on Epoch 1/50, you may have run out of GPU memory, even though no error is shown. You may need to reduce the size of the training set, the batch size, or both.

While training runs, watch for the training loss to move downward and the validation accuracy to increase. Validation accuracy is measured on a portion of your dataset that the model does not see during training.
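Keras's `fit` and `fit_generator` return a `History` object whose `history` attribute is a dictionary of per-epoch metric lists. The sketch below pastes in the values from the six epochs of the log above by hand and finds the epochs with the best validation accuracy and the lowest validation loss; with a real run you would pass `history.history` instead. The helper name is hypothetical.

```python
# Per-epoch metrics copied from the six epochs of the training log above,
# laid out like the dictionary Keras stores in History.history.
history = {
    "loss":     [3.5314, 3.0902, 2.4724, 2.0432, 2.2516, 2.6760],
    "val_loss": [4.7799, 4.8272, 4.4176, 4.1211, 4.1572, 4.0033],
    "val_acc":  [0.1978, 0.2308, 0.2418, 0.2527, 0.2527, 0.2527],
}

def best_epoch(values, mode="max"):
    """Return the 1-based epoch with the best value (earliest epoch wins ties)."""
    pick = max if mode == "max" else min
    return values.index(pick(values)) + 1

print("best val_acc at epoch", best_epoch(history["val_acc"], "max"))
print("lowest val_loss at epoch", best_epoch(history["val_loss"], "min"))
```

Tracking the best epoch this way is a simple manual version of what early stopping or checkpointing on a validation metric automates.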

Examine EfficientNet Training Results

After training your custom EfficientNet classification model, you can view graphs of your training job's metrics.
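If you want to draw these curves yourself, a minimal matplotlib sketch could plot training against validation loss and accuracy from a Keras-style history dictionary. It assumes the metric keys `loss`, `val_loss`, `acc`, and `val_acc`, matching the names in the training log above; the notebook's own plotting code may differ.

```python
import matplotlib
matplotlib.use("Agg")  # render to a file; not needed inside a notebook
import matplotlib.pyplot as plt

def plot_history(history, path="training_curves.png"):
    """Plot training vs. validation loss and accuracy from a Keras-style history dict."""
    epochs = range(1, len(history["loss"]) + 1)
    fig, (ax_loss, ax_acc) = plt.subplots(1, 2, figsize=(10, 4))
    ax_loss.plot(epochs, history["loss"], label="train loss")
    ax_loss.plot(epochs, history["val_loss"], label="val loss")
    ax_loss.set_xlabel("epoch")
    ax_loss.legend()
    ax_acc.plot(epochs, history["acc"], label="train acc")
    ax_acc.plot(epochs, history["val_acc"], label="val acc")
    ax_acc.set_xlabel("epoch")
    ax_acc.legend()
    fig.tight_layout()
    fig.savefig(path)
    plt.close(fig)
    return path
```

With a real run, call `plot_history(history.history)` on the object returned by training. A widening gap between the training and validation curves is the usual sign of overfitting.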

Fine Tuning Custom EfficientNet Model

Next, we can fine-tune the last few layers of our network, hopefully to squeeze out some additional performance. Most of the network stays frozen; only the parameters in the last few layers, which contribute most directly to the class prediction, are updated.
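The freezing logic can be sketched without a deep learning framework. Keras layers expose a boolean `trainable` attribute; the hypothetical `Layer` class below mimics only that attribute so the selection logic is easy to test, and the layer names are placeholders rather than EfficientNet's real layer names. With an actual Keras model you would loop over the model's (or backbone's) `layers`, set `trainable` the same way, and recompile before continuing training so the change takes effect, typically with a low learning rate.

```python
from dataclasses import dataclass

@dataclass
class Layer:
    """Hypothetical stand-in for a Keras layer: only the name and trainable flag matter here."""
    name: str
    trainable: bool = False

def unfreeze_last(layers, n):
    """Freeze every layer except the last n; return the names left trainable."""
    for i, layer in enumerate(layers):
        layer.trainable = i >= len(layers) - n
    return [layer.name for layer in layers if layer.trainable]

backbone = [Layer(f"block{i}") for i in range(1, 8)] + [Layer("top_conv")]
print(unfreeze_last(backbone, 2))
```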

Use Custom EfficientNet Model for Inference

And now the best part!

We can run a test image through our custom EfficientNet model for test inference. The classifier returns a probability for each of the possible classes, and we take the class with the maximum probability as the model's prediction.

Running inference on our test image yields:

```
('paper', 0.9996512, array([[9.9965119e-01, 1.3654739e-04, 2.1229258e-04]], dtype=float32))
```

Our classifier identifies the above image as paper with 99.9% probability!
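The label in that tuple comes from mapping the index of the largest probability back to a class name. Assuming the training generator was built with Keras's `flow_from_directory` and no explicit class list, class indices follow the alphanumeric order of the folder names, which puts `paper` at index 0, consistent with the output above. A sketch of the decoding step using the probabilities from that output:

```python
import numpy as np

# Class names sorted alphanumerically, the order flow_from_directory uses
# to assign indices to subfolders when no explicit class list is given.
class_names = sorted(["rock", "paper", "scissors"])  # ['paper', 'rock', 'scissors']

# Probabilities copied from the inference output above (batch of one image).
probs = np.array([[9.9965119e-01, 1.3654739e-04, 2.1229258e-04]], dtype=np.float32)

index = int(np.argmax(probs[0]))
label, confidence = class_names[index], float(probs[0, index])
print(label, f"{confidence:.2%}")
```

If your folders are named differently, keep the class list in the same order the generator used, otherwise predictions will be mapped to the wrong labels.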

Saving Custom EfficientNet Model Weights for Posterity

Finally, we save our classification model's weights and export them for future use in an application.

We also provide an example in the notebook of how to load the model back in for future inference.

Conclusion

Congratulations! Now you know how to train EfficientNet, a state-of-the-art convolutional neural network, on your own dataset for image classification. You can now leverage state-of-the-art deep learning to adapt artificial intelligence to new domains.

We hope you enjoyed this tutorial! Happy classifying 🧐 And try an object detection tutorial next!

Cite this Post

Use the following entry to cite this post in your research:

Jacob Solawetz. (Jul 30, 2020). How to Train an EfficientNet Model with a Custom Dataset. Roboflow Blog: https://blog.roboflow.com/how-to-train-efficientnet/