Deploying computer vision models to the edge is critical to unlocking new use cases, such as in places with limited internet connectivity or where minimal latency is essential. That might mean deploying to a rural field in agriculture or running computer vision in high-throughput manufacturing.

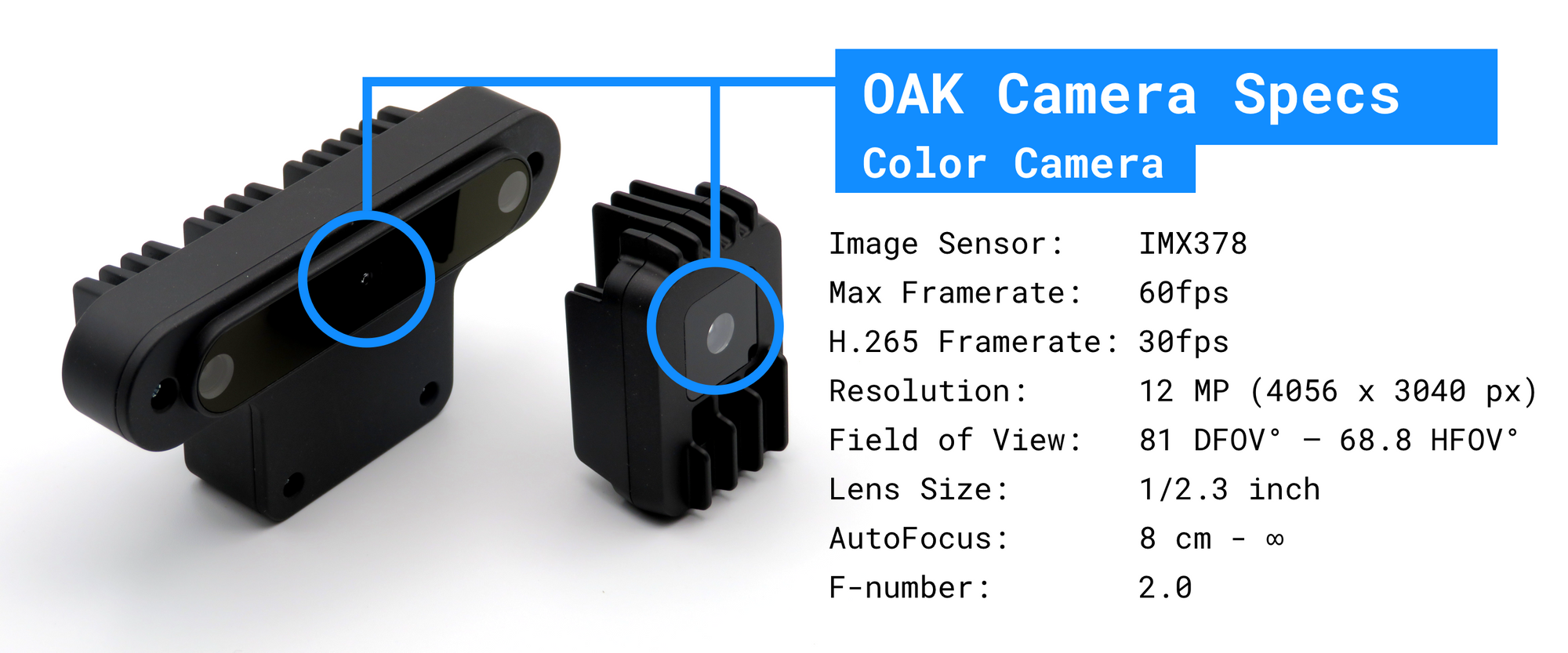

As a part of our partnership with OpenCV, we're excited to introduce an even easier way to deploy models to the edge on the Luxonis OpenCV AI Kit (OAK-1 and OAK-D) – a powerful set of devices that include a 4K camera and neural processing in one unit. Roboflow users can deploy a Docker container with their model directly to their OAK device, eliminating model conversion and dependency issues that silently degrade model performance.

(With this update, OAK joins the roster of Roboflow-supported hardware, which also covers deploying computer vision models to the NVIDIA Jetson.)

OpenCV AI Kit has been met with exceptional excitement. Since a record-breaking $1.35M Kickstarter campaign last summer, tens of thousands of global users have adopted OAK to build computer vision products. The team behind OAK at Luxonis is ushering in a new wave of spatial AI and embedded computer vision. (Hear more about that in our interview with Luxonis CEO, Brandon Gilles.)

Note that users can already use Roboflow with their OAK devices by training their own models, converting model formats, and getting the model onto the OAK. But we've heard from users that the process can be error-prone: setting up training infrastructure, converting model formats, and wrestling with dependencies can introduce silent errors that degrade model performance.

Building a Robust Machine Learning Operations Pipeline

As of today, users can use Roboflow Train to train a custom model on GPU in one click and then download their model directly to their OAK via a Docker container. No more asking, "Did my model finish training at the right spot? How do I convert my model to OpenVINO? Which dependencies do I need to install?"

Also, after a model has finished training but before it is deployed, users can easily prototype it with a fully hosted web API to assess performance. This enables much more rapid iteration and removes the guesswork about how the model will perform on the device.

One final benefit of combining OAK and Roboflow is easily collecting data from the field for continued model improvement. Using the Roboflow Upload API, developers can collect data directly from what their device is seeing. For example, one could collect data if-and-only-if model confidence is below a given threshold or if their end user indicates there's an issue with detections. This failure case data is the bread-and-butter of active learning, which enables models to improve faster and with less data required.
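The collection rule described above can be sketched in a few lines of Python. This is a minimal illustration, not Roboflow's implementation: the `Detection` class, the `should_collect` function, and the default threshold of 0.5 are all hypothetical names and values chosen for the example, and the upload step itself is left out because it depends on the Upload API details in the Roboflow docs.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    """One predicted object. Hypothetical structure, for illustration only."""
    label: str
    confidence: float  # model confidence in the range [0, 1]


def should_collect(detections: List[Detection],
                   threshold: float = 0.5,
                   user_flagged: bool = False) -> bool:
    """Decide whether a frame is worth sending back for labeling.

    A frame is collected when the end user flags a problem, or when at
    least one detection falls below the confidence threshold. A frame
    with no detections is not collected by this rule unless it is flagged.
    """
    if user_flagged:
        return True
    return any(d.confidence < threshold for d in detections)


# Example: one confident detection and one uncertain detection.
frame_detections = [Detection("weed", 0.91), Detection("weed", 0.32)]
if should_collect(frame_detections, threshold=0.5):
    # In a real deployment, this is where the frame would be queued
    # for upload to the dataset.
    print("queue frame for upload")
```

Keeping the decision in a small pure function makes it easy to tune the threshold or add other triggers without touching the capture or upload code.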

We're dedicated to making the machine learning operations pipeline simpler to eliminate errors in model performance. As OpenCV CEO Satya Mallick notes, "We have partnered with Roboflow and Luxonis to make the AI pipeline ridiculously simple for programmers."

Try It Out

If you already have an OAK, you can get started for free. Simply request Roboflow Train directly in Roboflow and reply to the email with your OAK serial number. (If you don't yet have an OAK, email us for a discount.)

Then, follow our tutorial on how to deploy a custom model to OAK. Be sure to read the docs, too.

Follow this step-by-step deployment guide to deploy models trained with Roboflow Train to OAK devices with our Python package (roboflowoak). DepthAI and OpenVINO are required on your host device for the package to work.

- roboflowoak (PyPI)

We can't wait to see what you build!