Vector databases play an essential role in Large Language Model (LLM) and Large Multimodal Model (LMM) applications. For example, you can use a vector database like Pinecone to store text and image embeddings for use in a Retrieval Augmented Generation (RAG) pipeline.

Using a vector database, you can retrieve candidate information to include as context in an LLM or LMM prompt.
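Here is a minimal pure-Python sketch of what that retrieval step does conceptually. It ranks stored embeddings by cosine similarity to a query embedding and keeps the top results as prompt context. The helper names, the toy 3-dimensional vectors, and the prompt wording are hypothetical. Pinecone runs this kind of search server-side over an index rather than with a linear scan like this one.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k_context(query_vector, documents, k=2):
    """Return the text of the k documents whose embeddings are closest to the query.

    `documents` is an in-memory list of (text, embedding) pairs; this linear
    scan stands in for the indexed search a vector database performs.
    """
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query_vector, doc[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]

# Toy "embeddings" with hypothetical values, for illustration only.
docs = [
    ("Buses carry passengers on fixed routes.", [0.9, 0.1, 0.0]),
    ("Broccoli is a green vegetable.", [0.0, 0.2, 0.9]),
    ("Zebras have black and white stripes.", [0.1, 0.9, 0.1]),
]

context = top_k_context([1.0, 0.0, 0.1], docs, k=1)
prompt = "Answer using this context:\n" + "\n".join(context) + "\nQuestion: What does a bus do?"
print(prompt)
```

Only the closest passage (the one about buses) ends up in the prompt. A real RAG pipeline would compute the query and document embeddings with a model such as CLIP instead of using hand-written vectors.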

In this guide, we are going to show you how to calculate and load image embeddings into Pinecone using Roboflow Inference. Roboflow Inference is a fast, scalable tool you can use to run state-of-the-art vision models, including CLIP. You can use CLIP to calculate image embeddings.

By the end of this guide, you will have:

- Calculated CLIP embeddings with Roboflow Inference.

- Loaded image embeddings into Pinecone; and

- Run a vector search using embeddings and Pinecone.

Without further ado, let’s get started!

What is Pinecone?

Pinecone is a vector database in which you can store data and embeddings. You can store embeddings from models like OpenAI's text embedding model, OpenAI's CLIP model, Hugging Face models, and more. Pinecone provides SDKs in a range of languages, including Python, which provide language-native ways to interact with Pinecone vector databases.
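In this guide, each item stored in Pinecone is a record with an ID, a vector, and optional metadata. The sketch below builds one such record as a plain dict, in the same shape the ingestion script in Step #2 passes to `index.upsert`. The helper name `make_record` and the sample filename are hypothetical.

```python
import uuid

def make_record(embedding, filename):
    """Build one record in the dict shape used with index.upsert(vectors=[...])."""
    return {
        "id": str(uuid.uuid4()),             # random unique ID for this item
        "values": list(embedding),           # the embedding vector itself
        "metadata": {"filename": filename},  # extra data returned alongside query matches
    }

record = make_record([0.1, 0.2, 0.3], "bus.jpg")
print(record["metadata"])  # {'filename': 'bus.jpg'}
```

Storing the filename as metadata is what lets a search result be traced back to a file on disk.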

Step #1: Install Roboflow Inference

We are going to use Roboflow Inference to calculate CLIP embeddings. Roboflow Inference is an open source solution for running vision models at scale. You can use Inference to run fine-tuned object detection, classification, and segmentation models, as well as foundation models such as Segment Anything and CLIP.

You can run Inference on your own device or through the Roboflow API. In this guide, we run Inference on our own device, which is ideal if you have the compute resources to calculate CLIP embeddings locally. We will also show you how to use the hosted Roboflow CLIP API, which uses the same interface as Inference running on your machine.
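Because both options share an interface, switching between them amounts to changing the base URL. This sketch builds the CLIP endpoint URLs used later in this guide. The `clip_endpoint` helper and the `use_hosted` flag are hypothetical; the two base URLs and the `embed_image`/`embed_text` routes come from this guide.

```python
from urllib.parse import urlencode

LOCAL_SERVER_URL = "http://localhost:9001"        # started by `inference server start`
HOSTED_SERVER_URL = "https://infer.roboflow.com"  # hosted Roboflow CLIP API

def clip_endpoint(route, api_key, use_hosted=False):
    """Build a CLIP endpoint URL; only the base URL differs between local and hosted."""
    base = HOSTED_SERVER_URL if use_hosted else LOCAL_SERVER_URL
    return f"{base}/clip/{route}?{urlencode({'api_key': api_key})}"

print(clip_endpoint("embed_image", "MY_KEY"))
# http://localhost:9001/clip/embed_image?api_key=MY_KEY
print(clip_endpoint("embed_text", "MY_KEY", use_hosted=True))
# https://infer.roboflow.com/clip/embed_text?api_key=MY_KEY
```

Using `urlencode` also escapes any characters in the key that are not URL-safe.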

First, install Docker. Refer to the official Docker installation instructions for information on how to install Docker on the device on which you want to run Inference.

Next, install the Roboflow Inference CLI. This tool lets you start an Inference server with one command. You can install the Inference CLI using the following command:

```bash
pip install inference-cli
```

Start an Inference server:

```bash
inference server start
```

This command will start an Inference server at http://localhost:9001. We will use this server in the next step to calculate CLIP embeddings.
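Before moving on, you can confirm that something is listening at that address. This is a minimal standard-library sketch. The `is_server_up` helper is hypothetical, and it treats any HTTP response, including an error status, as "up" so that it does not depend on any particular Inference route.

```python
import urllib.error
import urllib.request

def is_server_up(url="http://localhost:9001", timeout=2.0):
    """Return True if any HTTP response comes back from `url`, False otherwise."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        return True  # the server answered, just with a non-2xx status
    except (urllib.error.URLError, OSError):
        return False  # connection refused, DNS failure, timeout, etc.

print(is_server_up())
```

`HTTPError` is a subclass of `URLError`, so it has to be caught first for error statuses to count as a live server.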

Step #2: Set Up a Pinecone Database

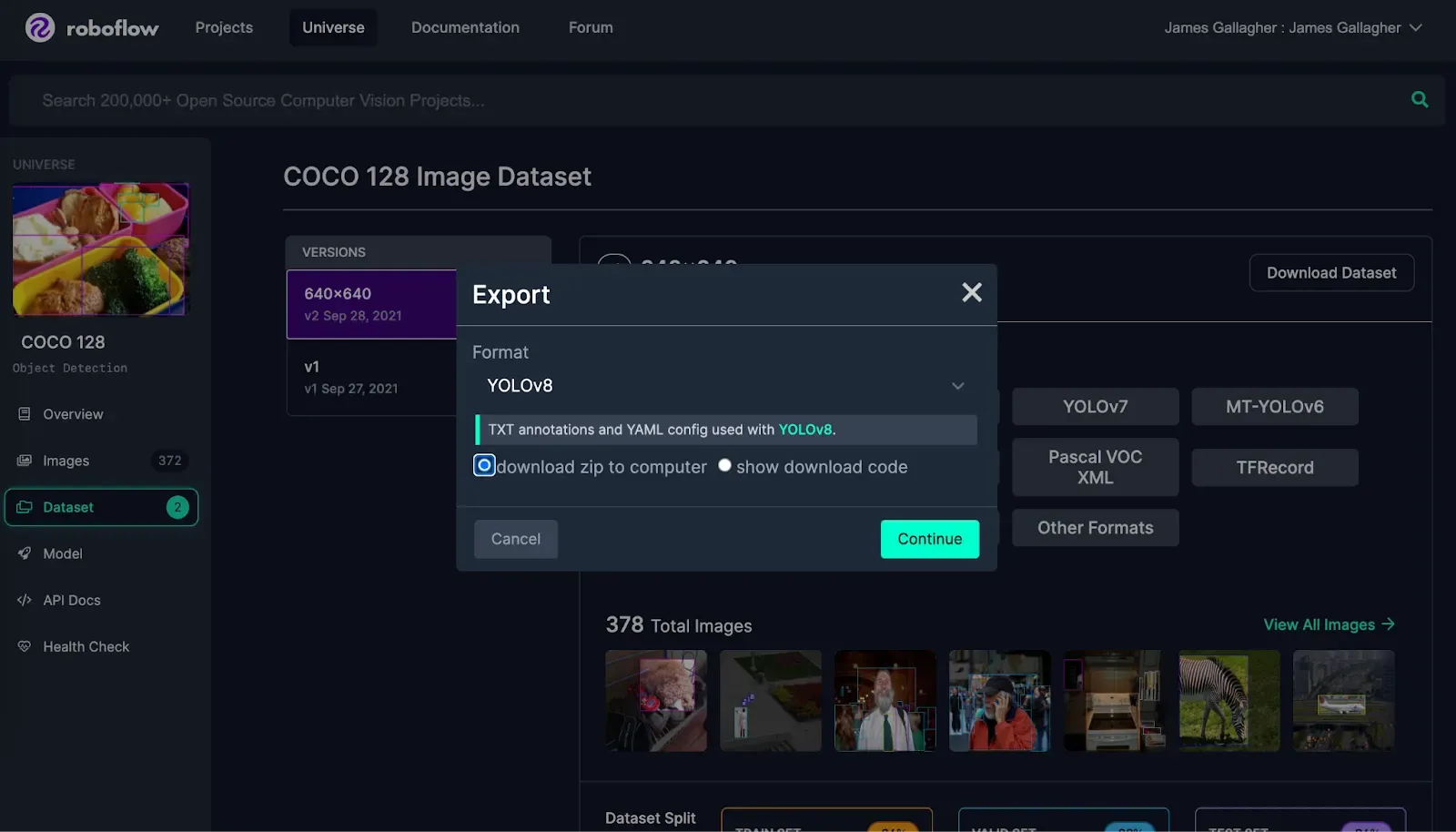

We are going to create a search engine for a folder of images. For this guide, we will use images from the COCO 128 dataset, which contains a wide range of different images. The dataset contains objects such as planes, zebras, and broccoli.

You can download the dataset from Roboflow Universe as a ZIP file, or use your own folder of images.
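If you use your own folder, it may contain files that are not images. The ingestion script later in this guide passes every entry returned by `os.listdir` to CLIP. Here is a sketch of a filter you could apply first. The `list_images` helper and the extension set are assumptions, so adjust them to the formats in your folder.

```python
import os
import tempfile

IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}  # assumption: edit for your dataset

def list_images(image_dir):
    """Return sorted image filenames in image_dir, skipping other files and subfolders."""
    return sorted(
        name
        for name in os.listdir(image_dir)
        if os.path.isfile(os.path.join(image_dir, name))
        and os.path.splitext(name)[1].lower() in IMAGE_EXTENSIONS
    )

# Usage: a throwaway folder with a mix of files.
with tempfile.TemporaryDirectory() as tmp:
    for name in ["a.jpg", "b.PNG", "notes.txt", ".DS_Store"]:
        with open(os.path.join(tmp, name), "w"):
            pass  # create an empty file
    found = list_images(tmp)

print(found)  # ['a.jpg', 'b.PNG']
```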

Before we can set up a Pinecone vector database, we need to install the Pinecone Python SDK which we will use to interact with Pinecone. You can do so using the following command:

```bash
pip install pinecone-client
```

Next, create a new Python file and add the following code:

```python
import os

from pinecone import Pinecone, PodSpec

# Read the Pinecone API key from the PINECONE_API_KEY environment variable.
pc = Pinecone(api_key=os.environ["PINECONE_API_KEY"])

# Create a 512-dimensional index that ranks results by cosine similarity.
pc.create_index(
    name="images",
    dimension=512,
    metric="cosine",
    spec=PodSpec(environment="gcp-starter", pod_type="starter"),
)
```

This code will create an index called "images", which we will work with for the rest of this guide.

To run it, create a Pinecone account. After creating your account, retrieve your API key from the "API Keys" page linked on the Pinecone Console. You do not need to create an index in the Pinecone dashboard, as our code snippet will do that.

Set the PINECONE_API_KEY environment variable to your Pinecone API key before running the script. If your project uses an environment other than gcp-starter (shown on the Pinecone API Keys page), update the PodSpec arguments to match your project.
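The `dimension` and `metric` arguments have concrete consequences. Every vector you upsert must have exactly 512 values, and with `metric="cosine"` results are ranked by the angle between vectors, so scaling a vector does not change its score. The sketch below illustrates both points in plain Python. `check_dimension` and `cosine_similarity` are hypothetical helpers, not part of the Pinecone SDK.

```python
import math

INDEX_DIMENSION = 512  # the `dimension` passed to pc.create_index

def check_dimension(vector, expected=INDEX_DIMENSION):
    """Raise before upserting if a vector does not match the index dimension."""
    if len(vector) != expected:
        raise ValueError(f"expected {expected} values, got {len(vector)}")
    return vector

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 same direction, 0.0 orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

a = [1.0, 2.0, 2.0]
b = [2.0, 4.0, 4.0]  # same direction as a, twice the length
print(cosine_similarity(a, b))                    # 1.0
print(cosine_similarity([1.0, 0.0], [0.0, 1.0]))  # 0.0

check_dimension([0.0] * 512)  # passes; a 3-value vector would raise ValueError
```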

Next, create a new Python file and add the following code:

```python
import base64
import os
import uuid

import requests
from pinecone import Pinecone

pc = Pinecone(api_key=os.environ["PINECONE_API_KEY"])
index = pc.Index("images")

IMAGE_DIR = "images/train/images/"
API_KEY = os.environ.get("ROBOFLOW_API_KEY")
SERVER_URL = "http://localhost:9001"

vectors = []

for image in os.listdir(IMAGE_DIR):
    print(f"Processing image {image}")

    # Read the image and encode it as base64 for the Inference API.
    with open(os.path.join(IMAGE_DIR, image), "rb") as f:
        encoded_image = base64.b64encode(f.read()).decode("utf-8")

    infer_clip_payload = {
        "image": {
            "type": "base64",
            "value": encoded_image,
        },
    }

    # Ask the Inference server for a CLIP embedding of the image.
    res = requests.post(
        f"{SERVER_URL}/clip/embed_image?api_key={API_KEY}",
        json=infer_clip_payload,
    )
    res.raise_for_status()  # stop early if the server returned an error

    embeddings = res.json()["embeddings"]

    # Keep the filename as metadata so search results can be traced back to files.
    vectors.append({"id": str(uuid.uuid4()), "values": embeddings[0], "metadata": {"filename": image}})

# Write all vectors to the "images" index.
index.upsert(vectors=vectors)
```

Above, we iterate over all images in the folder images/train/images/ and compute a CLIP embedding for each image using Roboflow Inference. The script reads your Roboflow API key from the ROBOFLOW_API_KEY environment variable. If you want to use the hosted Roboflow CLIP API to calculate CLIP vectors, replace the SERVER_URL value with https://infer.roboflow.com.

We save all vectors in the Pinecone index called "images".

Run the code above to populate your index with an embedding for each image in your dataset.
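The ingestion script sends every vector in a single `upsert` call. That works for a small folder like COCO 128, but a large folder can exceed Pinecone's per-request limits. One common approach is to upsert in fixed-size batches. The `batched` helper below is hypothetical, and the batch size of 100 is an assumption, so check Pinecone's documentation for the current limits.

```python
def batched(items, batch_size=100):
    """Yield successive slices of at most batch_size items."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]

# Usage with dummy 512-dimensional records.
vectors = [{"id": str(i), "values": [0.0] * 512} for i in range(250)]
sizes = [len(batch) for batch in batched(vectors)]
print(sizes)  # [100, 100, 50]

# With a real Pinecone index you would write:
# for batch in batched(vectors):
#     index.upsert(vectors=batch)
```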

Step #3: Run a Search Query

With all of our image embeddings calculated, we can now run a search query.

To query our Pinecone index, we need an embedding for the query text. We can calculate a CLIP text embedding using Roboflow Inference or the hosted Roboflow CLIP API. Because CLIP maps text and images into the same embedding space, we can pass the text embedding to Pinecone to retrieve the images whose embeddings are most similar to it.
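The whole search flow is three steps: embed the text, query the index, and read the filenames out of the matches. The sketch below expresses that flow with the two network calls passed in as functions, so it can run without a server. `search_by_text`, `fake_embed`, and `fake_query` are hypothetical. The response shape (`matches`, each with `score` and `metadata`) is assumed to match how this guide indexes Pinecone query results.

```python
def search_by_text(query, embed_text, query_index, top_k=3):
    """Embed `query`, search the index, and return (filename, score) pairs.

    embed_text: callable mapping a string to a vector, e.g. a wrapper around
        the /clip/embed_text request.
    query_index: callable mapping (vector, top_k) to a response shaped like
        {"matches": [{"score": ..., "metadata": {"filename": ...}}, ...]}.
    """
    vector = embed_text(query)
    response = query_index(vector, top_k)
    return [(m["metadata"]["filename"], m["score"]) for m in response["matches"]]

# Stand-ins for the real Inference and Pinecone calls (hypothetical values).
def fake_embed(text):
    return [1.0, 0.0]

def fake_query(vector, top_k):
    matches = [
        {"score": 0.31, "metadata": {"filename": "bus.jpg"}},
        {"score": 0.12, "metadata": {"filename": "zebra.jpg"}},
    ]
    return {"matches": matches[:top_k]}

print(search_by_text("bus", fake_embed, fake_query, top_k=1))  # [('bus.jpg', 0.31)]
```

Keeping the network calls injectable makes it easy to test the result handling separately from the servers.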

Let’s search for “bus”.

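```python
import os

import requests
from pinecone import Pinecone

# Connect to the same index and Inference server used during ingestion.
pc = Pinecone(api_key=os.environ["PINECONE_API_KEY"])
index = pc.Index("images")
API_KEY = os.environ.get("ROBOFLOW_API_KEY")
SERVER_URL = "http://localhost:9001"

# Calculate a CLIP text embedding for the query.
infer_clip_payload = {
    "text": "bus",
}

res = requests.post(
    f"{SERVER_URL}/clip/embed_text?api_key={API_KEY}",
    json=infer_clip_payload,
)
res.raise_for_status()

embeddings = res.json()["embeddings"]

# Find the stored image embedding closest to the text embedding.
results = index.query(
    vector=embeddings[0],
    top_k=1,
    include_metadata=True,
)

print(results["matches"][0]["metadata"]["filename"])
```

This code calculates a CLIP text embedding for the query "bus" and uses it as a search query in Pinecone. It connects to the same index and server as the ingestion script, so you can run it as a separate file. With top_k=1, we retrieve the single image whose vector is closest to the text embedding. Here are the results: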

```
000000000471_jpg.rf.faa1965b86263f4b92754c0495695c7e.jpg
```

Note: The files are in the IMAGE_DIR you defined earlier.

Opening the returned file from IMAGE_DIR shows that our code returned an image of a bus as the top result, indicating that our search system works.
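If you raise top_k, you may want every match as a full path you can open. This sketch assumes the query response can be indexed the same way as in the search snippet (`results["matches"][i]["metadata"]["filename"]`). `match_paths` is a hypothetical helper, and the sample response is a hand-built dict containing the filename from the result above, not real Pinecone output.

```python
import os

IMAGE_DIR = "images/train/images/"

def match_paths(results, image_dir=IMAGE_DIR):
    """Turn each match's filename metadata into a path under image_dir."""
    return [os.path.join(image_dir, m["metadata"]["filename"]) for m in results["matches"]]

sample = {
    "matches": [
        {"metadata": {"filename": "000000000471_jpg.rf.faa1965b86263f4b92754c0495695c7e.jpg"}},
    ]
}
print(match_paths(sample))
# ['images/train/images/000000000471_jpg.rf.faa1965b86263f4b92754c0495695c7e.jpg']
```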

Conclusion

Pinecone is a vector database that you can use to store data and embeddings such as those calculated using CLIP.

You can store image and text embeddings in Pinecone, then query your vector database to find the results whose vectors are most similar to a given query vector. Pinecone uses approximate nearest-neighbor search, so queries stay fast without comparing the query against every stored vector.

In this guide, we walked through how to calculate CLIP image embeddings with Roboflow Inference. We then demonstrated how to save these embeddings in Pinecone. We showed an example query that illustrates a successful vector search using our embeddings.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Nov 28, 2023). How to Load Image Embeddings into Pinecone. Roboflow Blog: https://blog.roboflow.com/pinecone-roboflow-inference-clip/