We are introducing RF-DETR, a state-of-the-art real-time object detection model. RF-DETR outperforms all existing object detection models on real-world datasets and is the first real-time model to achieve more than 60 mean Average Precision (mAP) on the COCO benchmark. RF-DETR is available under the open source Apache 2.0 license.

RF-DETR is accompanied by a paper, RF-DETR: Neural Architecture Search for Real-Time Detection Transformers, which is available on arXiv. The paper contains detailed information about the RF-DETR model architecture, our benchmark results, and more.

RF-DETR is available on GitHub and for finetuning via a Colab Notebook.

RF-DETR is a real-time, transformer-based object detection architecture designed to transfer well across a wide variety of domains and to datasets both large and small. It belongs to the "DETR" (detection transformer) family of models. It is built for projects that need a model that runs at high speed with a high degree of accuracy, often on limited compute such as edge devices or in low-latency applications.

Today, we are introducing two model sizes: RF-DETR-base (29M parameters) and RF-DETR-large (128M parameters).

Model Performance and Benchmarks

Object detection models face a mini crisis in evaluation. Common Objects in Context (COCO), a canonical 163k-image benchmark from Microsoft first introduced in 2014, hasn't been updated since 2017. At the same time, models have gotten very good and are used in contexts well beyond "common objects." New state-of-the-art models often improve COCO mAP by only fractions of a point while turning to other datasets (LVIS, Objects365) to demonstrate generalizability.

This is part of our motivation for introducing a new model: we aim to show not only competitive performance on existing benchmarks like COCO, but also why domain adaptability ought to be a top-level consideration alongside latency. As COCO performance becomes increasingly saturated for models of similar size, a model's ability to adapt, especially to novel domains, grows in importance.

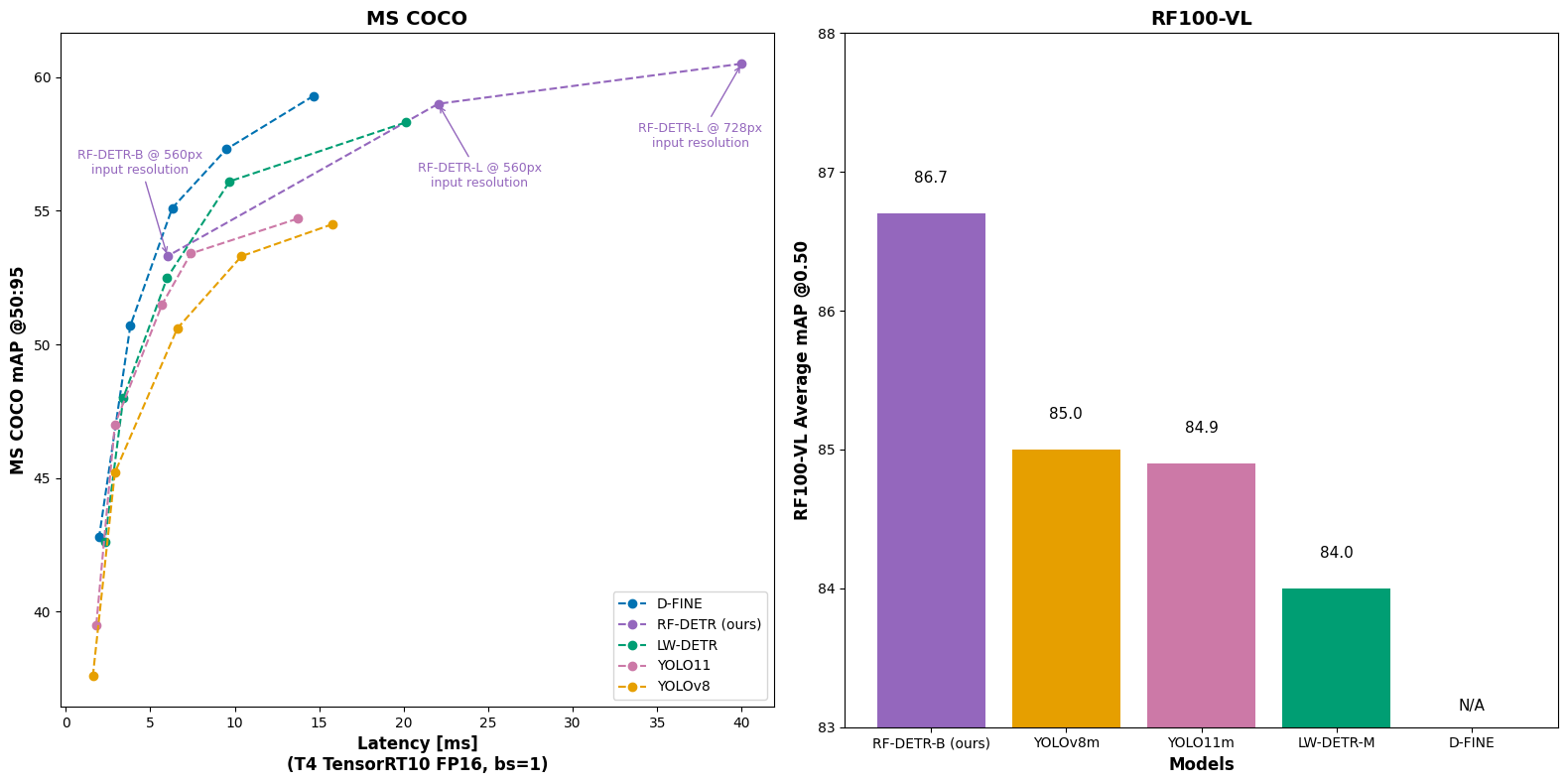

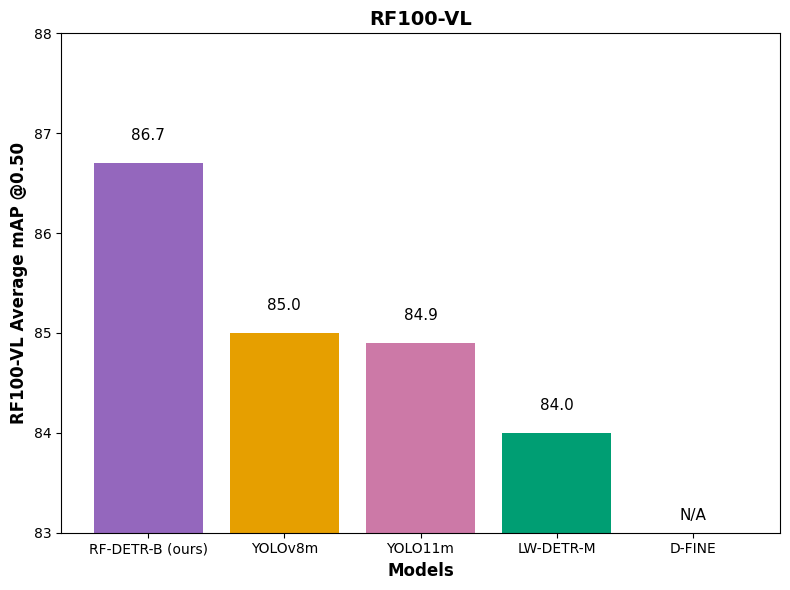

We evaluate performance in three categories: COCO mean average precision, RF100-VL mean average precision, and speed. RF100-VL is a selection of 100 datasets from the 500,000+ open source datasets on Roboflow Universe. It represents how computer vision is actually being applied to problems like aerial imagery, industrial contexts, nature, lab imaging, and more.

RF100 has been used by research labs at Apple, Microsoft, Baidu, and others. (This also means that by joining us to build open source computer vision on Roboflow, you are improving the entire field's ability to develop visual understanding.) A great model should do well on COCO compared to models of similar size, adapt very well to a domain problem, and be fast. RF100-VL is our new take on the mission of RF100, designed not only to benchmark object detectors in novel domains but also to allow direct comparison between object detectors and vision-language models using standardized evaluation criteria.

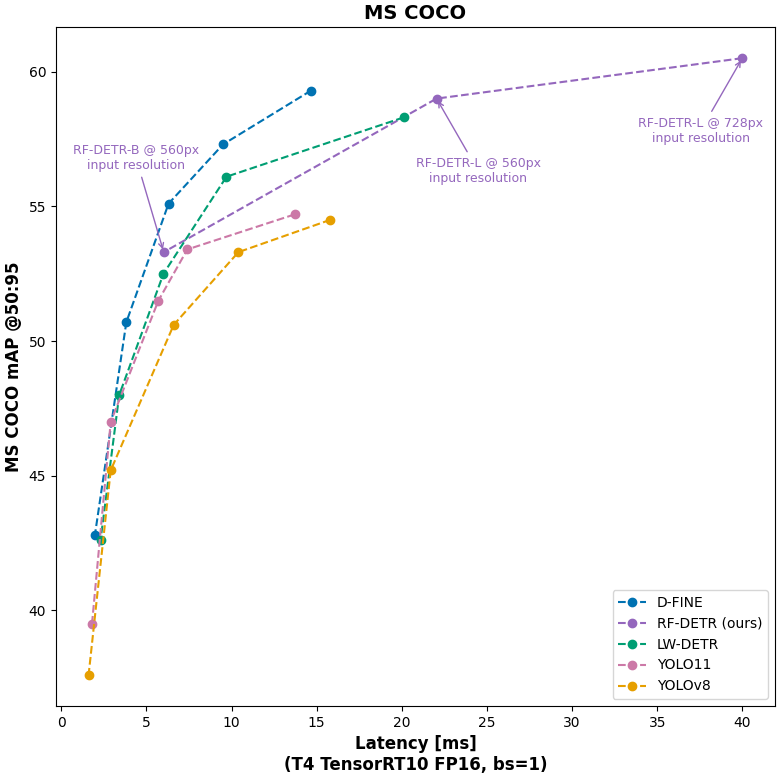

We evaluate RF-DETR against real-time, state-of-the-art (SOTA) COCO transformer models (D-FINE, LW-DETR) and SOTA YOLO CNN architectures (YOLO11, YOLOv8). Across these three categories, RF-DETR is the only model that ranks first or second in every one.

Of note, the speed shown is GPU latency on a T4 using TensorRT 10 at FP16 (ms/img), measured as what LW-DETR popularized as "Total Latency." Unlike transformer models, YOLO models run non-maximum suppression (NMS) on their raw predictions, filtering overlapping candidate bounding boxes to improve accuracy.
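To make the NMS step concrete, here is a minimal greedy NMS sketch in NumPy. It is illustrative only: boxes are assumed to be in (x1, y1, x2, y2) pixel format, the 0.5 IoU threshold is an assumed default, and `iou` and `nms` are hypothetical helpers. Production YOLO pipelines use optimized (often GPU) implementations, and this is not the tuned NMS used in the benchmark.

```python
import numpy as np


def iou(box: np.ndarray, boxes: np.ndarray) -> np.ndarray:
    """IoU between one box and an array of boxes, all in (x1, y1, x2, y2) format."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)


def nms(boxes: np.ndarray, scores: np.ndarray, iou_threshold: float = 0.5) -> list[int]:
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap it, repeat."""
    order = np.argsort(scores)[::-1]  # candidate indices sorted by descending score
    keep: list[int] = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        if order.size == 1:
            break
        overlaps = iou(boxes[best], boxes[order[1:]])
        # Only candidates overlapping the kept box less than the threshold survive.
        order = order[1:][overlaps < iou_threshold]
    return keep
```

Because every kept box is compared against the remaining candidates, the work grows with the number of candidate boxes, which is why NMS time depends on image content.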

However, NMS slightly reduces speed, because filtering bounding boxes requires computation (the amount varies with the number of objects in an image). Note that most YOLO benchmarks use NMS when reporting a model's accuracy, yet do not include NMS latency when reporting the model's corresponding speed. The benchmark above follows LW-DETR's philosophy of reporting the total time needed to receive a result, measured uniformly on the same machine across all models. Like LW-DETR, we present latency using a tuned NMS designed to optimize latency while having minimal impact on accuracy.
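A "total latency" measurement simply times the forward pass and the post-processing together. The harness below is a hypothetical sketch: `forward` and `postprocess` stand in for any model call and any post-processing step (NMS for a YOLO model, or a no-op for a DETR). On a GPU you would also need to synchronize the device before reading the clock, which this CPU-side sketch omits.

```python
import time
from statistics import mean
from typing import Any, Callable


def total_latency_ms(
    forward: Callable[[Any], Any],
    postprocess: Callable[[Any], Any],
    inputs: list[Any],
    warmup: int = 5,
) -> dict[str, float]:
    """Average per-image latency (ms) of the forward pass, post-processing, and both."""
    for x in inputs[:warmup]:  # warm-up runs are not timed
        postprocess(forward(x))
    fwd: list[float] = []
    post: list[float] = []
    for x in inputs:
        t0 = time.perf_counter()
        raw = forward(x)
        t1 = time.perf_counter()
        postprocess(raw)  # e.g. NMS; its cost is counted, not hidden
        t2 = time.perf_counter()
        fwd.append((t1 - t0) * 1000)
        post.append((t2 - t1) * 1000)
    return {
        "forward_ms": mean(fwd),
        "postprocess_ms": mean(post),
        "total_ms": mean(f + p for f, p in zip(fwd, post)),
    }
```

Reporting `forward_ms` alone corresponds to the common practice criticized above; `total_ms` is the number a user actually waits for.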

Second, D-FINE fine-tuning is unavailable, so its domain adaptability cannot be evaluated. Its authors indicate, "If your categories are very simple, it might lead to overfitting and suboptimal performance." There are also a number of open issues inhibiting fine-tuning. We have opened an issue aiming to benchmark D-FINE on RF100-VL.

On COCO specifically, RF-DETR is strictly Pareto optimal relative to YOLO models and competitive with, though not strictly more performant than, real-time transformer-based models. However, with RF-DETR-large at a 728-pixel input resolution, RF-DETR achieves the highest mAP (60.5) of any real-time model (Papers with Code defines "real-time" as 25+ FPS on a T4).
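Two ideas in this paragraph can be made precise in a few lines. A 25 FPS bar corresponds to a budget of 1000 / 25 = 40 ms per image, and a model is Pareto optimal on (latency, mAP) when no other model is at least as fast and at least as accurate while being strictly better on one of the two. The names below (`Result`, `is_real_time`, `pareto_front`) are hypothetical, and any inputs you feed them in testing are toy numbers, not benchmark results.

```python
from typing import NamedTuple


class Result(NamedTuple):
    name: str
    latency_ms: float  # lower is better
    coco_map: float    # higher is better


def is_real_time(latency_ms: float, min_fps: float = 25.0) -> bool:
    """25+ FPS means at most 1000 / 25 = 40 ms per image."""
    return latency_ms <= 1000.0 / min_fps


def pareto_front(results: list[Result]) -> list[str]:
    """Names of results that no other result dominates on (latency, mAP)."""
    front = []
    for r in results:
        dominated = any(
            o.latency_ms <= r.latency_ms
            and o.coco_map >= r.coco_map
            and (o.latency_ms < r.latency_ms or o.coco_map > r.coco_map)
            for o in results
        )
        if not dominated:
            front.append(r.name)
    return front
```

"Strictly Pareto optimal relative to YOLO models" then means that, in a comparison of RF-DETR sizes against YOLO sizes, no YOLO entry dominates an RF-DETR entry.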

Based on community feedback, we may release more model sizes in the RF-DETR family in the future.

RF-DETR Architecture Overview

Historically, CNN-based YOLO models have given the best accuracy for real-time object detectors. CNNs continue to be a core component of many of the best approaches in computer vision, e.g. D-FINE leverages both CNNs and transformers in its approach.

CNNs alone do not benefit as strongly from large-scale pre-training as transformer-based approaches do, and may be less accurate or converge more slowly. In many other subfields of machine learning, from image classification to LLMs, pre-training is increasingly essential to achieving strong results. Therefore, an object detector that benefits strongly from pre-training is likely to produce better object detection results. However, transformers have typically been quite large and slow, making them unfit for many computer vision problems.

Recently, with the introduction of RT-DETR in 2023, the DETR family of models has been shown to match YOLOs in latency once the runtime of NMS is included; NMS is a required post-processing step for YOLO models but not for DETRs. Moreover, there has been an immense amount of work on making DETRs converge quickly.

Recent advances in DETRs combine these two factors to create models that match the performance of YOLOs without pre-training and, with pre-training, significantly outperform them at a given latency. There is also reason to believe that stronger pre-training improves a model's ability to learn from small amounts of data, which is very important for tasks that may not have COCO-scale datasets. Hybrid approaches are also evolving: YOLO-S merges transformers and CNNs for real-time performance, and YOLOv12 takes advantage of sequence learning alongside transformers.

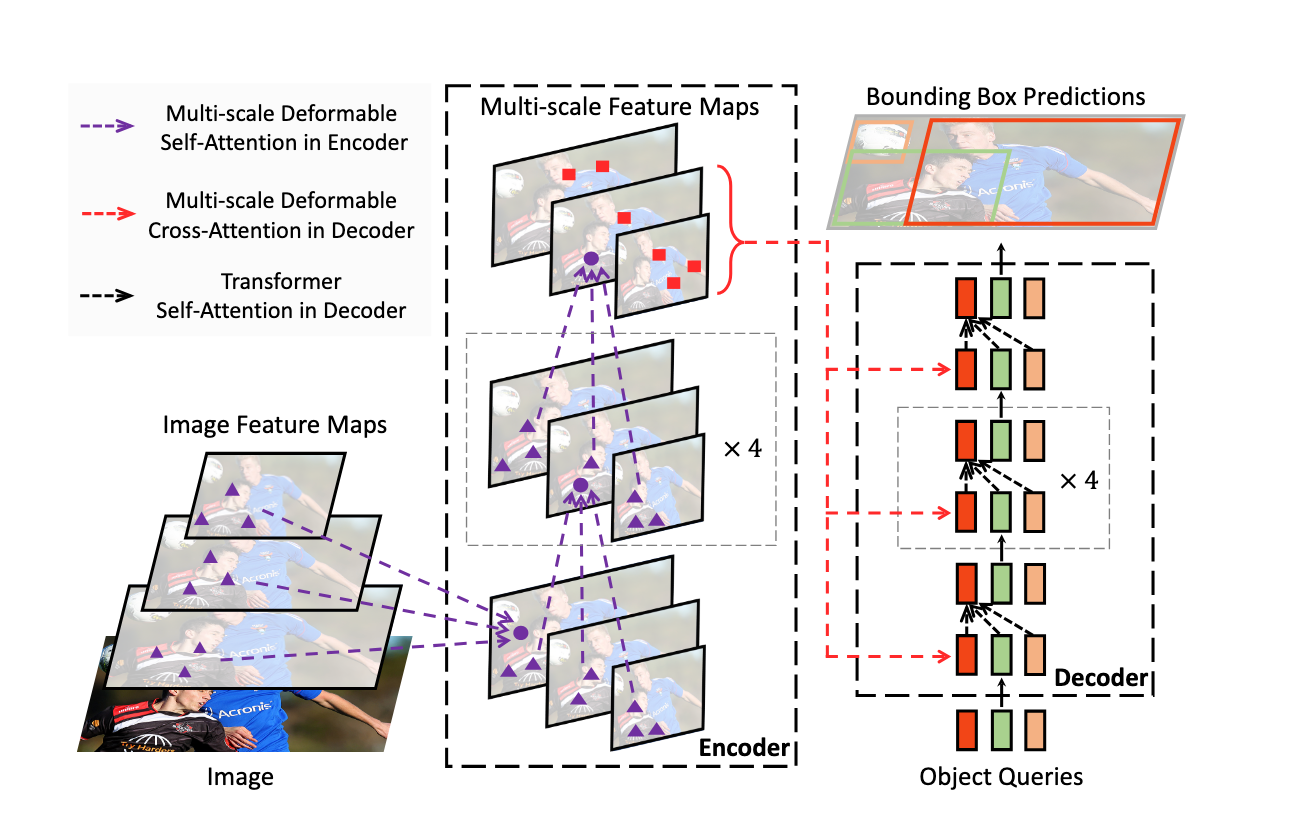

RF-DETR uses an architecture based on the foundations set out in the Deformable DETR paper. Whereas Deformable DETR uses a multi-scale deformable attention mechanism, we extract image feature maps from a single-scale backbone.
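As an illustration of what a single-scale feature map from a ViT backbone looks like, the sketch below reshapes a sequence of patch tokens back into a 2D grid. It assumes a DINOv2-style backbone with 14×14-pixel patches and a token array that already excludes the CLS (and any register) tokens; `tokens_to_feature_map` is a hypothetical helper, not part of RF-DETR's code.

```python
import numpy as np

PATCH = 14  # DINOv2 ViTs split the image into 14x14-pixel patches


def tokens_to_feature_map(tokens: np.ndarray, height: int, width: int,
                          patch: int = PATCH) -> np.ndarray:
    """Reshape ViT patch tokens of shape (N, C) into one (C, H/patch, W/patch) map."""
    gh, gw = height // patch, width // patch
    n, c = tokens.shape
    if n != gh * gw:
        raise ValueError(f"expected {gh * gw} patch tokens, got {n}")
    # Tokens are in row-major (raster) patch order, so a reshape restores the grid;
    # the transpose puts channels first, as detection heads usually expect.
    return tokens.reshape(gh, gw, c).transpose(2, 0, 1)
```

The result is a single feature map at one stride (the patch size), in contrast to the stack of maps at several strides that a multi-scale CNN backbone would hand to the decoder.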

We explored pushing the state of the art forward by combining the best of modern DETRs with the best of modern pre-training. Specifically, we created RF-DETR by combining LW-DETR with a pre-trained DINOv2 backbone. The knowledge stored in the pre-trained DINOv2 backbone gives RF-DETR an exceptional ability to adapt to novel domains.

We also train the model at multiple resolutions, which means we can choose to run the model at different resolutions at runtime to trade off accuracy and latency without retraining.
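Input resolution is a direct latency knob because a ViT backbone's token count grows with the square of the image side. The sketch below counts patch tokens for a few example square resolutions, assuming 14-pixel patches; `num_patch_tokens` is a hypothetical helper. The attention-pair count is only a rough proxy, since real cost depends on the backbone's attention pattern (for example, whether some layers use windowed attention) and on the rest of the detector.

```python
PATCH = 14  # assumed DINOv2 patch size in pixels


def num_patch_tokens(resolution: int, patch: int = PATCH) -> int:
    """Number of patch tokens for a square input `resolution` pixels on a side."""
    if resolution % patch:
        raise ValueError(f"resolution {resolution} is not a multiple of patch size {patch}")
    return (resolution // patch) ** 2


for res in (448, 560, 728):
    n = num_patch_tokens(res)
    # If attention were fully global, every token would attend to every other: n**2 pairs.
    print(f"{res}px -> {n} tokens, {n * n:,} attention pairs")
```

Going from 560 to 728 pixels raises the token count by about 1.7x, which is why a multi-resolution model lets you buy accuracy with latency at inference time.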

How to Use RF-DETR

We are releasing RF-DETR with a pre-trained checkpoint trained on the Microsoft COCO dataset. This checkpoint can be used with transfer learning to fine-tune an RF-DETR model with a custom dataset.

RF-DETR can be fine-tuned using the rfdetr Python package. We have prepared a dedicated Colab notebook that walks through how to train an RF-DETR model on a custom dataset.
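A minimal fine-tuning sketch, based on the `rfdetr` package README at the time of writing (check the current documentation before relying on it). It assumes a COCO-format dataset split into `train`, `valid`, and `test` folders, each containing its own `_annotations.coco.json`. The layout check (`missing_annotation_files`) and the `finetune` wrapper are hypothetical helpers added here; the `RFDETRBase().train(...)` call and its keyword arguments follow the README, and the hyperparameter values are examples rather than recommendations.

```python
from pathlib import Path

SPLITS = ("train", "valid", "test")
ANNOTATIONS = "_annotations.coco.json"


def missing_annotation_files(dataset_dir: str) -> list[str]:
    """Return the per-split annotation files absent from a COCO-format dataset folder."""
    root = Path(dataset_dir)
    return [f"{s}/{ANNOTATIONS}" for s in SPLITS if not (root / s / ANNOTATIONS).is_file()]


def finetune(dataset_dir: str, output_dir: str = "output") -> None:
    """Fine-tune RF-DETR-base from its COCO checkpoint (requires `pip install rfdetr`)."""
    missing = missing_annotation_files(dataset_dir)
    if missing:
        raise FileNotFoundError(f"dataset is missing: {', '.join(missing)}")
    from rfdetr import RFDETRBase  # imported lazily so the layout check works without rfdetr

    model = RFDETRBase()  # starts from the COCO-pretrained checkpoint
    model.train(
        dataset_dir=dataset_dir,
        epochs=10,
        batch_size=4,
        grad_accum_steps=4,  # effective batch size of 16
        lr=1e-4,
        output_dir=output_dir,
    )
```

Checking the folder layout first fails fast on a malformed export instead of partway into a training run.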

You can also train RF-DETR models on Roboflow from our optimized checkpoint, which we have found yields higher mAP scores. Read our Train and Deploy RF-DETR Models with Roboflow guide to learn how to start training RF-DETR models on our platform.

You can use RF-DETR models trained on Roboflow with Roboflow Inference, our open source inference server, and with Roboflow Workflows, our web-based tool for building vision applications that you can run at the edge. Learn how to use RF-DETR with Workflows.

More to Come

We're introducing RF-DETR because we see a clear opportunity to move the field forward while showcasing our methods so everyone else can improve upon this result too. Making the world programmable takes all of us.

At Roboflow, we pride ourselves on supporting a wide array of vision models – from large vision language models to small task-specific models to adapting closed source APIs. Even as we introduce this novel architecture, we will continue to support any and all models.

Ready to get started with RF-DETR? Check out our model fine-tuning guide which walks through, step-by-step, how to train your own RF-DETR model. You can also view the source code behind the model on GitHub.

As you build with RF-DETR, let us know what you make! Tag us with your creations on whatever platforms or communities you use.

RF-DETR vs. YOLO11

RF-DETR is primarily focused on object detection and segmentation. It is designed to be highly fine-tunable: thanks to its pre-trained DINOv2 backbone and transformer architecture, it often converges in fewer epochs than YOLO models on small, custom datasets and handles complex scenes with overlapping objects better than YOLO11. YOLO11 relies on convolutions, which apply local filters across the image; this is extremely fast and efficient but has traditionally struggled more with heavy occlusion.

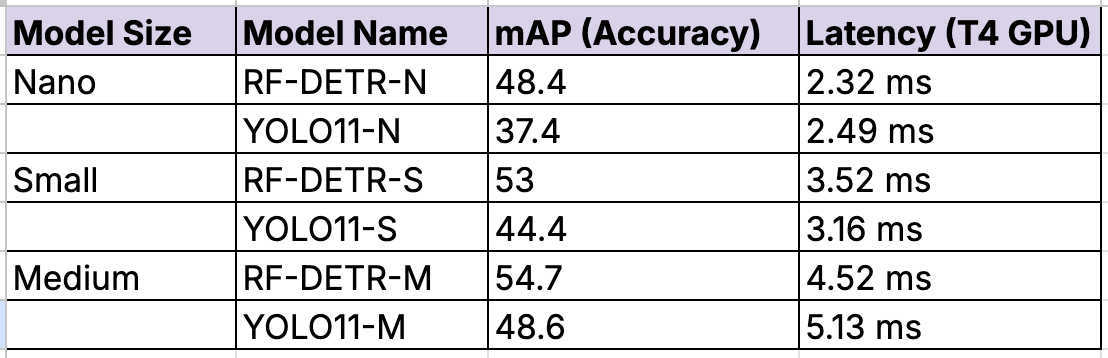

RF-DETR achieves higher accuracy (mAP) than YOLO11 for the same inference speed, particularly in the "Nano" and "Small" model sizes.

RF-DETR is licensed under Apache 2.0, so it is truly open source: you can use it commercially, modify it, and distribute it without releasing your source code or paying a fee.

YOLO11 is licensed under AGPL-3.0. If you use it in a product that you distribute, you may be required to open-source your code. For closed-source commercial use, you typically need to purchase an Enterprise License.

As a result, RF-DETR is the better choice when you need the highest possible accuracy, especially for small objects or crowded scenes; when you need a permissive license (Apache 2.0) for a commercial product without paying fees; or when you have limited data, since the transformer backbone often generalizes better from fewer training images.

Cite this Post

Use the following entry to cite this post in your research:

Peter Robicheaux, James Gallagher, Joseph Nelson, Isaac Robinson. (Mar 20, 2025). RF-DETR: A SOTA Real-Time Object Detection Model. Roboflow Blog: https://blog.roboflow.com/rf-detr/