This blog post is a guest post by James Nitsch, a mobile developer (Android) with WillowTree Apps living in Charlottesville, VA. He's passionate about do-it-yourself hardware projects, 3D printing, and machine learning & computer vision. He's happily employed but always loves collaborating and tackling innovative or unique problems. Feel free to reach out!

Problem: Grading cards is expensive and time-consuming.

When collecting memorabilia such as trading cards, it's often worth getting your cards graded, that is, evaluated for authenticity and quality. However, access to card grading services is limited! Verifying the authenticity of memorabilia or an autographed item rightly belongs in the hands of trained human professionals, but a community's reliance on these services also comes at a high cost.

These services can be slow and expensive. Professional grading can dramatically raise an item's value at auction, sometimes by 10x or more. In these cases, the grading fee, which often runs around $50 or more per card, can be justified.

But that raises the question: how can you tell which cards are worth getting graded if you're an amateur collector? eBay listings are often flooded with sellers who happen to be sitting on very valuable cards but don't know exactly what they're selling. This can lead to heavily over- or underpriced listings, as well as misleading titles meant to inflate the price, such as speculation about what a card might be worth or what grade it might receive.

Additionally, collectibles can surge in price over time. One example is the rapid rise in vintage Pokémon card prices in 2020. Unfortunately, professional grading services can take months to return a graded card, and estimated turnaround times for certain providers exceeded a year during COVID-19. If you're trying to capitalize on a surge in pricing, that simply won't do.

How can computer vision help?

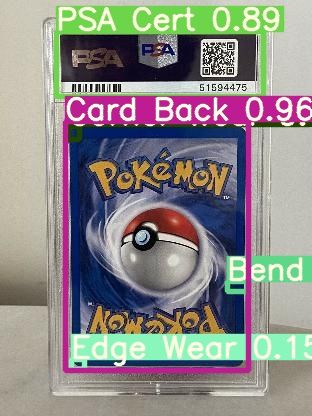

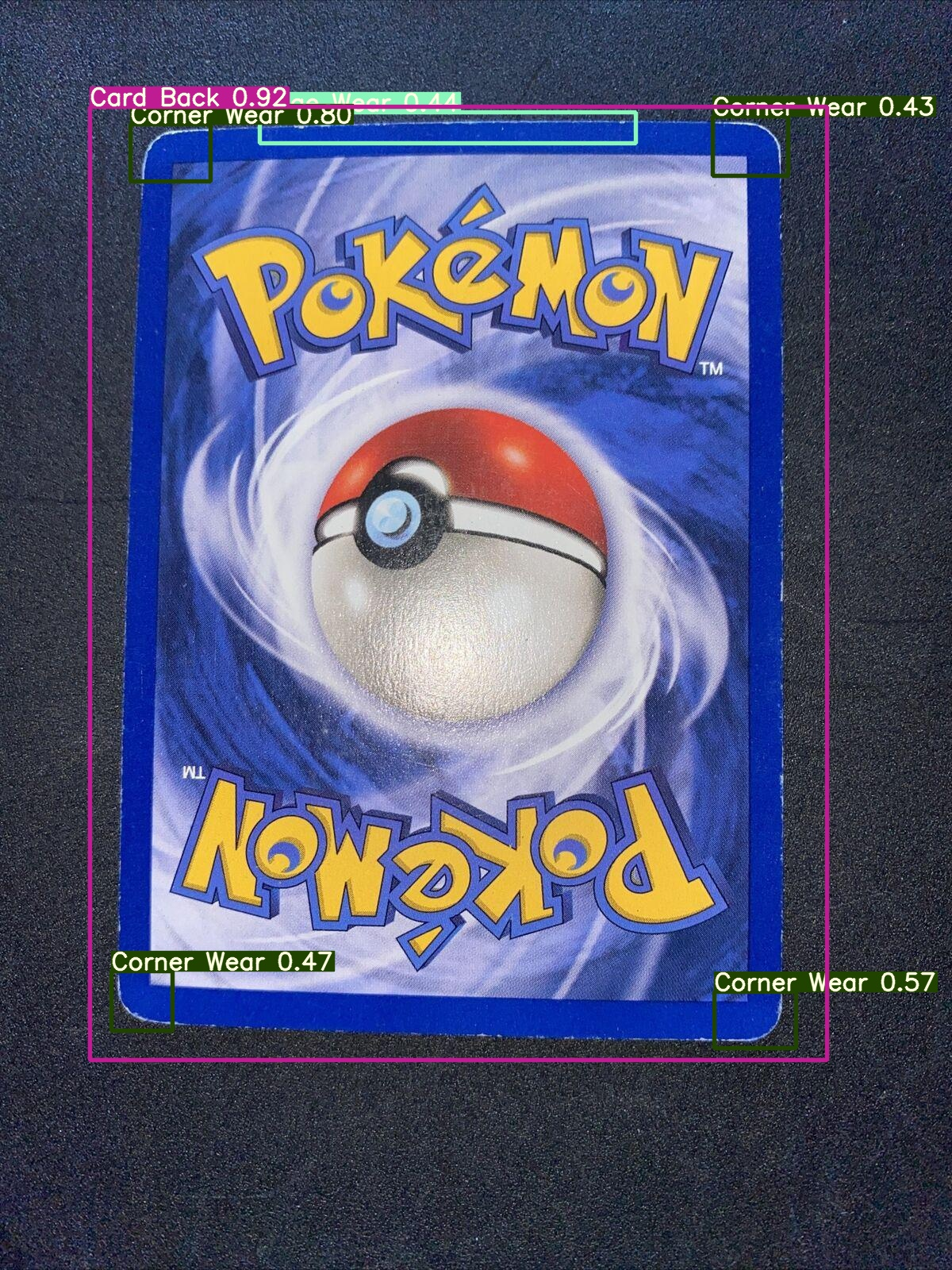

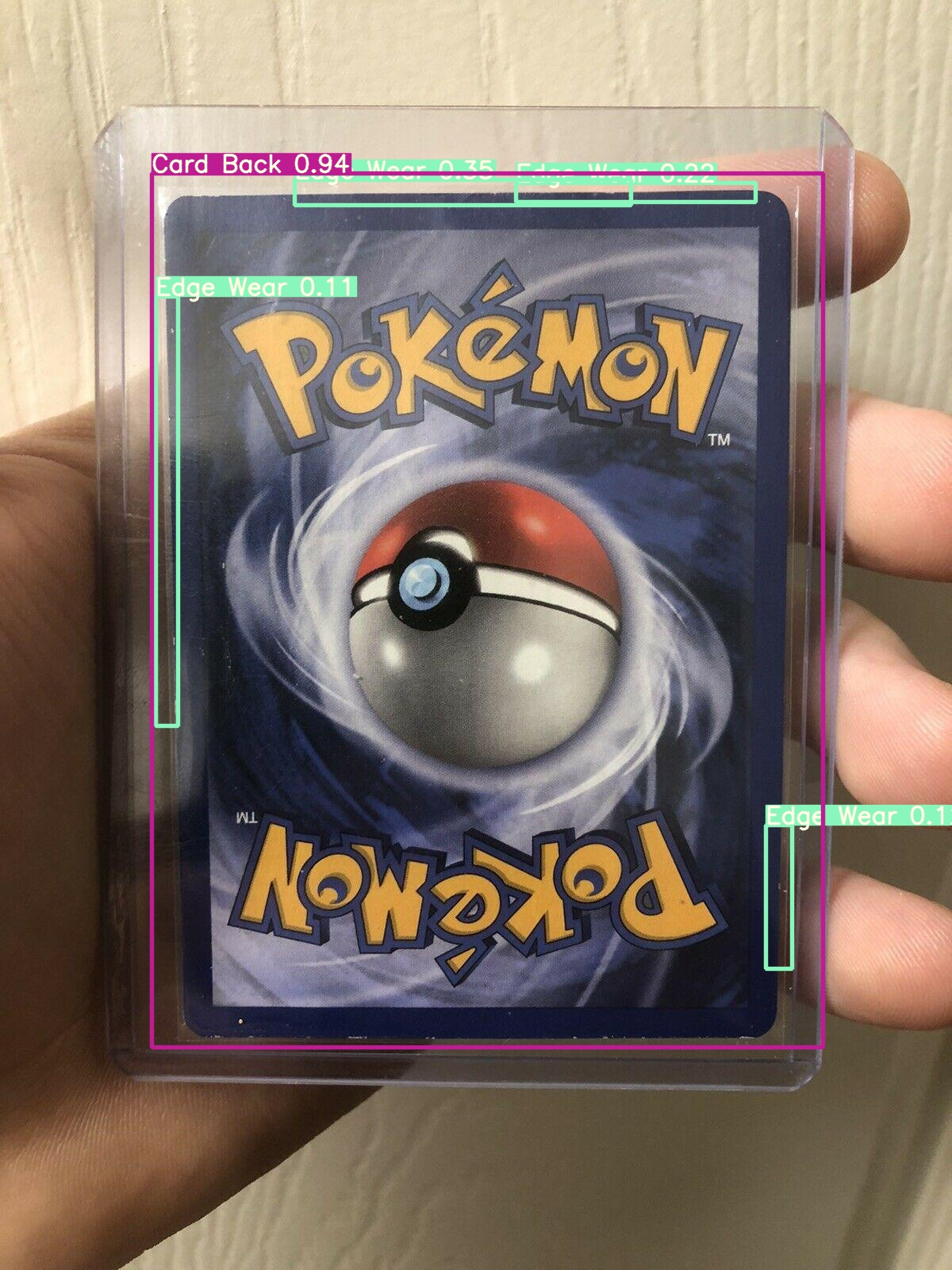

Computer vision can help with some of the more common aspects of grading collectible cards, such as edge wear, corner wear, centering, and damage to the card's surface. That's what I set out to do!

How did I do it?

I built a proof-of-concept model on about 150 images of Pokémon cards in varying cosmetic conditions, both graded and ungraded. Each image shows a Pokémon card, roughly centered and lying flat in the frame.

I wrote a few web scrapers from scratch in Python, using the BeautifulSoup library, to pull data from eBay. For each listing, I stored its listing price, sold price, date, and, of course, every associated image. Initially, I labeled the images with LabelImg, but I have since moved to Roboflow's built-in annotator.
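For readers curious what "storing mappings" from a scraped listing can look like, here is a minimal sketch. It is not the author's scraper. It swaps BeautifulSoup for Python's built-in `html.parser` so it runs without dependencies. The CSS class names (`listing-title`, `sold-price`) and the `Listing`/`parse_listing` names are hypothetical placeholders, not eBay's real markup.

```python
import re
from dataclasses import dataclass, field
from html.parser import HTMLParser
from typing import List, Optional


@dataclass
class Listing:
    """One scraped listing: title, sold price, and every image URL."""
    title: str = ""
    sold_price: Optional[float] = None
    image_urls: List[str] = field(default_factory=list)


def parse_price(text: str) -> Optional[float]:
    """Pull the first number out of a price string such as 'US $1,250.00'."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", text)
    return float(match.group().replace(",", "")) if match else None


class ListingParser(HTMLParser):
    """Collects listing fields; the CSS class names are hypothetical placeholders."""

    def __init__(self) -> None:
        super().__init__()
        self.listing = Listing()
        self._capture: Optional[str] = None  # field the next text node belongs to

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        classes = (attrs.get("class") or "").split()
        if tag == "img" and attrs.get("src"):
            self.listing.image_urls.append(attrs["src"])
        elif "listing-title" in classes:
            self._capture = "title"
        elif "sold-price" in classes:
            self._capture = "sold_price"

    def handle_data(self, data):
        text = data.strip()
        if not text or self._capture is None:
            return
        if self._capture == "title":
            self.listing.title = text
        else:
            self.listing.sold_price = parse_price(text)
        self._capture = None


def parse_listing(html: str) -> Listing:
    """Feed one listing page's HTML through the parser and return the record."""
    parser = ListingParser()
    parser.feed(html)
    parser.close()
    return parser.listing
```

With BeautifulSoup, the same lookups would be CSS selector queries. Either way, keeping each listing as one record makes it easy to join prices and dates back to the downloaded images later.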

Roboflow's Dataset Health Check helped me understand my data much more than I expected! It helped me keep each of my core classes well represented and in roughly equal amounts. It also explained a problem I was running into: my model had a much harder time detecting worn edges on the top left of a card. Roboflow's heatmap utility showed me that, of all my examples of worn or creased edges, the top left was extremely underrepresented compared to the other three.
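The two checks described above, class balance and where labeled boxes fall in the frame, are simple to approximate yourself. This is a minimal sketch, not Roboflow's implementation. It assumes each annotation is a dict with a class name and a box center normalized to [0, 1]. The class names (`worn_edge`, `corner_wear`), the 2x2 grid, and the 0.75 ratio are all illustrative assumptions.

```python
from collections import Counter


def class_counts(annotations):
    """Number of labeled boxes per class, to spot class imbalance."""
    return Counter(a["class"] for a in annotations)


def location_heatmap(annotations, grid=2):
    """Count box centers per grid cell; centers are normalized to [0, 1].

    Row 0 is the top of the image, because image y-coordinates grow downward.
    """
    cells = Counter()
    for a in annotations:
        col = min(int(a["x_center"] * grid), grid - 1)  # clamp 1.0 into the last cell
        row = min(int(a["y_center"] * grid), grid - 1)
        cells[(row, col)] += 1
    return cells


def underrepresented_cells(heatmap, grid=2, ratio=0.75):
    """Cells whose count falls below `ratio` times the mean count per cell."""
    mean = sum(heatmap.values()) / (grid * grid)
    return [
        (row, col)
        for row in range(grid)
        for col in range(grid)
        if heatmap[(row, col)] < ratio * mean
    ]
```

Filtering `annotations` to a single class before calling `location_heatmap` shows where that kind of damage is missing. A flagged `(0, 0)` cell corresponds to the top-left case described above.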

Most of the preprocessing was done manually with Python scripts. I was comfortable filtering out any images that did not match the criteria I may later require for user-uploaded images. One such requirement is that the card must be completely flat and must not appear skewed in the frame. Using OpenCV edge detection around the card's borders, it was easy to calculate the slope of each border line and confirm that the card was flat and unskewed in the image.
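The slope check can be sketched as a small pure-Python function. It assumes the border segments have already been detected, for example with `cv2.Canny` followed by `cv2.HoughLinesP` (the latter returns an array of shape `(N, 1, 4)`, so `[tuple(l[0]) for l in lines]` gives the tuples used here). The 2-degree tolerance and the function names are assumptions for illustration, not values from the original project.

```python
import math


def segment_angle(x1, y1, x2, y2):
    """Angle of a line segment in degrees, folded into [0, 180)."""
    return math.degrees(math.atan2(y2 - y1, x2 - x1)) % 180.0


def deviation_from_axis(angle):
    """Degrees between `angle` and the nearest of horizontal (0) or vertical (90)."""
    r = angle % 90.0
    return min(r, 90.0 - r)


def is_unskewed(segments, tolerance_deg=2.0):
    """True if every detected border segment is nearly horizontal or vertical.

    `segments` is a list of (x1, y1, x2, y2) tuples. The default tolerance is
    an assumed value, not one taken from the original project.
    """
    if not segments:
        return False  # no borders found: cannot confirm the card is flat
    return all(
        deviation_from_axis(segment_angle(*seg)) <= tolerance_deg for seg in segments
    )
```

Comparing angles rather than raw slopes avoids dividing by zero on vertical edges. It also treats a border tilted slightly above or below horizontal the same way.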

To account for the various lighting conditions that might be present when a user takes a photo, I used Roboflow's image augmentation techniques. I also applied blur, exposure, and saturation augmentations to account for the different cameras and smartphones that may supply images in the final product.
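Roboflow applies these augmentations inside its own pipeline. The NumPy sketch below only shows what exposure, saturation, and blur changes do to an image, and is not Roboflow's implementation. It assumes images are float RGB arrays in [0, 1]. The random parameter ranges in `random_augment` are illustrative guesses.

```python
import numpy as np


def adjust_exposure(img, stops):
    """Scale brightness by 2**stops (photographic stops); img is float RGB in [0, 1]."""
    return np.clip(img * (2.0 ** stops), 0.0, 1.0)


def adjust_saturation(img, factor):
    """Blend toward per-pixel gray: 0 -> grayscale, 1 -> unchanged, >1 -> boosted."""
    gray = (img @ np.array([0.299, 0.587, 0.114]))[..., np.newaxis]  # BT.601 luma
    return np.clip(gray + factor * (img - gray), 0.0, 1.0)


def box_blur(img, k=3):
    """Replace each pixel with the mean of its k x k neighborhood (k odd, edges replicated)."""
    pad = k // 2
    padded = np.pad(img, ((pad, pad), (pad, pad), (0, 0)), mode="edge")
    h, w = img.shape[:2]
    out = np.zeros(img.shape, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)


def random_augment(img, rng):
    """Apply one random exposure and saturation change, plus blur half the time."""
    img = adjust_exposure(img, rng.uniform(-1.0, 1.0))
    img = adjust_saturation(img, rng.uniform(0.5, 1.5))
    if rng.random() < 0.5:
        img = box_blur(img, k=3)
    return img
```

Calling `random_augment(img, np.random.default_rng(seed))` several times per training image produces variants that mimic darker, washed-out, or slightly soft phone photos.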

I first tried bottlenecking VGG-16 (using the pretrained network as a frozen feature extractor), and then trained YOLOv5 on my dataset using Roboflow's own tutorial and Jupyter notebook. YOLOv5 ended up being the better long-term approach for my project.
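YOLOv5 expects one text file per image, with one line per box in the form `class x_center y_center width height`, all normalized to [0, 1]. Roboflow can export a dataset directly in this format. The sketch below only illustrates the conversion from Pascal VOC XML, one of the formats LabelImg can save. The `class_ids` mapping and the class name `worn_edge` are hypothetical.

```python
import xml.etree.ElementTree as ET


def voc_to_yolo(box, img_w, img_h, class_id):
    """Convert a pixel box (xmin, ymin, xmax, ymax) into one YOLO label line."""
    xmin, ymin, xmax, ymax = box
    x_c = (xmin + xmax) / 2 / img_w  # box center, as a fraction of image width
    y_c = (ymin + ymax) / 2 / img_h
    w = (xmax - xmin) / img_w        # box size, as a fraction of image size
    h = (ymax - ymin) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


def voc_xml_to_yolo_lines(xml_text, class_ids):
    """Turn one Pascal VOC annotation file into YOLO label lines.

    `class_ids` maps label names to integer ids, e.g. {"worn_edge": 0} (hypothetical).
    """
    root = ET.fromstring(xml_text)
    img_w = float(root.findtext("size/width"))
    img_h = float(root.findtext("size/height"))
    lines = []
    for obj in root.iter("object"):
        bndbox = obj.find("bndbox")
        box = tuple(float(bndbox.findtext(t)) for t in ("xmin", "ymin", "xmax", "ymax"))
        lines.append(voc_to_yolo(box, img_w, img_h, class_ids[obj.findtext("name")]))
    return lines
```

For example, a 200x200-pixel box at (100, 200) in a 1000x800 image becomes `2 0.200000 0.375000 0.200000 0.250000` for class id 2.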

My model is still in development. I eventually intend to host it behind an API that lets users upload photos of their individual cards and receive an estimate of each card's quality, along with its average price over the last 15, 50, and 200 days.
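The planned price summary could be computed from the scraped sales along these lines. This is a sketch under stated assumptions, not the planned API. It assumes sales are stored as `(sale_date, price)` pairs, and that "the last N days" means today plus the N-1 days before it. The function name `trailing_averages` is hypothetical.

```python
from datetime import date, timedelta
from statistics import mean


def trailing_averages(sales, today, windows=(15, 50, 200)):
    """Average sold price over each trailing window of days, ending at `today`.

    `sales` is an iterable of (sale_date, price) pairs. A window with no
    sales maps to None instead of raising.
    """
    sales = list(sales)  # allow generators, since we scan once per window
    result = {}
    for days in windows:
        cutoff = today - timedelta(days=days)
        prices = [price for sold_on, price in sales if cutoff < sold_on <= today]
        result[days] = round(mean(prices), 2) if prices else None
    return result


if __name__ == "__main__":
    sales = [(date(2021, 2, 1), 100.0), (date(2021, 1, 20), 80.0), (date(2020, 9, 1), 40.0)]
    print(trailing_averages(sales, today=date(2021, 2, 8)))
    # {15: 100.0, 50: 90.0, 200: 73.33}
```

Returning `None` for empty windows lets an API response distinguish "no recent sales" from a price of zero.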

Roboflow provided me with all the tools I needed (and some I didn’t expect to need) to bring my idea to life quickly.

Cite this Post

Use the following entry to cite this post in your research:

Matt Brems. (Feb 8, 2021). Using Computer Vision to Make Card Grading Faster and Cheaper. Roboflow Blog: https://blog.roboflow.com/using-computer-vision-to-make-card-grading-faster-and-cheaper/