When you work on computer vision projects, the term “bounding box” comes up often, especially when you label data for object detection models and when you use those models.

In this guide, we are going to define bounding boxes and look at the two situations in which bounding boxes are regularly used in computer vision.

Without further ado, let’s get started!

What is a Bounding Box?

A bounding box is a rectangle drawn around a region of interest in an image. This region should correspond to a particular object that you want to identify. Bounding boxes are a visual representation of coordinates on an image.

There are two scenarios in which bounding boxes will come up in vision projects:

- During labeling, and;

- During inference, when your model is being used.

Labeling Bounding Boxes

During the labeling process for object detection projects, you need to draw bounding boxes around objects of interest. These boxes are then used alongside the input image as data for training a vision model.

To draw bounding boxes for data labeling, you should use a labeling tool. All object detection labeling tools support drawing boxes, and many include utilities that help you draw those boxes faster. Roboflow offers a free labeling tool for you to use.

Here is an example showing the labeling process, where boxes are drawn around objects of interest:

Bounding boxes should be drawn as tightly around objects as possible, so that each box captures as much detail about an object as possible without including too much background noise. That said, bounding boxes are rectangles, and many objects that detection models aim to identify are not rectangular. Thus, it is inevitable that some background will appear in a region of interest.
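To make the idea of a tight box concrete, here is a minimal sketch that computes the smallest axis-aligned box around an object outline and compares the box area with the object area. The function names and the outline coordinates are hypothetical, chosen only for illustration; labeling tools do not necessarily work this way.

```python
def tight_bounding_box(points):
    """Return the smallest axis-aligned box (x0, y0, x1, y1) containing all points."""
    if not points:
        raise ValueError("at least one point is required")
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (min(xs), min(ys), max(xs), max(ys))


def polygon_area(points):
    """Area of a simple (non-self-intersecting) polygon via the shoelace formula."""
    total = 0
    for i, (xa, ya) in enumerate(points):
        xb, yb = points[(i + 1) % len(points)]
        total += xa * yb - xb * ya
    return abs(total) / 2


# Hypothetical outline of a non-rectangular object, in pixel coordinates.
outline = [(40, 10), (70, 35), (55, 80), (20, 60), (15, 30)]

box = tight_bounding_box(outline)
x0, y0, x1, y1 = box
box_area = (x1 - x0) * (y1 - y0)

print(box)                    # (15, 10, 70, 80)
print(polygon_area(outline))  # 2362.5
print(box_area)               # 3850
```

Even with the tightest possible box, the object in this example covers only part of the box area. The rest is background.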

Bounding boxes can overlap. For example, if you are detecting a shipping container and its ID, you may draw one box for the ID and another for the wider container. The trained model will learn to identify both objects. You can then run calculations on the boxes, such as computing the intersection over union (IoU) of two bounding boxes.
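Intersection over union (IoU) is the area where two boxes overlap divided by the total area they cover together. Here is a minimal sketch, assuming boxes are given as x0, y0, x1, y1 pixel coordinates. The function names and the example coordinates are illustrative and are not taken from any particular library.

```python
def box_area(box):
    """Area of an (x0, y0, x1, y1) box; empty or inverted boxes count as 0."""
    x0, y0, x1, y1 = box
    return max(0, x1 - x0) * max(0, y1 - y0)


def intersection_over_union(a, b):
    """Return the IoU of two (x0, y0, x1, y1) boxes as a value between 0 and 1."""
    # The overlap region is bounded by the innermost edges of the two boxes.
    overlap = (max(a[0], b[0]), max(a[1], b[1]), min(a[2], b[2]), min(a[3], b[3]))
    intersection = box_area(overlap)
    union = box_area(a) + box_area(b) - intersection
    return intersection / union if union > 0 else 0.0


# Hypothetical boxes: a container and the ID painted on it.
container = (50, 40, 450, 240)
container_id = (300, 60, 420, 100)

print(intersection_over_union(container, container_id))  # 0.06
```

The ID box sits entirely inside the container box, so the intersection is the whole ID box, and the IoU is small because the container is much larger.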

We have written a full guide on how to label data with tips for the most effective ways to draw bounding boxes.

Working with Bounding Boxes

Computer vision models like YOLOv10 and RT-DETR return the coordinates that correspond to the location of an object that the model has been trained to identify. These coordinates can come in various formats. The most popular formats are:

- x0, y0, x1, y1 (also known as xyxy), and;

- x, y, width, height (also known as xywh).
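Converting between these formats is simple arithmetic. The sketch below assumes that x and y in xywh refer to the top-left corner of the box. Some formats use the box center instead, so check the convention your model or dataset uses. The function names are illustrative.

```python
def xyxy_to_xywh(box):
    """Convert (x0, y0, x1, y1) to (x, y, width, height) with a top-left origin."""
    x0, y0, x1, y1 = box
    return (x0, y0, x1 - x0, y1 - y0)


def xywh_to_xyxy(box):
    """Convert (x, y, width, height) with a top-left origin to (x0, y0, x1, y1)."""
    x, y, width, height = box
    return (x, y, x + width, y + height)


def xyxy_to_center_xywh(box):
    """Convert (x0, y0, x1, y1) to (center_x, center_y, width, height)."""
    x0, y0, x1, y1 = box
    width, height = x1 - x0, y1 - y0
    return (x0 + width / 2, y0 + height / 2, width, height)


box = (50, 40, 450, 240)  # hypothetical detection in pixel coordinates

print(xyxy_to_xywh(box))                # (50, 40, 400, 200)
print(xywh_to_xyxy(xyxy_to_xywh(box)))  # (50, 40, 450, 240)
print(xyxy_to_center_xywh(box))         # (250.0, 140.0, 400, 200)
```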

If you decide to plot the coordinates on an image – for example, to visualize how your model is doing – this process is referred to as drawing bounding boxes.
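To show what drawing a box involves, here is a minimal, library-free sketch that marks the outline of a box on a small grid of pixels. It assumes the image is a list of rows and that the x0, y0, x1, y1 coordinates are inclusive pixel indices. The `draw_box` helper is hypothetical. In practice, a computer vision library would do this for you on a real image.

```python
def draw_box(image, box, value=1):
    """Draw the outline of an (x0, y0, x1, y1) box onto a 2D grid, in place."""
    height, width = len(image), len(image[0])
    x0, y0, x1, y1 = box

    # Clamp the box to the image so partially visible boxes can still be drawn.
    x0, x1 = max(0, x0), min(width - 1, x1)
    y0, y1 = max(0, y0), min(height - 1, y1)
    if x0 > x1 or y0 > y1:
        return image  # the box lies entirely outside the image

    for x in range(x0, x1 + 1):  # top and bottom edges
        image[y0][x] = value
        image[y1][x] = value
    for y in range(y0, y1 + 1):  # left and right edges
        image[y][x0] = value
        image[y][x1] = value
    return image


canvas = [[0] * 8 for _ in range(5)]  # blank 8x5 "image"
draw_box(canvas, (1, 1, 6, 3))

for row in canvas:
    print("".join("#" if pixel else "." for pixel in row))
# ........
# .######.
# .#....#.
# .######.
# ........
```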

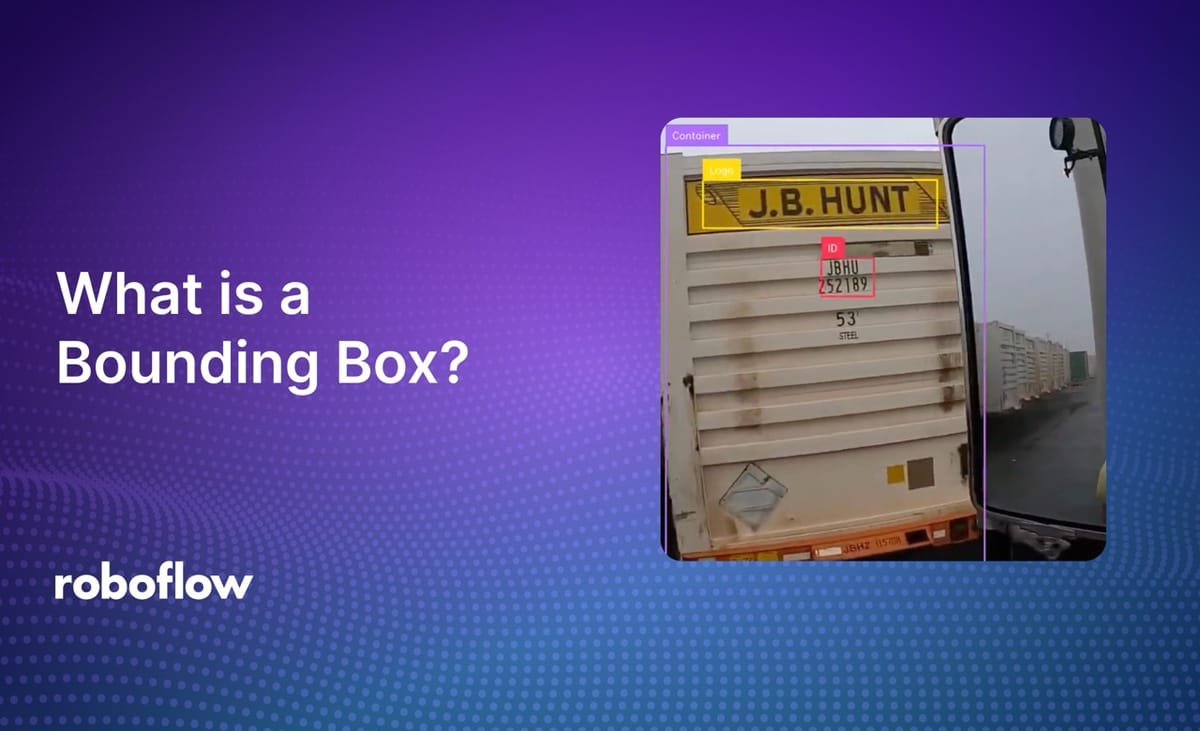

Here is an example of predictions from a yard management object detection model:

Bounding boxes are visualized that correspond to detections for Container, ID, and Logo returned by our yard management object detection model.

The image above was visualized using supervision, a Python computer vision library with utilities for working with computer vision models.

To learn more about drawing bounding boxes with supervision, read our guide on how to draw a bounding box. To learn more about using supervision for building vision application logic, refer to the supervision documentation.

Conclusion

The term “bounding box” comes up in two contexts in computer vision. First, bounding boxes are drawn around objects of interest in an annotation tool, and these annotations are then used to train a model. Second, predictions from vision models are drawn as bounding boxes on an input image, allowing you to visualize the results from your model.

To learn more about training computer vision models, refer to the Roboflow Getting Started guide.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Oct 9, 2024). What Is A Bounding Box In Computer Vision?. Roboflow Blog: https://blog.roboflow.com/what-is-a-bounding-box/