Earlier this year, OpenAI announced a powerful art-creation model called DALL-E. Their model hasn't yet been released but it has captured the imagination of a generation of hackers, artists, and AI-enthusiasts who have been experimenting with using the ideas behind it to replicate the results on their own.

The magic behind how these attempts work is combining another OpenAI model called CLIP (which we have written about extensively before) with generative adversarial networks (which have been around for a few years) to "steer" them into producing a desired output.

The intuition is that these GANs have actually learned a lot more from the images they were trained to mimic than than we previously knew; the key is finding the information we're looking for deep in their latent space.

Subscribe to our YouTube for more content like this!

A Human-Filled AI Sandwich

We previously built a project called paint.wtf which used CLIP as the scoring algorithm behind a Pictionary-esque game (whose prompts were generated by yet another model called GPT). We affectionately described this project as an "AI Sandwich" because the task and evaluation are both controlled by machine learning models and the "work" of drawing the pictures is done by humans.

Over 100,000 people have played the game thus far so we have a good idea of how humans perform the drawing task. We were curious what would happen if we replaced the "human" in the middle of the sandwich with one of these new steered-GAN generative models to produce the images instead.

Open Questions

Intuitively, you would expect the steered-GAN to "score" much higher because its output is being steered by precisely the same model (CLIP) that judges the paint.wtf game. We wondered

- How closely would the generative model (CLIP+VQGAN in our case) match the human drawings at the top of the leaderboard?

- Which prompts would produce results closest to the human output?

- Would the GAN be able to be steered towards a higher score from the CLIP judge than the human drawings?

- Do the highest scoring CLIP results qualitatively match the "best" drawings (as judged by me, a real-life human)?

- Is it better to start by generating a high resolution image or does it work better to grow the GAN by starting at, for example, 32x32 then using that output as an input for a 64x64 run, then 128x128, etc.

Methodology

I used a Google Colab notebook that makes it really easy to experiment with CLIP+VQGAN in a visual way. You just update the tunable parameters in the UI, hit "run", and away it goes generating images and progressively steering the outputs towards your target prompts.

I experimented with our Raccoon Driving a Tractor prompt from paint.wtf.

Baseline

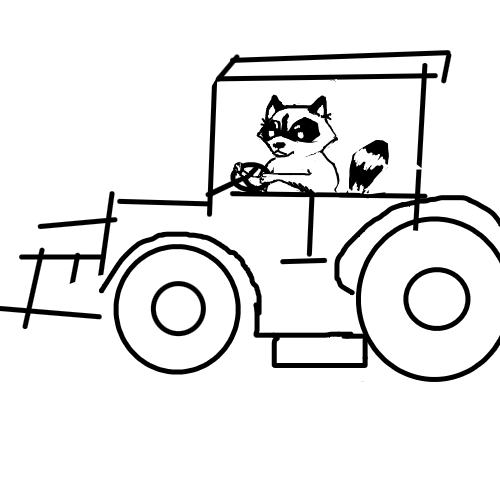

I first browsed the top paint.wtf submissions for this prompt to get a feel for what the 3000+ human artists discovered that CLIP responded best to.

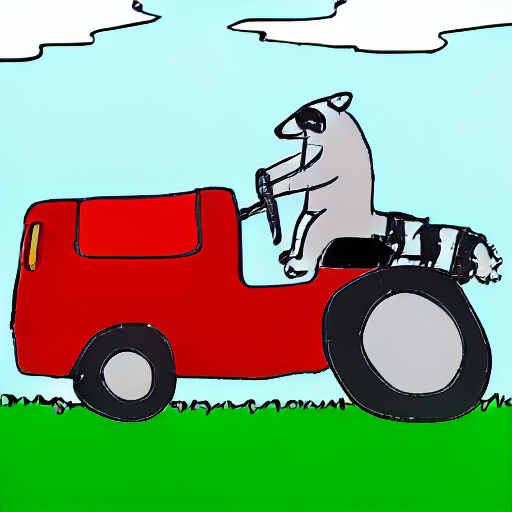

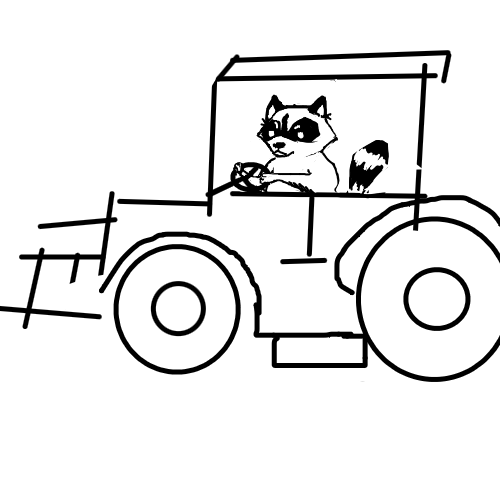

The top human submissions for this prompt.

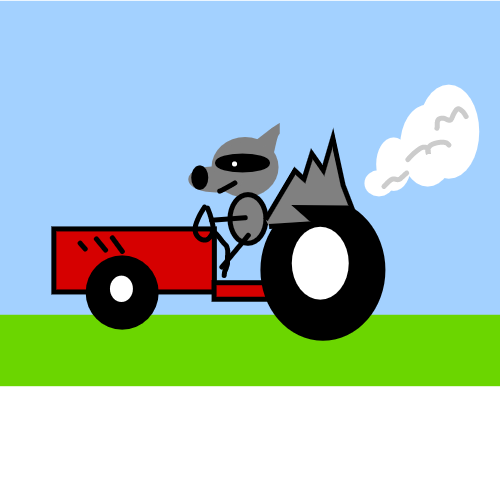

I then ran the exact prompt the above drawings had been scored for through CLIP+VQGAN using the wikiart and imagenet base models for 1500 iterations (about 22 minutes) each: illustration of a raccoon driving a tractor.

That produced the following images:

Those were pretty good but not quite the style I was going for so I tried to guide the style next. Any variation on MS Paint gave me "paintbrush" looking strokes, not the simple style I was hoping for. I also tried variations on drawing, sketch, cartoon, stick figure, vector, etc without much luck.

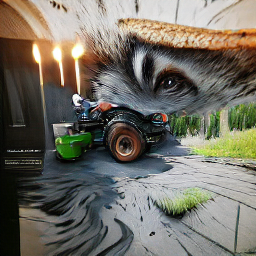

I never did get it to mimic the style of the human illustrators but the prompt A portrait mode close-up of Rocky the Raccoon, the cute and lovable main character from Disney Pixar's upcoming film set a farm in the Cars universe, driving a big John Deere tractor. with the imagenet base turned out pretty neat.

Experiments

The Unreal Engine Trick

On twitter, there are a few different "hacks" people have found to make your images look more impressive. The most famous is to append unreal engine or rendered with unreal engine to the end of your prompt. Some other examples are trending on artstation and top of /r/art.

I tried that with mixed success. It did give them an interesting sense of vibrance and dynamism but at the expense of closely matching the prompt (and added artifacts like weird walls and text).

Over-indexing on the "non-hack" part of the prompt did seem to do better. The following is raccoon | driving | tractor | raccoon driving a tractor | unreal engine.

Progressively Growing Resolution

In the early days of GANs, only tiny resolution ones seemed to work well. There was a breakthrough when someone figured out you could "get them started" with a tiny image then progressively grow them from that seed larger and larger. I thought it might also be faster to generate low-resolution images and then scale them up to let the GAN add detail.

The speed does improve as size decreases (with diminishing returns the smaller you go):

- 1024x1024: out of memory

- 512x512: 1.1 iterations/s

- 256x256: 1.9 iterations/s

- 128x128: 2.8 iterations/s

- 64x64: 3.0 iterations/s

Unfortunately, the quality of a generation starting from a low-resolution base and scaling up to a higher resolution was much worse than starting the high resolution generation from scratch.

Progressively Shrinking Resolution

I figured, "if growing makes it worse, might shrinking make it better?" The thought process was that maybe the details learned at high resolution would carry on at lower resolutions but would be hard to generate at low res in the first place.

This turned out not to be the case. It seems growing and shrinking the size degrades the output. At least using the naive strategy of taking the output image and using it as the input for the different sized models. It's possible that if you initialized the model from a checkpoint derived from the weights of the bigger or smaller model it may work better.

Starting from a Human Drawing

I also experimented with starting from one of the top drawings on paint.wtf and letting the GAN steer from there. This worked Ok, but it kind of lost the thread.

Verbose Prompts

I think what was happening was that while trying to optimize towards the global absolute it was discarding much of the additional context from the original. Keeping it on track by using a much more descriptive prompt helped quite a bit.

This is cartoon raccoon driving a red tractor with big black tires in front of a blue sky and white fluffy clouds | rendered in unreal engine:

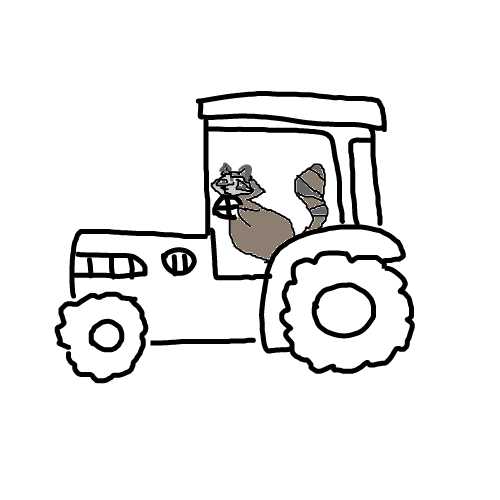

For comparison, that same prompt generated from scratch turned out really neat (I let it run for about 6 hours which equated to 20,000 iterations):

cartoon raccoon driving a red tractor with big black tires in front of a blue sky and white fluffy clouds | rendered in unreal engine

Comparing the Scores

I was interested to see how highly CLIP scored the GAN-generated images on the paint.wtf prompts compared to human drawings. This is measured by comparing the cosine similarity of the CLIP feature vectors between the text prompt and each of the images. A higher similarity means a closer match.

Across the 21 paint.wtf prompts with 1000 or more human submissions, the highest scoring drawing for the humans averaged a cosine similarity of 0.370.

The overall best match across all 100,000+ submissions was this drawing of a hunting license in Utah with a similarity of 0.460 (Note: it was penalized on the paint.wtf leaderboard for "cheating" by using text; the best match without text was this elephant on top of a house with a CLIP cosine similarity of 0.441).

On the "raccoon driving a tractor" prompt, the best human score was 0.425.

In comparison, VQGAN scored a whopping 0.564! That's significantly better than any human drawing on any prompt in paint.wtf. This makes a lot of sense because the GAN was able to "cheat" by colluding with the judge to steer in the "right" direction to optimize its score.

Interestingly, both the humans and the GAN scored higher than any photo/caption did in a sample of 1 million images from Unsplash.

I was also curious about the reverse task: given an image, craft a text prompt with the highest cosine similarity according to CLIP that you can. My personal best was 0.4565 – better than a human drawing but worse than a GAN-generated image. This might make a fun followup game.

Observations

Throughout these experiments I learned quite a few interesting things.

- The composition of a generated image gets locked in very early. If it's not looking promising by iteration 100 or so you should kill it and start over from a new seed or with a new prompt. I'd like to modify the notebook to concurrently generate 10 or 20 different starting points until the 100 iteration mark, save the checkpoints, and let you choose which one to continue from.

- There are diminishing returns to more iterations. After 2000 or so details might change a bit but things don't tend to improve that much.

- I found myself wanting to more actively steer. Changing the prompt in the middle of a generation to "take a right turn" would be interesting to experiment with.

- The "unreal engine" trick didn't actually help that much. It's a cool modifier that can get artsy effects but it detracts from how closely things seem to match the prompt.

- Using the pipe to split a prompt into separate prompts that are steered towards independently may be counterproductive. For example, steering towards

cartoon vector drawing of a raccoon driving a tractorlooked better to me thana raccoon driving a tractor | cartoon vector drawing.

Conclusion

CLIP+VQGAN is a fun and powerful tool. There is still much to be discovered and unlocked. The more people experimenting with the model the quicker we'll learn about how to control it! Try out the Colab Notebook and let me know on twitter if you discover anything interesting.

If you're interested in building other applications using CLIP, check out our guide on building an image search engine powered by CLIP.

By the way, if these types of experiments are the types of thing that are interesting to you, you'd probably enjoy working with us! We're innately curious people. And we're hiring.

Cite this Post

Use the following entry to cite this post in your research:

Brad Dwyer. (Jul 25, 2021). Experimenting with CLIP and VQGAN to Create AI Generated Art. Roboflow Blog: https://blog.roboflow.com/ai-generated-art/