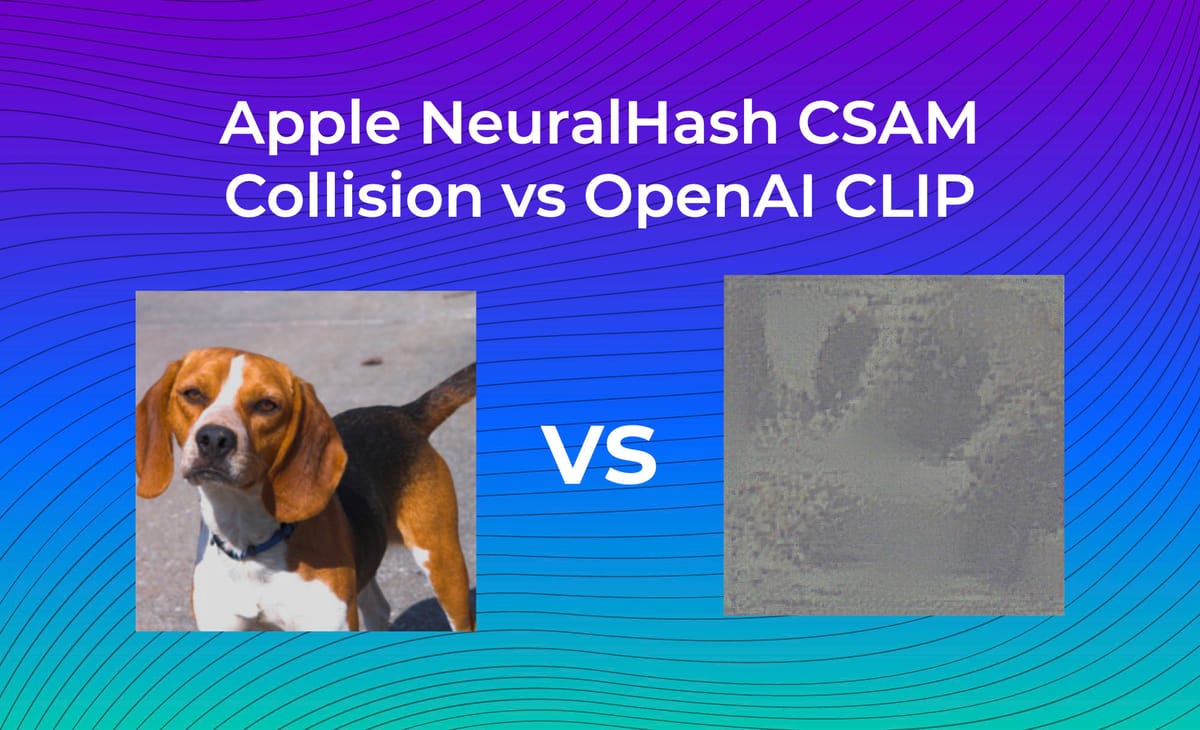

This morning, Hacker News was ablaze with several stories about vulnerabilities in Apple's CSAM NeuralHash algorithm. Researchers had found a way to convert the NeuralHash model to ONNX format and reverse engineer a hash collision (in other words: they created an artificial image with the same hash as a real image).

These two images look identical to Apple's NeuralHash algorithm.

This is a problem because it means an attacker could craft benign images that trigger Apple's code to (falsely) detect them as CSAM. While Apple's process has humans review these images before alerting authorities, collisions create a mechanism by which the human review queue could be flooded with junk images.

This probably isn't a problem for Apple in practice

I was curious how these images look to a similar but different neural feature extractor: OpenAI's CLIP. CLIP works in a similar way to NeuralHash: it takes an image and uses a neural network to produce a feature vector that encodes the image's contents.

But OpenAI's network is different in that it is a general purpose model that can map between images and text. This means we can use it to extract human-understandable information about images.

I ran the two colliding images above through CLIP to see if it was also fooled. The short answer is: it was not. This means that Apple should be able to apply a second feature-extractor network like CLIP to images flagged by NeuralHash to determine whether they are real matches or artificial collisions. It would be much harder to generate an image that simultaneously fools both networks.


How CLIP sees the colliding images

To see how CLIP interprets each image, I wrote a script that compares the feature vectors of the 10,000 most common words in the English language with the feature vectors extracted from the two images above.
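The script itself isn't reproduced in this post, but the ranking step can be sketched roughly as follows. This is a minimal sketch, not the original code: it assumes the image and word embeddings have already been computed (for example with `model.encode_image` and `model.encode_text` from OpenAI's `clip` package), and it assumes cosine similarity as the measure of closeness. The names `cosine_similarity` and `closest_words` are hypothetical helpers made up for illustration.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def closest_words(
    image_vec: Sequence[float],
    word_vecs: Dict[str, Sequence[float]],
    k: int = 10,
) -> List[Tuple[str, float]]:
    """Return the k words whose embeddings are most similar to the image embedding.

    Assumption: image_vec and every vector in word_vecs come from the same
    model (e.g. CLIP), so they live in one shared embedding space.
    """
    scored = [(word, cosine_similarity(image_vec, vec)) for word, vec in word_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)  # highest similarity first
    return scored[:k]
```

Calling `closest_words(image_vec, word_vecs, k=10)` once per image would produce a ranked list of word and score pairs in the same shape as the ones shown below.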

For the real image (of the dog), the 10 closest English words according to CLIP were:

lycos 0.2505926318654958

dog 0.24832078558591447

max 0.24561881750210326

duke 0.24496333640708098

franklin 0.24365466638044955

duncan 0.2422463574688551

tex 0.2421228800579409

oscar 0.2420606738013696

aj 0.24109170618481915

carlos 0.24089544292527865

And for the fake image, the closest words were:

generated 0.28688217846704156

ir 0.28601858485497855

computed 0.2738434877999296

lcd 0.27321733530015463

tile 0.27260846605722056

canvas 0.2715020955351239

gray 0.2711179601100141

latitude 0.2709974225452176

sequences 0.27087782102658364

negative 0.2707182921325215

As you can see, it wasn't fooled in the least. Apple should be able to ask a network similar to CLIP questions like "is this a generated image?" and "is this a CSAM image?" and use its answers as a sanity check before flagging an image to leave your phone and go to human review.
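One way such a sanity check might work is a zero-shot comparison: score the image against a handful of text prompts and see which one wins. The sketch below is illustrative only and says nothing about how Apple's pipeline is built. It assumes you already have the image's similarity to each prompt, and the prompt strings, the `looks_generated` helper, the scale of 100, and the 0.5 threshold are all assumptions chosen for the example.

```python
import math
from typing import Dict, List


def softmax(scores: List[float], scale: float = 100.0) -> List[float]:
    """Turn similarity scores into probabilities.

    The scale sharpens small differences between similarities;
    100.0 is an assumed value, not a tuned one.
    """
    top = max(scores)
    exps = [math.exp(scale * (s - top)) for s in scores]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]


def looks_generated(
    similarities: Dict[str, float],
    generated_label: str = "a computer-generated image",
    threshold: float = 0.5,
) -> bool:
    """Return True if the 'generated' prompt gets at least `threshold` probability.

    `similarities` maps each text prompt to its similarity with the image.
    The prompt wording and the threshold are hypothetical.
    """
    labels = list(similarities)
    probs = softmax([similarities[label] for label in labels])
    return dict(zip(labels, probs))[generated_label] >= threshold
```

An image that NeuralHash flags but that a check like this marks as generated could be held back from the human review queue instead of being forwarded.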

Caveats

That isn't to say that Apple's CSAM detection is infallible (or even a good idea in the first place), but there are workarounds to this particular NeuralHash collision exploit, which means the system is not dead in the water just yet. And Apple: if you need help with using CLIP, drop us a line!

If you want to learn more about how CLIP can be applied in novel ways, check out our other related posts:

- ELI5 CLIP: A Beginner's Guide to the CLIP Model

- How we Built Paint.wtf (A CLIP-powered pictionary game)

- How to try CLIP: OpenAI's Zero-Shot Image Classifier

- Zero-Shot Content Moderation with OpenAI's new CLIP Model

- Prompt Engineering: The Magic Words to using OpenAI's CLIP

- Experimenting with CLIP+VQGAN to Create AI Generated Art

Cite this Post

Use the following entry to cite this post in your research:

Brad Dwyer. (Aug 18, 2021). Mitigating the Collision of Apple's CSAM NeuralHash. Roboflow Blog: https://blog.roboflow.com/apples-csam-neuralhash-collision/