This blog post is contributed by Hart Woolery, Founder and CEO, 2020CV.

Introduction

I’ve always been fascinated by special effects in movies and video games. My career took an interesting turn in 2017, when I began experimenting with OpenCV in hopes of landing a job in computer vision, which seemed to be a popular field for job postings at the time. Little did I know that a ridiculous side project involving virtual hand puppets would eventually land me a deal with famed investor Mark Cuban and lead to the formation of my company, 2020CV.

Computer vision-powered AR experience

The AR experience shown above was created with 2020CV’s PopFX software.

Computer Vision and Augmented Reality

As machine learning and augmented reality (ML and AR) were just becoming feasible to run on mobile devices at the time, I went all in and created InstaSaber, one of the first mobile experiences to combine these worlds: a way to turn a roll of paper into a virtual lightsaber.

Fast-forward to 2024, and I’m still building these experiences. My latest creation, PopFX, is used to create live AR for video productions such as NBA highlights and the Super Bowl. All of the model datasets for these were created using Roboflow!

How to Build AR Applications Using Computer Vision

Shaping Ideas for AR Experiences

I usually start by thinking of an effect that would delight the user and has some element of novelty. It’s also important to consider the user experience, how the cameras are positioned, and whether the target object can feasibly be tracked in real time (tip: fast-moving objects are hard to track).

Collecting and Labeling Data for AR Experiences

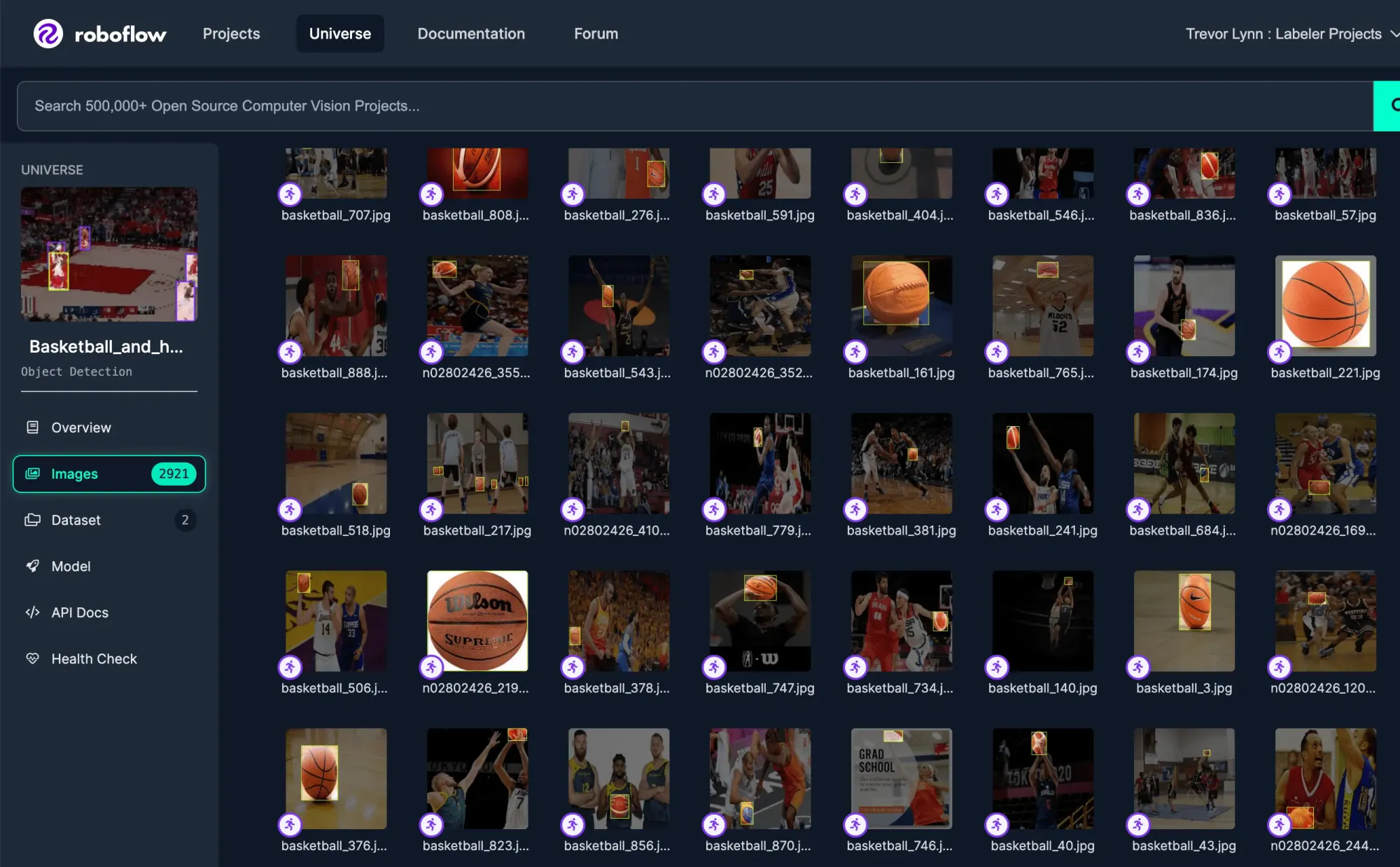

Next, you’ll want to gather image data, ideally with content similar to what you expect the user’s camera to produce (tip: use Roboflow to extract frames from a video). Once you have the image dataset, you can label it in Roboflow, e.g. by drawing bounding boxes around your desired subjects. Roboflow’s tools are advanced enough to automate this process in many cases, or at least to make labeling a few hundred images trivial.
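Roboflow stores and exports labels for you, but it can help to know what a bounding box label looks like on disk. As a minimal sketch, assuming the common YOLO text format (class id, then box center x/y, width, and height, each normalized to the 0 to 1 range by the image size), a pixel-space box drawn around a subject converts like this. The function name `to_yolo_line` and the example values are hypothetical.

```python
def to_yolo_line(class_id: int, x_min: float, y_min: float, x_max: float, y_max: float,
                 img_w: int, img_h: int) -> str:
    """Convert a pixel-space box (corner coordinates) to a YOLO-format label line.

    Assumes the YOLO convention: "class_id x_center y_center width height",
    with the four box values divided by the image width/height.
    """
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError("box must lie inside the image and have positive size")
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# Example: a 200x100 px box inside a 640x480 video frame (hypothetical values).
print(to_yolo_line(0, 100, 140, 300, 240, 640, 480))
```

Normalizing by image size keeps the labels valid if frames are later resized for training, which is one reason this format is popular for detection models.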

Creating a Robust Dataset for Model Training

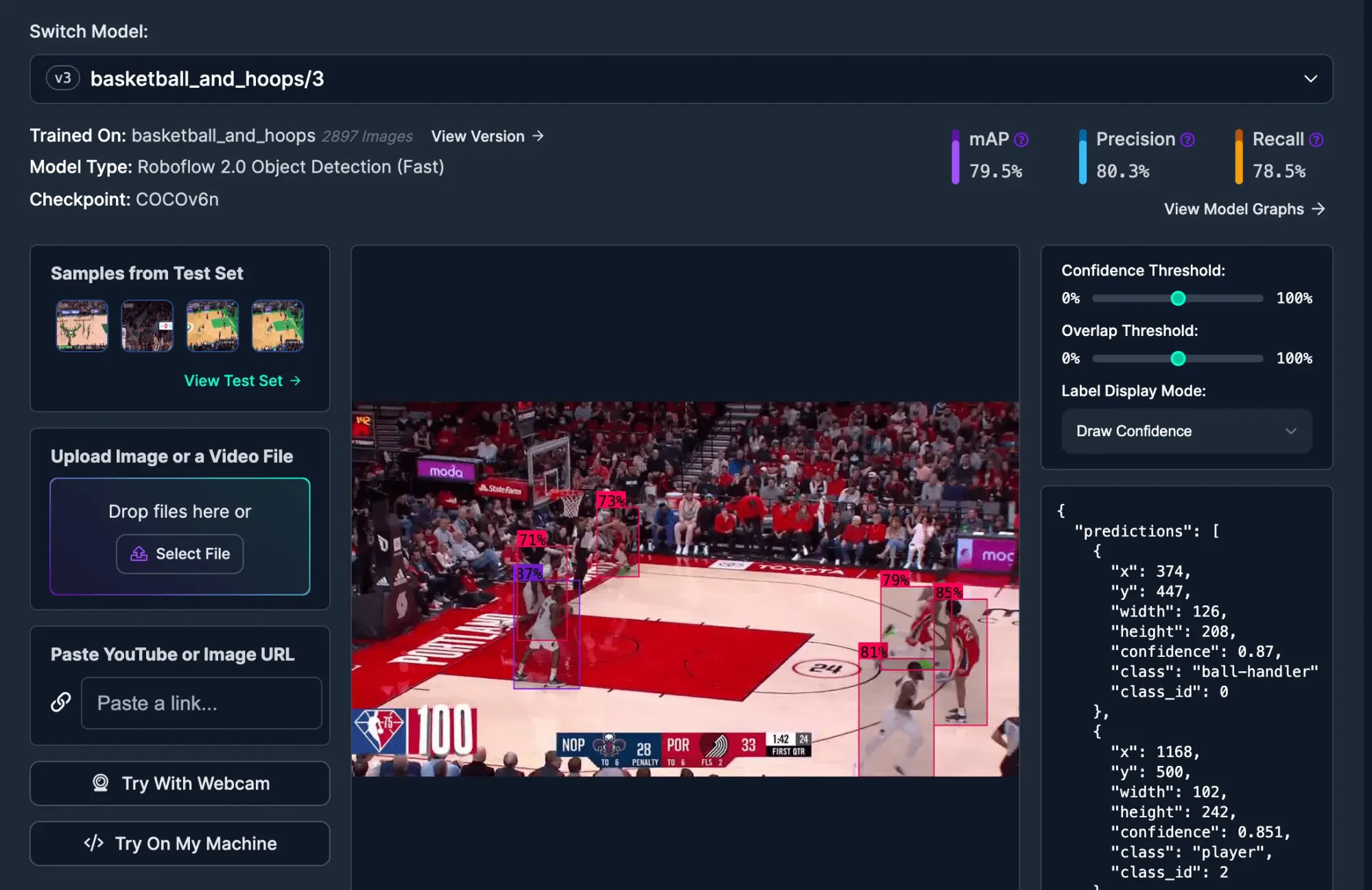

After your dataset is complete, you can augment it to expand its size, then train a model either using one of Roboflow’s many notebooks (tip: sites like Google Colab offer free tiers) or directly inside Roboflow. At that point, you can usually visualize a model’s results with one of Roboflow’s libraries, such as Supervision or Inference.
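Roboflow applies augmentations for you, but one detail worth understanding is that geometric augmentations must move the labels along with the pixels. Below is a minimal sketch of a horizontal flip, using plain Python lists as a stand-in for an image array (a real pipeline would use NumPy or a library such as Albumentations). The function names and the half-open box convention `[x_min, x_max)` are assumptions for illustration.

```python
from typing import List, Tuple

# A box is (x_min, y_min, x_max, y_max) in pixels, with x_max/y_max exclusive.
Box = Tuple[float, float, float, float]
# A stand-in for a real image array: a list of pixel rows.
Image = List[List[int]]


def hflip_with_boxes(image: Image, boxes: List[Box]) -> Tuple[Image, List[Box]]:
    """Mirror an image left-to-right and remap its boxes to match."""
    width = len(image[0]) if image else 0
    flipped_image = [list(reversed(row)) for row in image]
    # Pixel column x moves to (width - 1 - x), so a box spanning
    # [x_min, x_max) now spans [width - x_max, width - x_min).
    flipped_boxes = [(width - x_max, y_min, width - x_min, y_max)
                     for (x_min, y_min, x_max, y_max) in boxes]
    return flipped_image, flipped_boxes


def augment_with_flips(samples: List[Tuple[Image, List[Box]]]) -> List[Tuple[Image, List[Box]]]:
    """Return the original samples plus a flipped copy of each (2x the data)."""
    return list(samples) + [hflip_with_boxes(img, boxes) for img, boxes in samples]


# Example: a 2x4 "image" with one box covering the leftmost column.
image = [[1, 2, 3, 4],
         [5, 6, 7, 8]]
flipped, boxes = hflip_with_boxes(image, [(0, 0, 1, 2)])
print(flipped)  # [[4, 3, 2, 1], [8, 7, 6, 5]]
print(boxes)    # [(3, 0, 4, 2)]
```

Only label-preserving augmentations make sense here: a horizontal flip suits most hand or object detectors, but it would be wrong for a class whose identity depends on orientation (for example, distinguishing a left hand from a right hand).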

Ultimately, you’ll want to convert your model weights to an AR-friendly format, such as Core ML or TensorFlow Lite, or export directly from Roboflow to Snapchat. Conversion scripts, usually written in Python, are available for most common model architectures (tip: prototype your idea with a pre-trained model, and move to a custom one when you are ready).

Building AR Applications with Computer Vision

The last, and generally most complex, piece of creating the AR experience is tying together the camera, rendering, and machine learning pipelines into a seamless experience.

This is beyond the scope of this post, but there are many Xcode and Python projects on GitHub that serve as good starting points. Roboflow also provides a few sample projects that demonstrate how to load your models dynamically.
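One small piece of that pipeline that often matters for a seamless result is stabilizing detections between frames, since raw per-frame boxes tend to jitter and make rendered effects shake. The sketch below uses an exponential moving average, a common generic technique and not necessarily what PopFX or any Roboflow sample does; the class name `BoxSmoother` and its `alpha` parameter are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# A box is (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


@dataclass
class BoxSmoother:
    """Exponential moving average over per-frame box detections.

    alpha near 1.0 follows new detections closely (responsive but jittery);
    alpha near 0.0 favors history (smooth but lags fast-moving objects).
    """
    alpha: float = 0.5
    state: Optional[Box] = None

    def __post_init__(self) -> None:
        if not 0.0 < self.alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")

    def update(self, detection: Optional[Box]) -> Optional[Box]:
        if detection is None:
            # No detection this frame: hold the last smoothed box.
            return self.state
        if self.state is None:
            # First detection: nothing to blend with yet.
            self.state = detection
        else:
            a = self.alpha
            self.state = tuple(a * new + (1 - a) * old
                               for new, old in zip(detection, self.state))
        return self.state


# Example: smoothing a short, hypothetical sequence of per-frame detections.
smoother = BoxSmoother(alpha=0.5)
for det in [(0, 0, 10, 10), (10, 10, 20, 20), None]:
    print(smoother.update(det))
```

The choice of `alpha` is the same trade-off behind the earlier tip about fast-moving objects: heavier smoothing hides model noise but makes the overlay trail behind quick motion.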

Conclusion

Creating an AR experience with computer vision is an undertaking that takes considerable time and effort. But there are friendly tools, such as Snap’s Lens Studio (tip: it can also import Roboflow models), that let you prototype these types of experiences if you just want to dip your toes in the water. I hope you enjoyed my overview of creating AR experiences, and I’m excited to see what you create!