When you are training computer vision models, deduplicating images in your dataset is helpful. While having many examples of images gathered from an environment in which your model will be deployed is helpful, images that are almost exact may not be useful as your model scales.

In this guide, we are going to demonstrate how to use OpenAI’s Contrastive Language–Image Pre-training (CLIP) to identify near duplicates to an image in a dataset. We will use this to identify images that could be removed from a training set to improve the accuracy of multimodal models. We will run CLIP on an Intel Habana Gaudi2 chip, which has been optimized for high performance computer vision tasks.

For example, consider a model designed to identify objects related to logistics (i.e. wooden pallets). If you have many images that are taken of the same wooden pallet that are almost identical, you could remove some of the near duplicates. This will reduce time it takes to train your model, ideal if you are working with millions or hundreds of thousands of images.

By removing images that are identical or almost identical, you can reduce the number of images with which you train and preserve strong accuracy. Methods like image hashing can be used to remove exact duplicates, but to remove near duplicates we need a more advanced solution.

Without further ado, let’s get started!

What is CLIP?

Contrastive Language Image Pre-training (CLIP) is a computer vision model developed by OpenAI. You can use CLIP to calculate image embeddings. Image embeddings can be compared using a similarity metric to identify the extent to which two images are similar. We will use Euclidean distance.

We are going to use CLIP to improve a large dataset by deduplicating near duplicate images. Model training time increases for each image you have, but performance does not necessarily increase with more images. It is about the quality of images you have. Indeed, near duplicates provide less valuable information to models. Our dataset contains 99,238 images across 20 classes that relate to logistics.

For this guide, we'll look at the 18,988 images in the valid set. In our testing, we can compute vectors and identify similar images through distance comparisons in under six minutes for a dataset of this size.

To deduplicate our images, we will:

- Install CLIP.

- Calculate CLIP vectors for each image.

- Compare each CLIP vector to identify similar vectors.

Cosine similarity operations are fast, so we can compare each CLIP vector manually without optimization for a dataset of our size. Learn more about image vector analysis.

If you are working with millions of images, you may consider storing vectors in a vector database (like Pinecone), identifying the N most related images (i.e. 10), then using the cosine similarity math to see if vectors are too similar (i.e. over 95% similar) to a given image.

Note that if your images are nearly identical and only a few pixels are changing, CLIP will not be the right model to improve your dataset. This is because CLIP performs best at images with clear differences, rather than tiny differences.

Install Dependencies

We are going to use the Transformers implementation of CLIP. This implementation has been enhanced for use on a Gaudi2 chip, allowing us to achieve strong performance when we calculate vectors.

To install Transformers, run the following command:

pip install transformersWith Transformers installed, we can now write logic to calculate CLIP vectors for a dataset.

For this guide, we are going to use the Logistics dataset on Roboflow Universe. We can download this dataset using the Roboflow Python package. To download the Roboflow Python package, run the following command:

pip install roboflowTo download our dataset, create a new Python file and add the following code:

import roboflow

roboflow.login()

rf = roboflow.Roboflow(api_key="")

project = rf.workspace("large-benchmark-datasets").project("logistics-sz9jr")

dataset = project.version(2).download("coco")You will be asked to authenticate with Roboflow when you first run this script. The dataset will be saved in a folder called “Logistics-2”. Alternatively, you can use any dataset with which you are working.

Calculate CLIP Vectors for a Dataset

Create a new Python file and add the following code:

import json

import os

import faiss

import numpy as np

import tqdm

from PIL import Image

from transformers import (AutoImageProcessor, AutoTokenizer,

VisionTextDualEncoderModel,

VisionTextDualEncoderProcessor)

try:

import habana_frameworks.torch.core as htcore

DEVICE = "hpu"

except:

DEVICE = "cpu"

model = VisionTextDualEncoderModel.from_vision_text_pretrained(

"openai/clip-vit-base-patch32", "roberta-base"

).to(DEVICE)

tokenizer = AutoTokenizer.from_pretrained("roberta-base")

image_processor = AutoImageProcessor.from_pretrained("openai/clip-vit-base-patch32")

processor = VisionTextDualEncoderProcessor(image_processor, tokenizer)

dataset_location = "Logistics-2/valid"

images = [i for i in os.listdir(dataset_location) if i.endswith(".jpg")]

batch_size = 32

batches = [

images[i : i + batch_size] for i in range(0, len(images), batch_size)

]

index = faiss.IndexFlatL2(512)

vectors = []

# Process images in batches and collect vectors

for batch in tqdm.tqdm(batches):

batch_images = [Image.open(f"{dataset_location}/{i}") for i in batch]

inputs = processor(images=batch_images, return_tensors="pt").to(DEVICE)

outputs = model.get_image_features(**inputs).to(DEVICE)

outputs = outputs.cpu().detach().numpy()

for i in outputs:

index.add(np.array([i]))

vectors.append(i)

[i.close() for i in batch_images]

# Build a dictionary to hold the similar images for each image

images_dict = {img: {"similar": []} for img in images}

# Populate the dictionary with similar images

for idx, img in enumerate(tqdm.tqdm(images)):

# Find the top 5 nearest neighbors for each image

k = 5 # Number of nearest neighbors to find

D, I = index.search(np.array([vectors[idx]]), k)

for i, distance in zip(I[0], D[0]):

# normalize the distance to be between 0 and 1

distance = 1 - distance / np.max(D)

if i != idx and distance > 0.9:

images_dict[img]["similar"].append(

{"image": images[i], "distance": distance.item()}

)

# Save the results to a JSON file

with open("deduped.json", "w+") as f:

json.dump(images_dict, f)In this code, we compute CLIP vectors for every image in the “Logistics-2/valid” folder. Replace the folder name with the name of the folder in which the images you want to deduplicate are stored.

In this code, we split our dataset of 18,988 images into batches of 32. We send each image batch to the HPU for processing. We keep track of these vectors in memory.

You should see the following text in the output, which signifies that the script was able to successfully connect to your HPU:

============================= HABANA PT BRIDGE CONFIGURATION ===========================

PT_HPU_LAZY_MODE = 1

PT_RECIPE_CACHE_PATH =

PT_CACHE_FOLDER_DELETE = 0

PT_HPU_RECIPE_CACHE_CONFIG =

PT_HPU_MAX_COMPOUND_OP_SIZE = 9223372036854775807

PT_HPU_LAZY_ACC_PAR_MODE = 1

PT_HPU_ENABLE_REFINE_DYNAMIC_SHAPES = 0

---------------------------: System Configuration :---------------------------

Num CPU Cores : 160

CPU RAM : 1056389528 KB

------------------------------------------------------------------------------The text will look slightly different depending on your system specifications.

We are saving all the CLIP vectors in memory which works for our scale (~20,000 images for the test set). For larger datasets (i.e in the multiple hundreds of thousands or millions of images), you may want to use a vector database like faiss to store vectors. This depends on the RAM available on your system.

We then compare each image embedding. If two image embeddings are similar, we keep track of the similarity in a dictionary. The dictionary takes the following form:

{“file_name”: [{“image”: “file.png”, similarity: 0.9001}]Where the list represents similar images.

We only measure the similarity of a vector if it has not already been marked as similar to another image. This allows us to be more efficient in our computations. For example, if we know that “file1.png” is similar to “file100.png”, it is not necessary to calculate if “file100.png” is similar to an image since we already know it is. We use the “similar” set to track images that have already been marked as similar to an image to track which images we want to skip.

We save this dictionary to a file called “duplicates.json”. With the information in the dictionary, you can make a determination about what to do next. For example, you may want to remove all images that have a similarity of 0.9 or greater from your dataset. This would remove any images 90% similar to an image in your dataset.

You may want to remove images after a manual review to ensure that images are not of interest. For example, two images may be almost identical but one may contain a small object of interest that you want to annotate.

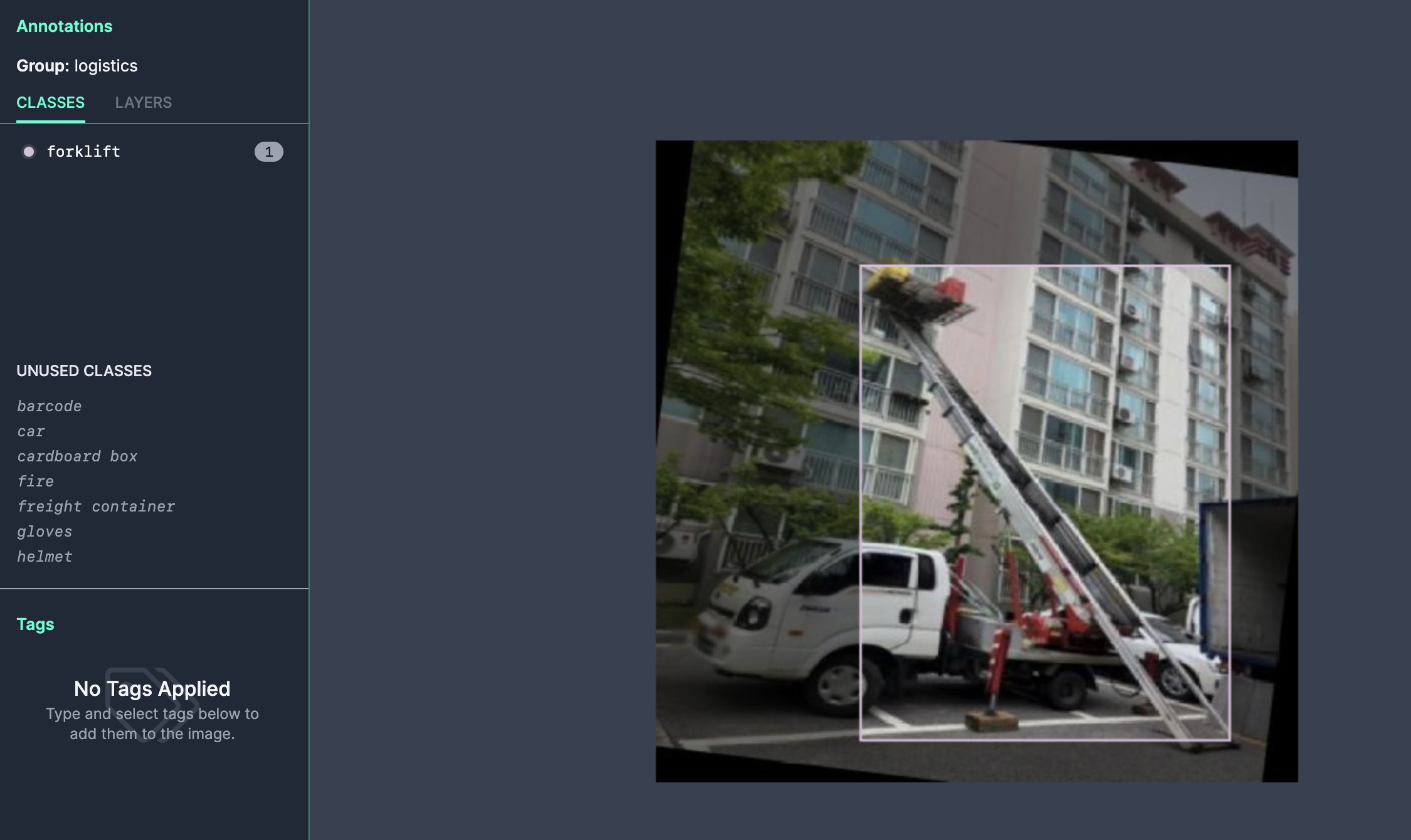

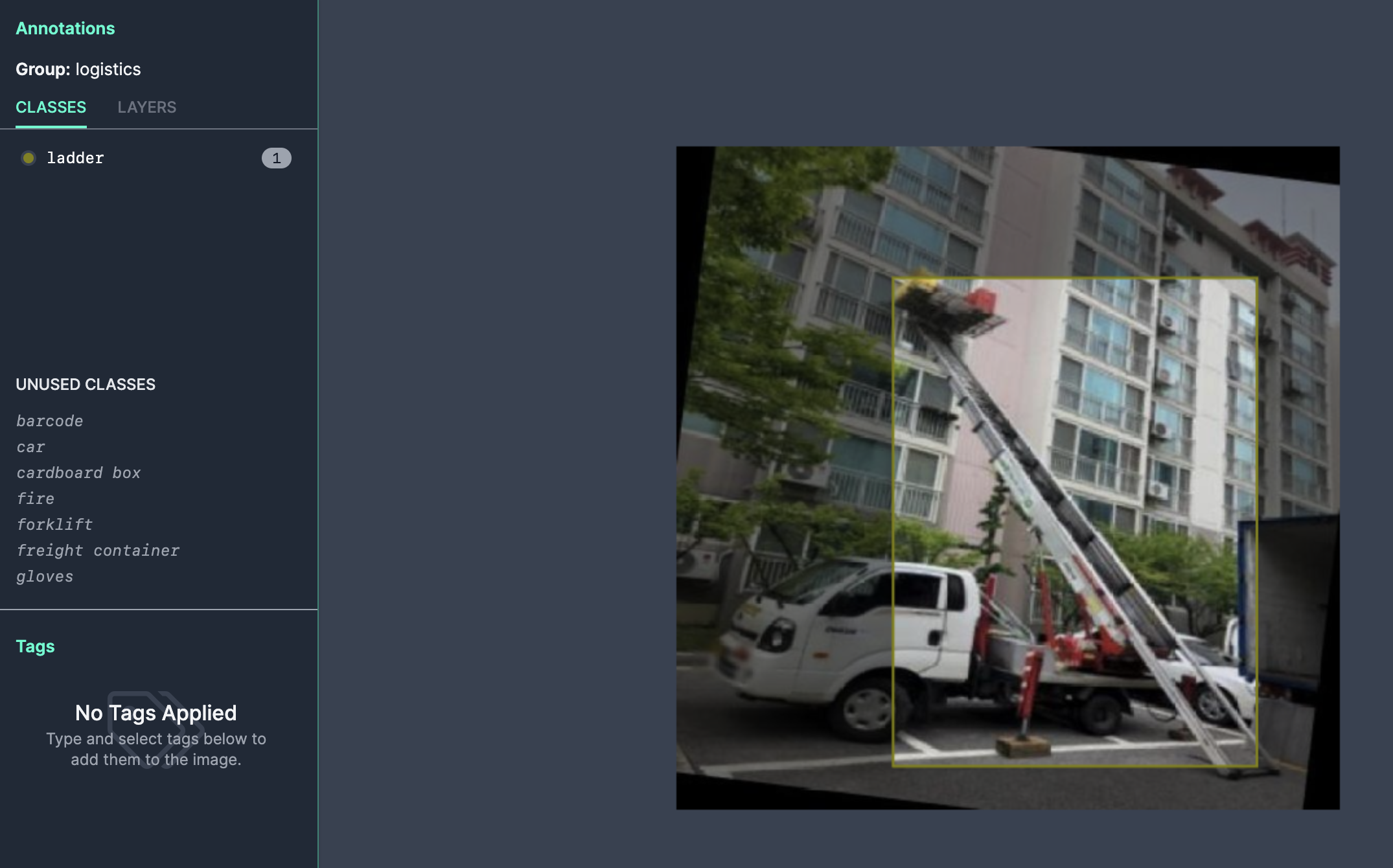

Consider the following image:

This image is Forklift_275_jpg.rf.4468df3379d97965e084f08cb0cbe8d8.jpg. Our code found that Forklift_281_jpg.rf.a17605279befd66b9e6e959d961c388c.jpg was > 90% similar to the image above. Opening up the other image, we can see it is almost identical to the one above:

Our system successfully identified a duplicate image.

We can use these file names to investigate our annotations, too. For example, using the Roboflow web interface, we can look up each duplicate file in our Logistics dataset that we downloaded from Roboflow. We can check the annotations for each image.

In one random check, the same image was in the validation set twice with two different labels:

In this example, the same object in the same image has been labeled two ways: in the first image, the object is labeled as a forklift. In the second, the image is labeled as a ladder.

Next Steps

Using the code above, you can identify near or exact duplicate images in your dataset. You can look up each image in your dataset to either remove duplicates or, in the case where images are annotated, review annotations to ensure the image you don't delete has the correct label.

After deduplicating your dataset, the next step is to train a model using your dataset. With a Gaudi2, you can train a wide range of models, including CLIP-like models, ViT image classification models, and more. The Gaudi2 is optimized to handle large training jobs, allowing you to reliably and dependably train the models you need for your enterprise. You can also train across multiple Gaudi2 HPU chips, allowing you to achieve faster training jobs.

After you have trained the first version of your model, you can start to work on refining the system. Indeed, a model is not “complete” at any stage; requirements and environments in which models run change. Rather, you should continually iterate to improve performance and add more data over time if the environment in which your model changes.

To improve your model over time, you can use active learning, which allows you to continually collect new data for use in training a model.

Conclusion

When you are working with large image datasets in computer vision workloads, deduplicating images is useful. For example, having 1000 images that are almost identical may not be useful.

Traditional techniques for identifying image duplicates depend on hashing. The limitation with hashing is that image hashes only track exact duplicates. With CLIP, we can identify images that are almost identical. We can set our own threshold for what constitutes identical.

In this guide, we deduplicated an image dataset with over 20,000 images using CLIP. We identified the similarity between all images.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Feb 20, 2024). Build Enterprise Datasets with CLIP for Multimodal Model Training Using Intel Gaudi2 HPUs. Roboflow Blog: https://blog.roboflow.com/deduplicate-dataasets-clip-gaudi2/