The pace of innovation in multimodal AI is accelerating. Just months after the release of Gemini 2.5, Google has launched Gemini 3 Pro, a frontier model that redefines state-of-the-art performance for visual reasoning, video understanding, and complex agentic workflows.

In this guide, we explore what Gemini 3 Pro is, how it performs on key computer vision benchmarks, and how you can try it yourself and compare it against other top models using Roboflow Playground.

What Is Gemini 3 Pro?

Gemini 3 Pro is Google DeepMind’s latest flagship multimodal model, released on November 18, 2025. It is designed to be a "reasoning-first" model, capable of handling deep, multi-step tasks across text, code, images, audio, and video.

Unlike previous iterations that focused primarily on generation speed or context length, Gemini 3 Pro is paired with a "Deep Think" mode, an extended reasoning mode comparable to OpenAI's o1-style reasoning chains. It launched alongside Google Antigravity, Google's new agentic development platform.

Key Gemini 3 Pro Features

- 1 Million Token Context Window: Process entire codebases, long-form videos, or massive document sets in a single pass.

- Pixel-Precise Pointing: The model can output specific 2D coordinates for objects in images, enabling zero-shot object detection and keypoint estimation.

- Media Resolution Control: Developers can now tune the visual token usage, balancing cost against fidelity for tasks like dense OCR or fine-grained detail analysis.

- Native Tool Use: Enhanced capabilities for "agentic" workflows, allowing the model to write and execute code, browse the web, and use external APIs more reliably.
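To make the pointing feature concrete, here is a minimal sketch of turning a detection-style response into pixel boxes. It uses the `[ymin, xmin, ymax, xmax]` order described in the First Impressions section of this post. The JSON keys `box_2d` and `label`, the 0 to 1000 normalization scale, and the sample response are assumptions for illustration, so check them against the output you actually receive.

```python
import json
from typing import NamedTuple


class PixelBox(NamedTuple):
    label: str
    x_min: int
    y_min: int
    x_max: int
    y_max: int


def to_pixel_boxes(response_text: str, image_width: int, image_height: int,
                   scale: int = 1000) -> list[PixelBox]:
    """Convert normalized [ymin, xmin, ymax, xmax] boxes to pixel coordinates.

    `scale` is the assumed normalization range (0..1000); adjust it if your
    responses use a different range such as 0..1.
    """
    boxes = []
    for item in json.loads(response_text):
        y_min, x_min, y_max, x_max = item["box_2d"]  # assumed key name
        boxes.append(PixelBox(
            label=item.get("label", "object"),       # assumed key name
            x_min=round(x_min / scale * image_width),
            y_min=round(y_min / scale * image_height),
            x_max=round(x_max / scale * image_width),
            y_max=round(y_max / scale * image_height),
        ))
    return boxes


# Hypothetical model output for a 1280x720 image (not a real response).
sample = '[{"box_2d": [250, 100, 750, 500], "label": "forklift"}]'
print(to_pixel_boxes(sample, image_width=1280, image_height=720))
```

Note that the y values come first in each box, so they scale by image height and the x values by image width. Swapping the two is the most common mistake when drawing these boxes.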

Gemini 3 Pro Benchmarks

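Gemini 3 Pro leads on nearly every benchmark in the table below, with the largest margins in visual and spatial reasoning. The main exception is SWE-Bench Verified, where Claude Sonnet 4.5 (77.2%) and GPT-5.1 (76.3%) edge out its 76.2%.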

| Benchmark | Setting | Gemini 3 Pro | Gemini 2.5 Pro | Claude Sonnet 4.5 | GPT-5.1 |
|---|---|---|---|---|---|
| Humanity's Last Exam (academic reasoning) | No tools | 37.5% | 21.6% | 13.7% | 26.5% |
| Humanity's Last Exam (academic reasoning) | With search and code execution | 45.8% | — | — | — |
| ARC-AGI-2 (visual reasoning puzzles) | ARC Prize Verified | 31.1% | 4.9% | 13.6% | 17.6% |
| GPQA Diamond (scientific knowledge) | No tools | 91.9% | 86.4% | 83.4% | 88.1% |
| AIME 2025 (mathematics) | No tools | 95.0% | 88.0% | 87.0% | 94.0% |
| AIME 2025 (mathematics) | With code execution | 100.0% | — | 100.0% | — |
| MathArena Apex (challenging math contest problems) | | 23.4% | 0.5% | 1.6% | 1.0% |
| MMMU-Pro (multimodal understanding and reasoning) | | 81.0% | 68.0% | 68.0% | 76.0% |
| ScreenSpot-Pro (screen understanding) | | 72.7% | 11.4% | 36.2% | 3.5% |
| CharXiv Reasoning (information synthesis from complex charts) | | 81.4% | 69.6% | 68.5% | 69.5% |
| OmniDocBench 1.5 (OCR) | Overall Edit Distance, lower is better | 0.115 | 0.145 | 0.145 | 0.147 |
| Video-MMMU (knowledge acquisition from videos) | | 87.6% | 83.6% | 77.8% | 80.4% |
| LiveCodeBench Pro (competitive coding problems) | Elo Rating, higher is better | 2,439 | 1,775 | 1,418 | 2,243 |
| Terminal-Bench 2.0 (agentic terminal coding) | Terminus-2 agent | 54.2% | 32.6% | 42.8% | 47.6% |
| SWE-Bench Verified (agentic coding) | Single attempt | 76.2% | 59.6% | 77.2% | 76.3% |
| τ2-bench (agentic tool use) | | 85.4% | 54.9% | 84.7% | 80.2% |
| Vending-Bench 2 (long-horizon agentic tasks) | Net worth (mean), higher is better | $5,478.16 | $573.64 | $3,838.74 | $1,473.43 |
| FACTS Benchmark Suite (held-out internal grounding, parametric, MM, and search retrieval benchmarks) | | 70.5% | 63.4% | 50.4% | 50.8% |
| SimpleQA Verified (parametric knowledge) | | 72.1% | 54.5% | 29.3% | 34.9% |
| MMMLU (multilingual Q&A) | | 91.8% | 89.5% | 89.1% | 91.0% |
| Global PIQA (commonsense reasoning across 100 languages and cultures) | | 93.4% | 91.5% | 90.1% | 90.9% |
| MRCR v2, 8-needle (long context performance) | 128k (average) | 77.0% | 58.0% | 47.1% | 61.6% |
| MRCR v2, 8-needle (long context performance) | 1M (pointwise) | 26.3% | 16.4% | not supported | not supported |

1. Visual Reasoning (ARC-AGI-2)

On the ARC-AGI-2 benchmark, which tests abstract visual reasoning and pattern recognition, Gemini 3 Pro achieved a score of 31.1%. For context, this is roughly 1.8 times the score of GPT-5.1 (17.6%) and more than six times that of Gemini 2.5 Pro (4.9%).
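To check the size of those gaps yourself, the short snippet below computes the ratio of Gemini 3 Pro's ARC-AGI-2 score to each other model's score. The numbers are copied from the benchmark table above; the helper name `ratio_to` is just an illustrative choice.

```python
# ARC-AGI-2 scores in percent, copied from the benchmark table in this post.
arc_agi_2 = {
    "Gemini 3 Pro": 31.1,
    "GPT-5.1": 17.6,
    "Claude Sonnet 4.5": 13.6,
    "Gemini 2.5 Pro": 4.9,
}


def ratio_to(baseline: str, model: str = "Gemini 3 Pro") -> float:
    """Return how many times higher `model` scores than `baseline`."""
    return round(arc_agi_2[model] / arc_agi_2[baseline], 2)


for name in arc_agi_2:
    if name != "Gemini 3 Pro":
        print(f"Gemini 3 Pro vs {name}: {ratio_to(name)}x")
```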

2. Video Understanding (Video-MMMU)

Video analysis has historically been computationally expensive and accuracy-limited. Gemini 3 Pro scores 87.6% on Video-MMMU, ahead of Gemini 2.5 Pro (83.6%), GPT-5.1 (80.4%), and Claude Sonnet 4.5 (77.8%). The benchmark measures knowledge acquisition from videos, so a high score suggests the model can follow cause-and-effect relationships in long clips rather than just identify static objects.

3. Screen Understanding (ScreenSpot-Pro)

For autonomous agents, understanding user interfaces is critical. Gemini 3 Pro scored 72.7% on ScreenSpot-Pro, well ahead of Claude Sonnet 4.5 (36.2%) and GPT-5.1 (3.5%). This makes it a strong candidate for building robotic process automation (RPA) agents that "see" screens.

First Impressions of Gemini 3 Pro

In my initial testing, Gemini 3 Pro feels fundamentally different from the previous generation of models. Enabling "Deep Think" mode introduces variable latency: processing takes longer as the model "ponders" complex visual chains, but the payoff is a marked improvement in accuracy on spatial and logic-heavy tasks.

- Pros: The native "pointing" capability is the standout feature for computer vision developers. Unlike previous VLMs that offered vague bounding box approximations, Gemini 3 Pro returns precise normalized coordinates [ymin, xmin, ymax, xmax]. When combined with Roboflow's parsing tools, this effectively functions as a zero-shot object detector that requires no training data to identify obscure classes.

- Cons: The 1M token context window allows for analyzing hour-long video feeds, but inputs that large introduce significant time-to-first-token latency. Real-time applications may still prefer a "Flash" variant or require asynchronous workflows (polling) rather than synchronous API calls.
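The asynchronous pattern mentioned in the cons can be sketched with nothing but the Python standard library. In the example below, `slow_model_call` is a hypothetical stand-in that sleeps instead of contacting any API. The point is the shape of the loop: submit the request to a worker thread, then poll for completion while the main thread stays free for other work.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def slow_model_call(prompt: str) -> str:
    """Stand-in for a long-running model request (hypothetical, no network)."""
    time.sleep(0.3)
    return f"analysis of: {prompt}"


def submit_and_poll(prompt: str, poll_interval: float = 0.1,
                    timeout: float = 5.0) -> str:
    """Run the call in a worker thread and poll until it finishes or times out."""
    pool = ThreadPoolExecutor(max_workers=1)
    future = pool.submit(slow_model_call, prompt)
    deadline = time.monotonic() + timeout
    try:
        while not future.done():
            if time.monotonic() > deadline:
                raise TimeoutError("model call did not finish in time")
            # The caller can do other work here (update a UI, serve requests).
            time.sleep(poll_interval)
        return future.result()
    finally:
        # Do not block on the worker if we are leaving because of a timeout.
        pool.shutdown(wait=False)


print(submit_and_poll("hour-long warehouse video"))
```

In a real service you would more likely hand the job to a task queue and expose a status endpoint, but the submit-then-poll structure stays the same.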

View how Gemini 3 Pro currently ranks against other AI models in our Model Rankings Leaderboard.

How to Use Gemini 3 Pro on Roboflow Playground

You can try Gemini 3 Pro right now on Roboflow Playground.

Playground is a web-based interface that lets you test, compare, and evaluate more than 30 state-of-the-art vision models without writing any code. Gemini 3 Pro is available for multiple task types, including:

- Object Detection: Identify and locate objects.

- Optical Character Recognition (OCR): Read text from documents or scenes.

- Image Classification: Categorize entire images.

- Image Captioning: Generate detailed descriptions.

- Open Prompt (VQA): Ask natural language questions about your image.

To try out Gemini 3 Pro, follow this link and select a task (e.g., object detection). Upload an image, define your classes, and run the model.

Comparing Gemini 3 Pro vs. Other Models

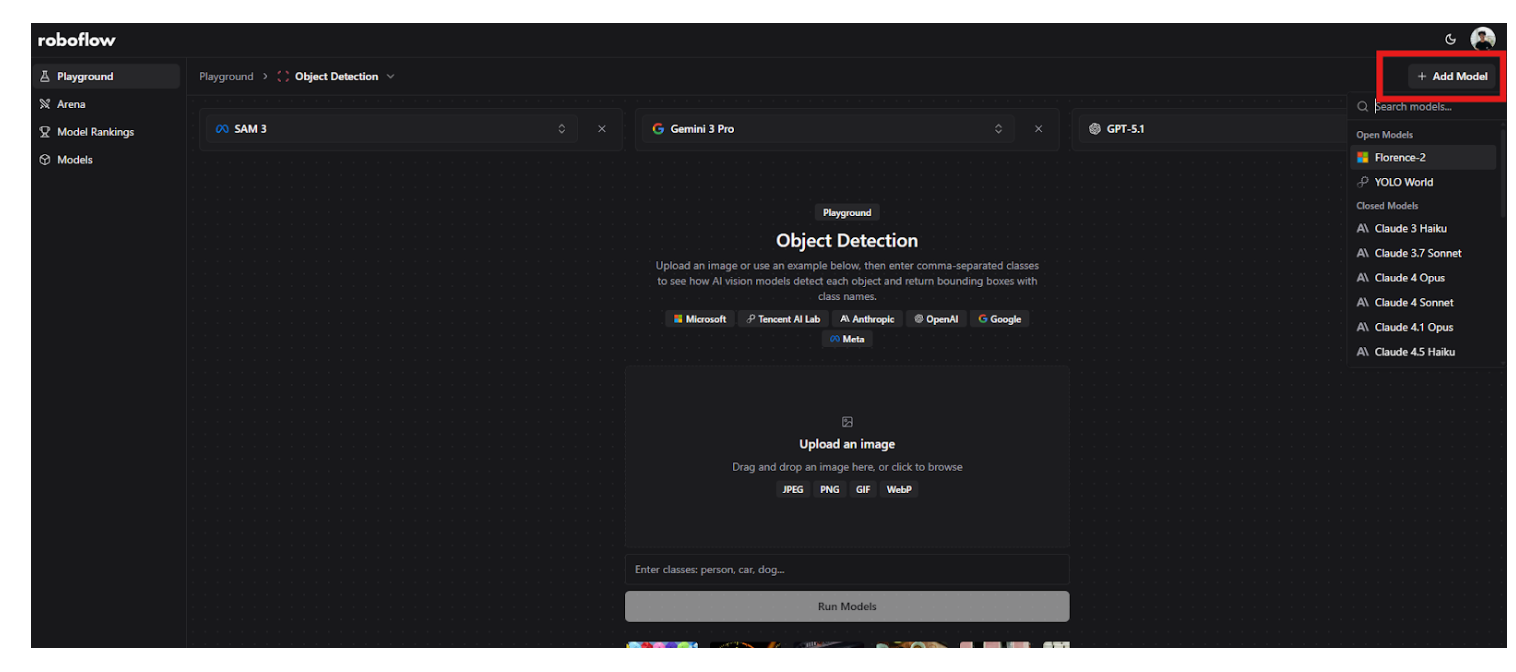

One of the most powerful features of Roboflow Playground is the Compare mode. You can run Gemini 3 Pro side-by-side with other models like SAM3, GPT-5.1, or GPT-4o to see which performs best on your specific data.

Step-by-Step Guide:

- Select a Task: Go to Playground and choose a task, such as "Object Detection".

- Choose Models: In the model selector, pick Gemini 3 Pro. Then, click "Add Model" to select a comparison model, for example, Florence-2.

- Upload an Image: Drag and drop your image (or use a sample).

- Run Inference: Click "Run Models".

Playground will visualize the results side-by-side:

How Can We Take This Beyond Playground?

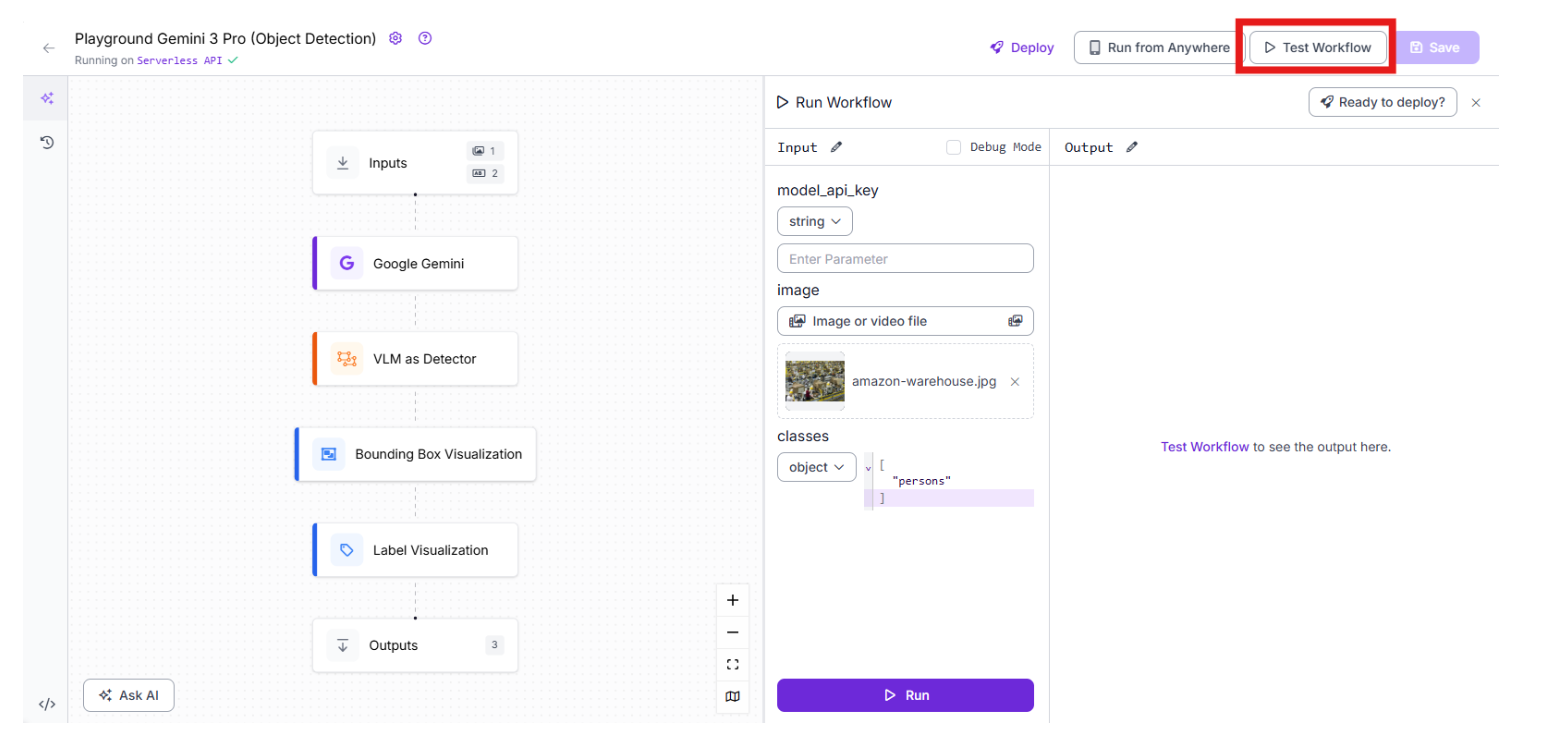

Playground is excellent for one-off tests, but real-world applications require logic, chaining, and integration. This is where Roboflow Workflows comes in. You can take the exact prompt that worked in Playground and drop it into a production-ready pipeline. Conveniently, Playground can generate a workflow for you in a few clicks.

How to set it up:

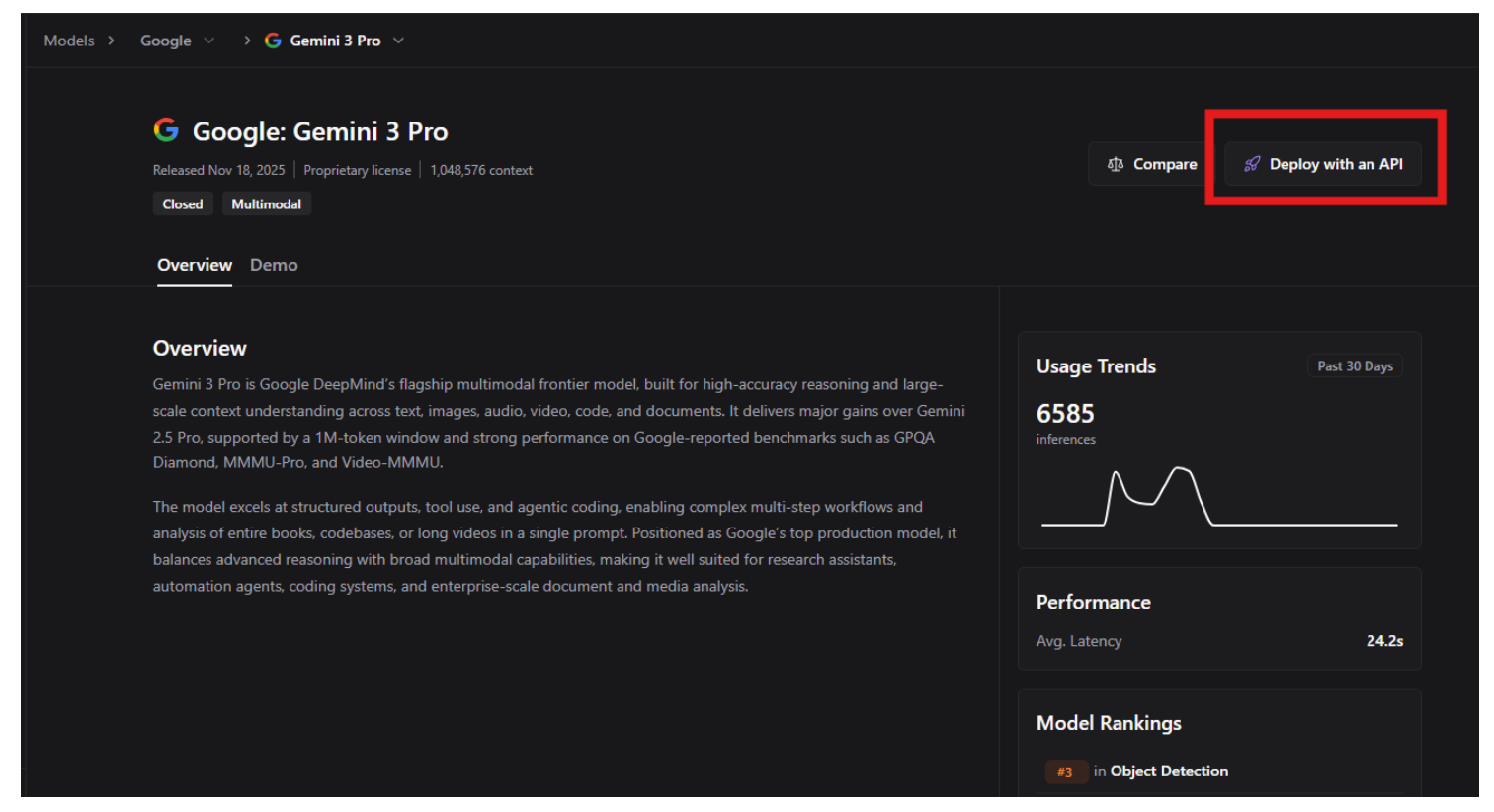

- Return to the Gemini 3 Pro model description page on Playground and click Deploy with API:

- Choose your preferred task type (e.g., Object Detection), choose your workspace, and click Continue.

It’s important to note that a Roboflow account is required to create a workspace, so if you don’t already have one, creating an account should be your first step. Don’t worry; it’s free and only takes a few clicks.

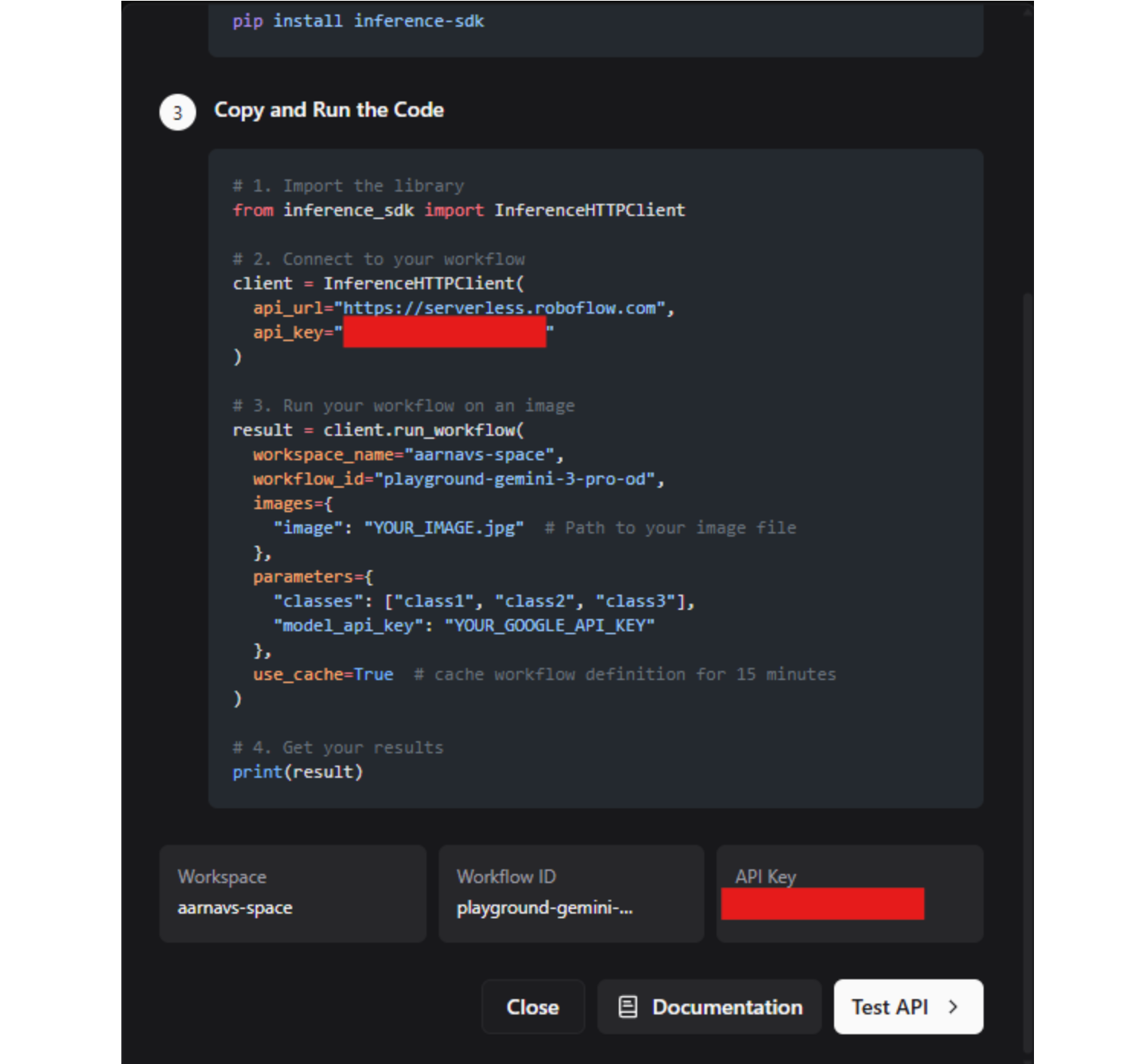

- Follow the instructions provided to implement the model into your own code, or simply click “Test API” to go to your own workflow.

Another important thing to note is that a Google API key is required to use the Gemini 3 Pro model in a workflow or within your own application. If you haven’t created one yet, please follow this tutorial.

- In the workflow editor, make any changes you want, then click “Test Workflow”. Enter your Google API key in the “model_api_key” input, upload an image to analyze, and list the classes you want detected in the format the input expects. Then click Run.
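If you prefer to call the workflow from your own code instead of the web editor, the steps above might translate into something like the following sketch. It assumes the `inference-sdk` Python package and its `InferenceHTTPClient.run_workflow` method. The API URL, the `image` and `classes` input names, and the list format for classes are assumptions, so copy the real values from the snippet that "Deploy with API" generates for your workspace. Only `model_api_key` is taken from the steps above.

```python
def build_workflow_inputs(image_path: str, classes: list[str],
                          google_api_key: str) -> dict:
    """Assemble the image and parameter inputs for one workflow run."""
    if not classes:
        raise ValueError("provide at least one class name")
    return {
        "images": {"image": image_path},      # assumed image input name
        "parameters": {
            "model_api_key": google_api_key,  # input name from the steps above
            "classes": classes,               # assumed input name and format
        },
    }


def run_workflow(workspace: str, workflow_id: str, roboflow_api_key: str,
                 inputs: dict):
    """Send the inputs to a hosted workflow and return its raw result."""
    # Imported lazily so build_workflow_inputs works without the package.
    from inference_sdk import InferenceHTTPClient  # pip install inference-sdk

    client = InferenceHTTPClient(
        api_url="https://serverless.roboflow.com",  # assumed; use your snippet's URL
        api_key=roboflow_api_key,
    )
    return client.run_workflow(
        workspace_name=workspace,
        workflow_id=workflow_id,
        images=inputs["images"],
        parameters=inputs["parameters"],
    )


# Example with placeholder values; replace them before calling run_workflow.
inputs = build_workflow_inputs("forklift.jpg", ["forklift", "pallet"], "YOUR_GOOGLE_API_KEY")
print(inputs["parameters"]["classes"])
```

Keeping input assembly separate from the network call makes it easy to validate class lists and keys before spending an API request.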

Conclusion: Try Gemini 3 Pro

Gemini 3 Pro represents a significant leap forward for multimodal AI, offering reasoning capabilities that bridge the gap between simple recognition and true understanding. Whether you are building autonomous agents, analyzing complex video feeds, or need robust zero-shot detection, it is a tool worth exploring.

You can test Gemini 3 Pro today on Roboflow Playground and see how it stacks up against the competition.

Written by Aarnav Shah

Cite this Post

Use the following entry to cite this post in your research:

Contributing Writer. (Dec 31, 2025). Try Gemini 3 Pro From Google. Roboflow Blog: https://blog.roboflow.com/gemini-3-pro/