On July 29th, 2024, Meta AI released Segment Anything 2 (SAM 2), a new image and video segmentation foundation model. According to Meta, SAM 2 is 6x more accurate than the original SAM model at image segmentation tasks. This article covers the original Segment Anything model release.

Discover the potential of Meta AI’s Segment Anything Model (SAM) in this comprehensive tutorial. We dive into SAM, an efficient and promptable model for image segmentation. Trained on a dataset of over 1 billion masks on 11M licensed and privacy-respecting images, SAM delivers zero-shot performance that is often competitive with or even superior to prior fully supervised results. For more information on how SAM works and the model architecture, read our SAM technical deep dive.

In this written tutorial (and the video below), we will explore how to use SAM to generate masks automatically, create segmentation masks using bounding boxes, and convert object detection datasets into segmentation masks. If you're interested in using SAM to label data for computer vision, Roboflow Annotate uses SAM to power automated polygon labeling in the browser, which you can try for free.

What is Segment Anything?

Segment Anything (SAM) is an image segmentation model developed by Meta AI. This model can identify the precise location of either specific objects in an image or every object in an image. SAM was released in April 2023. SAM is open source, released under an Apache 2.0 license.

Background: Object Detection vs. Segmentation

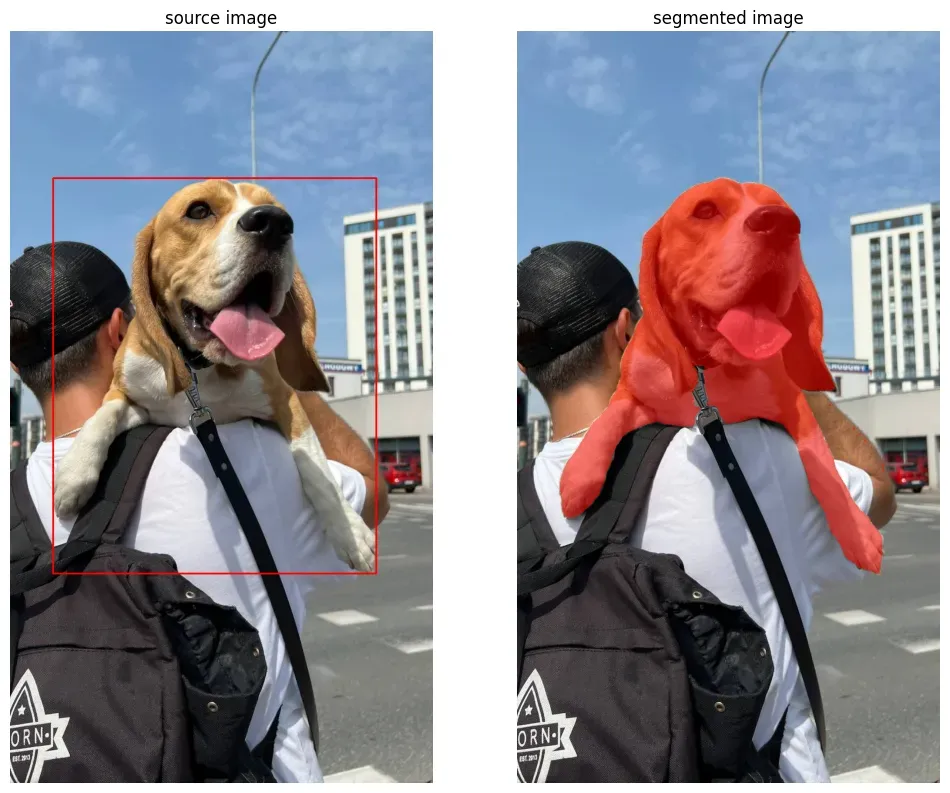

In object detection tasks, objects are represented by bounding boxes, which are like drawing a rectangle around the object. These rectangles give a general idea of the object's location, but they don't show the exact shape of the object. They may also include parts of the background or other objects inside the rectangle, making it difficult to separate objects from their surroundings.

Segmentation masks, on the other hand, are like drawing a detailed outline around the object, following its exact shape. This allows for a more precise understanding of the object's shape, size, and position.
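To make the difference concrete, here is a minimal NumPy sketch that compares the number of pixels in a mask with the area of its tight bounding box. The toy mask and the helper name mask_to_xyxy are made up for illustration and are not part of SAM.

```python
import numpy as np

def mask_to_xyxy(mask: np.ndarray) -> tuple[int, int, int, int]:
    """Return the tight bounding box (x_min, y_min, x_max, y_max) of a boolean mask."""
    ys, xs = np.nonzero(mask)  # row (y) and column (x) indices of True pixels
    return int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())

# Hypothetical 6x6 mask of a triangular object (lower triangle is True).
mask = np.tril(np.ones((6, 6), dtype=bool))

x_min, y_min, x_max, y_max = mask_to_xyxy(mask)
box_area = (x_max - x_min + 1) * (y_max - y_min + 1)  # pixels covered by the box
mask_area = int(mask.sum())                           # pixels covered by the object
background_fraction = 1 - mask_area / box_area        # share of the box that is not object

print(f"box: {box_area} px, mask: {mask_area} px, background in box: {background_fraction:.0%}")
```

In this toy case a large share of the box is background, which is exactly the information a segmentation mask keeps and a bounding box discards.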

To use Segment Anything on a local machine, we'll follow these steps:

- Set up a Python environment

- Load the Segment Anything Model (SAM)

- Generate masks automatically with SAM

- Plot masks onto an image with Supervision

- Generate bounding boxes from the SAM results

Setting up Your Python Environment

To get started, open the Roboflow notebook in Google Colab and ensure you have access to a GPU for faster processing. Next, install the required project dependencies and download the necessary files, including SAM weights.

pip install 'git+https://github.com/facebookresearch/segment-anything.git'

pip install -q roboflow supervision

wget -q 'https://dl.fbaipublicfiles.com/segment_anything/sam_vit_h_4b8939.pth'

Loading the Segment Anything Model

Once your environment is set up, load the SAM model into memory. With multiple modes available for inference, you can use the model to generate masks in various ways. We will explore automated mask generation, generating segmentation masks with bounding boxes, and converting object detection datasets into segmentation masks.

The SAM model can be loaded with 3 different encoders: ViT-B, ViT-L, and ViT-H. ViT-H improves substantially over ViT-B but has only marginal gains over ViT-L. These encoders have different parameter counts, with ViT-B having 91M, ViT-L having 308M, and ViT-H having 636M parameters. This difference in size also influences the speed of inference, so keep that in mind when choosing the encoder for your specific use case.
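Before loading the model, it can help to keep the encoder options in one place. The sketch below is only an illustration: the parameter counts are the ones quoted above, the checkpoint file names are those published in the segment-anything repository, and pick_encoder with its parameter budget is a hypothetical helper that uses model size as a rough proxy for inference cost.

```python
# Encoder options for SAM. Parameter counts (in millions) are taken from the text above.
SAM_ENCODERS = {
    "vit_b": {"params_m": 91, "checkpoint": "sam_vit_b_01ec64.pth"},
    "vit_l": {"params_m": 308, "checkpoint": "sam_vit_l_0b3195.pth"},
    "vit_h": {"params_m": 636, "checkpoint": "sam_vit_h_4b8939.pth"},
}

def pick_encoder(max_params_m: float) -> str:
    """Return the largest encoder whose parameter count fits the budget (hypothetical helper)."""
    candidates = [
        (spec["params_m"], name)
        for name, spec in SAM_ENCODERS.items()
        if spec["params_m"] <= max_params_m
    ]
    if not candidates:
        raise ValueError(f"No SAM encoder has at most {max_params_m}M parameters")
    return max(candidates)[1]  # the largest model that still fits

model_type = pick_encoder(max_params_m=400)
checkpoint_name = SAM_ENCODERS[model_type]["checkpoint"]
print(model_type, checkpoint_name)
```

The returned key can be used as MODEL_TYPE when indexing sam_model_registry, provided the matching checkpoint file has been downloaded.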

import torch

from segment_anything import sam_model_registry

DEVICE = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

MODEL_TYPE = "vit_h"

sam = sam_model_registry[MODEL_TYPE](checkpoint=CHECKPOINT_PATH)

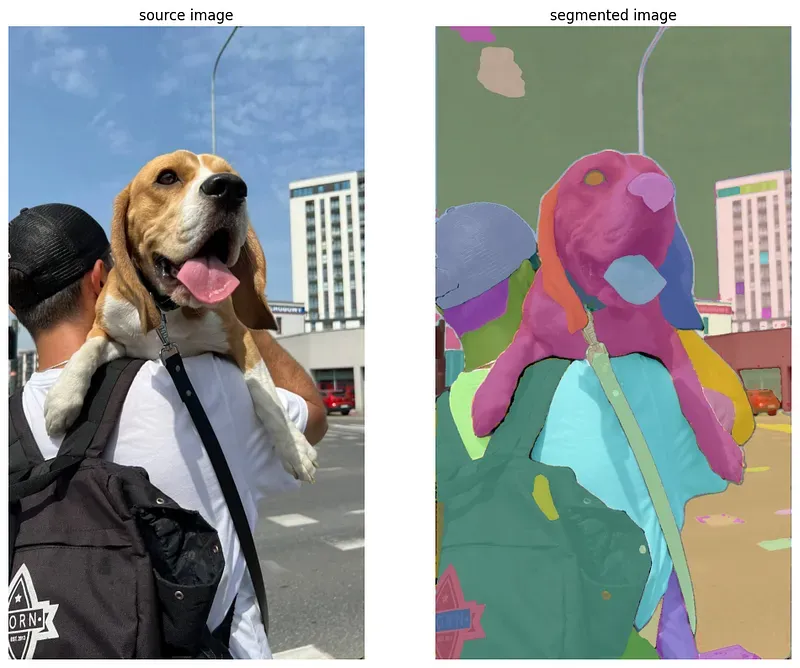

sam.to(device=DEVICE)Automated Mask (Instance Segmentation) Generation with SAM

To generate masks automatically, use the SamAutomaticMaskGenerator. This utility generates a list of dictionaries describing individual segmentations. Each dict in the result list has the following format:

- segmentation - [np.ndarray] - the mask as a bool array of shape (H, W), where H and W are the height and width of the original image, respectively

- area - [int] - the area of the mask in pixels

- bbox - [List[int]] - the bounding box of the mask in xywh format

- predicted_iou - [float] - the model's own prediction for the quality of the mask

- point_coords - [List[List[float]]] - the sampled input point that generated this mask

- stability_score - [float] - an additional measure of mask quality

- crop_box - [List[int]] - the crop of the image used to generate this mask in xywh format
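Because the result is a plain list of dictionaries, it can be post-processed with ordinary Python. The following sketch filters out small or low-quality masks and sorts the rest by area. The filter_masks helper, the thresholds, and the synthetic entries are assumptions for illustration, not part of the SAM API.

```python
import numpy as np

def filter_masks(result, min_area=100, min_iou=0.9):
    """Keep masks above the area and predicted_iou thresholds, largest first (hypothetical helper)."""
    kept = [m for m in result if m["area"] >= min_area and m["predicted_iou"] >= min_iou]
    return sorted(kept, key=lambda m: m["area"], reverse=True)

def fake_entry(area, iou):
    """Build a synthetic entry with the same keys the mask generator returns."""
    return {
        "segmentation": np.zeros((4, 4), dtype=bool),
        "area": area,
        "bbox": [0, 0, 1, 1],
        "predicted_iou": iou,
        "point_coords": [[0.0, 0.0]],
        "stability_score": 0.95,
        "crop_box": [0, 0, 4, 4],
    }

# Stand-in for the list returned by the mask generator.
result = [fake_entry(50, 0.95), fake_entry(400, 0.97), fake_entry(900, 0.60), fake_entry(250, 0.92)]

filtered = filter_masks(result)
areas = [m["area"] for m in filtered]
print(areas)
```

Suitable thresholds depend on the image resolution and on how small the objects of interest are, so treat the defaults here as placeholders.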

To run the code below you will need images. You can use your own, programmatically pull them in from Roboflow, or download one of the over 200k datasets available on Roboflow Universe.

import cv2

from segment_anything import SamAutomaticMaskGenerator

mask_generator = SamAutomaticMaskGenerator(sam)

image_bgr = cv2.imread(IMAGE_PATH)  # IMAGE_PATH is the path to your input image; OpenCV loads it as BGR

image_rgb = cv2.cvtColor(image_bgr, cv2.COLOR_BGR2RGB)  # convert to RGB before passing the image to SAM

result = mask_generator.generate(image_rgb)

The supervision package (starting from version 0.5.0) provides native support for SAM, making it easier to annotate segmentations on an image.

import supervision as sv

mask_annotator = sv.MaskAnnotator(color_map="index")  # in newer supervision releases this argument is color_lookup=sv.ColorLookup.INDEX

detections = sv.Detections.from_sam(result)

annotated_image = mask_annotator.annotate(image_bgr, detections)

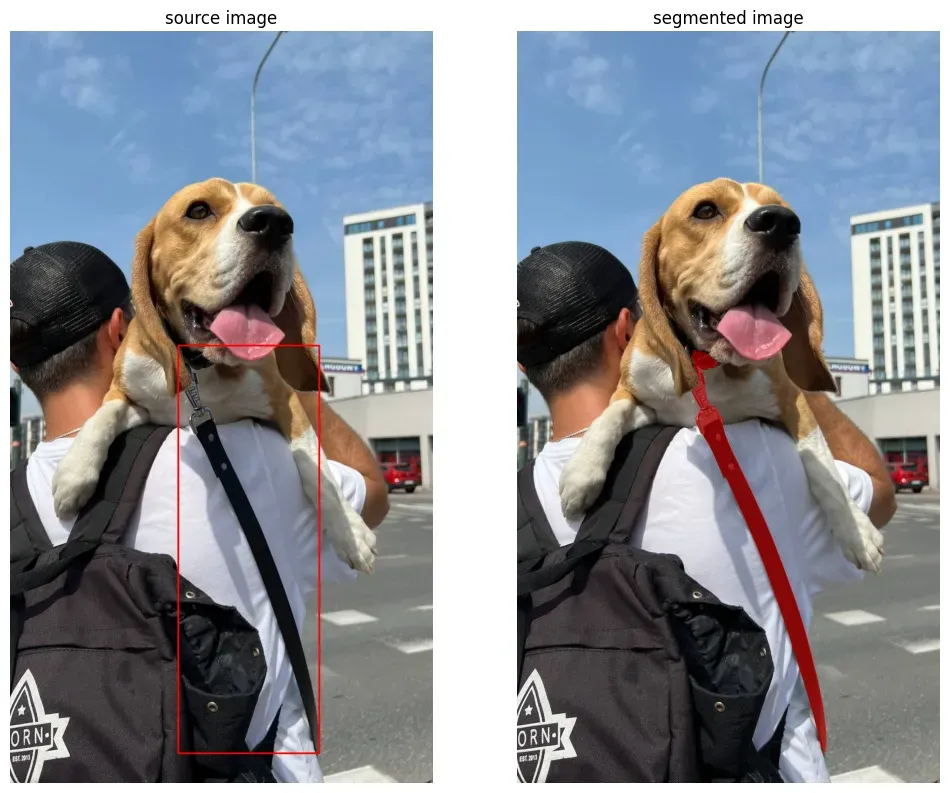
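For intuition about what plotting a mask onto an image involves, here is a minimal alpha-blending sketch in plain NumPy. It is not supervision's implementation; overlay_mask, the color, and the toy image are assumptions chosen for illustration.

```python
import numpy as np

def overlay_mask(image, mask, color=(0, 200, 0), opacity=0.5):
    """Blend `color` into `image` wherever `mask` is True and return a new uint8 array."""
    out = image.astype(np.float32)  # astype returns a copy, so the input image is untouched
    out[mask] = (1 - opacity) * out[mask] + opacity * np.array(color, dtype=np.float32)
    return out.round().astype(np.uint8)

# Toy 4x4 gray image with a 2x2 mask in the middle (hypothetical data).
image = np.full((4, 4, 3), 100, dtype=np.uint8)
mask = np.zeros((4, 4), dtype=bool)
mask[1:3, 1:3] = True

blended = overlay_mask(image, mask)
print(blended[0, 0], blended[1, 1])
```

Pixels outside the mask keep their original value, while pixels inside it move halfway toward the overlay color at the default opacity.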

Generate Segmentation Mask with Bounding Box

Now that you know how to generate a mask for all objects in an image, let’s see how you can use a bounding box to focus SAM on a specific portion of your image.

To extract masks related to specific areas of an image, import the SamPredictor and pass your bounding box through the mask predictor’s predict method. Note that the mask predictor has a different output format than the automated mask generator. The bounding box must be passed as a NumPy array in [x_min, y_min, x_max, y_max] format.

import cv2

import numpy as np

from segment_anything import SamPredictor

mask_predictor = SamPredictor(sam)

image_bgr = cv2.imread(IMAGE_PATH)

image_rgb = cv2.cvtColor(image_bgr, cv2.COLOR_BGR2RGB)

mask_predictor.set_image(image_rgb)

box = np.array([70, 247, 626, 926])  # [x_min, y_min, x_max, y_max]

masks, scores, logits = mask_predictor.predict(box=box, multimask_output=True)

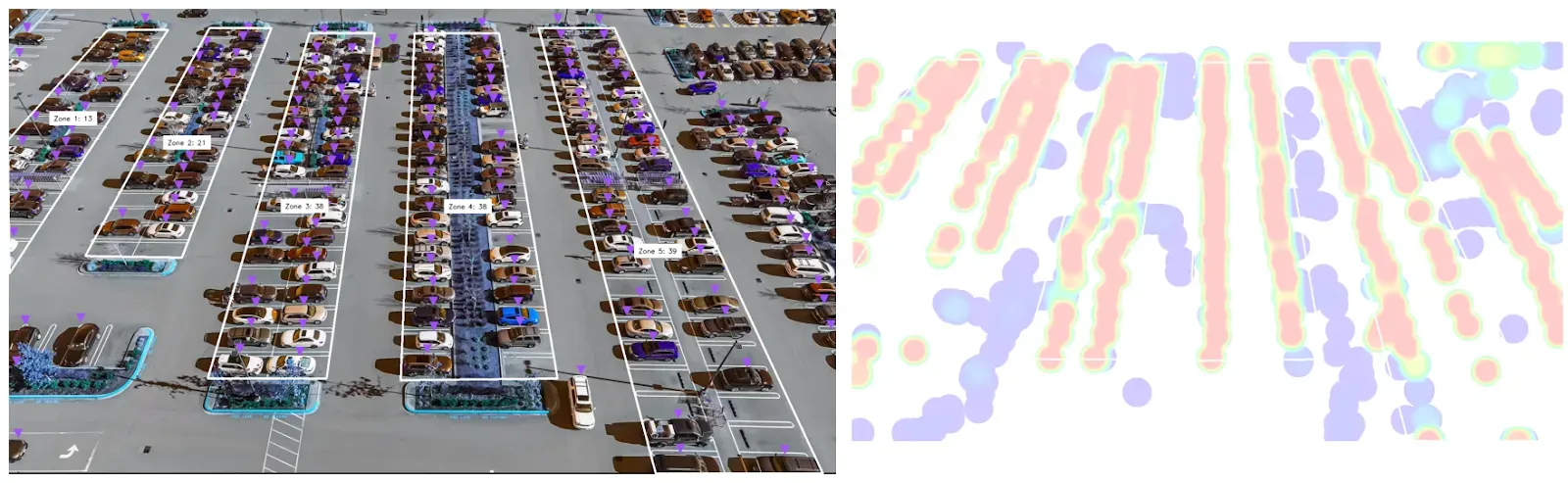

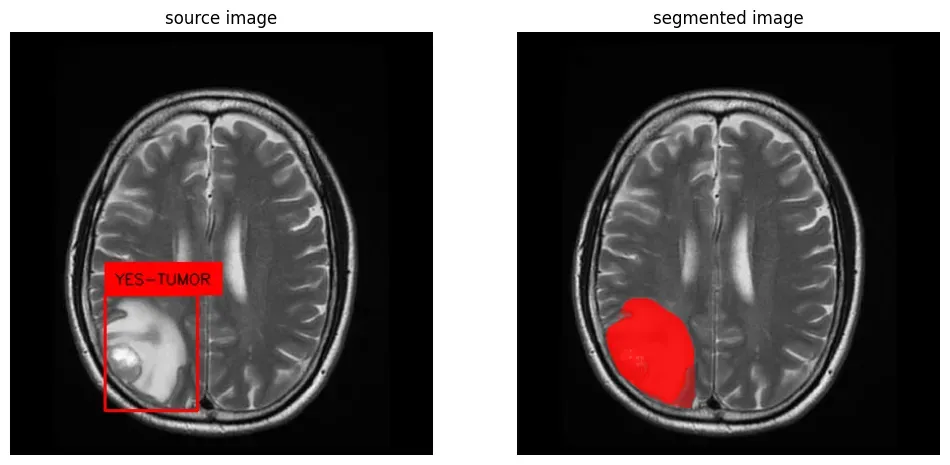
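With multimask_output=True, the predictor returns several candidate masks together with one quality score per mask. A common follow-up step is to keep the highest-scoring candidate. The sketch below uses synthetic arrays with the same layout (masks stacked along the first axis); best_mask is a hypothetical helper, not part of the SAM API.

```python
import numpy as np

def best_mask(masks: np.ndarray, scores: np.ndarray):
    """Return the candidate mask with the highest score, plus that score (hypothetical helper)."""
    idx = int(np.argmax(scores))
    return masks[idx], float(scores[idx])

# Synthetic stand-ins for the predictor output: 3 candidate masks of size 4x4.
masks = np.zeros((3, 4, 4), dtype=bool)
masks[1, :2, :2] = True
scores = np.array([0.71, 0.93, 0.88])

mask, score = best_mask(masks, scores)
print(mask.shape, int(mask.sum()), score)
```

If a single mask is all you need, passing multimask_output=False to predict is an alternative that returns one mask directly.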

Convert Object Detection Datasets into Segmentation Masks

To convert bounding boxes in your object detection dataset into segmentation masks, download the dataset in COCO format and load the annotations into memory.

If you don't have a dataset in this format, Roboflow Universe is the ideal place to find and download one. Now you can use the SAM model to generate segmentation masks for each bounding box. Head over to the Google Colab where you will find the code to convert from bounding box to segmentation.
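As a rough sketch of the first half of that conversion: COCO stores each box as [x, y, width, height], while SAM's predictor expects [x_min, y_min, x_max, y_max], so the boxes need to be converted before prompting. The coco_boxes_xyxy helper and the tiny in-memory annotation dictionary below are assumptions for illustration. In practice the dictionary would come from loading your dataset's annotation JSON file.

```python
import numpy as np

def coco_boxes_xyxy(coco: dict) -> dict:
    """Group COCO boxes by image_id, converted from [x, y, w, h] to [x_min, y_min, x_max, y_max]."""
    boxes = {}
    for ann in coco["annotations"]:
        x, y, w, h = ann["bbox"]
        boxes.setdefault(ann["image_id"], []).append(np.array([x, y, x + w, y + h]))
    return boxes

# Minimal stand-in for a parsed COCO annotation file (hypothetical values).
coco = {
    "images": [{"id": 1, "file_name": "example.jpg", "width": 1024, "height": 1024}],
    "annotations": [
        {"id": 1, "image_id": 1, "category_id": 1, "bbox": [70, 247, 556, 679]},
    ],
}

boxes_by_image = coco_boxes_xyxy(coco)
print(boxes_by_image[1][0])
```

Each converted box can then be passed as the box argument of the mask predictor's predict method, after calling set_image on the corresponding image.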

Deploy Segment Anything at Scale

You can run Segment Anything (SAM) on your own hardware, at scale, using Roboflow Inference. Roboflow Inference is an inference server through which you can run fine-tuned models (e.g. YOLOv5 and YOLOv8) as well as foundation models like SAM and CLIP.

Learn how to get started with the Inference Segment Anything quickstart.

Conclusion

The Segment Anything Model offers a powerful and versatile solution for object segmentation in images, enabling you to enhance your datasets with segmentation masks.

With its fast processing speed and various modes of inference, SAM is a valuable tool for computer vision applications. To experience labeling your data with SAM, you can use Roboflow Annotate, which offers Smart Polygon, an automated polygon annotation tool powered by SAM.

You might also be interested in using the newer Segment Anything 3, released on November 19th, 2025 - a zero-shot image segmentation model that detects, segments, and tracks objects in images and videos based on concept prompts.


Cite this Post

Use the following entry to cite this post in your research:

Piotr Skalski. (Jan 22, 2024). How to Use the Segment Anything Model (SAM). Roboflow Blog: https://blog.roboflow.com/how-to-use-segment-anything-model-sam/