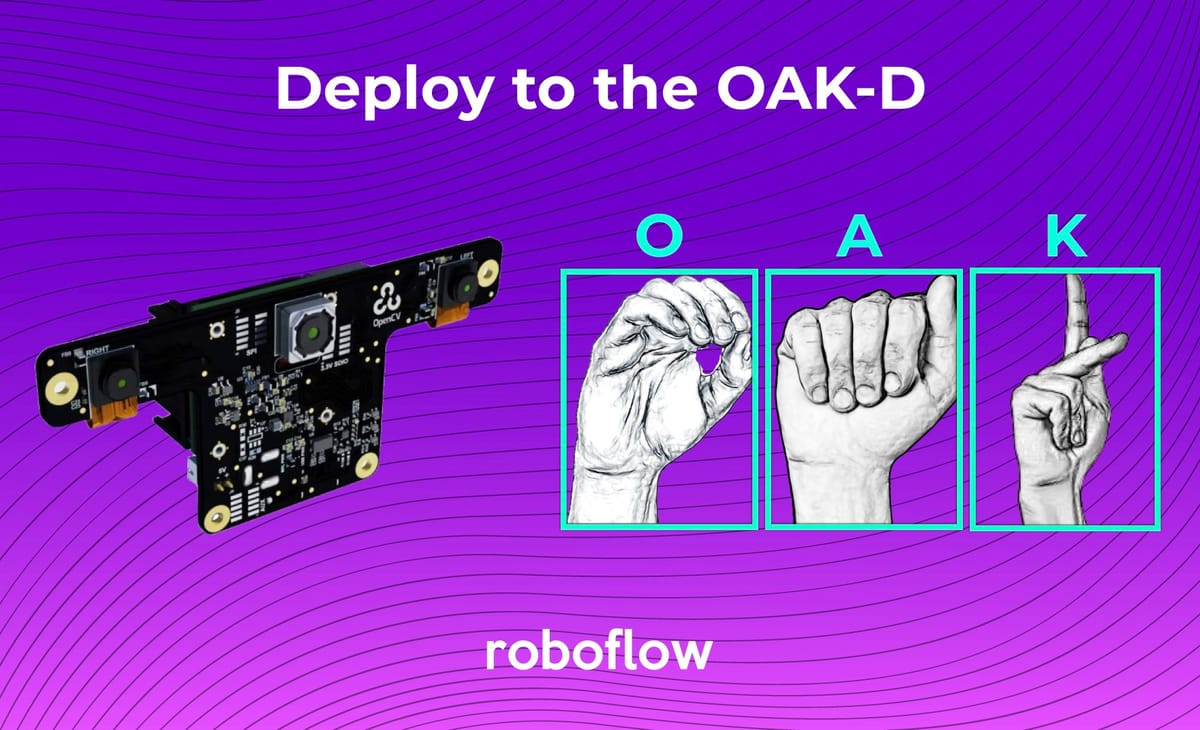

We are pretty excited about the Luxonis OpenCV AI Kit (OAK-D) device at Roboflow, and we're not alone. Our excitement has naturally led us to create another tutorial on how to train a custom object detection model and deploy it to the edge, with depth, using Roboflow and DepthAI.

To illustrate the wide range of applications this guide opens up, we tackle the task of real-time identification of American Sign Language.

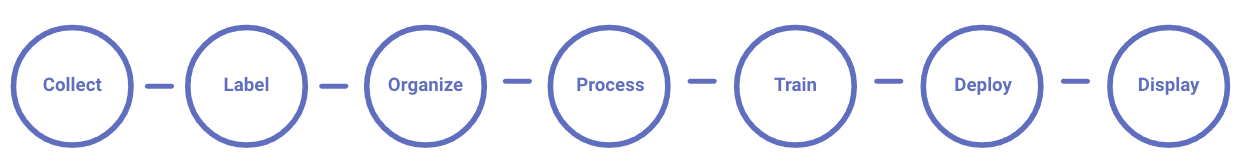

Tutorial Outline

- Gather and Label Images

- Install MobileNetV2 Training Environment in the TensorFlow OD API

- Download Custom Training Data From Roboflow

- Train Custom MobileNetV2 object detection model

- Run Test Inference to Check the Model's Functionality

- Convert Custom MobileNetV2 TensorFlow Model to OpenVino and DepthAI

- Run Our Custom Model on the edge with depth on the Luxonis OAK-D

Resources, Shoutouts, Related Content

Data

- Custom American Sign Language Training Data - Shoutout to David Lee

- Computer Vision for American Sign Language Blog

Training

- Colab Notebook to Deploy Custom Model to OAK-D - This is the notebook we will be referencing in the tutorial

- Luxonis MobileNetV2 Deploy Notebook - Our notebook is heavily inspired by this notebook by Rares @ Luxonis

- Training a Custom TensorFlow Object Detection Model - Roboflow Blog

- Training Custom TensorFlow2 Object Detection Model - Roboflow Blog

Model Conversion

- Converting MobileNetV2 to OpenVino - Intel OpenVino Docs

Deployment

End to End

YouTube Discussion

Gather and Label Images

To get started with this guide, you will need labeled object detection images. Object detection labeling involves drawing a box around the object you want to detect and defining a class label for that object. The labeled images will provide supervision for our model to learn from.
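As a rough illustration of what a single label carries, here is a minimal sketch in Python. The `BoxLabel` class, its field names, and the pixel-coordinate convention are assumptions made for this example; they are not the storage format used by CVAT or Roboflow.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoxLabel:
    """One labeled object: a class name plus a box in pixel coordinates."""

    class_name: str
    x_min: int
    y_min: int
    x_max: int
    y_max: int

    def normalized(self, image_width: int, image_height: int) -> tuple:
        """Return (x_min, y_min, x_max, y_max) scaled to the 0-1 range."""
        return (
            self.x_min / image_width,
            self.y_min / image_height,
            self.x_max / image_width,
            self.y_max / image_height,
        )


# A hypothetical label for the sign "A" in a 640x480 image.
label = BoxLabel("A", x_min=160, y_min=120, x_max=320, y_max=360)
print(label.normalized(640, 480))
```

Every image in the dataset carries zero or more labels like this one, and the set of distinct class names becomes the class list the model learns.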

If you would like to follow along with the tutorial, you can skip this step and fork the public American Sign Language Training Data.

To label your own dataset, our labeling tools of choice are currently CVAT and, more recently, Roboflow.

This guide will also show you how to upload images to Roboflow after labeling.

Install MobileNetV2 Training Environment

Once you have your dataset labeled, it is time to jump into training!

Open the Colab Notebook to Deploy Custom Model to OAK-D. We recommend having it up alongside this blog post. You will be able to run the cells in the notebook sequentially, changing only one line of code for your dataset import.

To start off in this notebook, make a copy in your Drive and make sure the GPU runtime is enabled. Then, we will install dependencies for the TF OD library in the first few cells.

Download Custom Training Data From Roboflow

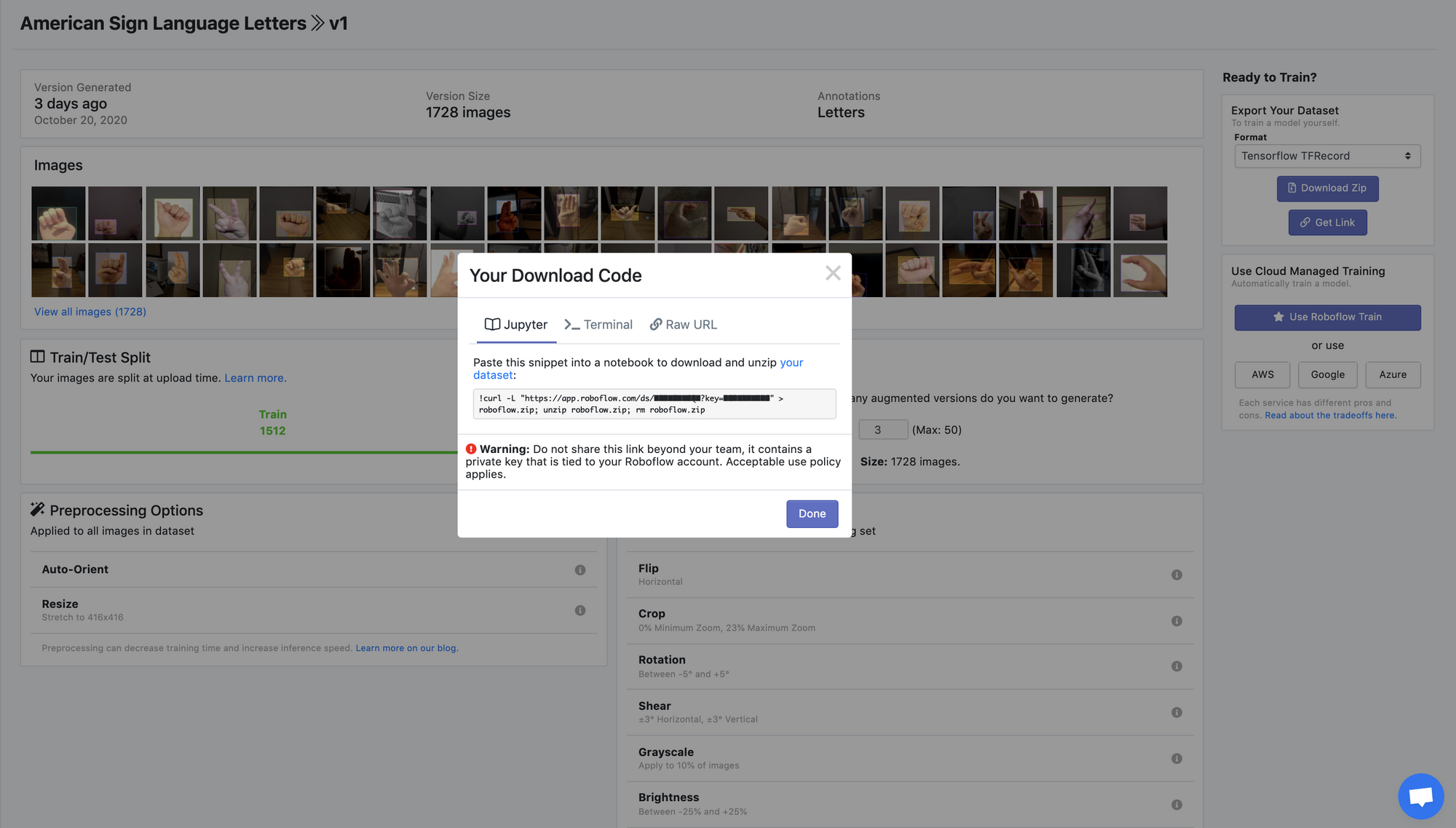

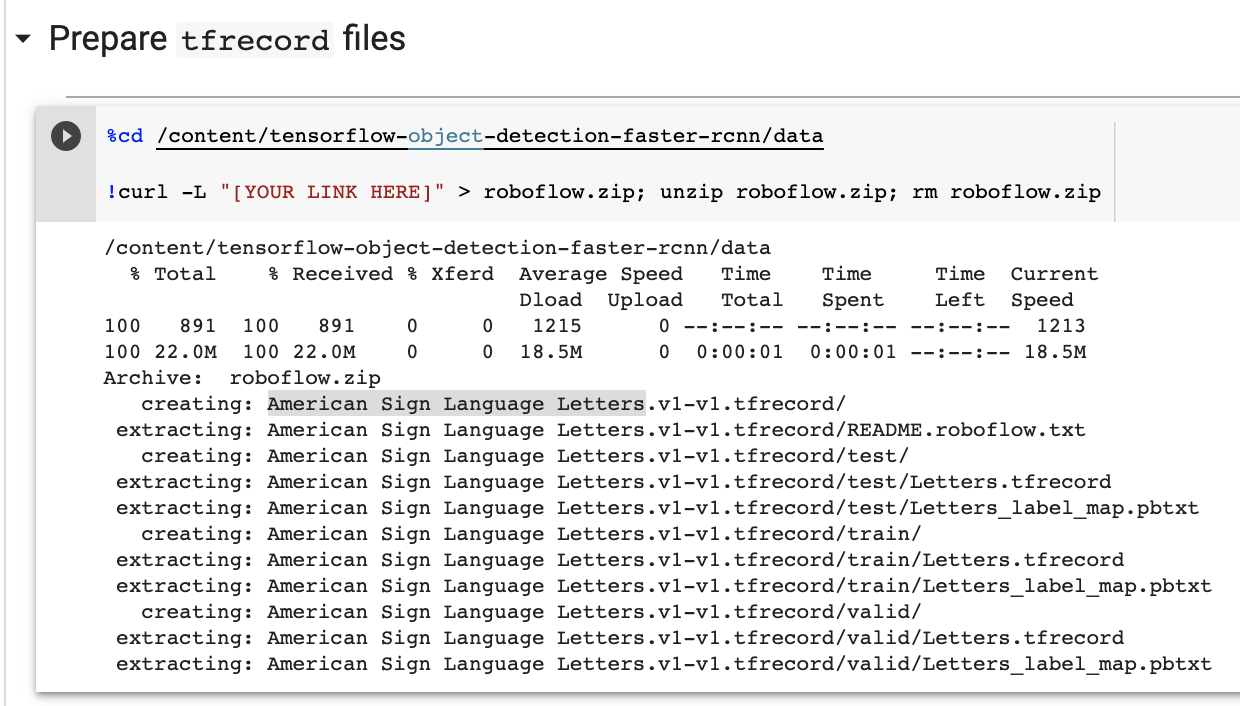

Whether you choose to fork the public sign language detection dataset, or bring your own, you will want to use Roboflow to get your data in the proper format for training, namely, TFRecord.

Choose data preprocessing and augmentation settings and hit Generate. Then hit Download and choose TensorFlow TFRecord.

Then we'll paste that download link into the Colab notebook to bring our data in.
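Once the data is in the notebook, it can be handy to confirm which classes came along and in what order. The sketch below assumes the export includes a TensorFlow label map (`.pbtxt`) file next to the TFRecords, which the TensorFlow OD API expects; the helper name `read_label_map` and the sample text are made up for illustration, and the notebook itself may read the class list differently.

```python
import re


def read_label_map(pbtxt_text: str) -> list:
    """Return class names from a label map, ordered by their integer id."""
    items = re.findall(r"item\s*\{(.*?)\}", pbtxt_text, flags=re.DOTALL)
    pairs = []
    for body in items:
        # \b keeps "display_name:" from matching as "name:".
        name = re.search(r'\bname:\s*"([^"]+)"', body)
        class_id = re.search(r"\bid:\s*(\d+)", body)
        if name and class_id:
            pairs.append((int(class_id.group(1)), name.group(1)))
    return [name for _, name in sorted(pairs)]


# Made-up label map text with two classes listed out of order.
SAMPLE_LABEL_MAP = """
item {
  name: "B"
  id: 2
  display_name: "B"
}
item {
  name: "A"
  id: 1
  display_name: "A"
}
"""

print(read_label_map(SAMPLE_LABEL_MAP))
```

To use it on a real export, read the `.pbtxt` file into a string and pass that string to `read_label_map`.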

Train Custom MobileNetV2 Object Detection Model

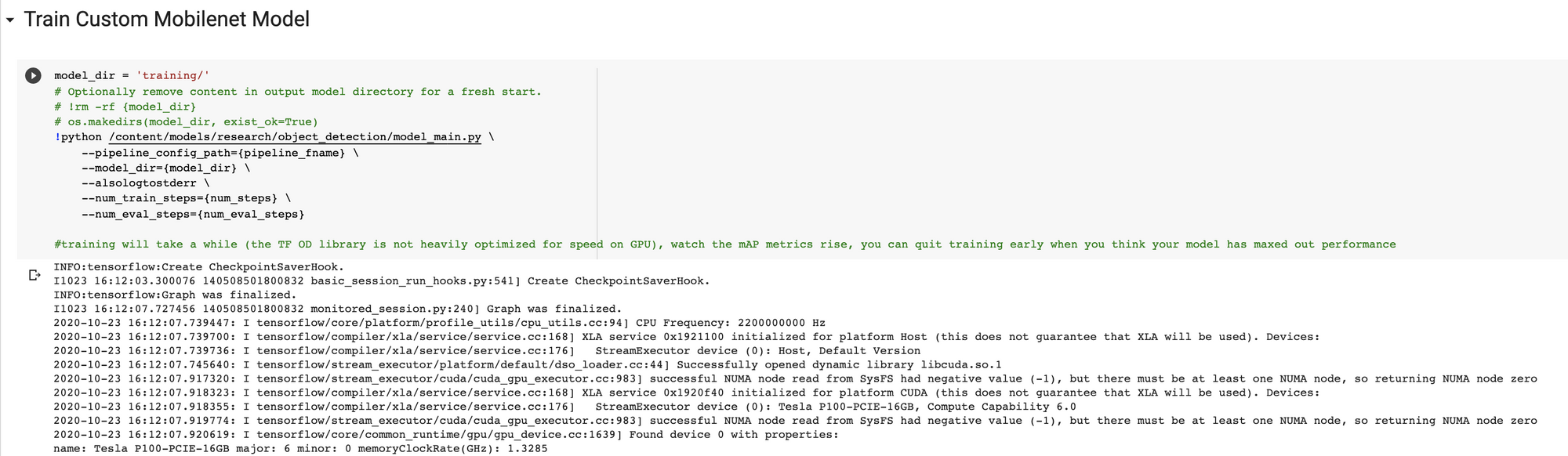

After we have our data in the notebook, we can proceed with training our custom model.

We will first download the pretrained MobileNetV2 weights to start from. Then we'll configure a few parameters in our training configuration file. You can edit these configurations based on your dataset's needs. Most commonly, you may want to adjust the number of steps your model trains for. More steps take longer but typically yield a more performant model.
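One way to make such an edit programmatically is a small text substitution on the configuration file. This is a minimal sketch, assuming the configuration is a TensorFlow OD API `pipeline.config` text file with integer fields such as `num_steps`; the helper `set_config_value` and the sample excerpt are hypothetical, and the notebook may perform the edit differently.

```python
import re


def set_config_value(config_text: str, key: str, value: int) -> str:
    """Set every `key: <integer>` entry in a pipeline config string."""
    pattern = rf"\b{re.escape(key)}:\s*\d+"
    new_text, count = re.subn(pattern, f"{key}: {value}", config_text)
    if count == 0:
        raise KeyError(f"{key!r} not found in config")
    return new_text


# A trimmed, made-up excerpt; a real pipeline.config has many more fields.
SAMPLE_CONFIG = """
model {
  ssd {
    num_classes: 90
  }
}
train_config {
  batch_size: 24
  num_steps: 200000
}
"""

# Illustrative values only: fewer steps, and one class per letter.
updated = set_config_value(SAMPLE_CONFIG, "num_steps", 10000)
updated = set_config_value(updated, "num_classes", 26)
print(updated)
```

Raising an error when the key is missing guards against silently training with a default you meant to override.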

Once the training configuration is defined, we can go ahead and kick off training.

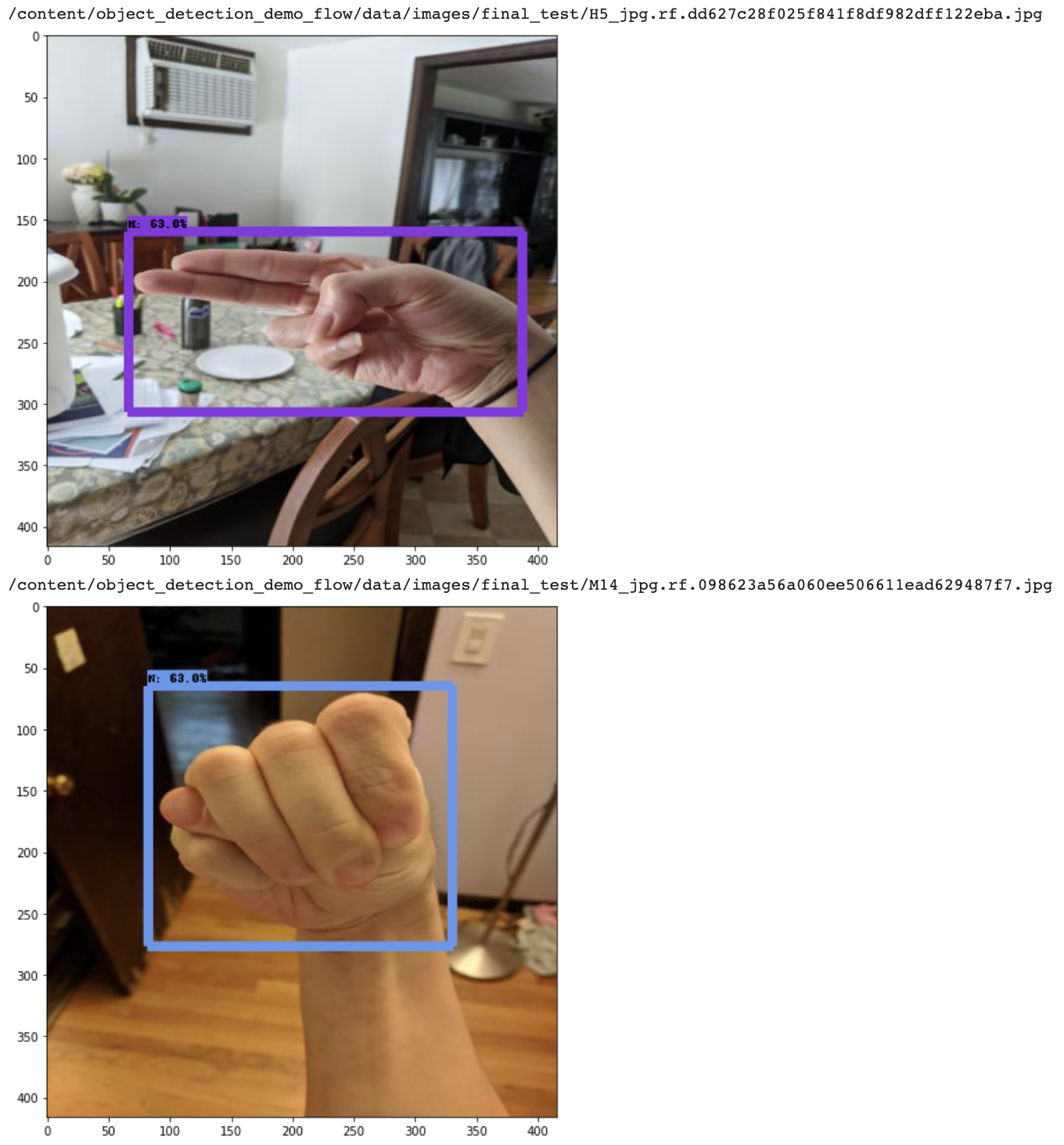

Run Test Inference to Check the Model's Functionality

Before moving on to model conversion, it is wise to check our model's performance on test images. These are images that the model has never seen before.
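When eyeballing test results, it helps to keep only the confident predictions. The sketch below assumes the detector returns parallel lists of boxes, scores, and class ids per image, as TensorFlow OD API models do (`detection_boxes`, `detection_scores`, `detection_classes`); the helper `keep_confident`, the 0.5 threshold, and the sample values are illustrative assumptions.

```python
def keep_confident(boxes, scores, class_ids, min_score=0.5):
    """Pair up parallel detection lists and drop low-confidence entries."""
    detections = []
    for box, score, class_id in zip(boxes, scores, class_ids):
        if score >= min_score:
            detections.append(
                {"box": tuple(box), "score": float(score), "class_id": int(class_id)}
            )
    return detections


# Made-up values shaped like one image's output; boxes are
# [ymin, xmin, ymax, xmax] in normalized coordinates.
boxes = [[0.10, 0.20, 0.50, 0.60], [0.30, 0.30, 0.40, 0.40]]
scores = [0.91, 0.12]
class_ids = [3.0, 7.0]

for det in keep_confident(boxes, scores, class_ids):
    print(det)
```

Lowering `min_score` shows more candidate boxes, which is useful for spotting classes the model is unsure about.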

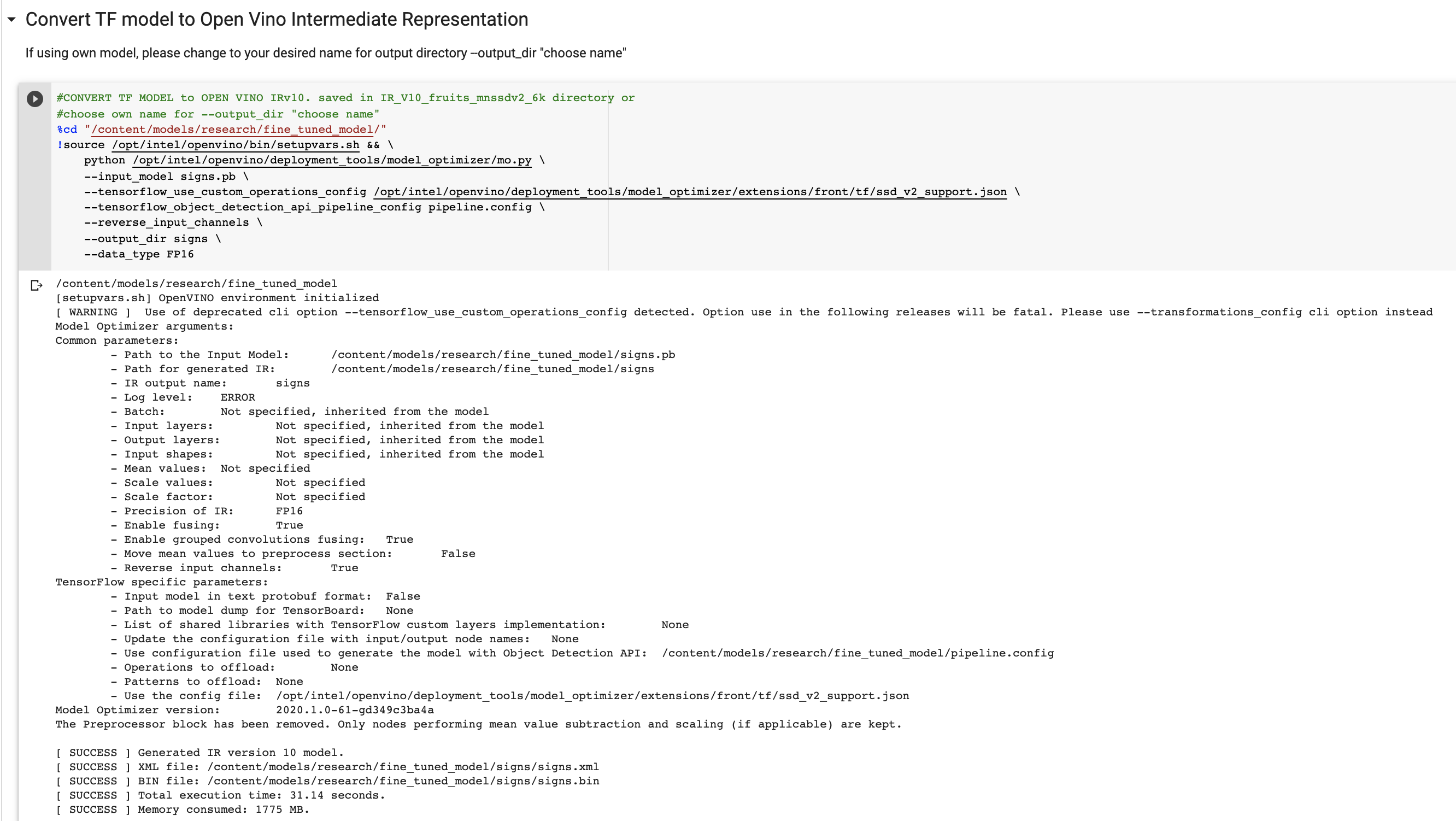

Convert Custom MobileNetV2 TensorFlow Model to OpenVino and DepthAI

Once you are satisfied with your model's performance metrics and test inference capabilities, it is time to take it live!

Before your model will be usable on the OAK-D Device, you will first need to convert it to a format that is compatible with the DepthAI framework.

The first step is to install OpenVino (Intel's edge AI framework) and convert your model into an OpenVino Intermediate Representation.
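For orientation, the conversion is a single call to OpenVINO's Model Optimizer script (`mo.py`). The sketch below only assembles and prints such a command without running it. The flags shown are from OpenVINO releases of roughly this era and have changed in later versions (older releases spell `--transformations_config` differently), and every file path is a placeholder, so treat the notebook's own cell as the reference.

```python
import shlex


def build_mo_command(frozen_graph, pipeline_config, transformations_config, output_dir):
    """Assemble a Model Optimizer call for an SSD model from the TF OD API."""
    return [
        "python3", "mo.py",
        "--input_model", frozen_graph,
        "--tensorflow_object_detection_api_pipeline_config", pipeline_config,
        "--transformations_config", transformations_config,
        "--reverse_input_channels",   # the model was trained on RGB input
        "--data_type", "FP16",        # half precision for the OAK device
        "--output_dir", output_dir,
    ]


# Placeholder paths; substitute the ones from your own training run.
command = build_mo_command(
    frozen_graph="fine_tuned_model/frozen_inference_graph.pb",
    pipeline_config="fine_tuned_model/pipeline.config",
    transformations_config="ssd_v2_support.json",
    output_dir="openvino_ir",
)
print(shlex.join(command))
```

A successful run writes an `.xml` and a `.bin` file, which together make up the Intermediate Representation.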

From the OpenVino IR, we then send the model up to DepthAI's API to convert it to a .blob.

Download the .blob and put it somewhere accessible to the machine running your OAK device (AWS S3, USB stick, etc.). Note: you will need a Linux-based environment to host the OAK device.

Deploy Our Custom Model to the Luxonis OAK-D

To deploy our custom model on the Luxonis OAK-D, we'll first need to clone the DepthAI repository and install the necessary requirements. Here is the documentation for setting up the DepthAI repo.

Then, you can plug in your OAK device and run the following command to check that your installation worked:

python3 depthai_demo.py -dd -cnn mobilenet-ssd

This runs the base mobilenet-ssd model that has been trained on the COCO dataset. To run our model, we'll reuse the infrastructure around this base model with our own twist.

Within the DepthAI repo, you will see a folder called resources/nn/mobilenet-ssd. To bring in our own model, we'll copy that folder to resources/nn/[your_model] and rename the three files to use [your_model] in place of mobilenet-ssd.

Then we copy over our custom model's weights in place of what once was mobilenet-ssd.blob. Then we edit the two JSON files to replace the default class names with our own class list. If you need to double-check the order of your custom class list, you can check the printout in the Colab notebook from when we imported our data. After rewriting these files, we are ready to launch our custom model!
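The copy-and-rename step can be scripted. This is a minimal sketch using only the Python standard library; the helper `clone_model_folder`, the model name `asl`, and the JSON file names in the demo are placeholders, and the demo runs in a temporary directory rather than a real DepthAI checkout.

```python
import shutil
import tempfile
from pathlib import Path


def clone_model_folder(nn_dir: Path, base_name: str, new_name: str) -> Path:
    """Copy nn_dir/base_name to nn_dir/new_name and rename the files inside."""
    src = nn_dir / base_name
    dst = nn_dir / new_name
    shutil.copytree(src, dst)
    for path in list(dst.iterdir()):
        if path.is_file() and path.name.startswith(base_name):
            # Swap the base model's prefix for the new model's name.
            suffix = path.name[len(base_name):]
            path.rename(path.with_name(new_name + suffix))
    return dst


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        nn_dir = Path(tmp) / "resources" / "nn"
        base = nn_dir / "mobilenet-ssd"
        base.mkdir(parents=True)
        # Placeholder file names standing in for the folder's three files.
        for name in ("mobilenet-ssd.blob", "mobilenet-ssd.json", "mobilenet-ssd_depth.json"):
            (base / name).write_text("placeholder")
        new_dir = clone_model_folder(nn_dir, "mobilenet-ssd", "asl")
        print(sorted(p.name for p in new_dir.iterdir()))
```

The original folder is left untouched, so the base model keeps working for comparison.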
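The class-list edit can also be done in a few lines of Python rather than by hand. The sketch below assumes the label list sits under a `mappings` then `labels` key in each JSON file; that layout is an assumption, so check it against the files in your copy of the repo. The helper `replace_labels` and the sample dictionary are hypothetical.

```python
import copy
import json


def replace_labels(config: dict, class_names: list) -> dict:
    """Return a copy of a model config with its label list swapped out."""
    updated = copy.deepcopy(config)
    # Assumed layout: {"mappings": {"labels": [...]}}.
    updated.setdefault("mappings", {})["labels"] = list(class_names)
    return updated


# Hypothetical stand-in for one of the JSON files; only the labels matter here.
original = {"mappings": {"labels": ["background", "aeroplane", "bicycle"]}}
custom = replace_labels(original, ["A", "B", "C"])
print(json.dumps(custom, indent=2))
```

For a real file, load it with `json.load`, pass the result through `replace_labels` with your class names in training order, and write it back with `json.dump`.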

python3 depthai_demo.py -dd -cnn [your_model]

You will see the video stream with bounding boxes drawn around the objects you trained the model to detect, along with a depth measurement of how far each object is from the camera.

Deploying with roboflowoak

Alternatively, you can deploy models trained with Roboflow Train to OAK devices with our Python package (roboflowoak): Step-by-Step Deploy Guide. DepthAI and OpenVINO are required on your host device for the package to work.

- roboflowoak (PyPI)

Conclusion

Congratulations! Through this tutorial, you have learned how to span the long distance from collecting and labeling images to running real-time inference on device with a custom-trained model.

We showed how to tackle the problem of identifying American Sign Language in this blog as a demonstration of the wide scope of custom tasks you can apply in your own domain with your dataset.

Good luck and as always, happy training.

Cite this Post

Use the following entry to cite this post in your research:

Jacob Solawetz. (Oct 26, 2020). Luxonis OAK-D - Deploy a Custom Object Detection Model with Depth. Roboflow Blog: https://blog.roboflow.com/luxonis-oak-d-custom-model/