In this blog post, we will explore how you can improve your object detection model's performance by converting bounding box annotations to polygon annotations. We will also discuss applying augmentations to polygon-annotated data and using pretrained weights to boost performance further. By the end of this post, you will have practical tools for significantly improving the accuracy of your object detection models.

Additionally, you'll have access to the accompanying code so you can run the comparisons and explore the results yourself. The code gives you a hands-on way to replicate the experiments and analyze how annotation type, pretrained weight initialization, and augmentations affect a model's performance.

Bounding Box Annotation (on the left), Polygon Annotation (on the right)

Why Compare Bounding Boxes to Polygons?

The accuracy and performance of object detection models depend on the quality of the annotations used during training. Bounding box annotations have long been favored for their simplicity and ease of application, but this convenience comes with a tradeoff: a bounding box encloses the object together with the background around it. That extra space can result in less precise localization and can hinder the model's performance, especially when objects have irregular or complex shapes.
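To make the tradeoff concrete, the sketch below measures how much of a bounding box is background for a given polygon outline. It uses the shoelace formula for polygon area; the L-shaped outline is a hypothetical example, not an object from the fire hydrant dataset.

```python
def polygon_area(points):
    """Area of a simple polygon via the shoelace formula.

    points: list of (x, y) vertices in order (clockwise or counter-clockwise).
    """
    total = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the polygon
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def background_fraction(points):
    """Fraction of the axis-aligned bounding box that lies outside the polygon."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    box_area = (max(xs) - min(xs)) * (max(ys) - min(ys))
    return 1.0 - polygon_area(points) / box_area


# Hypothetical L-shaped object outline (pixel coordinates).
l_shape = [(0, 0), (4, 0), (4, 1), (1, 1), (1, 4), (0, 4)]
print(polygon_area(l_shape))         # 7.0
print(background_fraction(l_shape))  # 0.5625
```

For this shape, more than half of the box a detector would be trained on is background, while a polygon label records the outline itself.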

To overcome this limitation, alternative annotation types such as polygons can be used. A polygon traces the object's outline, allowing for more accurate and detailed object segmentations and enabling better performance, particularly for objects with irregular shapes. Although labeling data with polygons may require additional time and effort, it captures objects more precisely and can lead to improved results in object detection tasks. Models such as the Segment Anything Model (SAM), developed by Meta AI, reduce that effort considerably: tools like Roboflow's Smart Polygon feature (powered by SAM) drastically speed up the process of annotating data with polygons.

The Experiment: Polygon vs Bounding Box Annotations

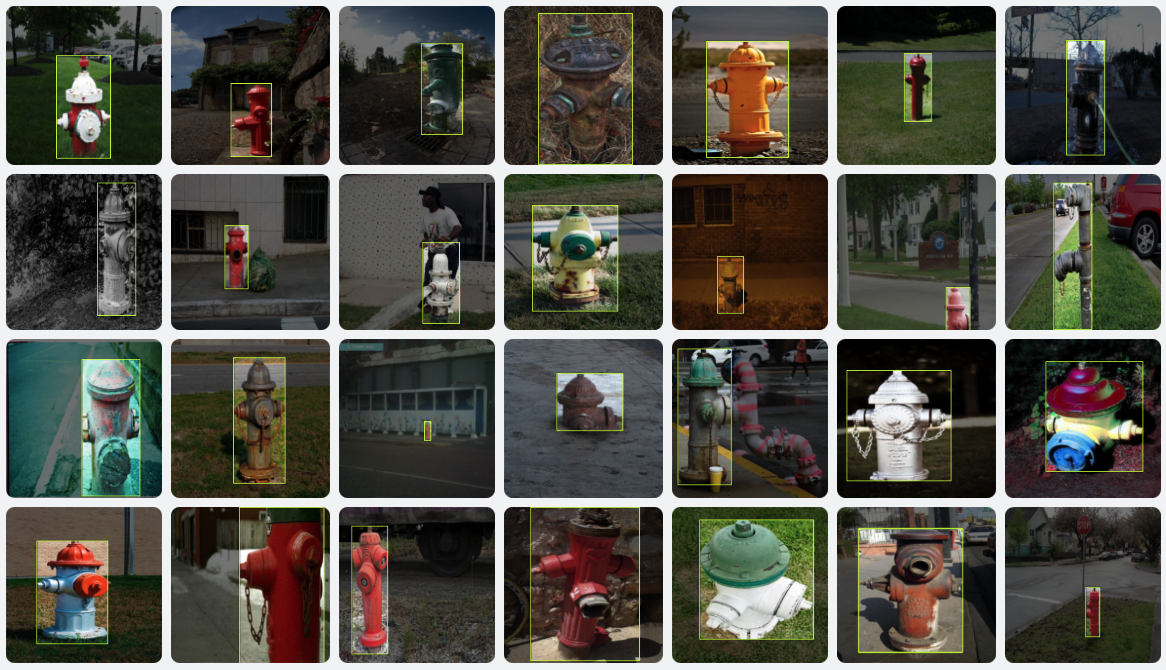

Throughout all our experiments, we kept the model, parameters, and dataset consistent. We focused on a dataset curated specifically for fire hydrants, which you can download in both annotation formats using the links below.

- Fire Hydrants: Bounding Box Annotations

- Fire Hydrants: Polygon Annotations

The dataset comprises 408 original images plus 570 augmented images, available with both bounding box and polygon annotations. Keep in mind that results may vary on custom datasets depending on their size, quality, class distribution, and domain-specific nuances.
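Assuming both versions are exported in the YOLOv8 text format (one line per object: a class index followed by coordinates normalized to [0, 1]), the difference between the datasets is visible directly in the label files: a bounding box line holds `cx cy w h`, while a polygon line holds a list of `x y` vertex pairs. An object detector still predicts boxes, so polygon labels are reduced to their enclosing box for training. The function below is a minimal sketch of that reduction (the name `yolo_line_to_box` is hypothetical), not Ultralytics' own implementation.

```python
def yolo_line_to_box(line):
    """Parse one YOLO label line and return (class_id, (cx, cy, w, h)).

    Box lines have exactly 4 values after the class index; polygon lines
    have 6 or more (at least three x, y vertex pairs).
    """
    parts = line.split()
    class_id = int(parts[0])
    values = [float(v) for v in parts[1:]]
    if len(values) == 4:
        return class_id, tuple(values)  # already cx, cy, w, h
    if len(values) < 6 or len(values) % 2:
        raise ValueError(f"malformed polygon label: {line!r}")
    xs, ys = values[0::2], values[1::2]
    x_min, x_max = min(xs), max(xs)
    y_min, y_max = min(ys), max(ys)
    # Enclosing box of the polygon, in the same normalized cx, cy, w, h format.
    return class_id, (
        (x_min + x_max) / 2,
        (y_min + y_max) / 2,
        x_max - x_min,
        y_max - y_min,
    )


print(yolo_line_to_box("0 0.5 0.5 0.2 0.4"))          # box label
print(yolo_line_to_box("0 0.1 0.2 0.5 0.2 0.3 0.6"))  # triangle polygon label
```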

The quality and quantity of the dataset have a significant impact on the performance of object detection models. High-quality annotations, with accurate object boundaries and precise labeling, play a crucial role in effectively training the model. Conversely, inconsistent and incomplete annotations can hinder the model's learning capability and its ability to generalize.

If you have a dataset annotated with bounding boxes and want to convert them into instance segmentation annotation labels, use our SAM tutorial and notebook to convert the dataset.
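If you take the SAM route, the core step is prompting SAM with each existing box and turning the returned mask into a polygon label. The sketch below assumes Meta's `segment_anything` package with a `SamPredictor` already built from a checkpoint and `set_image` already called for the current image, plus OpenCV 4.x for contour extraction. The function names are hypothetical; the linked tutorial and notebook remain the reference workflow.

```python
import numpy as np


def box_to_polygon(predictor, box_xyxy):
    """Prompt SAM with one pixel-space box and return the mask outline as (x, y) points.

    predictor: a segment_anything.SamPredictor on which set_image() was
    already called for the image containing this box (assumption).
    """
    import cv2  # OpenCV 4.x: findContours returns (contours, hierarchy)

    masks, _scores, _logits = predictor.predict(
        box=np.asarray(box_xyxy), multimask_output=False
    )
    mask = masks[0].astype(np.uint8)
    contours, _ = cv2.findContours(mask, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    if not contours:
        return []
    largest = max(contours, key=cv2.contourArea)  # keep the main object region
    points = [(float(x), float(y)) for x, y in largest.reshape(-1, 2)]
    return points if len(points) >= 3 else []  # a polygon needs 3+ vertices


def polygon_to_yolo_line(class_id, points_px, img_w, img_h):
    """Format pixel-space polygon points as a normalized YOLO segmentation label line."""
    coords = []
    for x, y in points_px:
        coords += [x / img_w, y / img_h]
    return " ".join([str(class_id)] + [f"{c:.6f}" for c in coords])


print(polygon_to_yolo_line(0, [(10, 20), (30, 20), (30, 40)], img_w=100, img_h=200))
# 0 0.100000 0.100000 0.300000 0.100000 0.300000 0.200000
```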

For our experiments, we used a popular and effective object detection architecture: Ultralytics YOLOv8. We used Roboflow to download the datasets with both bounding box and polygon annotations. For the from-scratch runs, we initialized the model from the provided configuration file, yolov8n.yaml, and trained it on the respective dataset for each annotation type.

We trained YOLOv8 models on both the bounding box and polygon datasets for a total of 80 epochs each, enough to allow sufficient learning, using the accompanying code.
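The annotation-type and initialization combinations discussed in the results can be organized as a small experiment grid. This is a minimal sketch, assuming the `ultralytics` package and hypothetical local paths to each dataset's `data.yaml`. In Ultralytics, `yolov8n.yaml` builds the network with random weights, while `yolov8n.pt` loads the pretrained YOLOv8n checkpoint; that the pretrained runs used this checkpoint, and the image size of 640 (the Ultralytics default), are assumptions rather than settings confirmed by the original experiments.

```python
from itertools import product

# Hypothetical paths: point these at your own YOLOv8-format exports.
DATASETS = {
    "bbox": "datasets/fire-hydrants-bbox/data.yaml",
    "polygon": "datasets/fire-hydrants-polygon/data.yaml",
}
# "yolov8n.yaml" builds YOLOv8n with random weights (training from scratch);
# "yolov8n.pt" starts from the pretrained YOLOv8n checkpoint.
INITIALIZATIONS = {"scratch": "yolov8n.yaml", "pretrained": "yolov8n.pt"}
EPOCHS = 80


def experiment_grid():
    """One run per (annotation type, initialization) pair."""
    return [
        {"name": f"{ann}-{init}", "model": INITIALIZATIONS[init], "data": DATASETS[ann]}
        for ann, init in product(DATASETS, INITIALIZATIONS)
    ]


def run_experiments(grid, epochs=EPOCHS, imgsz=640):
    """Train and validate each configuration; returns {run name: box mAP50}."""
    from ultralytics import YOLO  # imported here so the grid can be inspected without it

    results = {}
    for cfg in grid:
        model = YOLO(cfg["model"])
        model.train(data=cfg["data"], epochs=epochs, imgsz=imgsz, name=cfg["name"])
        metrics = model.val(data=cfg["data"])
        results[cfg["name"]] = metrics.box.map50  # box mAP at IoU 0.50
    return results


for cfg in experiment_grid():
    print(cfg["name"], cfg["model"])
```

To launch all four training runs, call `run_experiments(experiment_grid())`; each run's outputs land in its own named directory under Ultralytics' `runs/` folder.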

Model Evaluation and Performance Metrics

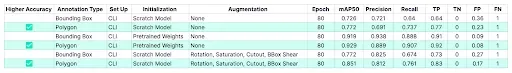

We evaluated the models primarily with mAP50 and mAP, along with a normalized confusion matrix. mAP50 is the mean average precision at an Intersection over Union (IoU) threshold of 0.50, meaning a predicted box can count as a correct detection only if its IoU with a ground-truth box is at least 0.5. We also analyzed precision, recall, true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN) to gain a deeper understanding of the models' performance.
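The sketch below shows how IoU and the 0.50 threshold feed into AP50 for a single class in a single image. Predictions are matched to ground truth in order of confidence: a match with IoU of at least 0.5 is a TP, an unmatched prediction is an FP, and an unmatched ground-truth box is an FN. It uses all-point interpolation for simplicity; evaluators such as Ultralytics use a 101-point interpolation and aggregate over all images, so their numbers can differ slightly. mAP50 is then the mean of AP50 over classes. The function names are illustrative.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def ap50(predictions, ground_truths, iou_threshold=0.5):
    """AP at IoU 0.50 for one class in one image.

    predictions: list of (confidence, box); ground_truths: list of boxes.
    """
    if not ground_truths:
        return 0.0
    matched = set()
    tp_flags = []
    # Highest-confidence predictions claim ground-truth boxes first.
    for _conf, box in sorted(predictions, key=lambda p: p[0], reverse=True):
        best_j, best_iou = None, 0.0
        for j, gt in enumerate(ground_truths):
            overlap = iou(box, gt)
            if j not in matched and overlap > best_iou:
                best_j, best_iou = j, overlap
        is_tp = best_j is not None and best_iou >= iou_threshold
        if is_tp:
            matched.add(best_j)
        tp_flags.append(is_tp)

    # Precision and recall after each prediction in ranked order.
    precisions, recalls, tp = [], [], 0
    for k, is_tp in enumerate(tp_flags, start=1):
        tp += is_tp
        precisions.append(tp / k)
        recalls.append(tp / len(ground_truths))

    # All-point interpolation: area under the precision envelope.
    ap, prev_recall = 0.0, 0.0
    for i, r in enumerate(recalls):
        ap += (r - prev_recall) * max(precisions[i:])
        prev_recall = r
    return ap


gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0.9, (0, 0, 10, 10)), (0.8, (50, 50, 60, 60)), (0.7, (21, 20, 31, 30))]
print(iou((0, 0, 10, 10), (5, 0, 15, 10)))  # 0.333..., below 0.5 so it would be an FP
print(ap50(preds, gts))  # 0.8333...
```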

Results

We present the results of our experiments in the table below:

From the table, we can draw several conclusions. Let's dive into what they mean for your next project.

Polygon Annotations Improve Performance Compared to Bounding Box Annotations

The table shows that, for the same model setup and parameters, polygon annotations consistently achieve higher mAP50 values than bounding box annotations.

Pretrained Weights Initialization Generally Improves Performance

Initializing with pretrained weights generally improves performance: the mAP50 values for both annotation types are higher with pretrained weights than when training from scratch.

Augmentations Enhance Model Performance

Applying augmentations (Rotation, Saturation, Cutout, Bounding Box Shear) improves performance: the mAP50 values for both annotation types increase when augmentations are applied.

Polygons benefit more from augmentations than bounding boxes do because polygons accurately represent object shape, so they can follow geometric transformations like rotation and scaling precisely. After a transformation, the object's extent can be recomputed from the transformed outline, whereas a transformed box can only be re-enclosed by a larger box that adds background. Polygons therefore maintain localization accuracy on complex shapes and let the model learn from diverse examples, improving its ability to handle variations in object appearance, position, and orientation.
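The geometric part of this argument can be checked directly. When a detector is trained from polygon labels, the training box can be recomputed from the augmented outline (Ultralytics' built-in affine augmentation, for instance, regenerates boxes from segments when segment labels are present); with box-only labels, a pipeline can only rotate the four corners and enclose them. The sketch below compares the two after a 45° rotation, using a hypothetical diamond-shaped object.

```python
import math


def rotate(points, degrees, cx, cy):
    """Rotate (x, y) points by `degrees` around the center (cx, cy)."""
    t = math.radians(degrees)
    c, s = math.cos(t), math.sin(t)
    return [
        (cx + c * (x - cx) - s * (y - cy), cy + s * (x - cx) + c * (y - cy))
        for x, y in points
    ]


def enclosing_box_area(points):
    """Area of the axis-aligned box that encloses the points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


# Hypothetical diamond-shaped object whose enclosing box is (0, 0, 10, 10).
polygon = [(5, 0), (10, 5), (5, 10), (0, 5)]
box_corners = [(0, 0), (10, 0), (10, 10), (0, 10)]

from_polygon = enclosing_box_area(rotate(polygon, 45, 5, 5))
from_box = enclosing_box_area(rotate(box_corners, 45, 5, 5))
print(round(from_polygon, 6), round(from_box, 6))  # 50.0 200.0
```

Here the polygon-derived box fits the rotated object (area 50) exactly, while the box derived from rotated corners has area 200, so three quarters of it is background.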

It's important to note that these conclusions are based on the results in the table and may not cover all possible scenarios. Further analysis and experimentation are required to validate the pattern across different models, datasets, and parameters.

Conclusion

In this blog post, we explored the impact of polygon annotations on the performance of object detection models. Our experiments demonstrated that using polygon annotations can lead to improved accuracy compared to models trained with traditional bounding box annotations, particularly in scenarios where objects have irregular shapes.

Furthermore, we leveraged augmentations to enhance the performance of models trained with polygon annotations. By introducing additional variations and challenges to the training data, the models became more robust and achieved even higher accuracy. The augmentations, such as rotation, saturation, cutout, and bounding box shear, further improved the models' ability to generalize to real-world scenarios.

By adopting polygon annotations and utilizing augmentations, you can leverage the power of precise object representation and diverse training data to boost the performance of your object detection models. These techniques open up new avenues for improving the accuracy and reliability of computer vision systems, enabling a wide range of applications in fields such as autonomous driving, robotics, and surveillance.

So, upgrade your object detection models and happy engineering!

Cite this Post

Use the following entry to cite this post in your research:

Arty Ariuntuya. (Jul 19, 2023). Improve Accuracy: Polygon Annotations for Object Detection. Roboflow Blog: https://blog.roboflow.com/polygon-vs-bounding-box-computer-vision-annotation/