As AI systems move beyond text and images and start operating in the physical world, understanding space becomes essential. Robots, autonomous systems, augmented reality, and simulation tools need more than object detection or language reasoning. They need a stable understanding of three-dimensional environments, including how objects relate to each other and how those relationships change over time.

This capability is called spatial intelligence. It sits at the intersection of perception, geometry, memory, and action. Spatial intelligence allows an AI system not just to describe a scene but also to reason about it, move within it, and predict the outcome of interactions. Without it, systems may look impressive while failing at basic physical consistency.

Closing this gap between impressive output and physical consistency is now a central challenge in computer vision, robotics, and embodied AI.

What Is Spatial Intelligence?

Spatial intelligence is the ability to understand space and to use that understanding to act. It means knowing where objects are, how they relate to each other, and how those relationships change when something moves. In AI, this comes from building internal world models that represent the world in three dimensions.

Humans naturally think this way. We do not see the world as labels or text. We experience it as a continuous 3D space with objects, distances, motion, and hidden areas. This is why we can easily judge whether something will fit, fall, collide, or where it went when it moved out of view.

At the center of spatial intelligence is a world model. A world model is an internal map of how the world is arranged and how it changes over time. Instead of treating each image as a separate picture, the model keeps track of objects, their 3D positions, their relationships, and their movement. This allows an AI system to stay consistent across viewpoints, handle occlusions, predict what will happen next, and plan actions.
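The idea of a world model that keeps objects around between observations can be sketched in a few lines. This is only an illustrative toy, not how any particular system implements it: the names `TrackedObject`, `WorldModel`, `observe`, `mark_occluded`, and `predict` are hypothetical, and the constant-velocity prediction is an assumption chosen for simplicity.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class TrackedObject:
    """One entry in the world model: a labelled object with 3D state."""
    label: str
    position: Vec3                      # metres, in a fixed world frame
    velocity: Vec3 = (0.0, 0.0, 0.0)    # metres per second
    visible: bool = True


@dataclass
class WorldModel:
    """Hypothetical minimal world model: objects persist even when unseen."""
    objects: Dict[str, TrackedObject] = field(default_factory=dict)

    def observe(self, name: str, label: str, position: Vec3, dt: float) -> None:
        """Update an object from a new detection and estimate its velocity."""
        prev = self.objects.get(name)
        if prev is not None and dt > 0:
            velocity = tuple((p - q) / dt for p, q in zip(position, prev.position))
        else:
            velocity = (0.0, 0.0, 0.0)
        self.objects[name] = TrackedObject(label, position, velocity, True)

    def mark_occluded(self, name: str) -> None:
        """The object left view; keep it in the model instead of deleting it."""
        self.objects[name].visible = False

    def predict(self, name: str, dt: float) -> Vec3:
        """Constant-velocity guess of where the object will be after dt seconds."""
        obj = self.objects[name]
        return tuple(p + v * dt for p, v in zip(obj.position, obj.velocity))


world = WorldModel()
world.observe("ball", "ball", (0.0, 0.0, 1.0), dt=0.0)
world.observe("ball", "ball", (0.5, 0.0, 1.0), dt=0.5)  # moved 0.5 m in 0.5 s
world.mark_occluded("ball")                             # rolls behind a box
predicted = world.predict("ball", dt=1.0)               # (1.5, 0.0, 1.0)
```

The point of the sketch is that the ball stays in the model after it is occluded, so the system can still say where it probably is.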

More specifically, spatial intelligence includes:

- Understanding 3D space: objects have depth, size, and position, and they do not disappear when they are hidden.

- Reasoning beyond images and text: the system can imagine outcomes, not just recognize pixels or generate words.

- Action in space: the ability to move, interact, and operate in real or simulated environments.

- Recovering 3D from flat images: cameras see flat images, but intelligence comes from understanding the 3D world behind them.

- Consistency over time and physics: objects persist, move smoothly, and follow basic physical rules.

Spatial intelligence is harder than language because the real world is three-dimensional, changing, and constrained by physics. But it is also what allows AI systems to move from describing the world to truly interacting with it.

Why Visual-Spatial Intelligence Matters

The real world isn’t made of images; it’s made of space. Spatial intelligence changes the role of AI from a passive viewer to an active participant that can navigate environments, reason about interactions, and plan actions before they happen. That shift is what makes robots practical outside the lab, augmented reality stable instead of fragile, and automation trustworthy in real human spaces.

1. Robots that can work in real homes and workplaces

Robots need spatial intelligence to operate outside controlled lab settings. This includes understanding free space, avoiding obstacles, picking and placing objects, and navigating cluttered environments where layouts change and people move unpredictably.

Impact:

- Assistance with repetitive or physically demanding tasks

- Reduced physical strain for workers

- Safer collaboration between humans and robots

This is why many researchers describe spatial intelligence as the missing link for practical robotics.

2. Augmented reality that is actually useful

Augmented reality only works when digital content understands the physical world it appears in. Spatial intelligence allows virtual objects to stay fixed in place, navigation cues to align with real hallways, and instructions to attach correctly to machines or tools.

Impact:

- Hands-free guidance for maintenance, repairs, and training

- Indoor navigation in hospitals, airports, and large buildings

- Improved accessibility tools for visually impaired users

Without spatial intelligence, AR systems become unstable and unreliable.

3. Safer vehicles and smarter transportation systems

Autonomous and driver-assist systems depend on spatial understanding to estimate distance, interpret road geometry, and predict the motion of pedestrians and vehicles. Spatial vision helps AI reason about where risk might occur, not just what objects are present.

Impact:

- Fewer accidents

- Better driver assistance

- Safer and more efficient traffic systems

4. Faster design, creativity, and content creation

Spatial intelligence allows AI to generate and reason about three-dimensional environments, not just images or text. This is already influencing film production, game development, architecture, and virtual design workflows. Instead of sketching ideas in 2D, creators can explore them as navigable spaces.

Impact:

- Faster prototyping and iteration

- Lower cost for experimentation

- Small teams creating complex virtual worlds

5. Better learning and problem solving in STEM fields

Many scientific and technical concepts are inherently spatial, such as molecular structures, anatomy, mechanical systems, and physical forces. Spatial AI tools can visualize these concepts in 3D and allow interactive exploration rather than relying on memorization.

Impact:

- Improved science and engineering education

- Better understanding of complex systems

- More intuitive tools for research and discovery

6. Healthcare and human-centered environments

In healthcare settings, spatial intelligence supports robot assistants, safer navigation in crowded clinical spaces, and better interpretation of three-dimensional medical data. It also enables realistic simulation environments for training.

Impact:

- Reduced workload for healthcare staff

- Safer environments for patients

- Better planning, training, and care delivery

Real-World Examples of Spatial Intelligence

Spatial intelligence shows up in many practical systems today where machines need to understand space and interact with the physical world, not just recognize objects in isolation. The following examples show how AI moves beyond flat image recognition toward understanding space, geometry, and motion.

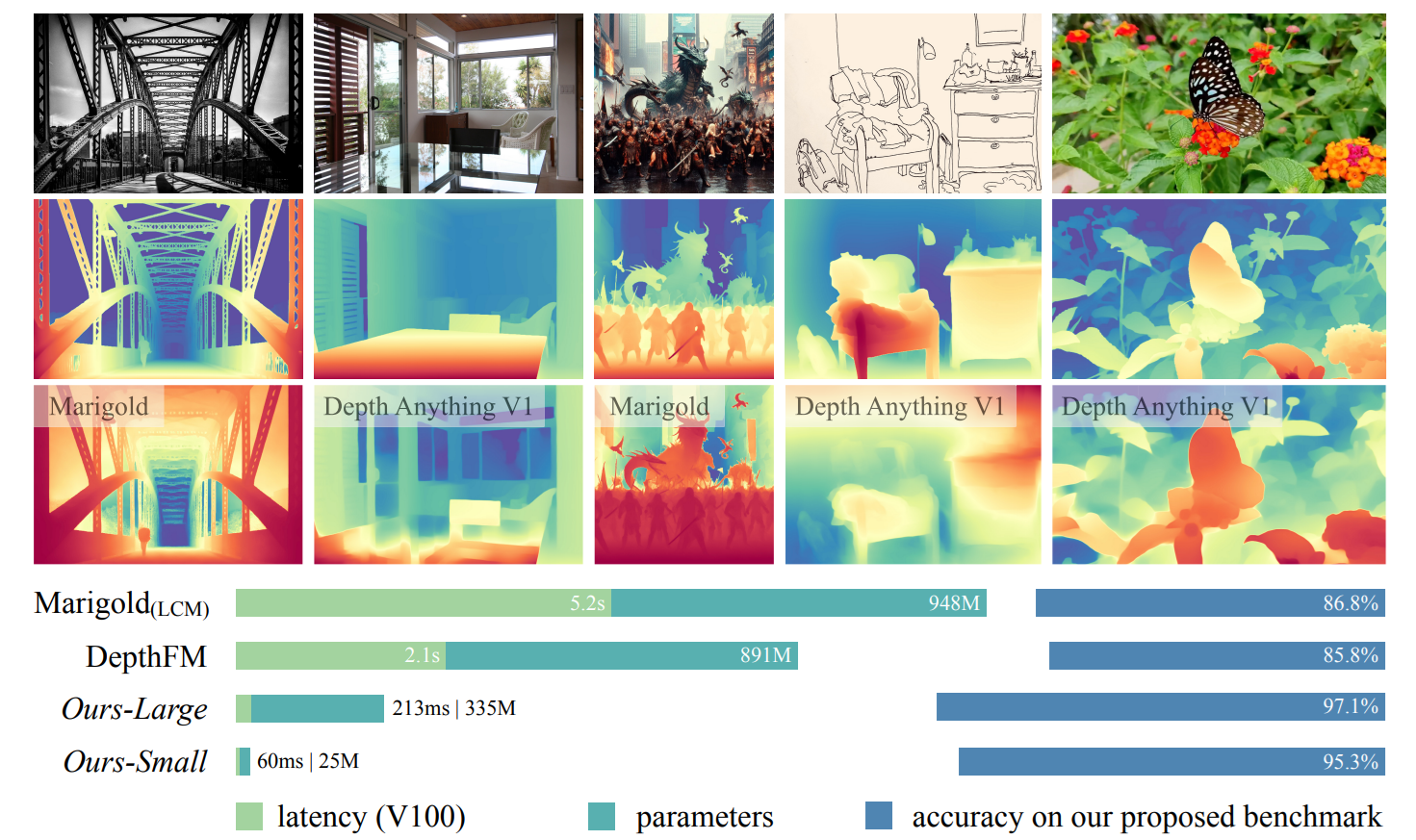

Example 1: Depth Estimation

Depth estimation models predict how far objects are from the camera using a single image or video. This builds 3D awareness from flat inputs. Depth estimation is used in applications such as:

- AR object placement

- Obstacle detection for robots and vehicles

- Understanding scene layout

Depth estimation turns flat images into spatial maps that AI can reason over.
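To make the "spatial map" idea concrete, here is a minimal sketch of how a depth map can be turned into 3D points with the pinhole camera model. It assumes the depth values are metric (many monocular models predict only relative depth) and that the camera intrinsics `fx`, `fy`, `cx`, `cy` are known; the function names are hypothetical.

```python
def backproject_depth(depth, fx, fy, cx, cy):
    """Convert a depth map (rows of depths along the optical axis) into 3D points.

    Pinhole model: a pixel (u, v) with depth z maps to
    x = (u - cx) * z / fx and y = (v - cy) * z / fy.
    Pixels with non-positive depth are treated as missing and skipped.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


def nearest_obstacle(points):
    """Distance from the camera to the closest 3D point, or None if there are none."""
    return min(((x * x + y * y + z * z) ** 0.5 for x, y, z in points), default=None)


# Tiny 2x2 depth map in metres; 0.0 marks a pixel with no valid depth.
depth_map = [[2.0, 2.0],
             [0.0, 4.0]]
cloud = backproject_depth(depth_map, fx=1.0, fy=1.0, cx=0.0, cy=0.0)
closest = nearest_obstacle(cloud)  # 2.0
```

Once the pixels are points in space, questions such as "how close is the nearest obstacle" become simple geometry.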

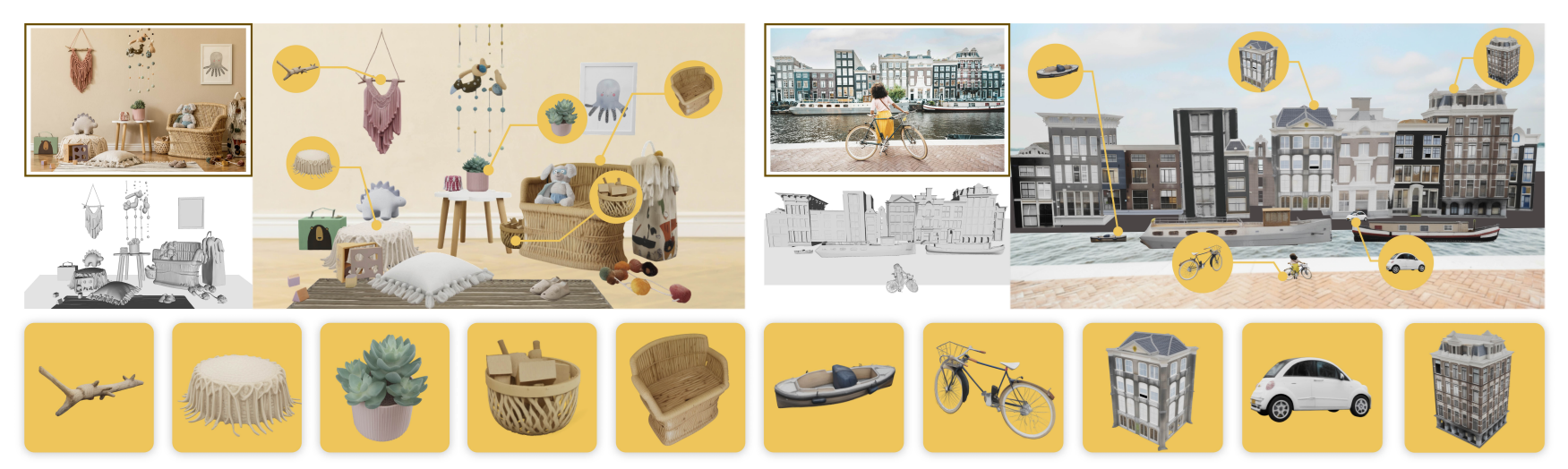

Example 2: 3D Reconstruction and Neural Scene Representations

3D reconstruction techniques recover the shape and layout of a scene from multiple images. Newer neural approaches, such as NeRF-style models and segmentation-driven 3D pipelines (including SAM-based 3D workflows), can create highly detailed and consistent scene representations. These techniques are used in:

- Virtual tours and digital twins

- Film and visual effects production

- Industrial inspection and simulation

These models allow AI systems to maintain a coherent world instead of generating disconnected views.
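The geometric core of multi-view reconstruction is triangulation: if the same point is seen from two known camera positions, its 3D location is where the two viewing rays (nearly) meet. The sketch below uses the classic midpoint method, not a neural representation such as NeRF, and assumes the camera centers and ray directions are already known; `triangulate_midpoint` is a hypothetical name.

```python
def triangulate_midpoint(c1, d1, c2, d2, eps=1e-12):
    """Estimate a 3D point from two viewing rays.

    c1, c2 are camera centers and d1, d2 are ray directions (not necessarily
    unit length). Returns the midpoint of the shortest segment joining the rays.
    """
    def dot(p, q):
        return sum(x * y for x, y in zip(p, q))

    w = [a - b for a, b in zip(c1, c2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < eps:
        raise ValueError("rays are parallel; the point cannot be triangulated")
    s = (b * e - c * d) / denom   # parameter along ray 1
    t = (a * e - b * d) / denom   # parameter along ray 2
    p1 = [ci + s * di for ci, di in zip(c1, d1)]
    p2 = [ci + t * di for ci, di in zip(c2, d2)]
    return tuple((x + y) / 2 for x, y in zip(p1, p2))


# Two cameras 2 m apart, both looking at a point 4 m in front of the baseline.
point = triangulate_midpoint((-1.0, 0.0, 0.0), (1.0, 0.0, 4.0),
                             (1.0, 0.0, 0.0), (-1.0, 0.0, 4.0))
# point is (0.0, 0.0, 4.0)
```

Real pipelines repeat this kind of estimate over many matched points and views, and must also estimate the camera poses themselves.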

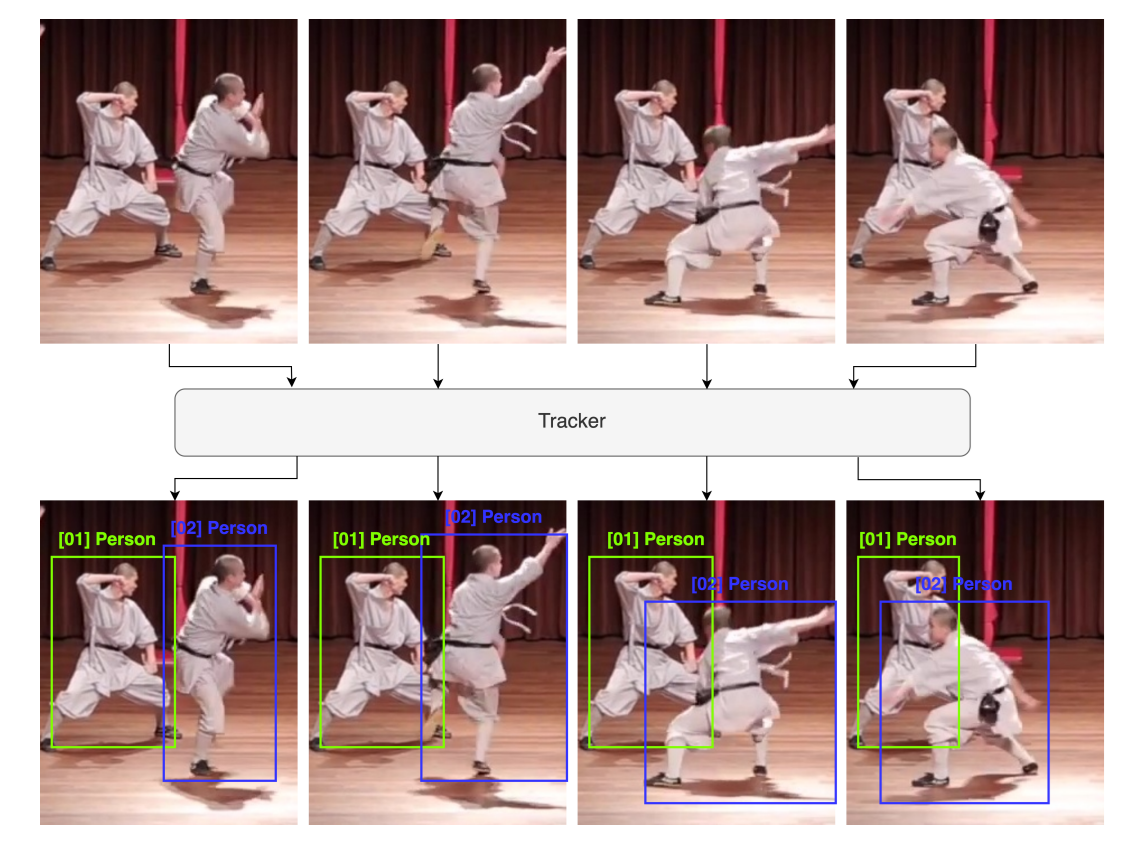

Example 3: Object Tracking

Object tracking systems maintain the identity and position of objects as they move through a scene, even when they overlap or become temporarily occluded. This requires spatial memory and reasoning across time, not just frame-by-frame detection. Some of its applications are:

- Traffic monitoring and pedestrian tracking

- Sports analytics

- Factory and warehouse monitoring

Tracking adds the time dimension to spatial intelligence, allowing systems to reason about motion and interaction.
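One simple way to keep identities across frames is to match each new detection to the existing track whose box overlaps it most, and to keep unmatched tracks alive for a few frames in case the object is only occluded. The sketch below is a deliberately minimal greedy IoU tracker under those assumptions; the class name `IouTracker` and the parameters `iou_threshold` and `max_missed` are hypothetical, and production trackers typically add motion and appearance models.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class IouTracker:
    def __init__(self, iou_threshold=0.3, max_missed=5):
        self.iou_threshold = iou_threshold
        self.max_missed = max_missed      # frames a track survives unseen
        self.tracks = {}                  # track id -> {"box": box, "missed": int}
        self._next_id = 0

    def update(self, detections):
        """Match detections to tracks; return {track_id: box} for this frame."""
        # All (score, track, detection) pairs, best overlap first.
        pairs = sorted(
            ((iou(t["box"], det), tid, i)
             for tid, t in self.tracks.items()
             for i, det in enumerate(detections)),
            reverse=True,
        )
        matched_tracks, matched_dets, assigned = set(), set(), {}
        for score, tid, i in pairs:
            if score < self.iou_threshold:
                break
            if tid in matched_tracks or i in matched_dets:
                continue
            self.tracks[tid] = {"box": detections[i], "missed": 0}
            matched_tracks.add(tid)
            matched_dets.add(i)
            assigned[tid] = detections[i]
        # Unmatched tracks may be occluded: remember them for a while.
        for tid in list(self.tracks):
            if tid not in matched_tracks:
                self.tracks[tid]["missed"] += 1
                if self.tracks[tid]["missed"] > self.max_missed:
                    del self.tracks[tid]
        # Unmatched detections start new tracks.
        for i, det in enumerate(detections):
            if i not in matched_dets:
                self.tracks[self._next_id] = {"box": det, "missed": 0}
                assigned[self._next_id] = det
                self._next_id += 1
        return assigned
```

For example, if an object is detected in two frames, missed in a third, and detected again nearby in a fourth, this tracker assigns it the same id throughout, because the track is remembered during the gap.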

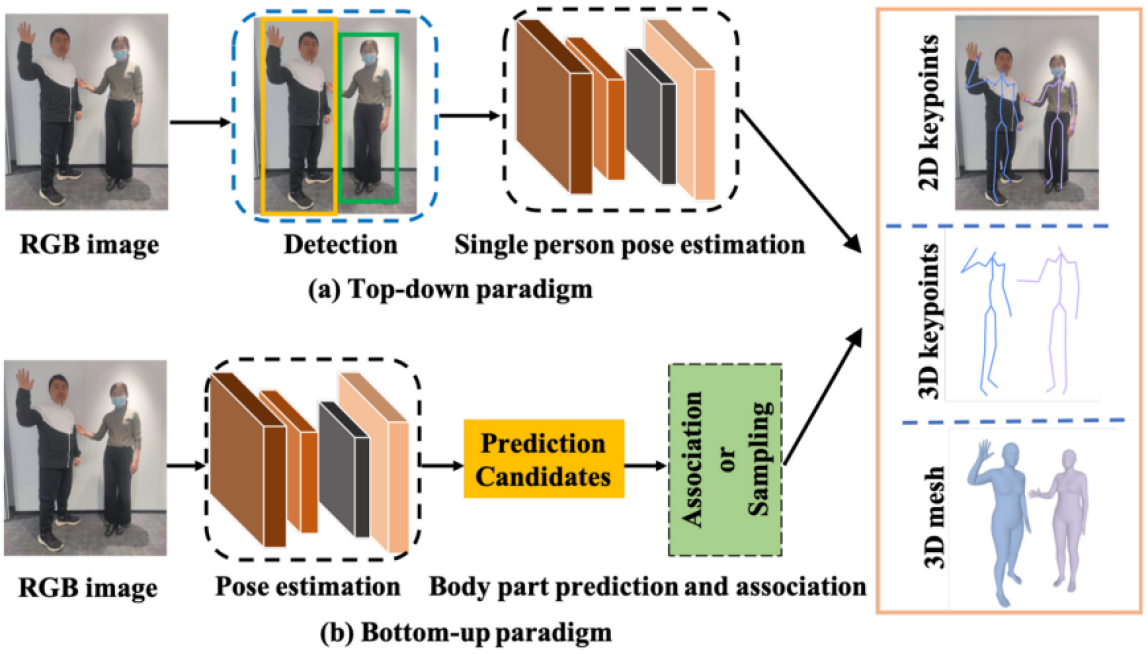

Example 4: Pose Estimation

Pose estimation models detect keypoints on humans or objects and reason about their spatial configuration. They go beyond simply finding objects to understanding the structure and movement of bodies or tools in space. Modern pose estimation includes both 2D keypoint detection and 3D pose estimation, where the system predicts the three-dimensional positions of joints and body parts from images or video. Applications of pose estimation include:

- Human-robot interaction, where robots understand human posture and intent

- Safety and ergonomics monitoring in workplaces

- Sports performance analysis and training feedback

- Gesture-based interfaces for control and accessibility

- Motion tracking for AR, VR, and animation

Pose estimation moves computer vision from recognizing that objects are present to understanding where they are in space, how they are oriented, and how they are moving.
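Once a model has produced 3D keypoints, spatial configuration can be read off with basic vector math. As a small illustration, the sketch below computes the angle at a joint from three keypoints, for instance shoulder, elbow, and wrist. The function name `joint_angle` and the sample coordinates are made up for the example.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at joint b formed by the 3D keypoints a, b, c."""
    ba = [p - q for p, q in zip(a, b)]
    bc = [p - q for p, q in zip(c, b)]
    dot = sum(x * y for x, y in zip(ba, bc))
    norm = math.sqrt(sum(x * x for x in ba)) * math.sqrt(sum(x * x for x in bc))
    if norm == 0:
        raise ValueError("keypoints coincide; the angle is undefined")
    # Clamp to guard against rounding slightly outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cosine))


shoulder, elbow, wrist = (0.0, 1.0, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
elbow_angle = joint_angle(shoulder, elbow, wrist)  # about 90 degrees
```

An ergonomics or sports-analysis tool could track angles like this over time to flag awkward postures or measure range of motion.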

Example 5: Scene Understanding with Vision-Language Models

Vision-language models (VLMs) can interpret full environments, not just individual objects. Scene understanding involves analyzing overall layouts, spatial relationships, and how elements interact within a space.

A VLM can analyze an image of a room and describe its spatial organization, such as where furniture is placed, which paths are open or blocked, which objects are stacked or touching, and how people might move through the space. For example, given a cluttered scene, a VLM can explain which areas are navigable, which objects obstruct movement, and how the layout would change if something were moved. Some applications of VLMs are:

- Assistive systems that describe environments for visually impaired users

- Human-robot collaboration in shared spaces

- AR interfaces that adapt to real-world layouts

- Safety and inspection tools that assess scene conditions

VLMs act as scene interpreters, providing structured, human-readable spatial understanding rather than precise 3D measurements. This turns raw visual input into meaningful scene-level reasoning that helps humans and machines make spatial decisions.

Learn more:

Comprehensive Guide to Vision-Language Models

Spatial Intelligence Conclusion

As AI moves into robotics, transportation, healthcare, and everyday spaces, understanding three-dimensional environments becomes critical. Systems that lack spatial intelligence may appear capable but fail when faced with real-world complexity and change.

True general intelligence requires more than pattern recognition. It requires the ability to understand space and act within it. Spatial intelligence is therefore not an add-on feature. It is a core requirement for the next generation of AI systems.

Cite this Post

Use the following entry to cite this post in your research:

Timothy M. (Dec 29, 2025). Spatial Intelligence. Roboflow Blog: https://blog.roboflow.com/spatial-intelligence/