Over 500 hours of video are uploaded to YouTube every minute (speaking of which, have you subscribed to our channel yet?). Making sense of that sea of video content creates valuable opportunities. For brands, knowing when and where they're being mentioned means measuring their share-of-voice, marketing to highly relevant viewers, and more.

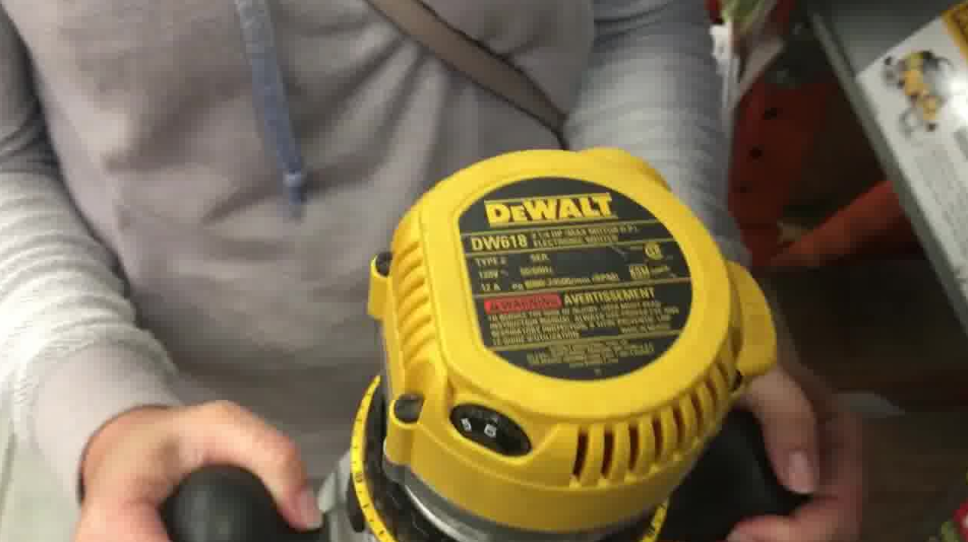

Charles Herring, a software engineer in San Francisco, has taken notice. Charles set out to build a computer vision model that would enable tool brands to identify when they, or a competitor, are being given free air time.

For some companies, power tools represent tens of millions in annual sales. Increasing their exposure, share-of-voice, and, ultimately, conversion from those watching Do It Yourself (DIY) YouTube videos represents a lucrative opportunity.

Charles astutely noted that many YouTubers give some brands hours of free air time, whether a tool is actively used in a shot or just sits idle on a workbench. Providing insight into this otherwise unmeasured channel makes brands aware of their online presence while opening up sponsorship opportunities for YouTubers.

Various tools given free air time in YouTube videos.

Charles set out to create a computer vision model that could run in his browser to identify tools and their corresponding brands. Initially, Charles managed all his source data and training in Google Cloud Platform's infrastructure. He would upload unlabeled images, label them there, and use Google AutoML to train a model.

However, Charles identified a few key issues. First, it was challenging to get dataset-level statistics about his images. How well represented were his classes? Should he combine or drop some class labels? Were the objects distributed across different positions in the frame?
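The questions above can be approximated with a few lines of Python. This is a minimal sketch, not Roboflow's Dataset Health Check: the annotation tuples, the label names (`brand_a_drill`, `brand_b_saw`), and the helper functions are all hypothetical, and it assumes bounding boxes normalized to the 0-1 range.

```python
from collections import Counter

# Hypothetical annotations: (image, label, x_min, y_min, x_max, y_max),
# with box coordinates assumed to be normalized to the 0-1 range.
annotations = [
    ("frame_001.jpg", "brand_a_drill", 0.10, 0.20, 0.30, 0.50),
    ("frame_001.jpg", "brand_b_saw", 0.60, 0.55, 0.90, 0.95),
    ("frame_002.jpg", "brand_a_drill", 0.05, 0.10, 0.25, 0.40),
    ("frame_003.jpg", "brand_a_drill", 0.55, 0.15, 0.80, 0.45),
]


def class_counts(rows):
    """Count how many boxes each class label has."""
    return Counter(row[1] for row in rows)


def position_grid(rows, bins=3):
    """Bucket box centers into a bins x bins grid (row 0 is the top of the image)."""
    grid = [[0] * bins for _ in range(bins)]
    for _image, _label, x_min, y_min, x_max, y_max in rows:
        center_x = (x_min + x_max) / 2
        center_y = (y_min + y_max) / 2
        # Clamp so a center at exactly 1.0 falls in the last bin.
        col = min(int(center_x * bins), bins - 1)
        row = min(int(center_y * bins), bins - 1)
        grid[row][col] += 1
    return grid


if __name__ == "__main__":
    for label, count in class_counts(annotations).most_common():
        print(f"{label}: {count}")
    for grid_row in position_grid(annotations):
        print(grid_row)
```

A heavily skewed class count suggests merging or dropping labels, and a grid with most of its mass in one cell suggests the objects rarely appear elsewhere in the frame.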

Second, Charles's dataset was fairly limited, yet tools in power tool videos can appear in a much wider array of conditions: drills rotated at different angles, varying lighting conditions, tools at varying distances from the camera, and more.

Charles found Roboflow to be a choice system-of-record for his computer vision training data. Roboflow Pro supports the Google Cloud Vision AutoML format for its CSV and annotations, so uploading data is as easy as dropping in his CSV of images for inspection.

Moreover, Roboflow's Dataset Health Check enables Charles to easily keep track of his class representation, and ontology management for computer vision enables him to easily combine classes, drop underrepresented labels, and more.

To increase the dataset size, Charles leverages Roboflow augmentation to flip, adjust brightness, and randomly alter output images for training. The training dataset size more than doubled, enabling the model to learn a wider array of YouTube video conditions.
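To make the idea concrete, here is a minimal sketch of flip and brightness augmentation for object detection data. It is not Roboflow's implementation: the function names, the grayscale nested-list image, the brightness range, and the normalized `(label, x_min, y_min, x_max, y_max)` box layout are assumptions chosen for illustration. The key detail is that a horizontal flip must also mirror the bounding boxes.

```python
import random


def flip_horizontal(image, boxes):
    """Mirror a grayscale image (list of pixel rows) and its normalized boxes."""
    flipped_image = [row[::-1] for row in image]
    # A box spanning [x_min, x_max] becomes [1 - x_max, 1 - x_min] after mirroring.
    flipped_boxes = [
        (label, 1.0 - x_max, y_min, 1.0 - x_min, y_max)
        for (label, x_min, y_min, x_max, y_max) in boxes
    ]
    return flipped_image, flipped_boxes


def adjust_brightness(image, factor):
    """Scale pixel values by factor, clamping to the 0-255 range."""
    return [[max(0, min(255, round(pixel * factor))) for pixel in row] for row in image]


def augment(image, boxes, rng):
    """Produce one randomly altered copy of an image and its boxes."""
    out_image, out_boxes = image, boxes
    if rng.random() < 0.5:
        out_image, out_boxes = flip_horizontal(out_image, out_boxes)
    # Hypothetical range: darken or brighten by up to 25 percent.
    factor = rng.uniform(0.75, 1.25)
    return adjust_brightness(out_image, factor), out_boxes


if __name__ == "__main__":
    image = [[0, 100, 200], [50, 150, 250]]
    boxes = [("drill", 0.0, 0.0, 0.25, 1.0)]
    augmented_image, augmented_boxes = augment(image, boxes, random.Random(0))
    print(augmented_image, augmented_boxes)
```

Generating one or more such copies per source image is how a training set can grow without any new labeling work.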

Charles then used Roboflow's Google Cloud AutoML Vision CSV export to continue training in Google Cloud without changing the rest of his workflow.

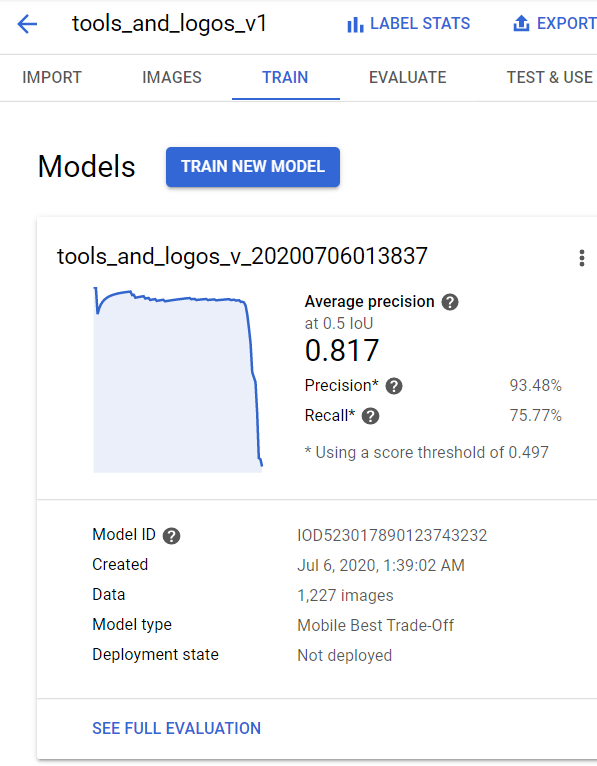

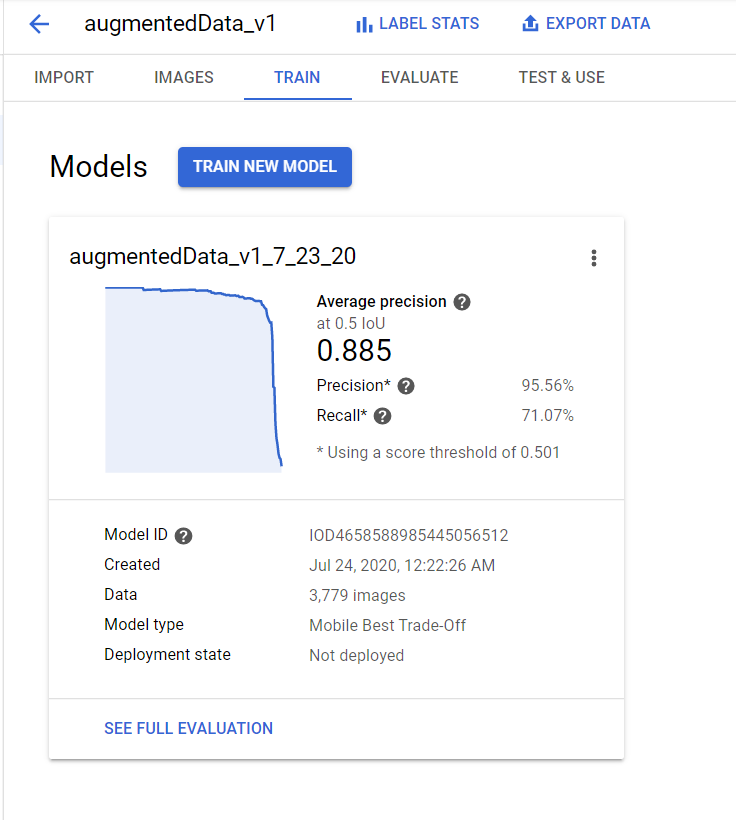

Before Roboflow, Charles saw a Google Cloud Vision mAP of 0.817. After Roboflow, his mAP rose to 0.885, a relative improvement of about eight percent.

Charles's AutoML mAP before (left) and after (right) using Roboflow. Charles saw an eight percent jump.
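For readers checking the figure, the eight percent is a relative gain over the starting mAP rather than a difference in mAP points. A short calculation using only the two numbers reported above (the variable names are ours):

```python
map_before = 0.817  # mAP reported before using Roboflow
map_after = 0.885   # mAP reported after using Roboflow

absolute_gain = map_after - map_before      # difference in mAP points
relative_gain = absolute_gain / map_before  # gain as a fraction of the baseline

print(f"absolute: {absolute_gain:.3f}, relative: {relative_gain:.1%}")
# prints "absolute: 0.068, relative: 8.3%"
```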

Since incorporating Roboflow Pro into his workflow, Charles is seeing higher quality models and spending less time on dataset management and more time on his domain problem, including selling his services to large power tool retailers.

Interested in boosting your results with Roboflow Pro? Contact us.

Cite this Post

Use the following entry to cite this post in your research:

Joseph Nelson. (Aug 16, 2020). Using Computer Vision to Find Brands in YouTube Videos. Roboflow Blog: https://blog.roboflow.com/using-computer-vision-to-find-brands-in-youtube-videos/