YOLOv11 is a computer vision model architecture from the creators of the YOLOv5 and YOLOv8 models. YOLOv11 supports object detection, segmentation, classification, keypoint detection, and oriented bounding box (OBB) detection.

In this guide, we are going to discuss what YOLOv11 is, how it performs, and how you can train and deploy YOLOv11 models to your own hardware.


Without further ado, let’s get started!

What is YOLOv11?

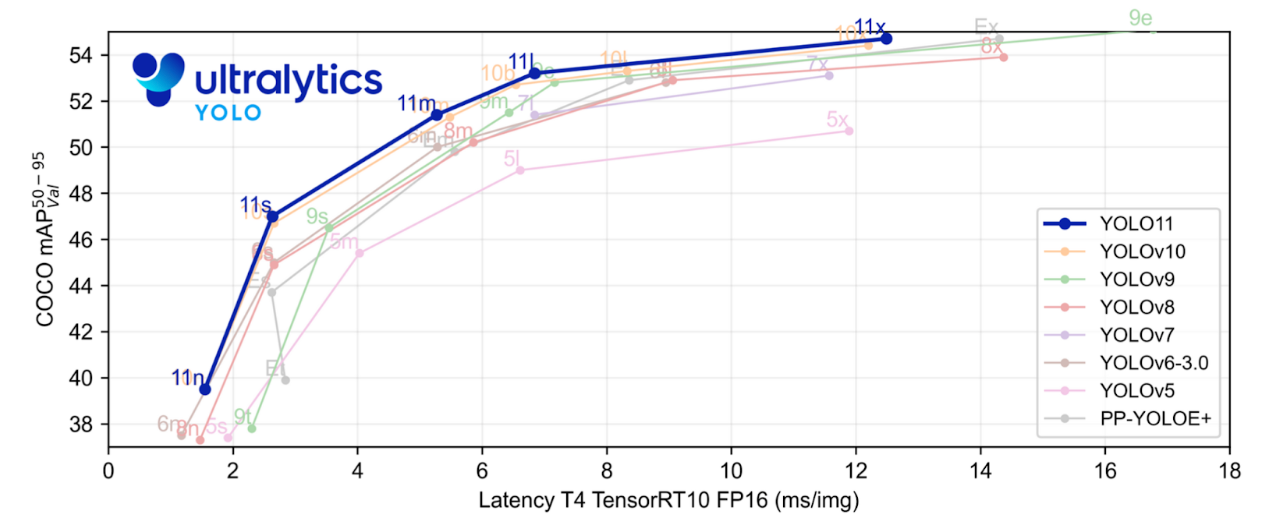

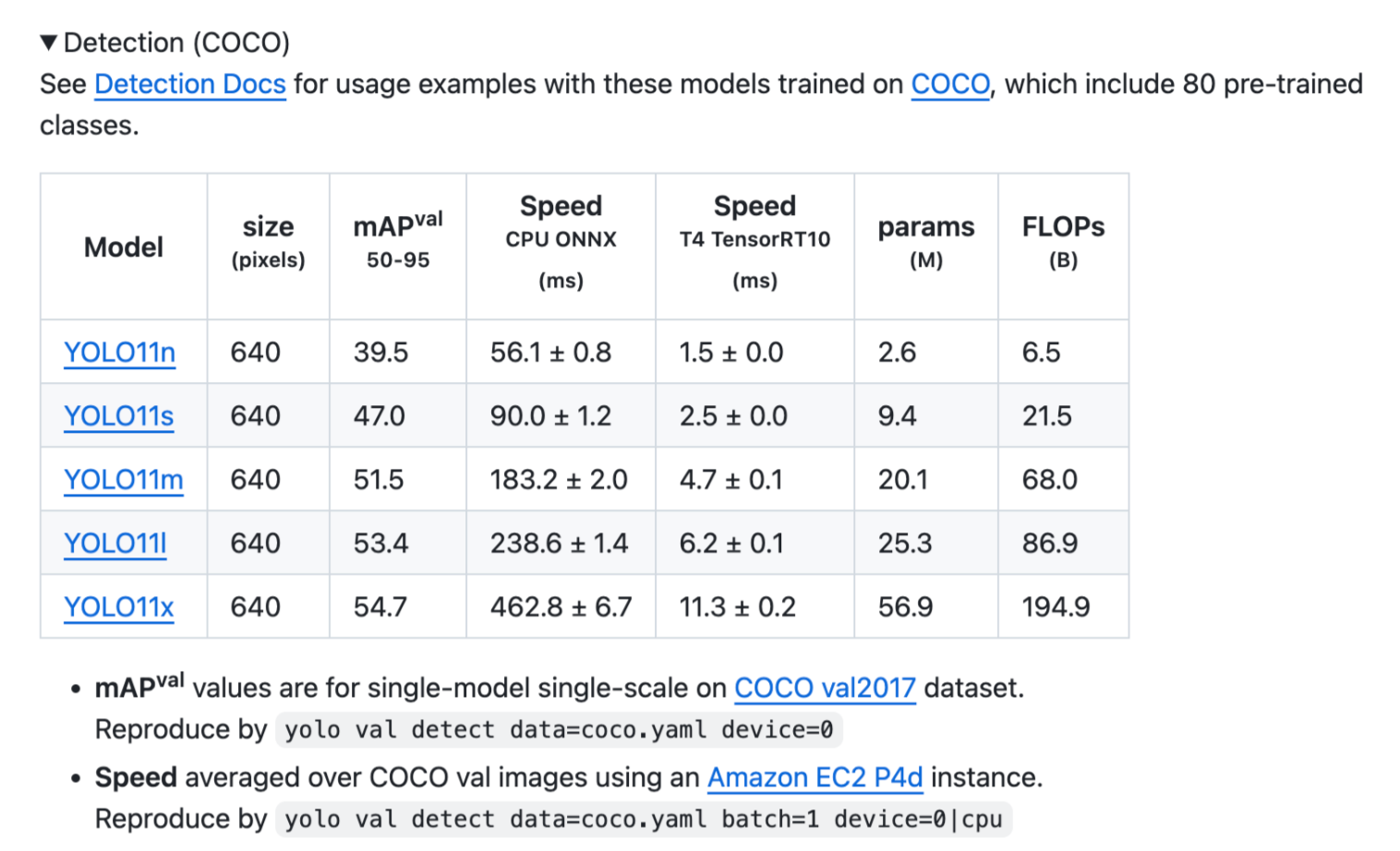

YOLOv11 is a series of computer vision models with varying sizes and levels of accuracy.

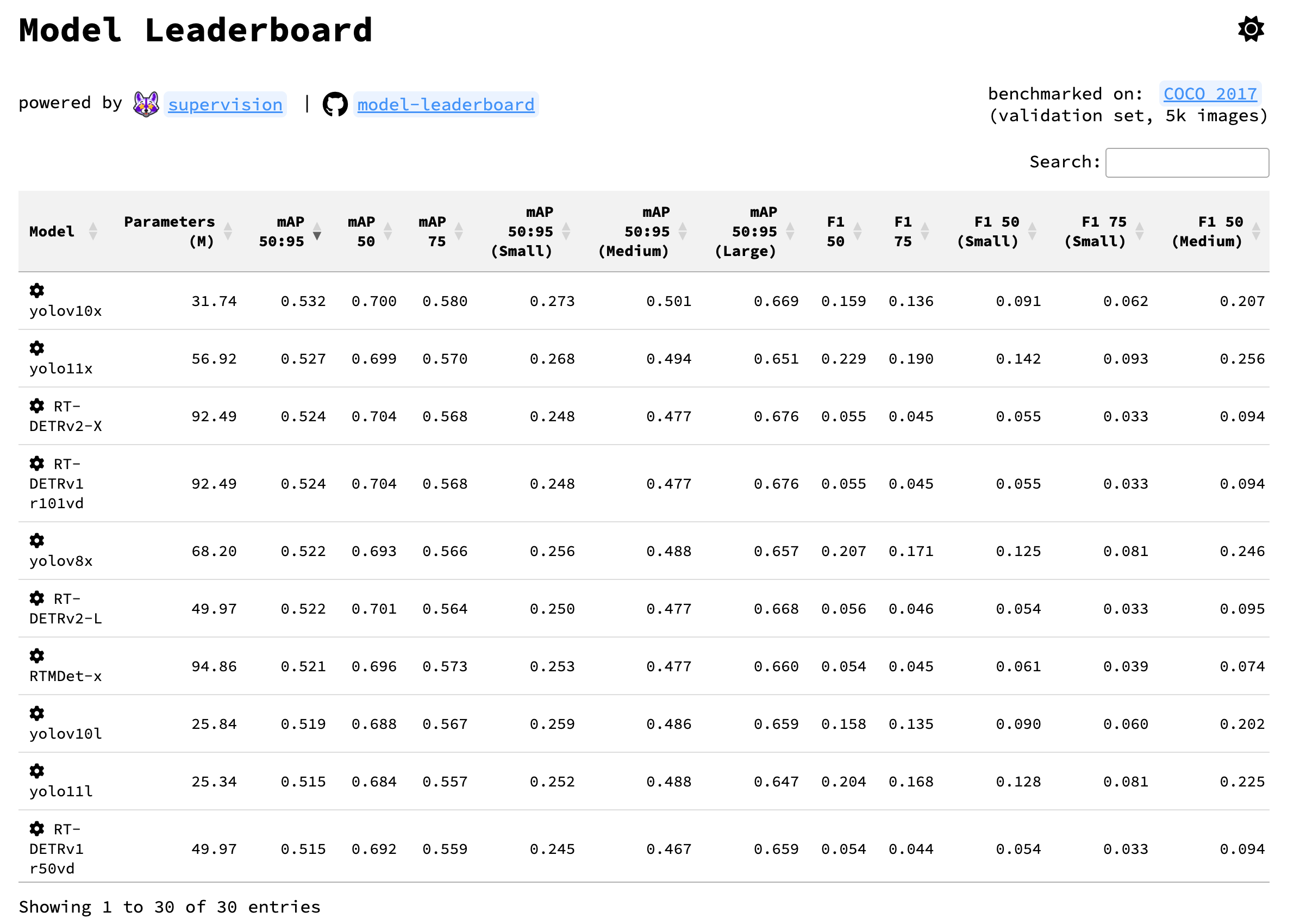

The YOLOv11x model, the largest in the series, reportedly achieves a 54.7% mAP score when evaluated against the Microsoft COCO benchmark. The smallest model, YOLOv11n, reportedly achieves a 39.5% mAP score on the same dataset. See how YOLOv11 compares to other object detection models in the object detection model leaderboard.

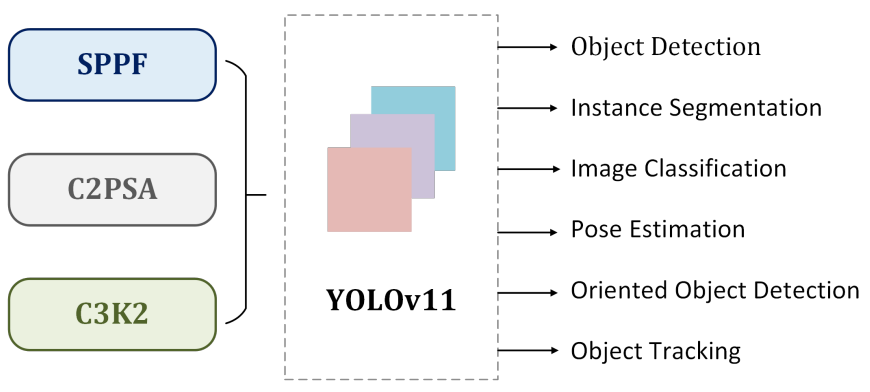

YOLOv11 Architecture: An Overview

YOLOv11's architecture is built around the C3k2 (Cross Stage Partial with kernel size 2) block, SPPF (Spatial Pyramid Pooling - Fast), and C2PSA (Convolutional block with Parallel Spatial Attention) components. These components improve feature extraction and model accuracy, continuing the YOLO lineage of better models for real-time object detection use cases.

The C3k2 block replaces the C2f block used in previous YOLO models. C3k2 is more computationally efficient, which improves processing speed.
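Of the components named above, SPPF is the simplest to illustrate. As implemented in earlier YOLO releases, SPPF chains several small max-pooling layers instead of running large ones in parallel, relying on the fact that two stacked 5-wide max pools (stride 1) cover the same window as one 9-wide max pool. The sketch below checks that property on a 1D list in plain Python. It is a simplified demonstration under that assumption, not the YOLOv11 implementation, and `max_pool_1d` is a hypothetical helper.

```python
def max_pool_1d(values, kernel):
    """Stride-1 max pooling; windows are clipped at the edges of the list."""
    half = kernel // 2
    n = len(values)
    return [max(values[max(0, i - half):min(n, i + half + 1)]) for i in range(n)]


signal = [3, 1, 4, 1, 5, 9, 2, 6, 5, 3, 5, 8]

# Two chained 5-wide pools see the same neighborhood as a single 9-wide pool.
chained = max_pool_1d(max_pool_1d(signal, 5), 5)
single = max_pool_1d(signal, 9)
print(chained == single)
```

Reusing the output of one small pool as the input to the next is what saves computation compared with pooling the same input at several large kernel sizes.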

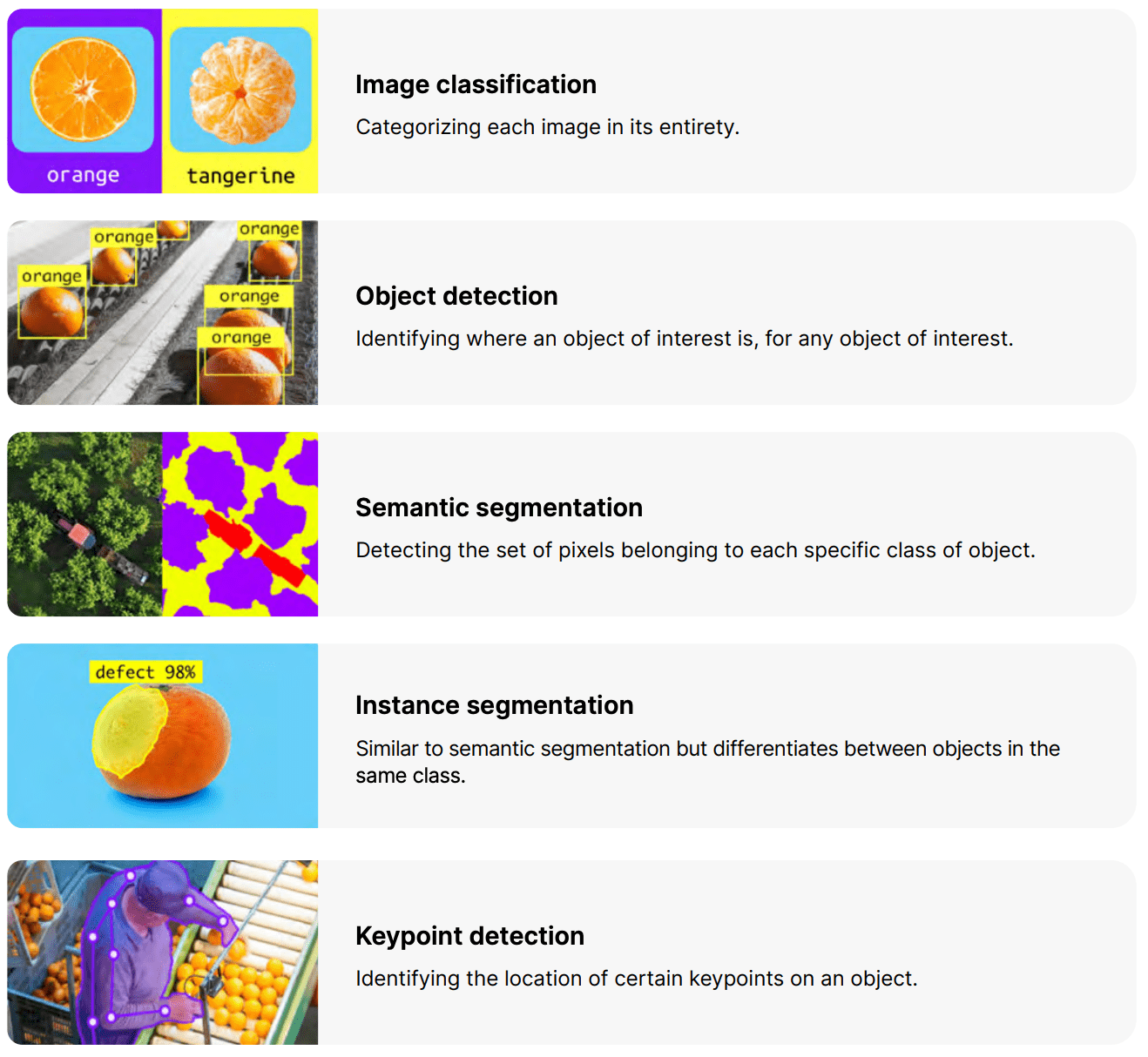

YOLOv11 supports multiple task types, including object detection, classification, image segmentation, keypoint detection, and oriented bounding box (OBB) detection.

For a deeper dive into the architecture, we recommend reading the YOLOv11 paper.

YOLOv11 Annotation Format

YOLOv11 uses the YOLO PyTorch TXT annotation format, a modified version of the Darknet annotation format. If you need to convert data to YOLO PyTorch TXT for use in your YOLOv11 model, we have you covered. Check out this tool to learn how to convert data for use in your new YOLOv11 model.
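To make the format concrete: for object detection, YOLO PyTorch TXT pairs each image with a `.txt` file that holds one line per object, written as `class_id x_center y_center width height`, with coordinates normalized to the 0-1 range by the image dimensions. The following is a minimal sketch of converting a pixel-space box into such a line. The function name `to_yolo_line` and the sample values are illustrative and not part of any library.

```python
def to_yolo_line(class_id, x_min, y_min, x_max, y_max, img_w, img_h):
    """Convert a pixel-space corner box into one YOLO TXT annotation line."""
    # YOLO stores the box center and size, each divided by the image dimensions.
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# Hypothetical example: a 200x100 px box in a 640x480 image, class 0.
print(to_yolo_line(0, 100, 200, 300, 300, 640, 480))
```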

YOLOv11 Data Labeling Tool

The creator and maintainer of YOLOv11 has partnered with Roboflow to be a recommended annotation and export tool for use in your YOLOv11 projects. Using Roboflow, you can annotate data for YOLOv11 tasks such as object detection, classification, and segmentation, and export data so that you can use it with the YOLOv11 CLI or Python package. See the YOLOv11 data labeling guide.

Getting Started with YOLOv11

To get started applying YOLOv11 to your own use case, check out our guide on how to train YOLOv11 on a custom dataset.

To see what others are doing with YOLOv11, browse Roboflow Universe for YOLOv11 models, datasets, and inspiration.

Deploy YOLOv11 Models

In addition to using the Roboflow hosted API for deployment, you can use Roboflow Inference, an open source inference solution. Inference works with CPU and GPU, giving you immediate access to a range of devices, from the NVIDIA Jetson (e.g., Jetson Nano or Orin) to ARM CPU devices.

With Roboflow Inference, you can self-host and deploy your model on-device.

Step #1: Upload Model Weights to Roboflow

Once you have finished training a YOLOv11 model, you will have a set of trained weights ready for use with a hosted API endpoint. You can upload your model weights to Roboflow Deploy with the deploy() function in the Roboflow pip package to use your trained weights in the cloud.

To upload model weights, first create a new project on Roboflow, upload your dataset, and create a project version. Check out our complete guide on how to create and set up a project in Roboflow. Then, write a Python script with the following code:

    import os
    import roboflow

    # Assumption: HOME is the directory that contains your runs/ folder.
    HOME = os.getcwd()

    roboflow.login()
    rf = roboflow.Roboflow()

    # Replace PROJECT_ID and DATASET_VERSION with your own values.
    project = rf.workspace().project(PROJECT_ID)
    project.version(DATASET_VERSION).deploy(model_type="yolo11", model_path=f"{HOME}/runs/detect/train/")

Replace PROJECT_ID with the ID of your project and DATASET_VERSION with the version number associated with your project. Learn how to find your project ID and dataset version number.

Shortly after running the above code, your model will be available for use in the Deploy page on your Roboflow project dashboard.
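Before running the upload, it can help to confirm that the training directory actually contains weights. The sketch below assumes the training run saved its best checkpoint at `weights/best.pt` inside the run directory, which is the default layout of the `ultralytics` training output. `find_weights` is a hypothetical helper, not part of the Roboflow package.

```python
from pathlib import Path


def find_weights(run_dir):
    """Return the path to weights/best.pt inside run_dir, or None if it is missing."""
    # Assumption: the run directory follows the runs/detect/train/weights/best.pt layout.
    candidate = Path(run_dir) / "weights" / "best.pt"
    return candidate if candidate.is_file() else None


weights = find_weights("runs/detect/train")
if weights is None:
    print("No best.pt found; check the model_path you pass to deploy().")
else:
    print(f"Found weights at {weights}")
```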

Step #2: Install Inference

You can deploy applications using the Inference Docker containers or the pip package. Let's use the pip package. First run:

    pip install inference

Step #3: Run Inference on an Image

Then, create a new Python file and add the following code:

    from inference import get_model
    import supervision as sv
    import cv2

    image_file = "image.jpeg"
    image = cv2.imread(image_file)

    model = get_model(model_id="model-id")

    # run inference on our chosen image, image can be a url, a numpy array, a PIL image, etc.
    results = model.infer(image)[0]

    # load the results into the supervision Detections api
    detections = sv.Detections.from_inference(results)

    # create supervision annotators
    bounding_box_annotator = sv.BoundingBoxAnnotator()
    label_annotator = sv.LabelAnnotator()

    # annotate the image with our inference results
    annotated_image = bounding_box_annotator.annotate(
        scene=image, detections=detections)
    annotated_image = label_annotator.annotate(
        scene=annotated_image, detections=detections)

    # display the image
    sv.plot_image(annotated_image)

Above, set your Roboflow workspace ID, model ID, and API key if you want to use a custom model you have trained in your workspace.

Also, set image_file to the image on which you want to run inference. The example above reads a local file with cv2.imread.

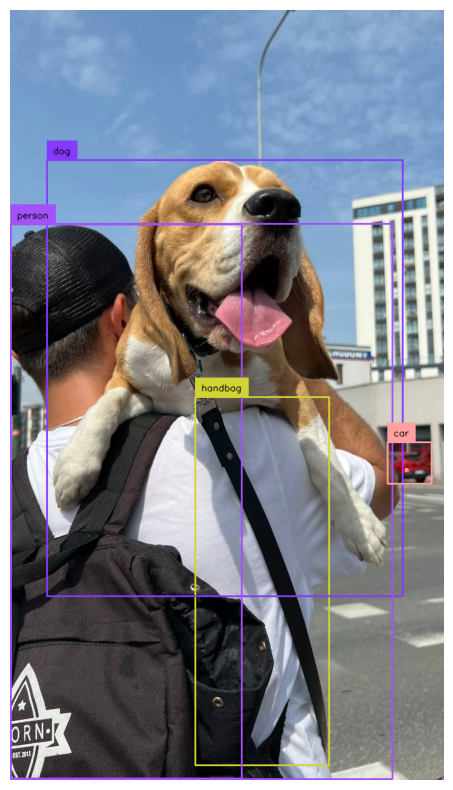

Here is an example of an image running through the model:

The model successfully detected a person in the image, indicated by the purple bounding box on the image.
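If you want to work with the raw predictions rather than annotate an image, the Roboflow detection response lists each prediction with a center point (`x`, `y`), a `width` and `height`, a `confidence`, and a `class`. The sketch below filters such predictions by confidence and converts them to corner coordinates. It operates on a plain dictionary with hypothetical sample values. How you obtain that dictionary from `results` depends on your version of Inference, so treat the input shape as an assumption. `filter_predictions` is an illustrative helper, not a library function.

```python
def filter_predictions(response, min_confidence=0.5):
    """Keep confident predictions and convert center boxes to (x_min, y_min, x_max, y_max)."""
    boxes = []
    for pred in response["predictions"]:
        if pred["confidence"] < min_confidence:
            continue
        half_w, half_h = pred["width"] / 2, pred["height"] / 2
        boxes.append({
            "class": pred["class"],
            "confidence": pred["confidence"],
            "xyxy": (pred["x"] - half_w, pred["y"] - half_h,
                     pred["x"] + half_w, pred["y"] + half_h),
        })
    return boxes


# Hypothetical response with one confident and one weak prediction.
sample = {"predictions": [
    {"x": 320.0, "y": 240.0, "width": 100.0, "height": 200.0, "confidence": 0.91, "class": "person"},
    {"x": 50.0, "y": 60.0, "width": 20.0, "height": 20.0, "confidence": 0.12, "class": "person"},
]}
print(filter_predictions(sample))
```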

You can also run inference on a video stream. To learn more about running your model on video streams – from RTSP to webcam feeds – refer to the Inference video guide.

YOLOv11 FAQs

Does YOLOv11 have a published paper?

Yes. A paper that analyzes YOLOv11 and provides an overview of its architecture has been published, but it was not written by the developer of YOLOv11.

Under what license is YOLOv11 covered?

YOLOv11 is covered under an AGPL-3.0 license. If you deploy YOLOv11 models with Roboflow, you automatically get a commercial license to use the model.

Where is the YOLOv11 source code?

You can find the YOLOv11 source code in the YOLO11 GitHub repository.

What classes can the base YOLOv11 weights identify?

The base YOLOv11 weights that were released with the model were trained on the Microsoft COCO dataset, so they can identify the 80 object classes in COCO.

YOLOv11 Conclusion

Announced and launched in September 2024, YOLOv11 is the latest series of computer vision models in the YOLO lineage. The model architecture supports all the same vision tasks as YOLOv8, while offering improved accuracy when evaluated against the COCO dataset benchmark.

In this guide, we walked through the basics of YOLOv11: what the model is, what you can do with it, and how to deploy the model on your own device. To learn more about training YOLOv11 models, refer to our YOLOv11 training guide.

Cite this Post

Use the following entry to cite this post in your research:

James Gallagher. (Nov 6, 2024). What Is YOLOv11? An Introduction. Roboflow Blog: https://blog.roboflow.com/what-is-yolo11/