Often, the visual data you want to analyze comes in the form of video: security camera footage, YouTube videos, or webcam recordings. We know how to use Roboflow with images, but how does it work with video?

For most computer vision models, the best way to use video is to treat it as a sequence of individual images. As we saw in our tutorial on how to get predictions from your model on videos, you can split a video into frames and then process each frame independently. We can use the same strategy to extract training data.

Extracting Video Frames

While you can use cryptic command-line tools like ffmpeg, the easiest way to train a computer vision model on video is with Roboflow Pro.

Simply drop a video into the upload flow and you will be able to select a frame rate to sample. The higher the frame rate, the more images you will get from your video (but the more likely they are to be similar to each other).
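If you do want to script the sampling yourself, the same idea can be sketched with ffmpeg's `fps` video filter. This is a minimal sketch, not what Roboflow runs internally; the function name, file names, and output pattern are assumptions made for illustration.

```python
import shlex


def build_ffmpeg_command(video_path, output_dir, sample_fps):
    """Build an ffmpeg command that saves `sample_fps` frames per second of video."""
    if sample_fps <= 0:
        raise ValueError("sample_fps must be positive")
    # ffmpeg's `fps` video filter resamples the stream to the requested rate;
    # %05d in the output pattern numbers the saved frames.
    return [
        "ffmpeg",
        "-i", video_path,
        "-vf", f"fps={sample_fps}",
        f"{output_dir}/frame_%05d.jpg",
    ]


# Hypothetical input file and output folder.
command = build_ffmpeg_command("video.mp4", "frames", 2)
print(shlex.join(command))
```

To actually extract the frames, pass the list to `subprocess.run(command, check=True)`. That assumes ffmpeg is installed and the output folder already exists.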

Experiment to see which frame rate strikes a good balance between giving your model enough training data to learn from and not making annotation tedious for your annotators.
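A quick back-of-the-envelope calculation can help narrow down the candidates before you upload anything. The sketch below assumes a hypothetical 90-second clip and simply multiplies duration by sampling rate; the real count may differ by a frame or so depending on how the video is decoded.

```python
import math


def estimate_frame_count(duration_seconds, sample_fps):
    """Approximate how many images sampling at `sample_fps` would yield."""
    return math.floor(duration_seconds * sample_fps)


# Hypothetical 90-second clip and a few candidate sampling rates.
for fps in (0.5, 1, 5, 30):
    print(f"{fps} fps -> about {estimate_frame_count(90, fps)} images")
```

At the high end, a short clip already produces thousands of near-duplicate images to label, which is why a low sampling rate is often a sensible starting point.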

Annotating Video Frames

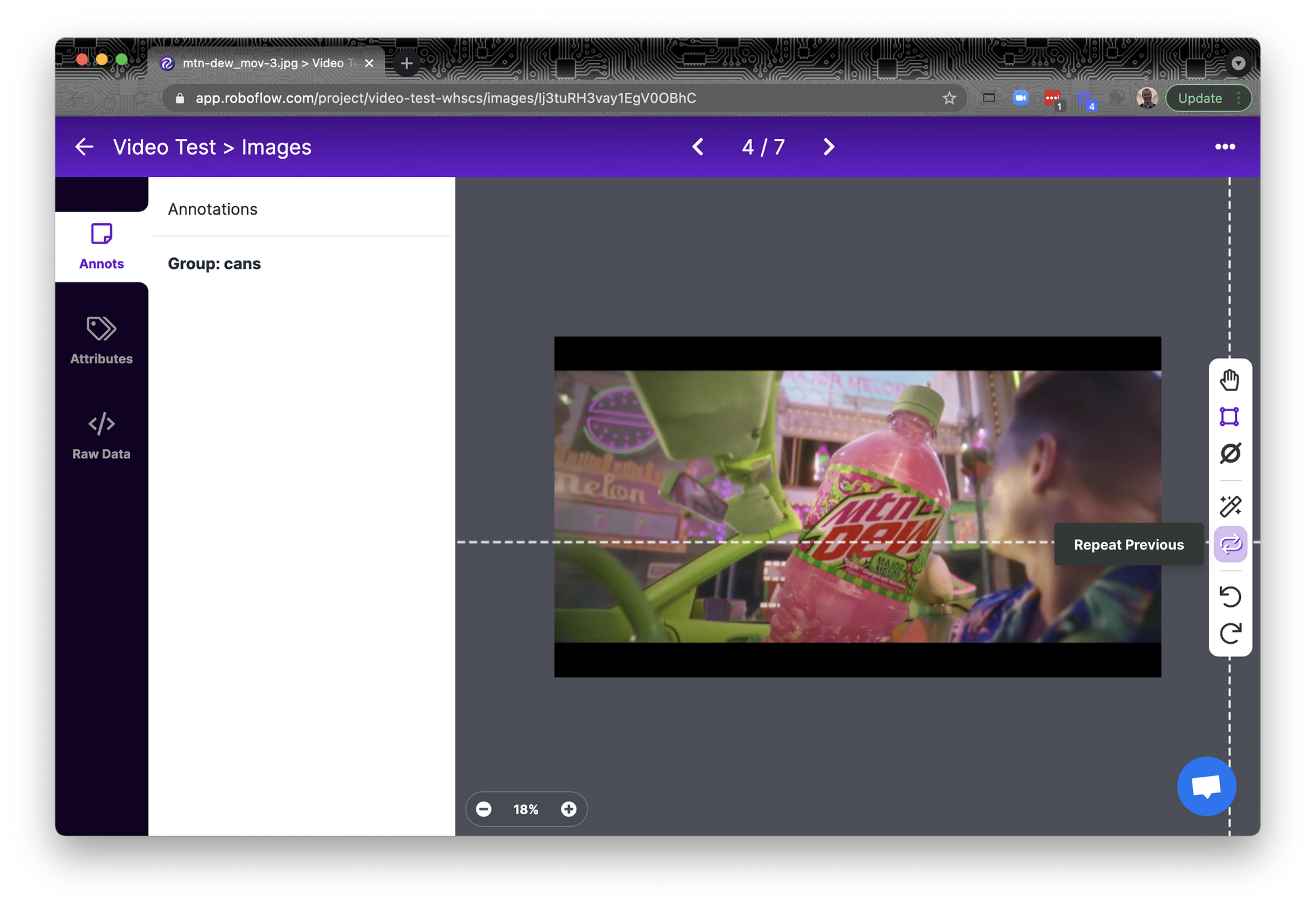

Once you've extracted your frames, you can use all of the same features of Roboflow Annotate that you know and love, including Label Assist with any models that you have previously trained with Roboflow Train.

But there is one extra feature that's useful specifically for video: Repeat Previous.

The Repeat Previous tool copies the annotations from the last image you annotated. Since the same objects often appear across multiple frames of a video, you can follow them over time by slightly adjusting the copied annotations instead of starting from scratch on every frame.
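Conceptually, the workflow looks something like the sketch below. This only illustrates the idea of copying and then adjusting; it is not how Roboflow Annotate is implemented, and the `Box` class and `repeat_previous` function are hypothetical names.

```python
from copy import deepcopy
from dataclasses import dataclass


@dataclass
class Box:
    label: str
    x: float
    y: float
    width: float
    height: float


def repeat_previous(previous_boxes):
    """Return independent copies of the previous frame's boxes as a starting point."""
    return deepcopy(previous_boxes)


# Frame 1 is annotated from scratch.
frame_1 = [Box("car", x=100, y=200, width=80, height=40)]

# Frame 2 starts from a copy of frame 1's boxes.
frame_2 = repeat_previous(frame_1)
frame_2[0].x += 12  # the car moved slightly, so nudge the copied box
```

Because the boxes are copied rather than shared, adjusting the second frame leaves the first frame's annotations untouched.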

Next Steps

Once you've labeled your images, it's time to train a model. You can then use that model for Label Assist if you have more videos to annotate, or deploy it and start getting predictions.