Object detection on iOS has shifted from a cloud-dependent process to a practical on-device capability, enabling real-time vision applications from augmented reality to safety monitoring. Today, a new generation of optimized models makes it possible to run state-of-the-art detection directly on iPhones and iPads, using the Apple Neural Engine for fast inference while keeping camera data on the device.

In this guide, we explore the best object detection models optimized for iOS deployment, from Roboflow's RF-DETR (deployable via the Swift SDK) to YOLO variants and SSD architectures, and show how to choose and deploy them efficiently on Apple devices.

iOS Object Detection Models: Selection Criteria

Here are the criteria we used to evaluate these iOS object detection models.

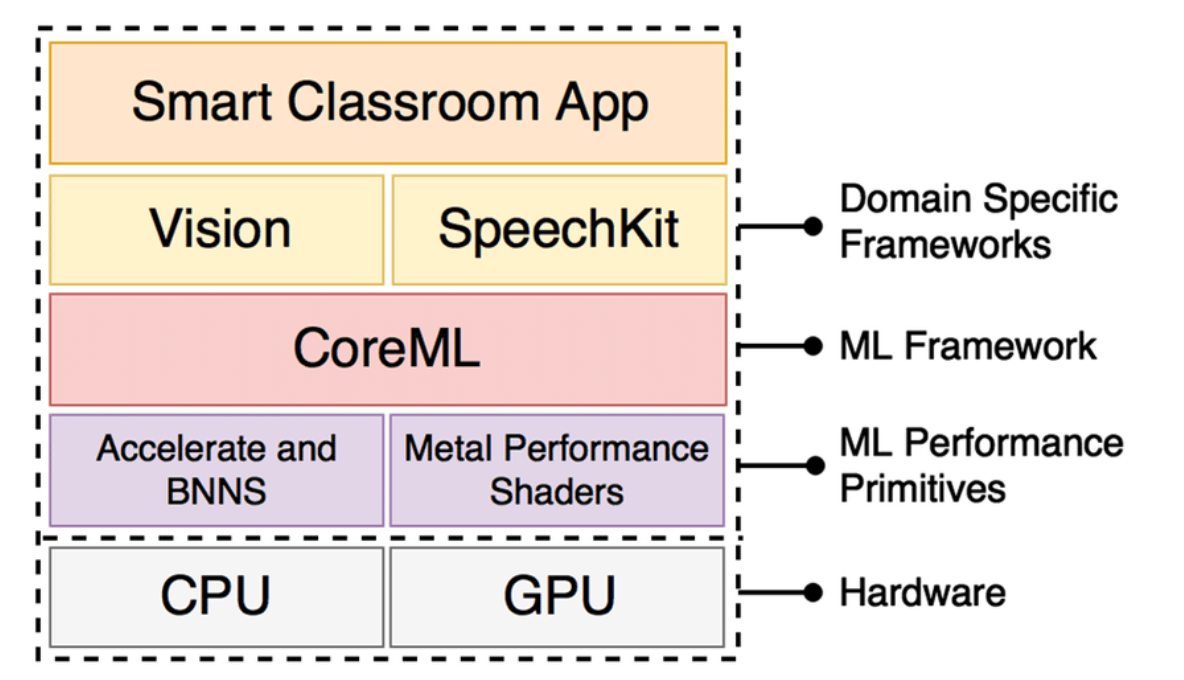

1. iOS Compatibility & CoreML Support

The model must be convertible to CoreML format or provide native iOS SDKs. This ensures seamless integration with Apple's machine learning framework, which automatically dispatches computation across the CPU, GPU, and Neural Engine for optimal performance on iOS devices.

2. Real-Time Performance on Mobile Hardware

Models should achieve inference speeds suitable for live camera processing, typically 15+ FPS on current-generation Apple hardware (A17 Pro iPhones, M-series iPads). This keeps applications smooth and responsive without draining the battery.

3. Model Size and Memory Efficiency

Efficient models with manageable parameter counts and FLOPs are essential for iOS deployment. We prioritize models that fit within typical app size constraints without sacrificing detection accuracy, so they can run on-device without large model downloads.

4. Accuracy on Standard Benchmarks

Models should demonstrate competitive performance on the COCO dataset with at least 40% mAP (mean Average Precision) at IoU 0.50:0.95, indicating reliable detection across object scales relevant to mobile applications.
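The "0.50:0.95" range means AP is averaged over ten IoU thresholds (0.50, 0.55, and so on up to 0.95), so a detection only counts as correct at the stricter thresholds if its box overlaps the ground truth very tightly. The sketch below is a minimal, pure-Python illustration of that idea; the helper names are hypothetical, and real benchmark numbers come from full evaluators such as pycocotools, which also handle box matching, confidence ranking, and precision-recall curves.

```python
def iou(box_a, box_b):
    """Intersection over Union for boxes in (x1, y1, x2, y2) format."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

# mAP@[0.50:0.95] averages AP over these ten IoU thresholds.
COCO_IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]

def thresholds_passed(pred, gt):
    """How many of the ten COCO IoU thresholds a single prediction clears."""
    overlap = iou(pred, gt)
    return sum(overlap >= t for t in COCO_IOU_THRESHOLDS)

pred, gt = (1, 1, 11, 11), (0, 0, 10, 10)
print(round(iou(pred, gt), 3))       # 0.681
print(thresholds_passed(pred, gt))   # 4 (counts at 0.50-0.65, misses 0.70-0.95)
```

A box that is off by a single pixel in each direction on a 10-pixel object already fails six of the ten thresholds, which is why mAP@[0.50:0.95] rewards precise localization.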

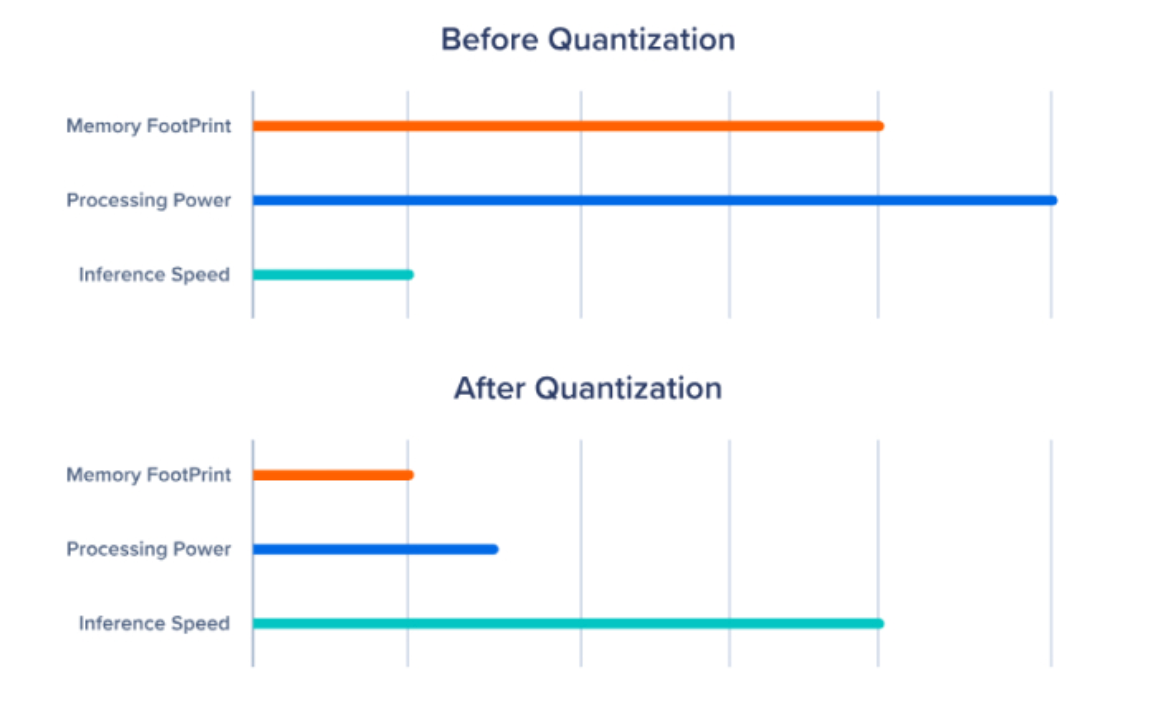

5. Quantization-Friendly Architecture

The architecture should maintain accuracy when quantized to INT8 or FP16 precision for Apple devices. This is critical since quantization dramatically reduces model size and improves inference speed on the Neural Engine, the specialized ML hardware in Apple chips.
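INT8 quantization maps each floating-point value onto one of 256 integer levels using a scale and a zero point. A minimal sketch of per-tensor affine quantization follows; it is a generic illustration of the technique, not the exact scheme used by coremltools or the Neural Engine, and the function names are hypothetical.

```python
def quantize_int8(values):
    """Per-tensor affine INT8 quantization: q = clamp(round(x / scale) + zero_point)."""
    # Include 0.0 in the range so that zero is exactly representable.
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)
    scale = (hi - lo) / 255.0 or 1.0  # 256 levels; fall back to 1.0 for an all-zero tensor
    zero_point = round(-128 - lo / scale)
    q = [max(-128, min(127, round(x / scale) + zero_point)) for x in values]
    return q, scale, zero_point

def dequantize_int8(q, scale, zero_point):
    """Map INT8 codes back to approximate floating-point values."""
    return [(v - zero_point) * scale for v in q]

weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
q, scale, zp = quantize_int8(weights)
restored = dequantize_int8(q, scale, zp)
print(q)
print(max(abs(a - b) for a, b in zip(weights, restored)))  # at most one step of `scale`
```

Each value comes back within one quantization step, and 0.0 maps back exactly, which matters for zero padding and ReLU outputs. Architectures whose weights and activations have narrow, well-behaved ranges lose less accuracy under this rounding.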

6. Production-Ready Tools & Deployment Support

Models with official iOS integration, Swift SDKs, and community support ensure smooth deployment from experimentation to production.

Best iOS Object Detection Models

Here's our list of the best object detection models for iOS.

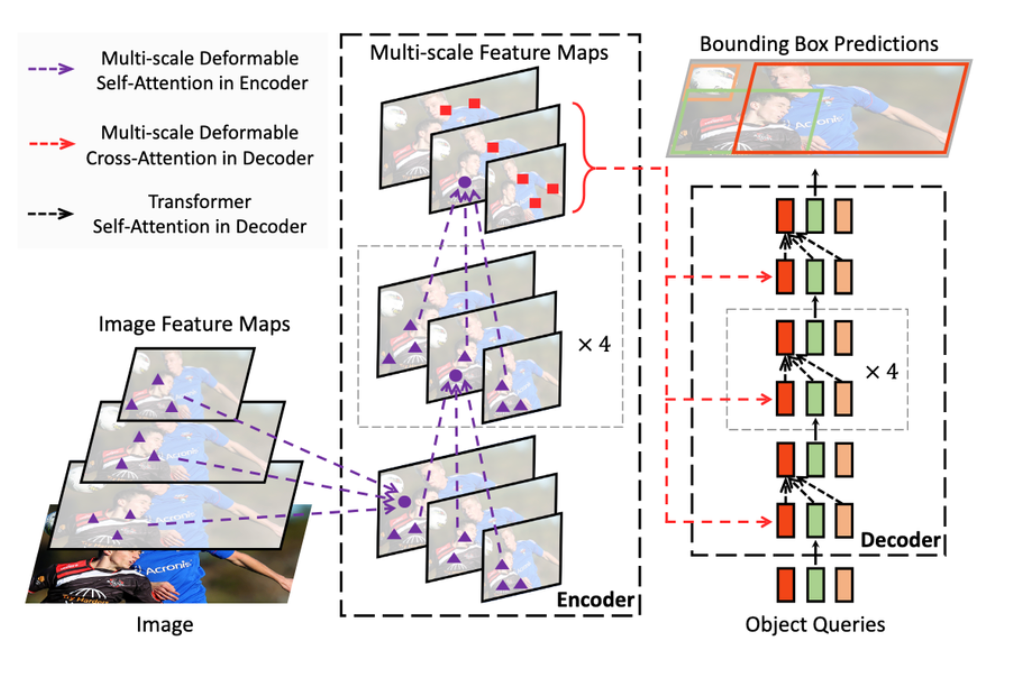

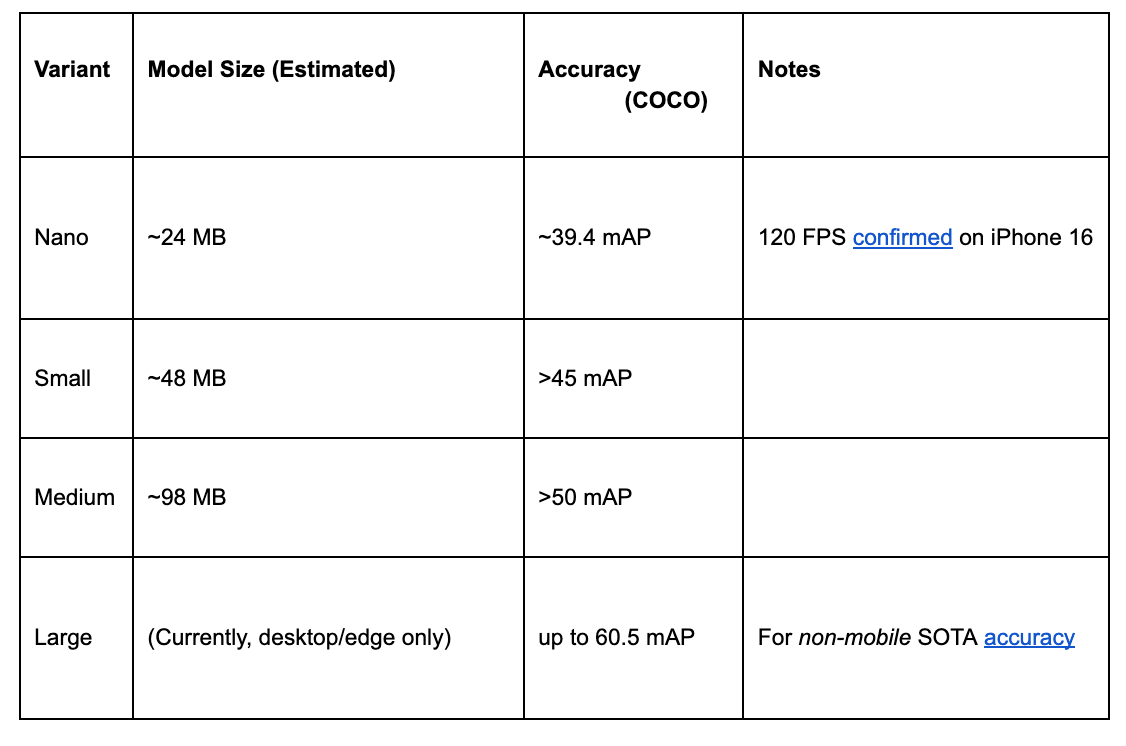

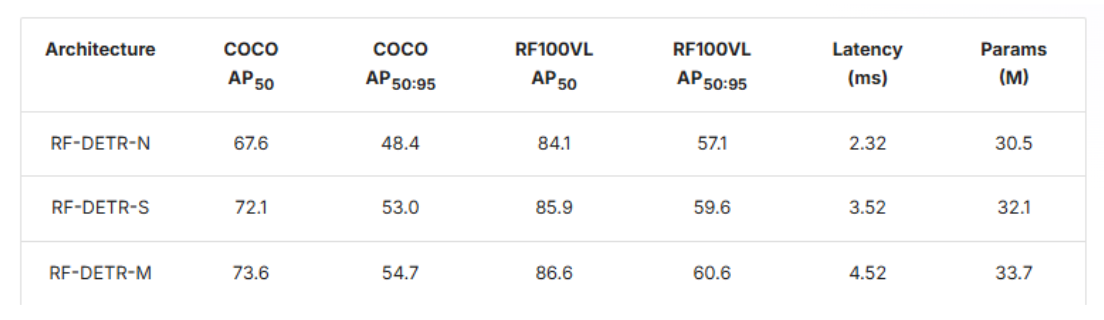

1. RF-DETR

RF-DETR is Roboflow's state-of-the-art real-time object detection model, now available for iOS deployment through Roboflow's native Swift SDK. Released in March 2025, RF-DETR is the first real-time model to exceed 60 mAP on domain adaptation benchmarks while achieving production-ready speeds on edge devices. What makes RF-DETR particularly suited for iOS is its architecture: built on the DINOv2 vision backbone, it eliminates traditional components like anchor boxes and Non-Maximum Suppression (NMS), resulting in a cleaner end-to-end pipeline with no post-processing step to reimplement on Apple's on-device stack.
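For context, here is what that removed step looks like. Detectors such as YOLO11 and SSD emit many overlapping candidate boxes and rely on NMS to prune them, while DETR-style models like RF-DETR predict a final set of boxes directly. The sketch below is a textbook greedy, class-agnostic NMS in plain Python, assuming boxes in (x1, y1, x2, y2) format; it is not code from RF-DETR, YOLO11, or any SDK.

```python
def iou(a, b):
    """Intersection over Union for boxes in (x1, y1, x2, y2) format."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def greedy_nms(boxes, scores, iou_threshold=0.5):
    """Return indices of boxes kept after greedy non-maximum suppression."""
    # Visit candidates from highest to lowest confidence.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Drop remaining boxes that overlap the kept box too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep

boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(greedy_nms(boxes, scores))  # [0, 2]: box 1 overlaps box 0 with IoU ~0.68
```

The data-dependent loop is exactly the kind of dynamic control flow that is awkward to express in a static on-device graph, so removing it simplifies deployment.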

iOS-Specific Advantages:

- Swift SDK Integration: The Roboflow Swift SDK provides seamless CoreML model loading and inference, handling model downloads and caching automatically.

- Multiple Model Sizes: Available in Nano, Small, and Medium variants, allowing you to match your iOS device's capabilities and battery constraints.

- Domain Adaptability: RF-DETR's transfer learning capabilities mean you can fine-tune models on custom datasets with exceptional performance across diverse visual conditions, critical for real-world iOS applications.

- Efficient Transformer Architecture: Despite using transformers, RF-DETR achieves 54.7% mAP at just 4.52 ms latency on a T4 GPU, which points to strong on-device performance when quantized for iOS, although actual iPhone latency should be measured on the target device.

The model's quantization-friendly design means it maintains strong accuracy even when converted to INT8 format for iOS deployment. This matters because INT8 quantization reduces weight storage by 75% relative to FP32 (1 byte per weight instead of 4) while keeping accuracy degradation minimal.
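The 75% figure comes straight from bytes per weight: FP32 stores 4 bytes per parameter, FP16 stores 2, and INT8 stores 1. A quick back-of-the-envelope sketch, using a hypothetical 30-million-parameter model and ignoring file metadata and per-tensor quantization parameters:

```python
BYTES_PER_WEIGHT = {"fp32": 4, "fp16": 2, "int8": 1}

def weight_size_mb(num_params, precision):
    """Approximate weight storage in megabytes (10**6 bytes), ignoring metadata."""
    return num_params * BYTES_PER_WEIGHT[precision] / 1e6

num_params = 30_000_000  # hypothetical model size, for illustration only
for precision in ("fp32", "fp16", "int8"):
    print(precision, weight_size_mb(num_params, precision), "MB")  # 120.0, 60.0, then 30.0

reduction = 1 - weight_size_mb(num_params, "int8") / weight_size_mb(num_params, "fp32")
print(f"INT8 vs FP32: {reduction:.0%} smaller")  # 75% smaller
```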

Deployment with Roboflow Swift SDK: The Swift SDK streamlines iOS integration with automatic model management, optimized inference pipelines, and camera integration. Deploy trained RF-DETR models directly to production iOS apps with minimal setup.

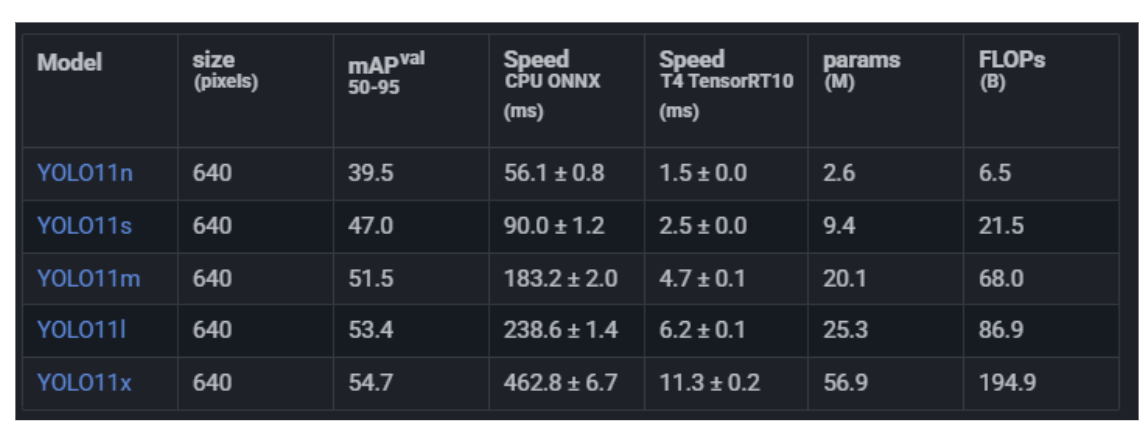

2. YOLO11

YOLO11, released in October 2024, is Ultralytics' latest single-stage object detector and is now available for iOS deployment via CoreML export. Building on the proven YOLO architecture, YOLO11 introduces refined backbone and neck designs that improve feature extraction while maintaining the real-time speeds iOS developers expect.

Why YOLO11 for iOS:

- Proven Real-time Performance: YOLO11l reaches 53.4% mAP on COCO while the family maintains 200+ FPS on GPUs; when quantized and deployed on an iPhone's Neural Engine, the smaller variants can reach 60+ FPS for live video.

- Extensive Model Variants: Five size options (Nano, Small, Medium, Large, XLarge) let you choose the right speed-accuracy tradeoff for your iOS device and use case.

- Excellent CoreML Support: Ultralytics provides one-command CoreML export via their YOLO11 framework, generating optimized models ready for iOS deployment.

- Superior to YOLOv8: YOLO11m achieves higher COCO mAP than YOLOv8m with 22% fewer parameters, making it more efficient for battery-constrained devices.

A recent case study demonstrated that exporting YOLO11 to CoreML increased inference speed from 21 FPS (PyTorch on-device) to 85 FPS by leveraging Apple's Neural Engine acceleration. This dramatic improvement comes from CoreML's hardware-aware optimization that automatically distributes computation across the CPU, GPU, and Neural Engine.
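Frame rates are easier to reason about as per-frame latency budgets. This small sketch converts the case study's 21 FPS and 85 FPS figures into milliseconds per frame and checks them against a target frame rate; the helper names are illustrative.

```python
def fps_to_ms(fps):
    """Per-frame latency in milliseconds for a given frame rate."""
    return 1000.0 / fps

def fits_budget(latency_ms, target_fps):
    """True if one inference fits inside a single frame at target_fps."""
    return latency_ms <= fps_to_ms(target_fps)

before_fps, after_fps = 21, 85  # PyTorch vs. CoreML figures from the case study
print(f"PyTorch: {fps_to_ms(before_fps):.1f} ms/frame")   # 47.6 ms/frame
print(f"CoreML:  {fps_to_ms(after_fps):.1f} ms/frame")    # 11.8 ms/frame
print(f"Speedup: {after_fps / before_fps:.2f}x")          # 4.05x
print(fits_budget(fps_to_ms(after_fps), target_fps=60))   # True (budget is 16.7 ms)
print(fits_budget(fps_to_ms(before_fps), target_fps=30))  # False (budget is 33.3 ms)
```

In a real app the budget also has to cover camera capture, preprocessing, and drawing results, so inference alone should leave some headroom.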

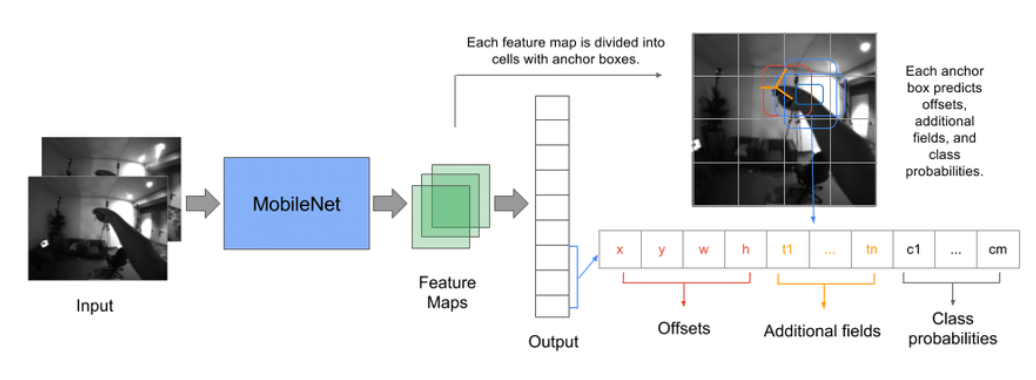

3. MobileNet SSD (Single Shot MultiBox Detector)

MobileNet SSD represents a classical approach to lightweight object detection, optimized from the ground up for mobile devices. While newer transformer models are gaining adoption, MobileNet SSD remains remarkably efficient and reliable for iOS applications, particularly when paired with quantization.

Why MobileNet SSD for iOS:

- Extremely Lightweight: MobileNetV2 + SSDLite achieves 63 FPS on iPhone 7 without GPU acceleration, making it ideal for older devices or power-constrained scenarios.

- Battle-Tested Architecture: Used in countless production iOS apps, MobileNet SSD has years of real-world optimization and deployment best practices.

- Minimal Memory Footprint: Models as small as 8-12 MB even before aggressive quantization, enabling app bundle sizes to remain manageable.

- Multi-scale Detection: SSD predicts boxes from feature maps at several resolutions, which helps it detect objects of varying sizes, from distant vehicles to large close-range subjects. Very small objects remain a known weak spot, so validate on your own data.
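The multi-scale behavior comes from assigning each feature map its own default-box scale. The original SSD paper spaces scales linearly between s_min = 0.2 and s_max = 0.9 across m feature maps, s_k = s_min + (s_max - s_min)(k - 1)/(m - 1), and derives box shapes from a set of aspect ratios. The sketch below reproduces that formula (the paper's extra square box per location is omitted); specific implementations, including SSDLite configurations, may use different values.

```python
import math

def ssd_scales(m=6, s_min=0.2, s_max=0.9):
    """Default-box scale (fraction of image size) for each of m feature maps."""
    return [s_min + (s_max - s_min) * (k - 1) / (m - 1) for k in range(1, m + 1)]

def default_box_sizes(scale, aspect_ratios=(1.0, 2.0, 0.5)):
    """(width, height) of default boxes at one scale; area stays scale**2."""
    return [(scale * math.sqrt(a), scale / math.sqrt(a)) for a in aspect_ratios]

scales = ssd_scales()
print([round(s, 2) for s in scales])  # [0.2, 0.34, 0.48, 0.62, 0.76, 0.9]
# Early, high-resolution maps get small boxes; deep, coarse maps get large ones.
print(default_box_sizes(scales[0]))
```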

When to Use MobileNet SSD:

- Legacy device support, where deploying larger models isn’t feasible

- Privacy-focused applications requiring offline inference with minimal battery drain

- Real-time video processing on embedded iOS devices

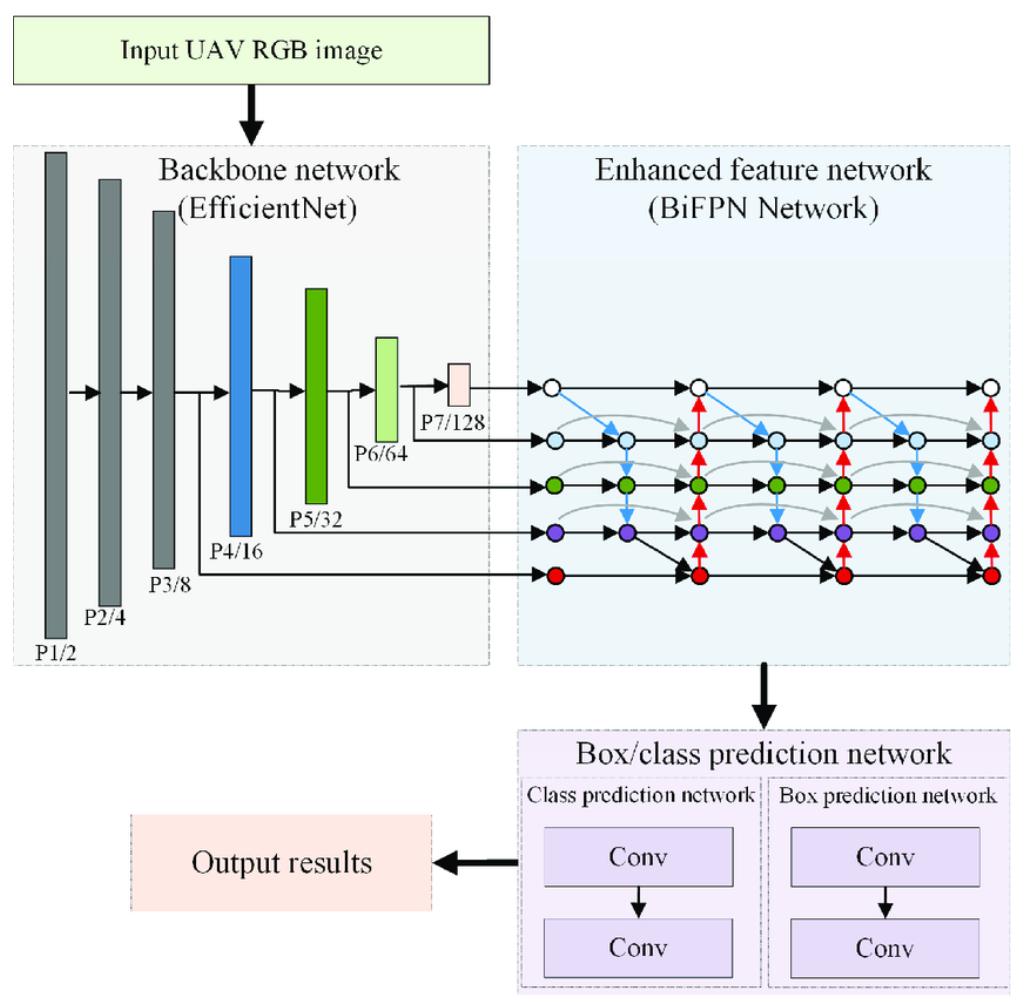

4. EfficientDet

EfficientDet, developed by Google, introduces compound scaling to object detection: a principle that jointly scales the network's depth, width, and input resolution with a single coefficient for balanced efficiency. This makes EfficientDet particularly effective at finding optimal trade-offs between accuracy and latency on iOS devices.
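The EfficientDet paper makes compound scaling concrete with a single coefficient phi that sets the BiFPN width and depth, the depth of the box and class prediction heads, and the input resolution. The sketch below encodes those published formulas; the released D0 to D7 models round channel widths, and D7 departs from the resolution rule, so treat the output as approximate.

```python
import math

def efficientdet_config(phi):
    """Unrounded EfficientDet compound-scaling values for coefficient phi."""
    return {
        "bifpn_width": 64 * 1.35 ** phi,        # channels in each BiFPN layer
        "bifpn_depth": 3 + phi,                 # number of stacked BiFPN layers
        "head_depth": 3 + math.floor(phi / 3),  # layers in box/class prediction nets
        "input_resolution": 512 + 128 * phi,    # square input size in pixels
    }

for phi in range(4):
    cfg = efficientdet_config(phi)
    print(f"D{phi}: width~{cfg['bifpn_width']:.1f}, depth {cfg['bifpn_depth']}, "
          f"head {cfg['head_depth']}, {cfg['input_resolution']}px")
```

Because every dimension grows together, stepping from D0 to D1 raises resolution to 640 px and adds a BiFPN layer at the same time, rather than scaling one axis in isolation.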

Why EfficientDet for iOS:

- Compound Scaling Strategy: Unlike ad-hoc architecture designs, EfficientDet’s systematic compound scaling approach ensures each model size represents a true efficiency frontier, preventing wasted parameters.

- Weighted Bi-directional Feature Pyramid Network (BiFPN): This innovation enables efficient multi-scale feature fusion, making EfficientDet superior at detecting objects across a wide range of sizes, which is critical on iOS, where scenes vary dramatically. Learn more in the official EfficientDet paper, Section 3.2.

- Quantization-Friendly Variants: EfficientDet architectures, particularly the mobile-oriented EfficientDet-Lite variants, maintain strong accuracy when converted to INT8 TensorFlow Lite or FP16 formats. See the TensorFlow Lite quantization documentation for details.

- Multiple Efficient Variants: D0 through D7 sizes span from ultra-lightweight to high-accuracy, accommodating any iOS deployment scenario. See the EfficientDet model zoo and benchmarks.
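BiFPN's "weighted" fusion is simple at its core. The EfficientDet paper uses fast normalized fusion, O = sum_i (w_i / (eps + sum_j w_j)) * I_i, where each learnable weight w_i is kept non-negative with a ReLU and eps is a small constant (0.0001). A minimal sketch on flat lists standing in for feature maps, assuming the inputs have already been resized to the same shape; the convolution, batch norm, and activation that follow each fusion in the real network are omitted.

```python
def fast_normalized_fusion(features, raw_weights, eps=1e-4):
    """BiFPN-style weighted fusion of same-shaped feature maps.

    features: equal-length lists standing in for feature maps already resized to match.
    raw_weights: one learnable scalar per input; ReLU keeps each weight non-negative.
    """
    weights = [max(0.0, w) for w in raw_weights]  # ReLU
    norm = sum(weights) + eps                     # eps avoids division by zero
    return [
        sum(w * fmap[i] for w, fmap in zip(weights, features)) / norm
        for i in range(len(features[0]))
    ]

# Two inputs with equal weights give roughly their element-wise average.
print(fast_normalized_fusion([[1.0, 2.0], [3.0, 4.0]], [1.0, 1.0]))
# A negative raw weight is clipped to zero, so the second input is ignored.
print(fast_normalized_fusion([[1.0], [5.0]], [2.0, -1.0]))
```

The paper chose this normalization over a softmax because it avoids exponentials while behaving similarly, which also keeps the operation cheap on mobile accelerators.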

Deployment via TensorFlow Lite or CoreML:

EfficientDet can be exported to both TensorFlow Lite and CoreML formats for comprehensive iOS support. Choose your format based on your development framework and deployment requirements.

Which iOS Object Detection Model Should You Choose?

For most new iOS projects, start with RF-DETR Small for the highest accuracy and strong generalization to your domain. Fine-tune RF-DETR on your specific dataset and validate real FPS performance on your target device.

Considerations for iOS Object Detection

Deploying object detection on iOS requires understanding unique constraints and optimization opportunities. Here are critical considerations that distinguish iOS deployment from cloud or desktop scenarios.

Architecture Must Run on iOS

Not all object detection architectures are practical for iOS. The most critical constraint is the Apple Neural Engine, the specialized hardware in iPhones and iPads that can dramatically accelerate certain operations but not others.

What Works Well on the Neural Engine:

- Convolutional layers with standard configurations

- Depthwise-separable convolutions (used by MobileNet)

- Integer and half-precision quantized operations

- Relatively static computational graphs

What's Problematic:

- Complex dynamic shapes or loops

- Highly specialized CUDA-optimized operations

- Some transformer attention mechanisms (though this is improving with iOS 18+)

- Operations not natively supported by CoreML

Model Highlights:

RF-DETR's Advantage: Despite being transformer-based, RF-DETR is specifically designed for on-device deployment, optimized for CoreML and the Apple Neural Engine (ANE).

YOLO11's Advantage: Its predominantly convolutional architecture with standard convolutions maps well onto the Neural Engine for maximal acceleration.

Research FPS to Understand Model Speed

Frames Per Second (FPS) is the most practical metric for iOS applications, but it's often misunderstood. A model achieving 100 FPS on a GPU isn't necessarily achieving 100 FPS on an iPhone.

What Affects iOS FPS:

- Neural Engine Utilization: Models optimized for the Neural Engine see 3-5x speedups over CPU-only execution.

- Memory Bandwidth: Moving large feature maps between memory and compute units creates bottlenecks. Efficient models with smaller activations run faster.

- Quantization Precision: FP32 (full precision) models run slower than FP16 or INT8 quantized models. On iOS, the Neural Engine strongly prefers INT8 and FP16.

- Model Size: Larger models don't always fit in the Neural Engine's cache, forcing memory fetches that reduce FPS.
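Precision affects both memory traffic and accuracy. FP16 stores each value in 2 bytes instead of 4, halving the data that must move between memory and compute, at the cost of roughly three significant decimal digits of precision and a maximum finite value of 65,504. Python's standard struct module can round values to IEEE 754 half precision, which makes the trade-off easy to inspect:

```python
import struct

def to_fp16_and_back(x):
    """Round a Python float to IEEE 754 half precision (FP16) and back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

print(struct.calcsize("<e"), struct.calcsize("<f"))  # 2 4 (bytes per FP16 / FP32 value)
print(to_fp16_and_back(0.1))      # 0.0999755859375
print(to_fp16_and_back(65504.0))  # 65504.0, the largest finite FP16 value
```

For most detection weights and activations this rounding error is small, which is why FP16 is usually a safe default before trying INT8.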

Testing Actual FPS:

Always test on actual devices, not simulators. iPhone 14 Pro and newer devices with faster processors will achieve significantly higher FPS than an iPhone 11 or iPhone SE. Use Xcode Instruments (see Apple's documentation) to profile on-device performance and identify bottlenecks before deployment.
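Whatever tool you profile with, the measurement pattern is the same: discard warm-up runs, time many iterations, and report the median and a tail percentile rather than a single run. A minimal Python sketch of that pattern, where `run_inference` is a hypothetical callable wrapping your model (for example, a coremltools `predict` call on a Mac, which approximates but does not replace on-device numbers):

```python
import statistics
import time

def benchmark(run_inference, warmup=10, iterations=100):
    """Time a callable; return median and p90 latency (ms) plus median-based FPS."""
    # Warm-up calls absorb one-time costs such as model loading, compilation, or caching.
    for _ in range(warmup):
        run_inference()
    latencies_ms = []
    for _ in range(iterations):
        start = time.perf_counter()
        run_inference()
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    latencies_ms.sort()
    median_ms = statistics.median(latencies_ms)
    # Simple index-based 90th percentile on the sorted samples.
    p90_ms = latencies_ms[int(0.9 * (len(latencies_ms) - 1))]
    fps = 1000.0 / median_ms if median_ms > 0 else float("inf")
    return {"median_ms": median_ms, "p90_ms": p90_ms, "fps": fps}

# Stand-in workload; replace with a call into your own model.
result = benchmark(lambda: sum(range(10_000)), warmup=2, iterations=20)
print(f"median {result['median_ms']:.3f} ms, p90 {result['p90_ms']:.3f} ms, ~{result['fps']:.0f} FPS")
```

The p90 figure matters on phones because thermal throttling and background work show up as occasional slow frames that an average would hide.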

Training Smaller Models Is Essential

Large models transfer learned knowledge better to new domains, but they also consume battery, memory, and processing resources on iOS. The solution isn't using the largest model available; it's training appropriately sized models on your specific data.

Why Smaller Models Matter on iOS:

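- Battery Efficiency: Smaller models finish each inference faster on the Neural Engine, so each frame costs less energy. At a fixed frame rate, a model that needs half the compute per frame leaves the hardware idle for longer, which generally lowers power draw.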

- Thermal Management: iPhones throttle CPU/GPU performance when they overheat. Smaller models run cooler, maintaining consistent performance during extended use.

- Real-time Capability: A custom-trained model running at 10 FPS often serves your specific use case better than a generic model running at 60 FPS.

- App Bundle Size: An app containing multiple large models or downloading large models on-device strains user storage. Smaller models have smaller bundle footprints.

How to Train Smaller Models for iOS

Start with a Nano or Small variant of YOLO11 (or EfficientDet-D0/D1), fine-tune on your dataset, and validate performance on your target device. Use Roboflow for simplified dataset management and training workflows. The platform handles preprocessing and augmentations automatically and exports CoreML models. (If Roboflow is unavailable, Apple's coremltools can convert trained PyTorch and TensorFlow models to CoreML.)

Quantization Training:

For maximum iOS efficiency, use Quantization-Aware Training (see the TensorFlow Model Optimization guide), which simulates quantization during training so the model keeps its accuracy once it is deployed in quantized form on iOS.

Custom Domain Training:

Train models on your specific domain (retail, manufacturing, security, healthcare, etc.) rather than relying on generic pre-trained weights.

How to Deploy for iOS

Here's a complete workflow for deploying object detection models to iOS.

Step 1: Choose Your Model

- RF-DETR: Best overall for maximum accuracy; ideal when accuracy is paramount and newer iOS hardware is available.

- YOLO11: Best balance of speed and accuracy; ideal for most applications

- EfficientDet: Best for multi-scale object detection

- MobileNet SSD: Best for older devices or extreme efficiency requirements

Step 2: Train or Fine-tune on Custom Data

Use Roboflow (optionally starting from public datasets on Roboflow Universe) or your training framework of choice.

Step 3: Export to CoreML

Export to CoreML format for iOS deployment. Roboflow provides one-command CoreML export. Apply quantization during export for efficiency; most models retain more than 95% of their original accuracy when quantized to FP16 or INT8, but verify this on your own validation set.

Step 4: Integrate into Xcode

Drag the exported .mlpackage into your Xcode project. Use AVFoundation for camera capture and Apple's Vision framework for preprocessing and inference. For Roboflow models, the Roboflow Swift SDK simplifies integration further.

Step 5: Deploy to App Store

Submit your app with the CoreML model bundled. For large models (>100 MB), consider On-Demand Resources (part of App Thinning) or downloading hosted models after installation.


Written by Aarnav Shah

Cite this Post

Use the following entry to cite this post in your research:

Contributing Writer. (Nov 10, 2025). Best iOS Object Detection Models. Roboflow Blog: https://blog.roboflow.com/best-ios-object-detection-models/