This is a guest post by data scientist Jaco Lau; this has been lightly edited by the Roboflow team.

Having worked in construction, I constantly found myself reminding subcontractors to wear the proper Personal Protective Equipment (PPE). Wearing the proper PPE can save lives; from 2018 to 2019, construction injuries rose 61%, with over 5,000 workers dying on the job. In addition, 65,000 workers are injured around the workplace. Despite its importance, failing to wear proper PPE remains a common safety infraction on construction sites. As a result, I built an application that uses computer vision to detect PPE.

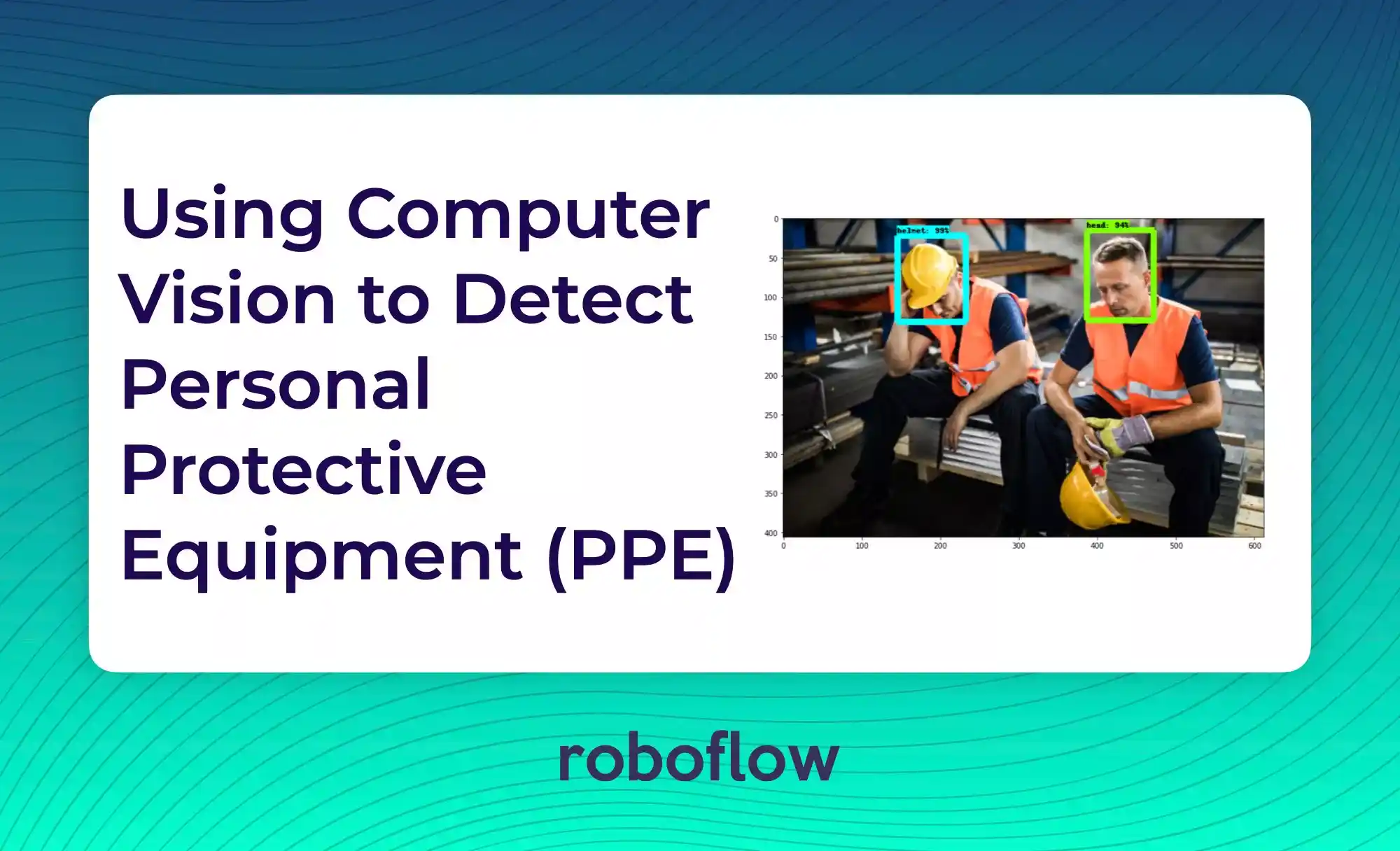

So… what is computer vision? Computer vision is an area of AI that trains computers to interpret and understand the visual world using digital images and videos, kind of like how you and I as humans understand the visual world. The goal with this project is to teach a computer to detect PPE in the same way that I would when I worked in construction -- but the computer will ideally be more efficient than I would be. This is called object detection, where a computer is capable of identifying one or more objects of interest in an image. In this case, the goal was for the computer to identify:

- safety violations (when PPE was worn improperly or not at all), and

- non-violations (when PPE was worn properly).

Working Towards the Solution

To begin, I pulled images of construction workers on site. I originally attempted to automate this by scraping images, but many of the results were cartoons or otherwise unsuitable for my use case, so I gathered the images manually. After compiling the images, I used Roboflow to annotate them.

Originally, the data showed imbalanced classes. Roughly 80% of images contained no safety violation and about 17% of images contained a safety violation. The remaining images included at least one safety violation and at least one "proper PPE" object. When iterating on my model, I gathered more images containing safety violations and added them to my data.
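A minimal sketch of how a class balance like this could be checked is shown below. It groups images by the labels annotated in them. The label names `violation` and `proper_ppe`, the helper functions, and the sample annotations are all hypothetical and only illustrate the idea.

```python
from collections import Counter

# Hypothetical label names; the real dataset's class names may differ.
VIOLATION = "violation"
PROPER = "proper_ppe"


def image_category(labels):
    """Classify one image by the set of object labels annotated in it."""
    has_violation = VIOLATION in labels
    has_proper = PROPER in labels
    if has_violation and has_proper:
        return "mixed"
    if has_violation:
        return "violation_only"
    if has_proper:
        return "proper_only"
    return "unlabeled"


def category_shares(annotations):
    """Return the fraction of images that fall into each category."""
    counts = Counter(image_category(labels) for labels in annotations.values())
    total = sum(counts.values())
    if total == 0:
        return {}
    return {category: count / total for category, count in counts.items()}


# Made-up annotations: image filename -> labels of the objects in that image.
sample = {
    "site_001.jpg": ["proper_ppe", "proper_ppe"],
    "site_002.jpg": ["proper_ppe"],
    "site_003.jpg": ["violation"],
    "site_004.jpg": ["proper_ppe", "violation"],
}

shares = category_shares(sample)
for category, share in sorted(shares.items()):
    print(f"{category}: {share:.0%}")
```

If one category dominates, as it did here, that is a signal to collect more examples of the rarer one before retraining.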

Given the purpose of my safety violation model, I optimized for recall (also known as sensitivity): the proportion of actual positives that are identified correctly. I would rather capture every safety violation and accept some false positives than optimize for accuracy. Because violations were the minority class in my data, a model tuned for accuracy could score well while still producing a large number of false negatives, that is, missed violations.
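To make the trade-off concrete, here is a small sketch that computes recall and accuracy from confusion-matrix counts. The two sets of counts are invented for illustration and are not results from my models.

```python
def recall(true_pos, false_neg):
    """Fraction of actual violations that the model flagged."""
    actual_positives = true_pos + false_neg
    if actual_positives == 0:
        return 0.0
    return true_pos / actual_positives


def accuracy(true_pos, true_neg, false_pos, false_neg):
    """Fraction of all predictions that were correct."""
    total = true_pos + true_neg + false_pos + false_neg
    if total == 0:
        return 0.0
    return (true_pos + true_neg) / total


# Invented counts for 100 images, 20 of which contain a real violation.
# Model A flags aggressively; Model B is conservative.
model_a = {"true_pos": 18, "true_neg": 65, "false_pos": 15, "false_neg": 2}
model_b = {"true_pos": 10, "true_neg": 78, "false_pos": 2, "false_neg": 10}

for name, counts in (("A", model_a), ("B", model_b)):
    r = recall(counts["true_pos"], counts["false_neg"])
    a = accuracy(**counts)
    print(f"Model {name}: recall={r:.2f}, accuracy={a:.2f}")
```

In this made-up comparison, Model B has the higher accuracy (0.88 versus 0.83) but misses half of the violations, while Model A catches 18 of 20. For a safety application, Model A's behavior is the one worth optimizing for.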

How Roboflow’s Simplicity Saved the Day (or Days) of Preprocessing

Originally, when I set out to build this application, I had very little experience with computer vision, other than using the handwritten digits dataset for practice. After a lot of research on how to preprocess my image data, I came across Roboflow. Roboflow streamlines the whole process of computer vision from image preprocessing and image augmentation to training models.

Roboflow allows for rapid image preprocessing for object detection models by giving users the ability to annotate images, then choose how to preprocess and augment them. One preprocessing technique I used was to resize the images so that they are all a uniform size. The default resize dimensions, 416x416, shrink the images, which speeds up training and inference.
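Resizing an image also means its bounding box annotations have to be rescaled to match. Roboflow takes care of this for you; the sketch below only illustrates the arithmetic, assuming a stretch-style resize that ignores aspect ratio. The function name `resize_box` and the sample numbers are hypothetical.

```python
def resize_box(box, original_size, target_size=(416, 416)):
    """Rescale an (xmin, ymin, xmax, ymax) box for a stretched image.

    Assumes the image is stretched to target_size without preserving
    its aspect ratio, so x and y are scaled independently.
    """
    original_width, original_height = original_size
    target_width, target_height = target_size
    scale_x = target_width / original_width
    scale_y = target_height / original_height
    xmin, ymin, xmax, ymax = box
    return (xmin * scale_x, ymin * scale_y, xmax * scale_x, ymax * scale_y)


# A made-up hard hat box in a 1664x832 photo, resized to 416x416.
scaled = resize_box((400, 200, 800, 600), original_size=(1664, 832))
print(scaled)  # (100.0, 100.0, 200.0, 300.0)
```

The same scaling has to be applied at inference time in reverse if you want to draw predicted boxes back onto the original, full-size image.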

Once image preprocessing is complete, Roboflow allows you to download your image data in various file formats, including Pascal VOC XML, COCO JSON, TensorFlow TFRecord, and more. The format you need depends on the type of model you build. The computer vision models from which you can choose range from the MobileNet model to the new cutting-edge CLIP model.
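As an example of what one of these formats looks like, the sketch below parses a small Pascal VOC XML annotation with Python's standard library. The XML follows the usual VOC layout (`object`, `name`, `bndbox`), but the file name, the label names, and the coordinates are made up, and `parse_voc` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET

# A made-up annotation in Pascal VOC layout; the label names are hypothetical.
SAMPLE_VOC = """
<annotation>
  <filename>site_001.jpg</filename>
  <size><width>416</width><height>416</height><depth>3</depth></size>
  <object>
    <name>violation</name>
    <bndbox><xmin>120</xmin><ymin>40</ymin><xmax>180</xmax><ymax>110</ymax></bndbox>
  </object>
  <object>
    <name>proper_ppe</name>
    <bndbox><xmin>250</xmin><ymin>60</ymin><xmax>310</xmax><ymax>130</ymax></bndbox>
  </object>
</annotation>
"""


def parse_voc(xml_text):
    """Return (filename, objects) from a Pascal VOC annotation string."""
    root = ET.fromstring(xml_text.strip())
    objects = []
    for obj in root.findall("object"):
        bndbox = obj.find("bndbox")
        # Coordinates are stored as text; some tools write them as floats.
        box = tuple(
            int(float(bndbox.findtext(tag)))
            for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        objects.append({"label": obj.findtext("name"), "box": box})
    return root.findtext("filename"), objects


filename, objects = parse_voc(SAMPLE_VOC)
print(filename)
for item in objects:
    print(item["label"], item["box"])
```

COCO JSON stores the same information in a single JSON file for the whole dataset, while TFRecord packs images and labels together in a binary format for TensorFlow.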

Roboflow also provides -- for free! -- notebooks to create your models, making it as simple as changing a couple of lines of code to use your data. Because of its simplicity, I used Roboflow’s notebooks to fit object detection models to my data to see how my model stacked up against the standard pre-trained MobileNet model.

Deploying the Model as an Application

The final step of my project was integrating my model into a Streamlit application for deployment. By simply adding the ability to resize images as they are uploaded to the application, I was able to use the user-friendly Streamlit API to set up an application that detects hard hat safety violations and creates the necessary forms for each violation.

Between using Roboflow for image upload, annotation, organization, and training, and using Streamlit for deployment, the process was significantly faster and easier than writing all of the code from scratch. You can find the images and the code I did write to build the models here and the Streamlit application here.